Well, the DAC, an MCP4812 (10-bit) shown in the schematics, as well as the MCP4822 (12-bit) I am currently using, has an SPI interface (marked SCK and SDI in the schematics). Every Arduino has some of its input/output pins dedicated to specific functions. On the Arduino Nano, the pins of interest here are D13 = SCK, D11 = MOSI and D10 = SS. Have a look at this page for some further reference.

Normally, in the Arduino world, you connect an external IC either directly, via I2C or via SPI. For a specific device, you also need a library in order to talk to the IC's registers, for example. Usually you are lucky and someone has already written an Arduino library. Then it's smooth sailing. You can find numerous examples on these pages and elsewhere.

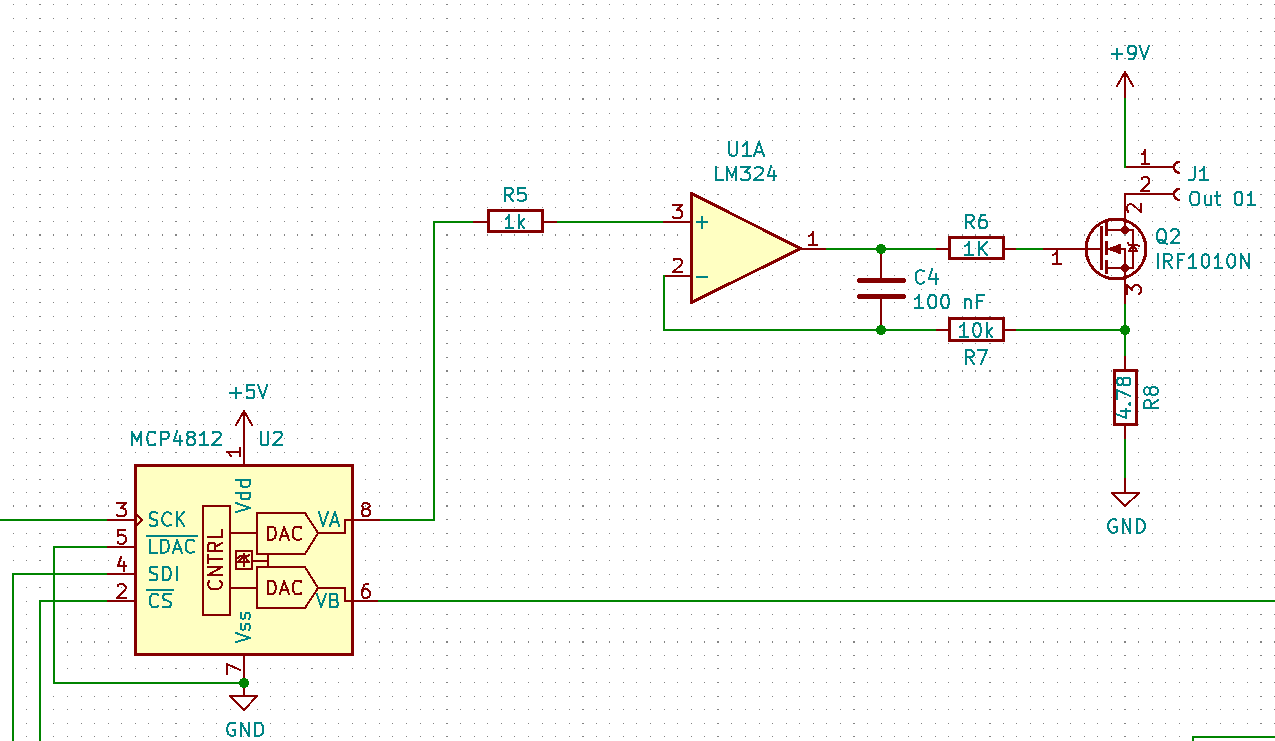

Well, to describe the schematics in a bit more detail: the MCP48x2 gets a register set to a value between 0 and 4095 (12-bit version) or 0 and 1023 (10-bit version). This causes the DAC to output a corresponding voltage on its pin 8. This voltage is fed to the input of the operational amplifier (U1A).
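If no ready-made library is at hand, setting that register is only a single 16-bit SPI write. Here is a minimal sketch of how the command word could be assembled, following the bit layout in the Microchip MCP4802/4812/4822 datasheet. The names `mcp48x2Command`, `writeDac` and `DAC_CS_PIN` are my own for illustration, the 1 MHz clock is an arbitrary conservative choice, and the Arduino part assumes `SPI.begin()` and `pinMode(DAC_CS_PIN, OUTPUT)` have been called in `setup()`.

```cpp
#include <stdint.h>

// MCP48x2 16-bit command word (per the Microchip datasheet):
//   bit 15     : channel select (0 = DAC A, 1 = DAC B)
//   bit 14     : don't care
//   bit 13     : gain (1 = 1x -> 0..2.048 V, 0 = 2x -> 0..4.096 V)
//   bit 12     : 1 = output active, 0 = output shut down
//   bits 11..0 : data, left-aligned (the 10-bit MCP4812 ignores bits 1..0)
uint16_t mcp48x2Command(uint16_t value, uint8_t bits, bool channelB, bool gain1x) {
    const uint16_t maxCode = (uint16_t)((1u << bits) - 1u);  // 4095 or 1023
    if (value > maxCode) value = maxCode;                    // clamp to valid range
    uint16_t word = (uint16_t)(value << (12 - bits));        // left-align the data
    word |= 0x1000;                                          // keep the output active
    if (gain1x)   word |= 0x2000;
    if (channelB) word |= 0x8000;
    return word;
}

#ifdef ARDUINO
#include <SPI.h>

// Hypothetical wiring: DAC chip select on the Nano's D10 (SS).
const uint8_t DAC_CS_PIN = 10;

// Send one value to channel A of a 12-bit MCP4822 at 1x gain.
void writeDac(uint16_t value) {
    SPI.beginTransaction(SPISettings(1000000, MSBFIRST, SPI_MODE0));
    digitalWrite(DAC_CS_PIN, LOW);
    SPI.transfer16(mcp48x2Command(value, 12, false, true));
    digitalWrite(DAC_CS_PIN, HIGH);
    SPI.endTransaction();
}
#endif
```

For the 10-bit MCP4812 the same function would be called with `bits = 10`; the value is then shifted up by two so it lands in the bits the chip actually reads.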

The op amp tries to keep its inputs (pins 2 and 3) at the same voltage; it can only do this by turning the IRF1010N (Q2) on more or less, so that a sufficient voltage drop develops across resistor R8. The current flows from +9V via connector J1, where the LED is connected, through transistor Q2 and resistor R8 to ground. That circuit is a simple voltage-to-current converter. C4 and R6 form a simple low-pass filter that keeps the operational amplifier from oscillating. As I mentioned earlier, it is a crude design built from parts I found in my parts bin. You can probably find better designs for programmable current sources on the internet.
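To put numbers on the voltage-to-current conversion: if the op amp holds the voltage across R8 equal to the DAC voltage, the LED current is simply V_DAC / R8. The following is a rough sketch under some assumptions of mine: the DAC output reaches the op amp undivided, the DAC runs on its internal 2.048 V reference (full scale 2.048 V at 1x gain, 4.096 V at 2x), and R8 has whatever value you pass in, since its value is not given above. The function names are made up for illustration.

```cpp
#include <stdint.h>

// Ideal op-amp loop: V(R8) == V(DAC), therefore I_LED = V_DAC / R8.
// vFullScale is 2.048 (1x gain) or 4.096 (2x gain) for the MCP48x2.
float ledCurrentAmps(uint16_t code, uint8_t bits, float vFullScale, float r8Ohms) {
    float vDac = vFullScale * (float)code / (float)(1UL << bits);
    return vDac / r8Ohms;
}

// Inverse direction: the DAC code that should give a wanted LED current,
// rounded to the nearest step and clamped to the DAC's range.
uint16_t codeForCurrent(float amps, uint8_t bits, float vFullScale, float r8Ohms) {
    float code = amps * r8Ohms / vFullScale * (float)(1UL << bits);
    float maxCode = (float)((1UL << bits) - 1UL);
    if (code < 0.0f) code = 0.0f;
    if (code > maxCode) code = maxCode;
    return (uint16_t)(code + 0.5f);
}
```

With a hypothetical R8 of 1 Ω and 1x gain, a mid-scale code of 2048 would correspond to roughly 1 A through the LED. A real circuit will deviate somewhat because of op-amp offset and resistor tolerance.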

One important point: there are basically two ways to dim an LED. The one used in dimmable LED strips, for example, is PWM (pulse width modulation), where high-frequency constant-current pulses are driven through the LED. The light intensity is controlled by varying the width of the pulses. That is a simple, cheap design, but stay away from it for scanning purposes. You are inviting all kinds of trouble.

The design sketched above works by actually changing the current through the LED continuously. This is more involved and more expensive, mainly because you have to get rid of the large amount of excess heat generated by the transistor (Q2 in the schematics) and the measuring resistor (R8). Both have to be quite beefy.
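To get a feeling for how beefy, here is a back-of-the-envelope estimate. In a linear current source, everything the supply delivers beyond the LED's forward voltage is burned in Q2 and R8. The LED forward voltage and the R8 value are not given above, so they are plain parameters here, and `linearDriverHeat` is a name I made up.

```cpp
// Heat split in a linear (non-PWM) LED driver.
struct Dissipation {
    float q2Watts;  // power burned in the pass transistor Q2
    float r8Watts;  // power burned in the sense resistor R8
};

Dissipation linearDriverHeat(float amps, float vSupply, float vLed, float r8Ohms) {
    float vR8 = amps * r8Ohms;         // drop across the sense resistor
    float vQ2 = vSupply - vLed - vR8;  // the rest sits across the transistor
    if (vQ2 < 0.0f) vQ2 = 0.0f;        // no headroom left: the loop cannot regulate
    return { vQ2 * amps, vR8 * amps };
}
```

As an example with assumed values: at 1 A from the 9 V supply, with an LED dropping 3 V and a 1 Ω sense resistor, Q2 would have to shed about 5 W and R8 about 1 W. That is heatsink territory for the transistor and calls for a power resistor rather than a small quarter-watt part.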

One last comment: like any analog film material, Super-8 film stock has a limited dynamic range. Material that was badly over-exposed during shooting will have burned-out highlights, and there is no way to recover that lost information. At the other end of the scale, severely under-exposed material will show very little structure in dark shadows, with a lot of noise. This is also very difficult to recover, and at a certain point everything will be just a structureless dark noise blob. However, if you can see structure in the highlights and shadows in a normal projection, chances are that you will be able to recover it electronically.