I think an Arduino Mega is a good choice for machine control. This board certainly has enough input and output pins and close to real-time response.

Note in that context that within the CNC, laser engraver and 3D printer communities, there are already very cheap add-on boards available for the Mega which are able to drive several steppers.

Slowly, the 3D printing community is drifting toward faster 32-bit processors, mostly to be able to calculate more elaborate speed profiles. But currently, the Mega is still the main option. Also, lately, the stepper driver ICs have become more intelligent (“silent step mode”).

A little bit on my background with respect to the following comments: I have done image processing on anything from FPGAs to PCs and also spend a lot of my time developing neural network algorithms.

The most universal option from a user perspective is certainly a PC. Most people already have one. It might not be a fast machine, but most of the time, it’s upgradable. If not, you can always wait longer for the results or speed things up by lowering the resolution. You do not always need to work in 4k or even higher resolutions. From my experience, old Super-8 material does not warrant the use of 4k - only if you want to record and show to the viewer not only the content, but also every single film grain…

(Archivists might opt for that film grain. However, for Super-8 material, the AviSynth scripts of Fred Van de Putte are famous. But these scripts do everything to get rid of film grain and camera shake to enhance the viewer experience.)

Continuing: you might want to base software development on a (small) community of freelancers - if so, you need a sufficiently broad basis of more or less interchangeable hardware. Which brings me to the next point:

Utilizing a special Linux machine like the NVIDIA Xavier or the like risks that this hardware combo might be rather short-lived (such things have happened before) and that the supporting community is rather limited in size. So it might be difficult to find continuing support for the hardware chosen. In any case, I think these “special” computers are just a host processor (based on a standard ARM design) and a more or less standard GPU for “AI” and “image processing” - however, if you bought your desktop computer with video editing in mind, you probably already have a much better suited machine at your desk… (the same comment also applies to any small form factor PC like a Windows NUC).

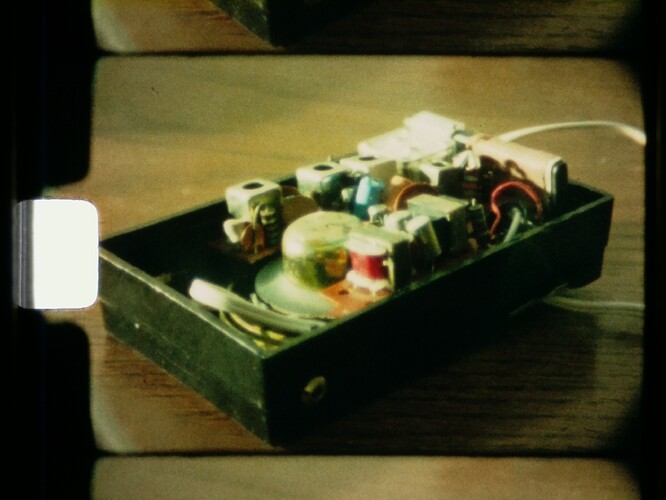

Trying out another perspective: if one defines “the machine” as just the core mechanical setup for advancing the film material, issuing trigger signals for the camera, and maybe controlling the lights, then a combination of an Arduino-like microprocessor with a tiny host which can talk LAN and/or USB is sufficient as a hardware setup. Probably the cheapest option around at this time would be a Raspberry Pi (Linux host) + Arduino Mega + host shield with stepper drivers.
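To make that split a bit more concrete, here is a minimal sketch of what the host side (e.g. the Raspberry Pi) could look like. Everything in it is an assumption for illustration: the line-based text protocol, the command names `ADVANCE`, `TRIGGER` and `LIGHT`, the `OK` reply and the class names are all made up, not an existing firmware interface. The `port` object only needs `write()` and `readline()`, so a real serial port object could be dropped in where the in-memory stand-in is used here.

```python
class MachineLink:
    """Host side of a hypothetical line-based protocol to the microcontroller."""

    def __init__(self, port):
        # `port` is any object offering write(bytes) and readline() -> bytes,
        # for example a serial port object or the in-memory stand-in below.
        self.port = port

    def command(self, name, *args):
        # Send one text line, e.g. "ADVANCE 400\n", and wait for the reply.
        line = " ".join([name, *map(str, args)]) + "\n"
        self.port.write(line.encode("ascii"))
        reply = self.port.readline().decode("ascii").strip()
        if reply != "OK":  # assumed acknowledgement, not a real firmware reply
            raise RuntimeError(f"controller replied {reply!r} to {line.strip()!r}")

    def advance_frame(self, steps):
        self.command("ADVANCE", steps)

    def trigger_camera(self):
        self.command("TRIGGER")

    def set_light(self, on):
        self.command("LIGHT", int(on))


class FakeController:
    """In-memory stand-in that records commands and always answers OK."""

    def __init__(self):
        self.received = []

    def write(self, data):
        self.received.append(data.decode("ascii").strip())
        return len(data)

    def readline(self):
        return b"OK\n"


def scan_frames(link, n_frames, steps_per_frame):
    # Light on, then advance and trigger once per frame, then light off.
    link.set_light(True)
    for _ in range(n_frames):
        link.advance_frame(steps_per_frame)
        link.trigger_camera()
    link.set_light(False)


if __name__ == "__main__":
    fake = FakeController()
    scan_frames(MachineLink(fake), n_frames=2, steps_per_frame=400)
    print(fake.received)
```

The point of keeping the transport behind two methods is that the film-advance logic can be tested on any PC without the hardware attached.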

Explicitly taken out of the setup sketched above is the “picture taking”. The reason is the following: how the hardware/software for frame capture needs to be realized depends highly on the camera chosen.

Currently, most machine vision cameras feature at least C or C++ libraries for Windows-based machines. So in a straightforward way, you would end up writing capture software within the C or C++ software environment. Or you would have to use whatever software is available for that camera.

Specifically, general libraries, for example OpenCV, currently lack sufficient support for USB3 cameras. I recently tried to get a See3CAM_CU135 with USB3 interface working within the OpenCV environment. The basic functionality is there, no question, but for example the white balance of the camera currently cannot be set via the OpenCV lib.

Ok, let me throw yet another perspective into the discussion: who will be the people using the next generation of Kinograph? Librarians and archivists, just wanting to preserve important material? They would probably not bother with or would even shy away from most post-processing steps. Or the experimental film maker using old analog technology in the digital age? This guy would certainly rather see a lot of post-processing options available on the system. Or the hobbyist, using the Kinograph design as a base for their own ideas? They might only be interested in the mechanical setup. Or, probably all of the above?

I have no idea about the target group, but my gut feeling is that one could make a cut between (picture-taking/hardware-control/storing on disk) versus post-processing (frame-detection, color-grading, etc).

Being only software, the whole post-processing could certainly be written in such a way that the software runs on all major operating systems.

Options for programming languages which come to my mind are certainly C/C++ (not so easy to support multiple OSs), Java (in its “Processing” disguise rather easy to use, it is even possible to utilize shaders there to speed up certain image processing tasks) or Python.

I must confess that Python is where I ended up after a long journey starting with Basic, Pascal, Fortran 77, PL/1, …, and years of C/C++ programming.

One additional note in this context: I know that you can write interfaces to existing C/C++ libraries in Python, but so far I have never done this myself. But that might be an (easy?) option for integrating existing camera libraries.
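As a rough idea of how such an interface can look, here is a minimal sketch using Python's standard `ctypes` module. Since no particular camera library is assumed here, the example calls `strlen` from the C runtime as a stand-in; a vendor library would be loaded the same way by its file name. The helper names `load_c_runtime` and `c_strlen` are made up for this illustration.

```python
import ctypes
import ctypes.util
import sys


def load_c_runtime():
    """Load the platform's C runtime as a stand-in for a vendor camera library."""
    if sys.platform.startswith("win"):
        return ctypes.CDLL("msvcrt")
    # On Linux/macOS; if find_library returns None, CDLL(None) still exposes
    # the C runtime symbols already loaded into the Python process.
    return ctypes.CDLL(ctypes.util.find_library("c"))


libc = load_c_runtime()

# Declare the C signature: size_t strlen(const char *s);
libc.strlen.argtypes = [ctypes.c_char_p]
libc.strlen.restype = ctypes.c_size_t


def c_strlen(text: bytes) -> int:
    """Thin Python wrapper around the C function."""
    return libc.strlen(text)


if __name__ == "__main__":
    print(c_strlen(b"Kinograph"))
```

Declaring `argtypes` and `restype` for every function is the tedious part with a real camera SDK, but it is also what keeps wrong argument types from crashing the interpreter. C++ libraries usually need a plain C wrapper layer first.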

Seriously, if you are aiming at archival copies, you might want to have that grain defined precisely. On the other hand, if you just want to share a few family movies via YouTube or WhatsApp, you might not want any grain at all: the fine structure of the grain increases your bandwidth requirements substantially with standard encoding schemes like .mp4. You might even opt to deshake the original material (reduces bandwidth as well), recut it and finally adjust the color balance. That would be as far away from an archive copy as you can imagine. Well, it all depends on your goal, I guess.
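The effect of grain on data size can be illustrated with a small toy experiment. This is only a sketch under strong assumptions: it uses the lossless general-purpose `zlib` compressor from the standard library, not a video codec, and a synthetic gradient with uniform random noise standing in for a film frame with grain. The function names and the noise strength are chosen freely for the illustration.

```python
import random
import zlib


def gradient_frame(width, height):
    """Synthetic 8-bit grayscale frame: a smooth horizontal gradient."""
    return bytes((x * 255) // (width - 1) for _ in range(height) for x in range(width))


def add_grain(frame, strength, seed=0):
    """Add uniform random noise of +/- strength to every pixel (toy 'grain')."""
    rng = random.Random(seed)
    return bytes(min(255, max(0, p + rng.randint(-strength, strength))) for p in frame)


def compressed_size(frame):
    """Size in bytes after lossless zlib compression."""
    return len(zlib.compress(frame, 6))


clean = gradient_frame(320, 240)
grainy = add_grain(clean, strength=12)

if __name__ == "__main__":
    print("clean :", compressed_size(clean), "bytes")
    print("grainy:", compressed_size(grainy), "bytes")
```

The grainy frame compresses far worse than the clean one because noise has no structure a compressor can exploit. Video encoders are lossy and work differently, but they face the same basic problem with fine, frame-to-frame random detail.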
