Hi @Rowan,

In my device, I use an old 8mm/Super8 projector to transport the film.

In this projector, as in many others, the space for the lighting system is quite limited. For this reason, from the beginning I have used commercial white-light LED lamps, with more or less success.

The one I currently use is the following:

It is a very cheap lamp (€1.30). I do not know its CRI, which is not given in the technical data.

In my opinion, however, it gives good results for 8mm film scanning.

To adjust the white balance, at the time I used exactly the same procedure described by @cpixip.

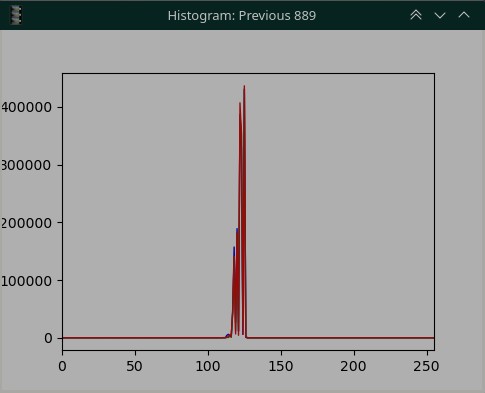

Attached is the histogram of the image captured by the RPi HQ camera with no film threaded in the projector.

The histogram actually contains three superimposed curves, for the blue, green and red channels. Only the red curve is visible, because it is drawn last and covers the other two.
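The overlap of the three curves can also be checked numerically: on a blank frame (no film), compute the mean level of each channel and the gains that would bring red and blue onto green. This is a minimal sketch, not the procedure from @cpixip's post. It assumes the frame is available as a list of `(r, g, b)` tuples, and the function name `channel_gains` is hypothetical.

```python
from statistics import mean


def channel_gains(pixels):
    """Return (red_gain, blue_gain) that would scale the red and blue
    channel means of a blank frame to match the green mean.

    `pixels` is assumed to be a list of (r, g, b) tuples taken from a
    frame captured with no film in the gate (hypothetical input format).
    """
    pixels = list(pixels)
    r_mean = mean(p[0] for p in pixels)
    g_mean = mean(p[1] for p in pixels)
    b_mean = mean(p[2] for p in pixels)
    # Green is the reference channel: a gain > 1 boosts a weak channel,
    # a gain < 1 attenuates a strong one.
    return g_mean / r_mean, g_mean / b_mean


# Toy blank frame with a slightly weak red and a slightly strong blue.
frame = [(180, 200, 220)] * 4
red_gain, blue_gain = channel_gains(frame)
print(round(red_gain, 3), round(blue_gain, 3))  # 1.111 0.909
```

Gains of 1.0 for both channels would mean the three curves already coincide, which is what a histogram showing only the red curve suggests.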

An ideal histogram would be three overlapping vertical lines, meaning that all pixels receive exactly the same illumination. Of course, this is impossible to achieve in practice, so instead we should try to make the base of the curves as narrow as possible, which means there is little spread in light levels.
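The "narrow base" criterion can be turned into a number: for each channel, count how many levels separate the darkest and brightest pixels, after discarding a small fraction at each end so that a few hot or dead pixels do not dominate. This is a minimal sketch, assuming 8-bit values (0 to 255) and a frame given as a list of `(r, g, b)` tuples; the function names and the 1% tail are illustrative choices, not part of any library.

```python
def histogram(values):
    """Count how many 8-bit values fall on each of the 256 levels."""
    counts = [0] * 256
    for v in values:
        counts[v] += 1
    return counts


def base_width(counts, tail=0.01):
    """Width, in levels, of the histogram base, ignoring the darkest and
    brightest `tail` fraction of pixels (assumed outlier threshold)."""
    total = sum(counts)
    low_cut = tail * total
    high_cut = (1 - tail) * total
    cumulative = 0
    low = high = None
    for level, c in enumerate(counts):
        cumulative += c
        # First level where the cumulative count passes the lower cut.
        if low is None and cumulative > low_cut:
            low = level
        # First level where the cumulative count reaches the upper cut.
        if high is None and cumulative >= high_cut:
            high = level
            break
    return high - low


def channel_base_widths(pixels, tail=0.01):
    """Base width of the R, G and B histograms of a list of (r, g, b) tuples."""
    return tuple(
        base_width(histogram(p[ch] for p in pixels), tail) for ch in range(3)
    )


# 99 evenly lit pixels plus one saturated outlier: the outlier is ignored.
print(channel_base_widths([(200, 200, 200)] * 99 + [(255, 255, 255)]))  # (0, 0, 0)

# A small spread of +/-5 levels around 200 gives a base 10 levels wide.
spread = [(195, 195, 195)] * 10 + [(200, 200, 200)] * 80 + [(205, 205, 205)] * 10
print(channel_base_widths(spread))  # (10, 10, 10)
```

Comparing these widths between lamps, or between capture settings, gives a simple way to see which setup spreads the light levels least.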

The histogram and images in the above link were taken with the HQ camera using the old Picamera library.

For comparison, I attach a histogram and an image of the same frame captured with the HQ camera, but using the new Picamera2 library. The camera was tuned with the imx477_scientific.json file (thanks to @cpixip for sharing it).

I am finalizing a new version of my DSuper8 software that already uses the new library. I hope to post it on the forum shortly, as with previous versions.

Regards