– what specifically do you mean by “blue halos”?

Let’s go through a little process of adjusting your color science.

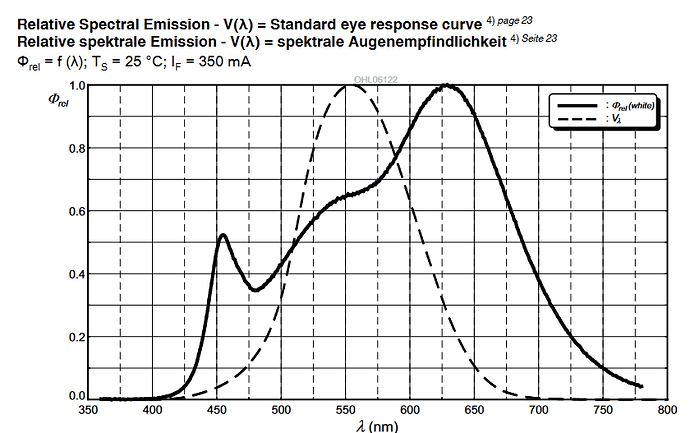

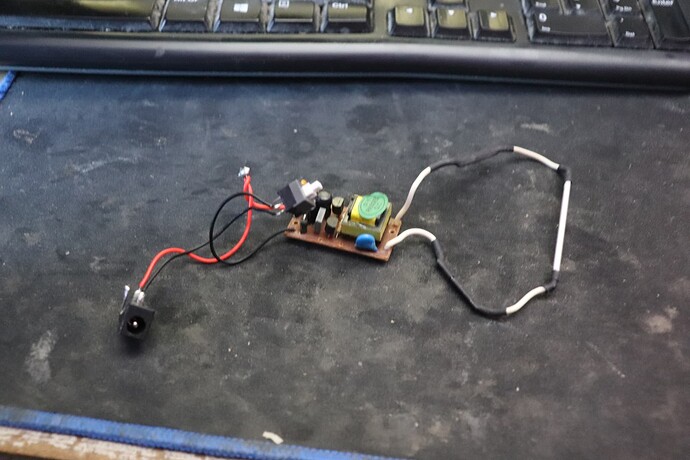

But before that, one note of caution: if you want to get good scan results, you should refrain from using a light source of unknown origin. There are all sorts of different illumination sources out in the wild, very often with very weird spectra. Google a little bit for spectra of fluorescent light sources (or other sources you might be interested in) and then google “spectrum D65” to see one of the spectral distributions your HQ camera is tuned to (in fact, practically every other color camera as well). You should notice some differences…

Any manufacturer of a scanner can use - within limits - cheaper illumination sources, as long as he adapts the color science of his processing pipeline accordingly. In a way, manually developing a raw image so that “it looks good on the screen” is doing a similar thing. But such an esthetic-based manual approach is nowhere close to the real thing, an exact color science result.

For most of us building a film scanner with available hardware, we are limited by the camera we opted to purchase for the project. Most of the time, this specific camera comes with software which will deliver an sRGB-output, a .jpg for example. The color calibration for this is baked into the software delivered with the camera and can usually not be changed by the user. There is a simple reason for this: color calibration is difficult. And of course, most manufacturer-based calibrations are based on spectra like the D65 one - i.e. “daylight”, not any exotic light source. So: get your light source close to such a spectrum, and things should improve colorwise.

The Raspberry Pi foundation’s choice of the libcamera image processing pipeline opens up for the first time the possibility to do your own color science. That’s a great step forward, but, as already noted, the standard file describing this processing for the HQ camera has some deficits.

There are threads on this forum with more detail about this, but the variation of the CCM-matrices with respect to color temperature found in the original tuning file indicates that it is not trivial to capture good quality calibration images. Another rather annoying thing is a baked-in lens shading correction. This shading correction is only valid for a specific lens - and it is unknown which lens this is. The result of this mismatch might be a slight vignetting of your image. It really depends on the lens you are using. Many people, including myself, are using a lens designed for the much larger 35mm format. This 35mm-lens displays no vignetting at all at the scales we are working at (8mm) - that’s the reason the lens shading correction is missing in the “imx477_scientific.json” tuning I advertised before.

The color science in the “imx477_scientific.json” tuning file is also based on a different approach not using calibration images at all. So the variation of the CCM-matrices with color temperature is much smoother. But the most noticeable impact on colors is actually caused by the (wrong) contrast curve of the original tuning file. The original one features a rather strong contrast curve, enhancing the saturation of colors and flattening dark and bright image areas. The “imx477_scientific.json” tuning file features a standard rec709-curve instead.
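To make the "standard rec709-curve" a bit more concrete, here is a minimal sketch of the Rec.709 transfer function as it is defined in ITU-R BT.709. The function name `rec709_oetf` is made up for this illustration, and the sketch says nothing about how the curve is actually stored in the tuning file; it only shows the shape of the mapping from linear light to encoded values.

```python
def rec709_oetf(linear: float) -> float:
    """Map a linear light value in [0, 1] to a Rec.709-encoded value in [0, 1]."""
    # Clamp the input so values outside the nominal range do not break the power law.
    linear = min(max(linear, 0.0), 1.0)
    if linear < 0.018:
        # Linear segment close to black.
        return 4.5 * linear
    # Power-law segment for the rest of the range.
    return 1.099 * linear ** 0.45 - 0.099


if __name__ == "__main__":
    # Print a few sample points to get a feeling for the curve.
    for value in (0.0, 0.01, 0.18, 0.5, 1.0):
        print(f"{value:.2f} -> {rec709_oetf(value):.3f}")
```

Unlike a strong S-shaped contrast curve, this mapping does not flatten the highlights any further than the plain power law does, which is one reason the two tuning files give visibly different results.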

So, your scan results will look different for the two tuning files available in the standard Raspberry Pi distribution.

There is yet another thing I want to suggest to you. Make sure that your whitebalance is close to the appropriate point. That is, do not use automatic whitebalancing but set your red and blue gain manually.

Here’s a simple procedure to do so:

- Remove the film from your unit.

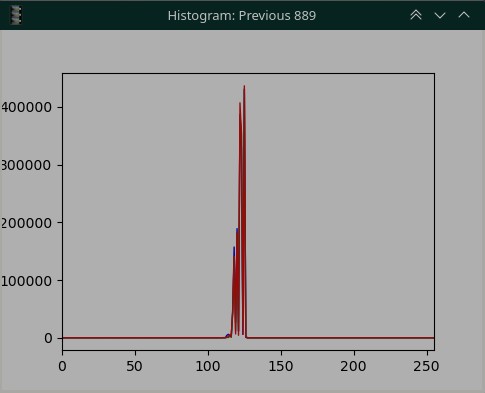

- Set a fixed exposure in such a way that .jpg-images delivered by your camera show a level around 128 in the green channel of your images.

- Now adjust the blue gain in such a way that the green and blue channel values are approximately the same.

- Next, adjust the red gain so red and green channels share the same values.

- Repeat the blue-gain and red-gain adjustments from the two previous steps until the values of all color channels have converged to approximately the same value (around 128).
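The loop above can be sketched in a few lines of Python. This is only an illustration under assumptions: `balance_gains` and `simulated_camera` are hypothetical names, and the simulated camera (a fixed linear sensor response followed by a simple 1/2.2 gamma) merely stands in for a real capture so that the sketch runs without hardware. Because the .jpg values are gamma-encoded, a single correction does not land exactly on target, which is why the adjustment has to be repeated.

```python
from typing import Callable, Tuple

ChannelMeans = Tuple[float, float, float]  # mean (red, green, blue) of a capture


def balance_gains(
    measure: Callable[[float, float], ChannelMeans],
    red_gain: float = 1.0,
    blue_gain: float = 1.0,
    tolerance: float = 1.0,
    max_rounds: int = 20,
) -> Tuple[float, float]:
    """Adjust blue gain, then red gain, until both channels match green."""
    eps = 1e-6  # avoids a division by zero on a completely black channel
    for _ in range(max_rounds):
        r, g, b = measure(red_gain, blue_gain)
        if abs(r - g) <= tolerance and abs(b - g) <= tolerance:
            break
        # Bring blue towards green first ...
        blue_gain *= g / max(b, eps)
        # ... then measure again and bring red towards green.
        r, g, b = measure(red_gain, blue_gain)
        red_gain *= g / max(r, eps)
    return red_gain, blue_gain


def simulated_camera(red_gain: float, blue_gain: float) -> ChannelMeans:
    """Hypothetical stand-in for a capture of the bare light source."""
    raw_r, raw_g, raw_b = 0.10, 0.22, 0.15  # made-up linear sensor response

    def encode(x: float) -> float:
        # Simple display gamma as a rough stand-in for the .jpg encoding.
        return 255.0 * min(x, 1.0) ** (1.0 / 2.2)

    return encode(raw_r * red_gain), encode(raw_g), encode(raw_b * blue_gain)


if __name__ == "__main__":
    red, blue = balance_gains(simulated_camera)
    print("gains:", round(red, 3), round(blue, 3))
    print("channel means:", simulated_camera(red, blue))
```

With a real camera, `measure` would have to apply the two gains, capture a frame and average a patch of it. With Picamera2 that presumably means switching off auto white balance (`AwbEnable`) and setting `ColourGains` to the (red, blue) pair; that part is left out here because it depends on your setup.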

The above procedure will ensure that your camera looking at your light source will see just a boring grey image. If you notice any intensity or color variations in that image, you need to check your hardware setup. Some of the things which can happen:

- Dark spots, either sharply focussed or blurry - that is usually caused by dirt on either the diffuser of your light source, the lens or the sensor itself. Clean the stuff.

- Vignetting - can be caused either by not diffusing enough in the light source, or by the lens being of less than perfect quality. This could be solved by an appropriate lens shading compensation in software (but this requires quite a deep dive into libcamera’s lens shading algorithm, plus the challenge of capturing appropriate calibration images) or by improving the hardware (either improving the light source or choosing a better lens, depending on where the vignetting is happening).

- Color variations - that should actually not happen with a whitelight LED. Can easily happen if using separate LEDs for red, green and blue with not enough mixing occurring in the light source. Might happen with a whitelight LED if for example strongly colored areas are close to your LED which reflect light onto the frame as well.
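If you prefer numbers over eyeballing the grey frame, a rough check along the following lines might help. It is a sketch under assumptions: the image is taken to be a plain nested list of (r, g, b) tuples, loading an actual .jpg into that form is not shown, and `patch_mean` and `flat_field_report` are names invented for this example. It compares the four corners against the center, both in brightness (vignetting) and in channel differences (color variations).

```python
from typing import Dict, Sequence, Tuple

Pixel = Tuple[float, float, float]
Image = Sequence[Sequence[Pixel]]  # rows of (r, g, b) pixels


def patch_mean(image: Image, top: int, left: int, size: int) -> Pixel:
    """Average each color channel over a square patch."""
    total = [0.0, 0.0, 0.0]
    for row in image[top:top + size]:
        for pixel in row[left:left + size]:
            for channel in range(3):
                total[channel] += pixel[channel]
    count = size * size
    return (total[0] / count, total[1] / count, total[2] / count)


def flat_field_report(image: Image, size: int) -> Dict[str, Dict[str, float]]:
    """Compare corner patches with the center patch of a grey frame.

    Assumes the image is at least `size` pixels in both directions and that
    the center patch is not completely black in the green channel.
    """
    height, width = len(image), len(image[0])
    positions = {
        "center": ((height - size) // 2, (width - size) // 2),
        "top_left": (0, 0),
        "top_right": (0, width - size),
        "bottom_left": (height - size, 0),
        "bottom_right": (height - size, width - size),
    }
    means = {name: patch_mean(image, top, left, size)
             for name, (top, left) in positions.items()}
    center_green = means["center"][1]
    report = {}
    for name, (r, g, b) in means.items():
        report[name] = {
            "brightness_vs_center": g / center_green,  # below 1.0 hints at vignetting
            "red_minus_green": r - g,                  # non-zero hints at a color cast
            "blue_minus_green": b - g,
        }
    return report


if __name__ == "__main__":
    # Synthetic frame: uniform grey, with a darker top-left corner.
    frame = [[(128.0, 128.0, 128.0) for _ in range(40)] for _ in range(40)]
    for y in range(8):
        for x in range(8):
            frame[y][x] = (100.0, 100.0, 100.0)
    for name, values in flat_field_report(frame, size=8).items():
        print(name, values)
```

What counts as "too much" deviation is a judgment call; the sketch only reports the numbers and deliberately does not set a threshold.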

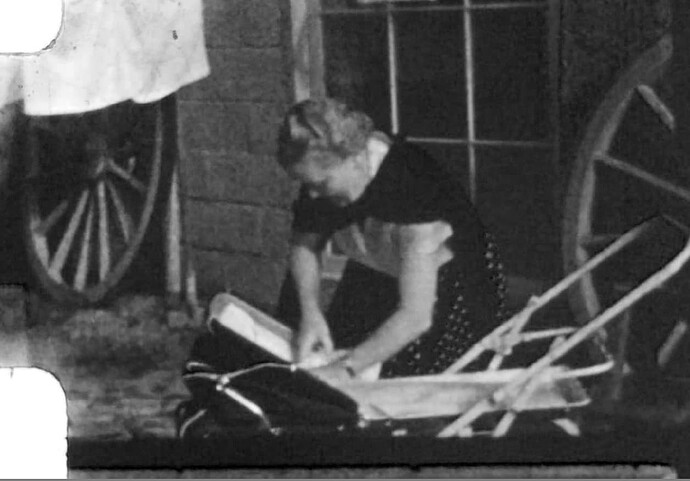

Anyway. Once you have arrived at a nice grey image in the above setup, you should be good to go for an initial scan of your film stock. Insert a film, adjust the exposure for the film and see what you get.

Different film stocks will have different color characteristics of the dyes and film base. The white balance you achieved with the procedure above should get you close to a film-specific white balance, but probably not quite to the point. You can fine-tune your manual whitebalance point by the following procedure:

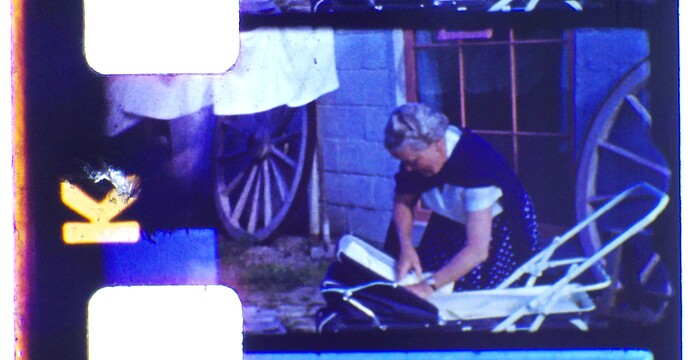

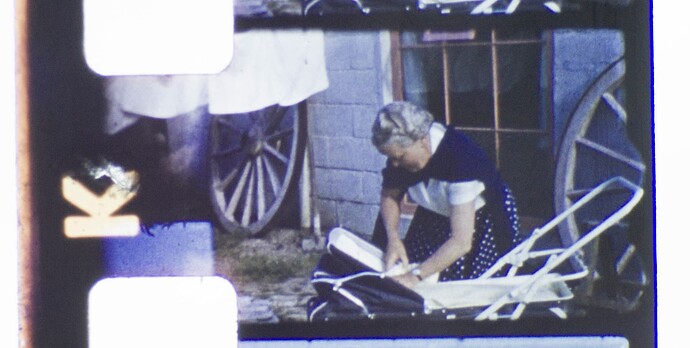

- Adjust your exposure in such a way that the brightest areas of your film scan are again a medium gray.

- Make sure that the content of these areas of interest is indeed expected to be “white” (or, in our case, “grey”). Do not use things like the sun or other areas which originally had a color. In your above scan, for example, the laundry in the background would be an appropriate target.

- Adjust again your red and blue gain in such a way that these brightest image areas all have similar values in the red, green and blue color channels.

(A note of caution: I have sometimes experienced an unwanted color shift at this point of the procedure. For example, I have Kodachrome film stock which has a tiny magenta color shift in very bright image areas. In this case, it is better not to use the brightest image areas for this fine-tuning, but rather image areas with a medium intensity which are known to be grey in the original scene. Or: simply stick to the whitebalance setting you have obtained in the first adjustment step.)

At this point you should have color-wise a scan quality which is usable. As I mentioned above, the whitebalance of amateur film stock is anyway never spot on, because only two different color temperatures were available (daylight and tungsten) when the original recording happened. So it’s pure luck to have a match. Furthermore, there were also variations in the processing labs, leading to small color shifts between different film rolls. While that was barely noticeable when projecting the material in a darkened room, your scanner will pick these color shifts up. But these kinds of color variations can easily be handled in postproduction.
