Well, this kind of warp is exactly what happens if the cut between the scenes is not precise. Here’s a way to check this:

For both scenes, select “nearest neighbour” interpolation in the “Retime Process” setting. Now inspect the cut. Usually, your cut will be off by a single frame. That is hard to spot: you need to step through the footage frame by frame.

In any case, repositioning the cut should solve the issue.
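To see why an imprecise cut produces this warp, it helps to look at which source frames a 30 fps timeline draws from 18 fps footage. The sketch below is a simplification and not DaVinci's actual retime code. It assumes that output frame *i* shows source position i × 18 / 30, that “nearest neighbour” rounds that position to the closest whole frame, and that blending modes (frame blend, optical flow) build every in-between frame from the two source frames around it. If the edit point does not coincide with the real shot change, those in-between frames are synthesised from two different shots. All function names are hypothetical.

```python
import math
from fractions import Fraction


def source_position(out_frame: int, src_fps: int = 18, dst_fps: int = 30) -> Fraction:
    """Exact (fractional) source-frame position shown at an output frame."""
    return Fraction(out_frame * src_fps, dst_fps)


def nearest_neighbour_frame(out_frame: int, src_fps: int = 18, dst_fps: int = 30) -> int:
    """Nearest neighbour: show the closest whole source frame, never a blend."""
    return math.floor(source_position(out_frame, src_fps, dst_fps) + Fraction(1, 2))


def frames_blended_across_cut(first_frame_of_new_shot: int, n_out: int,
                              src_fps: int = 18, dst_fps: int = 30) -> list[int]:
    """Output frames that a blending retime would build from the last frame of
    one shot and the first frame of the next, i.e. across the real shot change."""
    hits = []
    for i in range(n_out):
        s = source_position(i, src_fps, dst_fps)
        before = math.floor(s)
        # Only non-integer positions are synthesised from two source frames.
        if s != before and before + 1 == first_frame_of_new_shot:
            hits.append(i)
    return hits
```

With a real shot change at source frame 2, output frames 2 and 3 fall between source frames 1 and 2 and would mix both shots. Nearest neighbour instead shows frame 1 or frame 2 unaltered, which is why it makes a misplaced cut easy to spot.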

Once the cut is set correctly, do not forget to set the interpolation mode of both scenes back to whatever you decided works best for each of them. Remember that you can choose a different interpolation scheme for each scene.

I usually simply run the automatic scene detection on the whole footage. Again, it is important that “nearest neighbour” is selected at this point. (I assume that your footage is 18 fps and your timeline is set to something like 30 fps.)

Once the auto scene detection has done its thing, I go through the footage and check the precision of every detected cut.

While the auto detection is exceptionally good, it sometimes misses a cut (which I then add manually) or places a cut one or two frames off. In that case, I simply readjust the cut.
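Checking the cuts by eye works well. If you wanted to automate the “one or two frames off” part of that check, one simple approach is to compute the frame-to-frame difference in a small window around each detected cut and move the cut to the largest jump. This is a hypothetical helper, not what DaVinci's scene detector does internally, and it assumes each frame has already been decoded into a flat list of grayscale values.

```python
from typing import Sequence

# Hypothetical representation: one frame = flat list of grayscale pixel values.
Frame = Sequence[float]


def frame_difference(a: Frame, b: Frame) -> float:
    """Mean absolute per-pixel difference between two frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def refine_cut(frames: Sequence[Frame], detected_cut: int, window: int = 2) -> int:
    """Return the index of the first frame of the new scene by searching
    +/- `window` frames around the detected cut for the largest jump."""
    lo = max(1, detected_cut - window)
    hi = min(len(frames) - 1, detected_cut + window)
    return max(range(lo, hi + 1),
               key=lambda i: frame_difference(frames[i - 1], frames[i]))
```

A cut detected two frames late (or early) snaps back to the frame where the picture content actually changes; a missed cut still has to be added by hand.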

Once all of the footage has been precisely separated into scenes, I go from scene to scene doing color correction and image stabilization, and selecting the appropriate image interpolation mode (“Retime Process” and “Motion Estimation” settings) for each one.

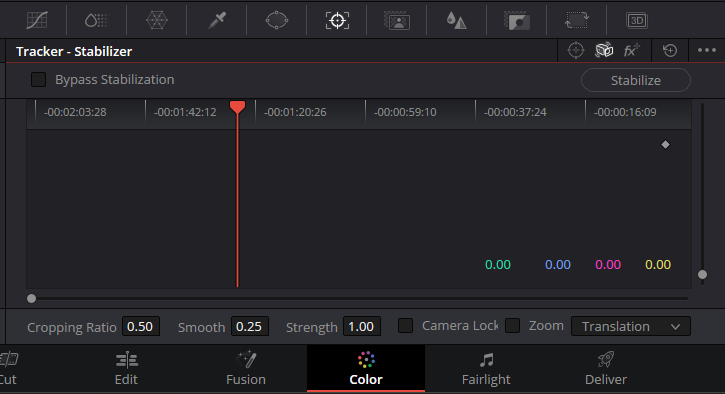

Anyway, regarding losing part of the image during stabilization: that is to be expected. After all, you are compensating for a shaking camera, and no image area beyond the recorded frame was captured at the time the footage was taken.

You have several countermeasures available here. First, do use as much of your original scanned image as possible; that is, do not crop before the stabilization run. This way, you can potentially use all the image information available to you. Remember that the original Super-8 projection window is smaller than the original camera frame.

Second, choose your stabilization settings wisely. The default “smoothing” setting of 0.25 performs a mild correction; raising it to 0.6 yields a much stronger effect, and the stronger the correction, the further the frames are shifted and the more border can show.
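Why does stronger smoothing cost more of the image? A stabilizer shifts each frame from the shaky camera path onto a smoothed one, and the size of that shift is the width of the border that can appear. The sketch below illustrates this with simple causal exponential smoothing. It is not Resolve's stabilization algorithm, its `smoothing` parameter is only loosely analogous to the 0.25 / 0.6 values in the Resolve setting, and the camera path (in pixels) is a made-up example.

```python
def smooth_path(path: list[float], smoothing: float) -> list[float]:
    """Causal exponential smoothing of a 1-D camera path in pixels.
    smoothing in [0, 1): 0 = no smoothing, values near 1 = very strong."""
    smoothed = [path[0]]
    for x in path[1:]:
        smoothed.append(smoothing * smoothed[-1] + (1 - smoothing) * x)
    return smoothed


def max_correction(path: list[float], smoothing: float) -> float:
    """Largest shift the stabilizer must apply to any frame; this is the
    width of the border that can become visible at the frame edge."""
    return max(abs(s - x) for s, x in zip(smooth_path(path, smoothing), path))


# Hypothetical horizontal jitter of a hand-held camera, in pixels.
jitter = [0, 4, 0, 4, 0, 4]
mild = max_correction(jitter, 0.25)
strong = max_correction(jitter, 0.6)
```

On this jitter, smoothing 0.25 needs a correction of at most 1 px, while smoothing 0.6 needs about 2.4 px, so the steadier result comes at the price of a wider border.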

Even so, there will be cases where frame borders show up after stabilization.

There are basically three ways to deal with that.

In order of increasing complexity: the simplest approach is to switch off the stabilization altogether (yes!) and use the original footage as it is.

My standard choice for correcting frame borders, however, is to slightly increase the zoom level for the scene in question, just enough to get rid of the borders. That is usually not noticeable at all.
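How much zoom is “just enough”? Assuming the stabilization shift is applied first and the zoom then scales the image about the frame centre, a shift of up to d pixels on a frame of width W needs a zoom of at least W / (W - 2d), and likewise for the height. A minimal sketch; the 1440 × 1080 scan size and the shift values are hypothetical examples, and DaVinci may order its transforms differently.

```python
def zoom_to_hide_borders(width: float, height: float,
                         max_dx: float, max_dy: float) -> float:
    """Smallest uniform zoom (1.0 = no zoom) that hides the borders left by a
    stabilization shift of up to max_dx / max_dy pixels in either direction.
    Assumes the shift is applied first and the zoom scales about the centre."""
    if 2 * abs(max_dx) >= width or 2 * abs(max_dy) >= height:
        raise ValueError("shift too large for this frame size")
    zx = width / (width - 2 * abs(max_dx))
    zy = height / (height - 2 * abs(max_dy))
    return max(zx, zy)  # the tighter axis decides
```

For a 1440-px-wide scan and a maximum horizontal shift of 36 px, this gives 1440 / 1368 ≈ 1.053, i.e. roughly 5 % zoom.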

You can refine this “zoom” approach by also adjusting the x- and y-positions of the clip to get rid of frame borders. At that level of correction, you iteratively change zoom level and image position until as much of the original frame as possible is used.
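Adjusting the position pays off when the stabilization shifts are lopsided, for instance mostly to the right. Offsetting the clip by the midpoint of the shift range makes the remaining border symmetric, so a smaller zoom suffices. The sketch below computes that offset and zoom for one axis, again assuming the shift is applied first and the zoom scales about the frame centre. Whether DaVinci's position values are measured before or after the zoom is not something this sketch establishes, which is one reason iterating by eye is still useful. The function names are hypothetical.

```python
def recentre_and_zoom(size: float, shifts: list[float]) -> tuple[float, float]:
    """For one axis (width for x, height for y): the constant position offset
    that centres the range of stabilization shifts, and the zoom that then
    hides the remaining borders. Assumes shift first, zoom about the centre."""
    lo, hi = min(shifts), max(shifts)
    offset = -(lo + hi) / 2      # move every frame by this constant amount
    residual = (hi - lo) / 2     # largest shift left after the offset
    if 2 * residual >= size:
        raise ValueError("shift range too large for this frame size")
    return offset, size / (size - 2 * residual)


def zoom_only(size: float, shifts: list[float]) -> float:
    """Zoom needed without any position offset, for comparison."""
    worst = max(abs(s) for s in shifts)
    return size / (size - 2 * worst)
```

With shifts between -10 and 50 px on a 1440-px width, the offset is -20 px and the required zoom drops from 1440 / 1340 ≈ 1.075 to 1440 / 1380 ≈ 1.043.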

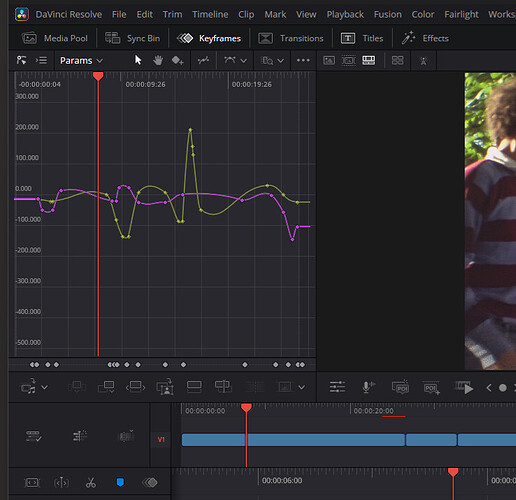

The most involved approach is to select an appropriate zoom level and than manually keyframe x- and y-positions of the scene over time. Here’s an example of how such a thing looks like:

The yellow and magenta curves above are the keyframe curves for x- and y-position over the course of a single scene. Each dot corresponds to a manually adjusted keyframe.

As you can see, this is a lot of work, so I only do it when absolutely necessary.
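The work comes from the fact that between two keyframes the position simply follows an interpolated curve, so every bit of shake that deviates from that curve needs another hand-set keyframe. The sketch below assumes plain linear interpolation between (frame, value) keyframes; DaVinci's keyframe curves need not be linear, so this is a simplification, and `position_at` is a hypothetical helper.

```python
from bisect import bisect_right


def position_at(keyframes: list[tuple[int, float]], frame: float) -> float:
    """Linearly interpolate a position value at `frame` from (frame, value)
    keyframes sorted by frame; hold the first/last value outside the range."""
    frames = [f for f, _ in keyframes]
    if frame <= frames[0]:
        return keyframes[0][1]
    if frame >= frames[-1]:
        return keyframes[-1][1]
    i = bisect_right(frames, frame)  # frames[i - 1] <= frame < frames[i]
    (f0, v0), (f1, v1) = keyframes[i - 1], keyframes[i]
    return v0 + (v1 - v0) * (frame - f0) / (f1 - f0)


# Hypothetical x-position keyframes (frame, pixels) for one scene.
x_keys = [(0, 0.0), (10, 20.0), (20, 0.0)]
```

Here the x-position rises linearly to 20 px at frame 10 and falls back to 0 at frame 20; any shake that does not follow these straight segments would need an extra keyframe.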

Again, normally I simply adjust the zoom factor so that no image borders are visible. Done.

One additional note: in my workflow, I keep image stabilization and motion interpolation separate. I have a first timeline (at 18 fps) where I only do image stabilization, and a second timeline (at 30 fps) where I do the frame interpolation from 18 fps to 30 fps on the stabilized footage. The reason is that pre-stabilizing the footage makes the interpolation's job easier. I am not sure in which order DaVinci applies these steps if you do everything in one timeline. It could interpolate frames first and stabilize afterwards, which would be the wrong way around, or it could stabilize first and interpolate afterwards, which is the correct order.