TIFF supports lossless compression, JPEG does not. Only use lossless compression schemes.

Some software has difficulty reading certain TIFF compression schemes - be sure to select one that all your software can read. 16-bit PNG might also be an alternative to try. Again, depending on the lossless compression scheme selected, some software might refuse to read your images.

Keep your signal path at 16 bit or more if possible. JPEG normally uses only 8 bit. Be careful, as some software can only handle 8 bit internally - be sure to check, because otherwise 16-bit images do not make much sense. VirtualDub plugins as well as AviSynth modules are the main culprits here. A 16-bit path gives you headroom you might need with difficult scenes. Otherwise, for most purposes, 8 bit works as well, provided you handle shadows and highlights already at the raw development stage. Throwing in a log encoding will get you yet more headroom without too much visual impact.
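To put a rough number on that headroom, here is a minimal sketch that counts how many integer code values land in one stop of the signal. The function name `codes_in_stop` and the pure-log curve spanning 8 stops are my own assumptions for illustration, not what any particular software uses.

```python
import math

def codes_in_stop(bits, stop, log_stops=None):
    """Count integer code values inside one stop of the signal.

    stop=0 is the brightest stop (signal 0.5..1.0), stop=1 the next
    one down (0.25..0.5), and so on.
    If log_stops is given, the signal is log-encoded over that many stops
    before quantization (a simple pure-log curve, assumed for illustration).
    """
    max_code = 2 ** bits - 1
    hi = 2.0 ** (-stop)
    lo = 2.0 ** (-(stop + 1))

    def encode(x):
        if log_stops is None:
            return x  # linear encoding
        # map the range 2**-log_stops .. 1.0 onto 0 .. 1
        return max(0.0, 1.0 + math.log2(x) / log_stops)

    return math.floor(encode(hi) * max_code) - math.floor(encode(lo) * max_code)

for stop in (0, 7):
    print(
        f"stop {stop}:",
        "8-bit linear =", codes_in_stop(8, stop),
        "| 16-bit linear =", codes_in_stop(16, stop),
        "| 8-bit log =", codes_in_stop(8, stop, log_stops=8),
    )
```

Under these assumptions, the eighth stop down (deep shadows) gets a single code value in 8-bit linear, 256 in 16-bit linear, and 31 in 8-bit log. That is why deep shadows fall apart in a linear 8-bit path once you start pushing them.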

Generally, to get file sizes down, the most effective factor is your image size. In my experience, Super-8 has at most a real resolution comparable to 4:3 HD sources (1440 x 1080). So an easy way to reduce demands on disk space is to scale the images down a little bit.
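A quick back-of-the-envelope sketch of how frame size drives disk usage, assuming uncompressed 16-bit RGB frames (lossless compression will shave a bit off these numbers). The helper name `uncompressed_mib` is just for illustration.

```python
def uncompressed_mib(width, height, channels=3, bits=16):
    """Uncompressed frame size in MiB (1 MiB = 1024 * 1024 bytes)."""
    return width * height * channels * bits / 8 / (1024 * 1024)

for w, h in [(2400, 1800), (2124, 1594), (1440, 1080)]:
    print(f"{w} x {h}: {uncompressed_mib(w, h):.1f} MiB per frame")
```

Size scales with the pixel count, so going from 2400 x 1800 down to 1440 x 1080 cuts the per-frame size to roughly a third.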

There are two camps here: one operates under the statement “grain is the film”. That is, the appearance of the film grain specific to the footage is important. Real film grain has a much finer texture than the optical information imprinted in the film, which is basically limited by the lens used to record the scene. So if you are in the “film grain” camp, you will want to work with the highest resolution you can afford throughout your pipeline, to capture the grain structure as well as possible.

The other camp is more interested in transforming historical footage as well as possible to today's standards. That is, get good digital colors, even if the source footage has strong color casts. Ideally, get rid of all the grain, as this allows you to sharpen the original footage quite noticeably. Finally, push the frame rate from the meager 18 fps most Super-8 footage was shot with to at least 30 fps. This will improve the perception of pan and tilt movements dramatically in the final result.

I am (currently) more in the second camp. Here’s a breakdown of my signal path - all image formats are 16bit images:

- Capture: 4056 x 3040 px, .dng raw file, 23.5 MB per frame

- Raw-development via DaVinci: 2400 x 1800 px, tif, 23.2 MB

- Cutout, stabilization, initial color correction and zoom-down: 2124 x 1594 px, .png-file, 18.3 MB per frame

- Denoise and enhance algorithms: 2124 x 1594 px, .tif-file, 18.1 MB per frame

- Final editing: 1440 x 1080 px, arbitrary video format

The final .tif-files are piped into DaVinci, which handles the 18 fps → 30 fps upscaling. Also at that stage, the central portion of the frame is cut out to the final 1440 x 1080 px resolution. The slightly larger intermediate frame size is utilized in the degraining operation (an AviSynth/VirtualDub based software, full 16-bit pipeline) as well as in a deshaking operation. The latter gets rid of some annoying camera shakes usually found in handheld Super-8 footage and also helps the degraining stage a little bit.
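For illustration, here is a small sketch of the two bits of arithmetic in that last stage. It assumes the 1440 x 1080 frame is a plain centered crop of the 2124 x 1594 intermediate with no further rescaling, and it only shows where each 30 fps output frame sits on the 18 fps source timeline. How DaVinci actually synthesizes the in-between frames is not covered here, and both function names are hypothetical.

```python
from fractions import Fraction

def center_crop_box(src_w, src_h, dst_w, dst_h):
    """Return (left, top, right, bottom) of a centered crop window."""
    left = (src_w - dst_w) // 2
    top = (src_h - dst_h) // 2
    return left, top, left + dst_w, top + dst_h

def source_position(out_index, src_fps=18, dst_fps=30):
    """Position of an output frame on the source timeline, in source frames."""
    return Fraction(out_index * src_fps, dst_fps)

print("crop box:", center_crop_box(2124, 1594, 1440, 1080))
for n in range(6):
    print(f"output frame {n} -> source position {float(source_position(n)):.1f}")
```

With these numbers the crop window is (342, 257, 1782, 1337), and 18:30 reduces to 3:5. Only every fifth output frame falls exactly on a source frame; the four in between have to be interpolated somehow.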

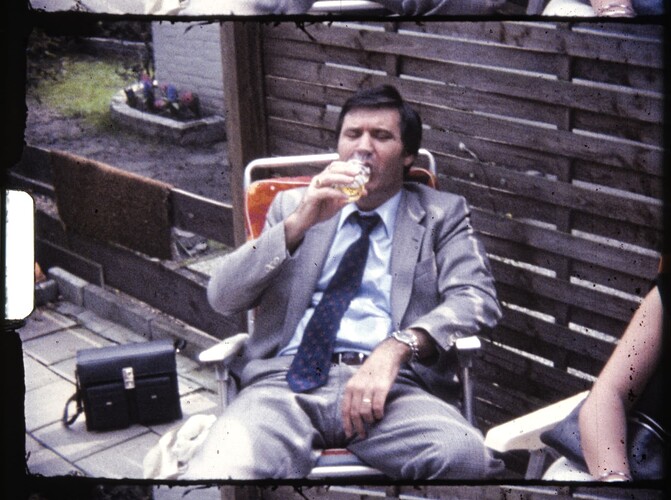

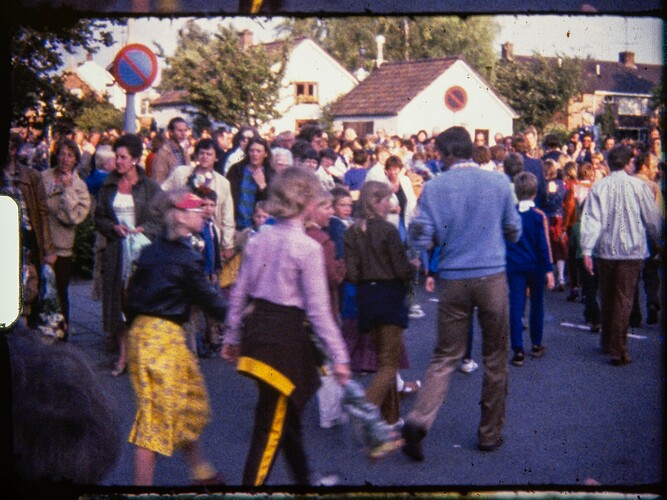

Well, the resulting footage has lost all what generally is considered “film look”. Here’s an example of the current state of affairs.

Compare the cutout labeled “raw” with any of the other three. The cutout correlates approximately to the full frame like this:

For certain scenes, the improvement of the details in the footage is quite noticeable, for other scenes not so much - that is my current area of interest. If for aesthetic reasons I want a more film-like output, I add artificial grain, flicker and scratches in post. (No, I am not really doing this.)

Incidentally, that is an active area of research - grain pushes up the bandwidth demands on digital transmissions. So one idea people are researching is to separate the film grain from the original footage and send only the cleaned film over the transmission line, together with the noise characteristics of the original footage - which do not need much transmission capacity. Only at the viewer’s side is artificial noise mimicking the detected noise characteristics added, just before displaying the stuff. Funny times…
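As a toy illustration of that idea (not how any real codec does it), the sketch below reduces the “noise characteristics” to a single number, the standard deviation of the difference between the original and the denoised signal, and then re-adds Gaussian noise of that strength on the receiving side. Real schemes model grain far more carefully. The function names, the 1-D test signal and the perfect denoiser are all assumptions.

```python
import math
import random
import statistics

def grain_strength(original, denoised):
    """Estimate grain strength as the std deviation of the removed residual."""
    residual = [o - d for o, d in zip(original, denoised)]
    return statistics.pstdev(residual)

def add_synthetic_grain(clean, strength, seed=0):
    """Add Gaussian noise of the given strength to a clean signal."""
    rng = random.Random(seed)
    return [c + rng.gauss(0.0, strength) for c in clean]

# Toy 1-D "image": a smooth signal plus grain with sigma = 0.05.
clean = [0.5 + 0.2 * math.sin(i / 50) for i in range(10000)]
original = add_synthetic_grain(clean, 0.05, seed=1)

# Sender side: assume an ideal denoiser that recovers the clean signal.
denoised = clean
strength = grain_strength(original, denoised)  # the only extra data to transmit

# Viewer side: regrain the clean signal with the transmitted strength.
regrained = add_synthetic_grain(denoised, strength, seed=2)
print(f"estimated grain strength: {strength:.3f}")
```

The regrained signal is not sample-for-sample identical to the original, it only has statistically similar noise. That is the point: the grain itself never has to go over the wire.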