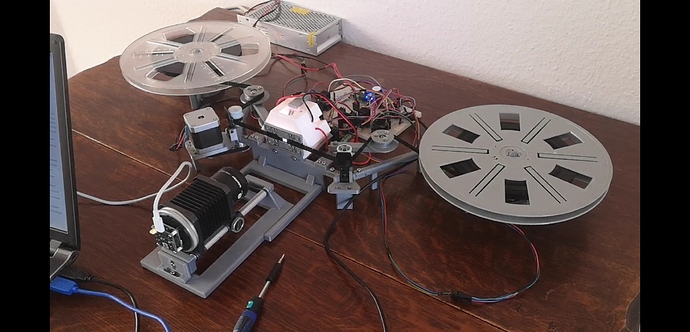

Well, have a look at my current setup, utilizing a see3CAM CU135 ( AR1335, sensor size about 1/3.2", or 4.54 x 3.42 mm, diagonal 5.68 mm, max 4096 x 3120 px), mounted onto a Novoflex bellow via a 3D-printed adapter. On the other side, the Componon-S 50 mm is placed - not in reverse mode.

As you can see, the distance between sensor and lens is about double the size of the focal length of the lens, and the distance between lens and movie frame is about the same. Such an optical setup leads to a magnification scale of about 1:1. In truth, the optics are adjusted to a scale factor of 1:1.7. So the camera sensor sees an area of about 8 x 6 mm. This allows me to image the sprocket neighbouring the frame and have a little headroom for the frame position. The actual camera frame of a Super-8 movie is 5.69 x 4.22 mm, the projector is supposed to use only 5.4 x 4.01 mm of the camera frame.

Now, I have used the same setup with the Raspberry v1-camera (OV5647, 1/4", 3.6 x 2.7 mm, diagonal 4.5 mm, max 2592x1944 px) as well as the v2-camera (IMX219, same dims as OV5647, max 3280x2464 px). For these sensors, the lens only has to be moved a little bit to get the same scale as with the see3CAM.

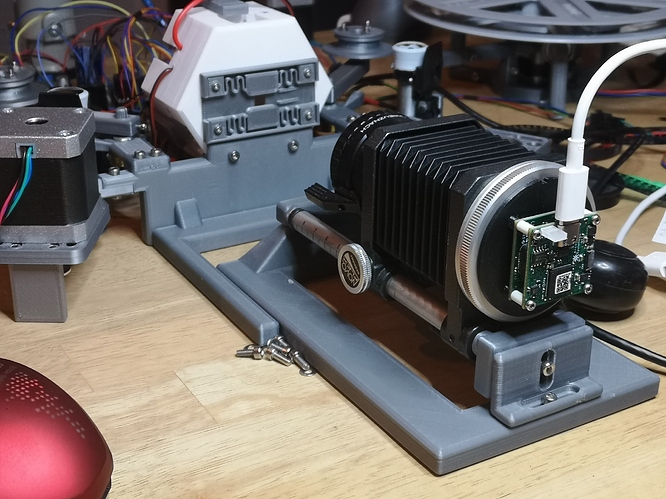

Here’s a more detailed view of the see3CAM mounted on the 3D-printed adapter which fits directly into the standard Novoflex mount:

I would prefer to use the same setup for testing the new Raspberry HD-camera (IMX477, 1/2.3", 6.17x4.56 mm, diagonal 7.66 mm, max 4096x3040 px), but the bulky C-mount of the camera is getting into the way of designing an adapter. Currently, the only option I see is to unscrew the whole aluminium mount and just use the bare sensor - I am not sure at the moment if I want to go along that path. Otherwise, I might opt to design a totally different camera setup.

Both routes will take some time, I am afraid, as I am currently using the scanner to digitize Super-8 material. I would also need to partially rewrite my old software to handle an appropriate camera mode for the new HD-camera. So it will take some time for me until I can share scan results with the new camera chip.

I am currently using the see3CAM in a resolution of 2880 x 2160 px, running at about 16.4 fps. From the specs of the Raspberry HD-camera, I think you would get maximal 10 fps out of the HD-camera at that resolution (in fact, I got about 7 fps with my python-based client-server software). So frame-rate wise, the new HD-camera is not better than my current setup.

My primary goal is to do fast HDR-scans - and the major bottleneck here is the time a camera needs to reliably settle onto a given exposure value. Not sure whether the new HD-camera behaves in this respect better than the old ones. Maybe the new libcamera-interface is helpful here. I will update on this as soon as I know more.