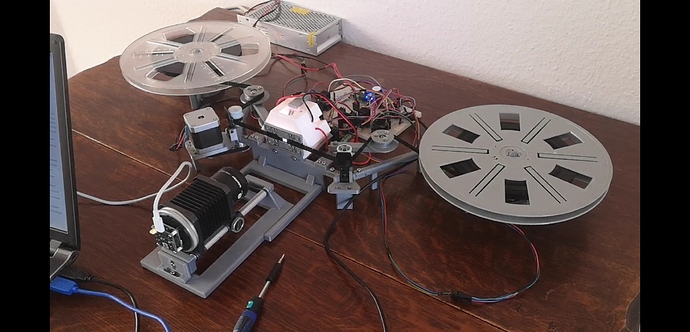

ok, here’s the promised update on the Raspberry HQCam:

Raspberry Pi cameras feature different processing pipelines for still and video imagery. In fact, there is also a third pathway, namely output of raw sensor data, but it is prohibitively expensive in terms of scan speed and post-processing time.

The Raspberry Pi HQCam is based on a sensor mainly intended for video applications, which is reflected in the available “camera modes” of this unit - only two of the four modes listed feature a still image pipeline, but all of them can output video.

The native sensor resolution is 4056 x 3040, but the maximum frame rate is only 10 fps at that resolution. The most reasonable mode to use is half of that resolution, 2028 x 1520, which is specified with a maximum of 50 fps. This mode is also binned 2x2, so it combines four sensor pixels into one and reduces the sensor noise by a factor of about two.
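The factor of two follows from averaging four pixels: with independent noise, the standard deviation drops by the square root of four. Here is a minimal sketch to check that numerically. It assumes ideal Gaussian noise and plain averaging, which is a simplification of what the sensor actually does, and the function name is made up for this illustration.

```python
import random
import statistics


def binned_noise_ratio(n_blocks=20000, sigma=1.0, seed=0):
    """Return std(single pixel) / std(2x2-averaged pixel) for simulated noise."""
    rng = random.Random(seed)  # fixed seed so the result is repeatable
    single = []
    binned = []
    for _ in range(n_blocks):
        # Four independent noisy pixels forming one 2x2 block (assumption).
        block = [rng.gauss(0.0, sigma) for _ in range(4)]
        single.append(block[0])
        binned.append(sum(block) / 4.0)
    return statistics.pstdev(single) / statistics.pstdev(binned)


if __name__ == "__main__":
    print(f"noise reduction factor: {binned_noise_ratio():.2f}")
```

The printed ratio should land close to 2; correlated noise or a different binning scheme on the real sensor would change that.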

The maximum frame rates specified are only achievable in video mode, because in still mode the Raspberry Pi cameras actually need to capture several images before outputting a single result (mainly because the automatic tuning algorithms have to adapt to the scene being photographed).

Working in video mode means that the data coming from the camera is MJPEG-encoded, so it is heavily processed before it is available for use. That said, the raw data is probably not untouched either: people have seen characteristics in it (mainly certain noise characteristics) which indicate that some image tweaking already happens at the sensor level - so chances are that even the official “raw” image delivered by the sensor already has some (mainly noise-reducing) image processing applied.

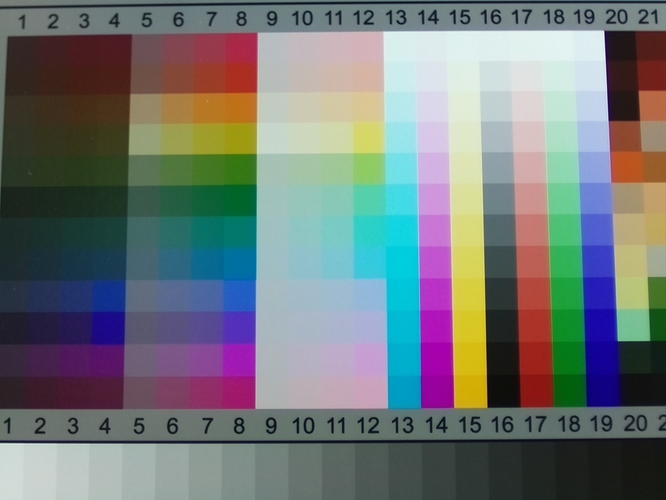

However, the MJPEG encoding available with this sensor easily outperforms the encoding which is available with the v1- and v2-cameras of the Raspberry Pi Foundation. The encoding quality is already reasonable at a quality setting of 5%, and it increases drastically when the quality is raised above about 20%. Visually, there is no noticeable difference between a quality setting of 60% and a higher setting of, say, 95%.

The HQCam can be accessed with the usual software, provided the OS is up-to-date. Access via raspistill and raspivid in a film scanning project is prohibitively slow or complicated (make your choice), but access via the picamera lib is fine - even though this library hasn’t seen any major update for quite some time.
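For anyone who wants to try the picamera route, here is a minimal sketch of grabbing a single JPEG frame through the video port. It is not my actual scanning code. The helper names and defaults are made up for this illustration; the resolution and quality are the values discussed above, and the 1/32 s exposure is the constant value mentioned further down in this post. The capture part only runs on a Raspberry Pi with picamera installed.

```python
import io


def capture_settings(width=2028, height=1520, exposure_s=1 / 32, jpeg_quality=60):
    """Collect the capture parameters in one place (hypothetical helper)."""
    return {
        "resolution": (width, height),
        # picamera expects the shutter speed in microseconds
        "shutter_speed": round(exposure_s * 1_000_000),
        "quality": jpeg_quality,
    }


def capture_jpeg(settings):
    """Grab one JPEG frame via the video port; needs a Raspberry Pi camera."""
    import picamera  # imported lazily so capture_settings() works on any machine

    with picamera.PiCamera(resolution=settings["resolution"]) as camera:
        camera.shutter_speed = settings["shutter_speed"]
        stream = io.BytesIO()
        # use_video_port=True avoids the slower still-image pipeline
        camera.capture(stream, format="jpeg", use_video_port=True,
                       quality=settings["quality"])
        return stream.getvalue()


if __name__ == "__main__":
    print(capture_settings())
```

In a real scanner you would probably also want to fix gains and white balance so that consecutive frames match, but that depends on the setup.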

The color separation of the new camera is fine, but the automatic white balance does not seem to be as good as with the v1- or v2-cameras.

Optically, this is the first sensor from the Raspberry Pi Foundation which is designed for attaching arbitrary lenses. The older v1- and v2-cameras feature a micro lens array designed to work with the mobile phone lenses supplied with the camera. If you exchanged the lens of the older Raspberry Pi cameras for a lens with a different focal length, you ended up with color shifts and desaturation effects which are impossible to correct in post production. While the v1-camera performed substantially better here than the v2-camera, this issue is totally gone with the HQCam.

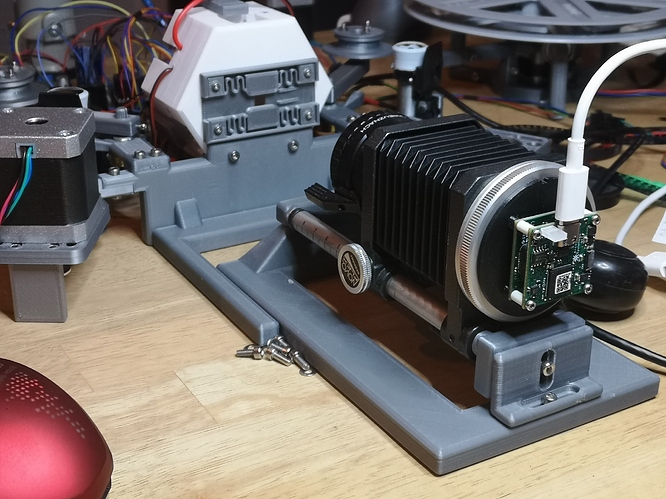

Mechanically, the camera features a machined block with standard connectors for lenses and tripods. Integrated into this block is also the IR-stop filter, so you can attach basically any lens you have available. I am using the trusted Schneider Componon-S for scanning purposes.

The outline of the new camera (angled, larger spatial footprint) makes it somewhat more complicated to design a housing or mounting for an existing design, but it is not too challenging.

For my specific application - HDR-scanning of Super-8 stock - it is mandatory that scanning and processing are reasonably fast. Standard Kodachrome film stock, for example, has a dramatic dynamic range, which is difficult if not impossible to capture with a single exposure. So several different exposures need to be taken of each single frame in order to capture the full dynamic range of the film image. This leads to huge amounts of data to be captured, transferred and processed. The most reasonable resolution to work with in the case of the HQCam is 2028 x 1520. In my setup, this gives me capture times of about 1.5 - 2 seconds per frame, plus about 1 to 3 additional seconds for combining the different exposures via software (this time increases quadratically with image size).
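To give an idea of what "combining the different exposures" can look like, here is a minimal sketch of one common approach: a per-pixel weighted average where values near mid-grey get the highest weight (the well-exposedness idea known from exposure fusion). This is a stand-in for illustration, not necessarily the algorithm running in my setup, and it works on flat lists of grey values in [0, 1] to stay dependency-free.

```python
import math


def well_exposedness(value, sigma=0.2):
    """Gaussian weight that favours pixel values near mid-grey (0.5)."""
    return math.exp(-((value - 0.5) ** 2) / (2.0 * sigma ** 2))


def fuse_exposures(exposures, sigma=0.2):
    """Blend equally sized greyscale images given as flat lists of floats."""
    n_pixels = len(exposures[0])
    if any(len(img) != n_pixels for img in exposures):
        raise ValueError("all exposures must have the same size")
    fused = []
    for i in range(n_pixels):
        values = [img[i] for img in exposures]
        weights = [well_exposedness(v, sigma) for v in values]
        total = sum(weights)
        # Weighted average: well-exposed samples dominate the result.
        fused.append(sum(w * v for w, v in zip(weights, values)) / total)
    return fused


if __name__ == "__main__":
    dark = [0.1, 0.0]
    bright = [0.4, 0.6]
    print(fuse_exposures([dark, bright]))
```

The work in this loop is proportional to the number of pixels, so halving both width and height cuts it to roughly a quarter. Real fusion code adds things like multi-scale blending, which this sketch leaves out.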

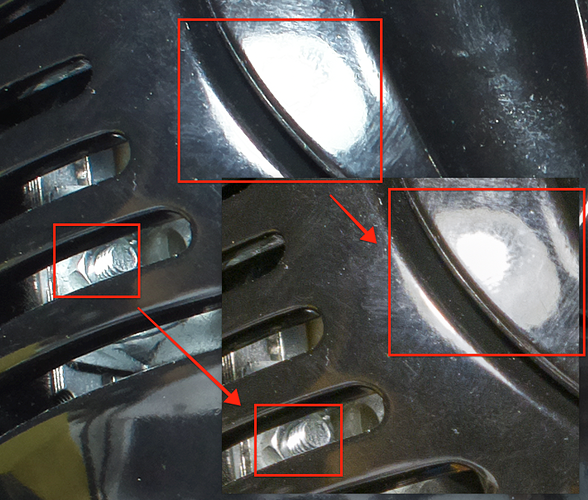

Here’s an example of an HQCam capture:

It is a single frame from a Kodachrome movie, recorded in the 80s, which I got from eBay for testing purposes. The lower central image is the final output image; the surrounding images are the raw captures from the HQCam, spanning a range of 5 exposure values. Clearly, at least this huge range is needed to capture all the information available in the film frame.

The different exposures were not realized by switching the HQCam to different exposure values, as it turned out that this takes far too long. This behaviour was already noted with the older Raspberry Pi cameras, but the new HQCam seems to be even slower to respond to changes in exposure time. So instead of changing the exposure time of the camera, the LED light source was switched rapidly between different light settings to capture the different exposures. The HQCam was run at a constant exposure time of 1/32 sec.
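Since one exposure value corresponds to a factor of two in light, the LED settings for such a bracket can be computed rather than guessed. Here is a minimal sketch, assuming five captures evenly spaced over 5 EV and an LED whose light output scales linearly with the level you set. Both are assumptions for illustration; a real LED driver usually needs calibration, and the function name is made up.

```python
def led_levels(n_exposures=5, ev_range=5.0, max_level=1.0):
    """Relative LED intensities, brightest first, evenly spaced in EV."""
    if n_exposures < 2:
        raise ValueError("need at least two exposures")
    step = ev_range / (n_exposures - 1)  # EV distance between neighbours
    # Each EV step halves the light, so level = max / 2**(EV offset).
    return [max_level / (2.0 ** (step * i)) for i in range(n_exposures)]


if __name__ == "__main__":
    for level in led_levels():
        print(f"{level:.4f}")
```

With these defaults the dimmest setting is 1/32 of the brightest, while the camera exposure time stays fixed throughout.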
