This was definitely one of the most exciting reads for me here, yet. Thank you so much for all the detail. I’ll keep you posted about my results…

Super interesting, definitely something to learn from that is very helpful!

Do I read this correctly, that using multiple exposures and the exposure fusion algorithm allows you to essentially set the exposure once (say on the first frame) and then leave it, giving “good enough” results even if later scenes are not exposed quite the same?

I have actually been bouncing around the question of what to do about exposure (automatic vs. manually setting it to a fixed one once) for a while now. Never quite got around to it yet, though, because I’m still figuring out some of the mechanics on my build.

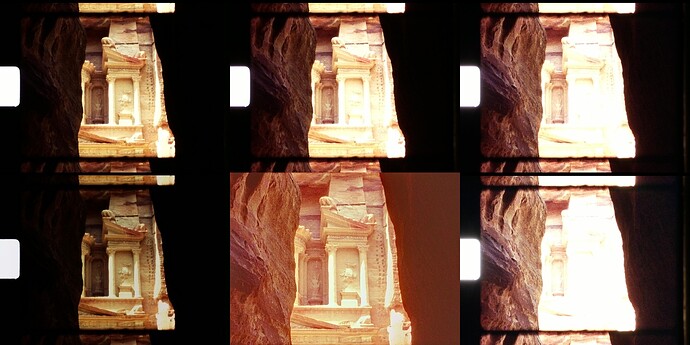

Yes, that is basically the case - of course, within limits. Let me give four further examples to illustrate that. The captures below are from the same run as the example above, with all settings unchanged. Here’s the first, rather extreme example:

Again, the center image in the lower row is the output result of the processing pipeline, slightly color-corrected and cropped with respect to the input images. As one can see, the central region of the film frame is most faithfully recorded in the -5EV image which is the leftmost thumbnail on the lower row. The same region is totally burned out in the +0EV image which is the right one in the lower row.

Let’s focus a little bit more on this central region - here are areas near the columns which were grossly overexposed already during filming. There is no structure visible here; the Kodachrome film stock was pushed to its limits by the autoexposure of the camera. There is, however, still color information (yellow color) available here. You can see this if you compare these overexposed areas with the sprocket area of the -5EV capture. Here, the full white of the light source shines through. If you use a color picker you will see that in the sprocket area, we have about 92% of maximal RGB values. That is actually the exposure reference point for the -5EV capture and all the other (-4EV to +0EV) captures which are referenced to this one.

In any case, the minimal image information available in the central region of the frame (which is just some structureless yellow color) is detected by the exposure fusion and transferred to the output picture.

Turning now to the right side of the frame, you notice a really dark area. Here, image structure can be seen only in the -1EV (top-right) and +0EV (bottom-right) captures. However, the little structure which is there shows up in the output image as well, albeit with a rather low contrast. Granted, you might get a slightly better result by manual tuning, but for me, the automatically calculated result is usable (and less work).

Remember that the algorithm has to pack the wide contrast range of this image convincingly into the limited 8-bit range used by standard digital video formats.

Another point which is important in this respect is how the algorithm performs when there is actually a low contrast image - will the algorithm in this case just produce an output image with an even lower contrast?

Well, it is actually difficult to find a scene in my test movie which has a low contrast. Here’s the closest example I could find:

Because of the low contrast, the capture with -4EV (top-left) or -5EV (bottom-left) is actually a perfectly valid scan of this frame. You would need only one of these single captures to arrive (with an appropriately chosen mapping) at a nice output image. Some of the other exposures are even worthless, for example the +0EV image (bottom-right), which is mostly burned out in the upper part and can in no way contribute to the output image in this image area.

Now, as this example shows, the output of the exposure fusion turns out to be fine again. There is no noticeable contrast reduction here. This is an intrinsic property of this algorithm.

To complement the above, here’s another example, again with the same unchanged scanning/processing settings:

This example frame was taken indoors with not enough light available, so it is quite underexposed in the original source. Because of that, the -5EV image (bottom-left), which in normally exposed scenes carries most of the image information, is way too dark and more or less useless. However, the +0EV scan (bottom-right) is fine. Because the exposure fusion selects the “most interesting” part of the exposure stack automatically, the output image is fine, again.

(One question I would have at this point, had I read this far: what about fades to black in the film source? The answer is that they work fine.)

Well, Super-8 home movies seldom feature a finely balanced exposure setting; usually, they were shot just with the available light, mostly direct sunlight. So here, in closing, is a typical frame a Super-8 scanner will have to work with: dark shadows mixed with nearly burned out highlights.

Thank you for the great examples!

One question that comes to mind: What happens if the frame you expose for (say the first frame of a roll?) is not exposed correctly? Does this cause problems later down the roll? Or is this not an issue at all in practice?

It is no issue at all with the process described. The important thing is that the whole exposure sequence is set to the light source of the scanner itself. In setting it, I do not care about the frame content at all.

The exposure is set with a measurement of the sprocket area, where there is no film at all. Actually, it was measured once and I have never changed it between scans.

As through the sprocket hole the full intensity of the light source is shining through, even the clearest film stock will have a lower exposure value anywhere else in the frame.
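A minimal sketch of such a sprocket-hole measurement, assuming the frame is an 8-bit grayscale image stored as a list of rows. The function names, the region coordinates and the tolerance are illustrative assumptions, not the actual scanner code; the 92% target is simply the value read off the -5EV capture above.

```python
def sprocket_level(frame, top, left, height, width):
    """Mean 8-bit value inside the sprocket-hole region, as a fraction of 255."""
    total = 0
    for row in frame[top:top + height]:
        total += sum(row[left:left + width])
    return total / (height * width * 255)


def reference_ok(level, target=0.92, tolerance=0.03):
    """True if the bare light source sits close to the chosen reference level."""
    return abs(level - target) <= tolerance


# Tiny synthetic frame: a bright 2x2 "sprocket hole" on a dark background.
frame = [
    [10, 10, 10, 10],
    [10, 235, 235, 10],
    [10, 235, 235, 10],
    [10, 10, 10, 10],
]
level = sprocket_level(frame, top=1, left=1, height=2, width=2)
print(round(level, 3), reference_ok(level))
```

Because the region contains no film at all, the check depends only on the light source and the camera settings, never on the frame content.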

This trick ensures that any structure in the highlights of the film source does not overwhelm the capture device (at least in the -5EV exposure).

Now, any other structure present in the scanned film will be darker, actually much darker. Remember that color-reversal film is exposed to the highlights, not the shadows.

Capturing the shadow details is what the other exposures are for. You need to go into the darkness as much as possible.

The most challenging film stock I encountered so far is Kodachrome 40; here, the 5EV-exposure range is really needed for most of the film frames.

The darkest part of a scan is always the unexposed frame border, and if you use a color picker on one of the examples above, you will notice that the unexposed frame border is slightly above the background in the brightest exposure (bottom-right).

In fact, my illumination can not go too much over the 5EV range, for various reasons. Ideally, I would like to push it a little bit further than currently possible. This has to do with the gain curves of the sensor.

Let me elaborate this a little bit.

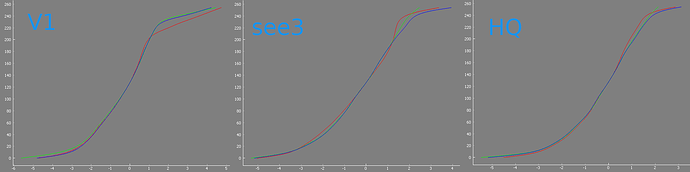

I do not have any gain curves measured for the HQCam (yet), so let me use the gain curves of the Raspberry Pi v1-camera:

The y-axis shows different EVs, the x-axis the range of 8bpp pixel values, from 0x00 (0) to 0xFF (255), that these EVs are mapped to. There is a more or less linear segment from 6EV to 8EV approximately. That is the “beauty range” of this camera.

Clearly, there is another range above 8EV, mapping to values 230 or higher, which is different from the main transfer range (from about 20-230). This is the range where the camera compresses the highlights.

Ideally, you would set the exposure in a scanner in such a way that the bright sprocket area (the light source itself) is mapped to 230 or less, not using the range where the highlights are compressed. If you just take a single exposure per frame, you actually can not afford this, especially because at the other, darker end of the range, shadows are compressed as well (in this example in the range of 6EV to 3EV).

The exposure fusion algorithm in fact ignores for each intermediate exposure the compressed regions in the highlights and in the shadows. The compressed highlight region is only used in the darkest exposure, and the compressed shadow region only in the brightest exposure.
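To make the idea of a usable middle range concrete, here is a small sketch that counts how many pixels of each exposure fall between the two compression regions. The limits 20 and 230 are the approximate values quoted above for the v1 camera; the pixel samples and the function name are made up for illustration.

```python
LINEAR_LOW, LINEAR_HIGH = 20, 230  # approximate limits read off the v1 curve


def usable_fraction(pixels, low=LINEAR_LOW, high=LINEAR_HIGH):
    """Fraction of 8-bit pixel values that fall in the roughly linear range."""
    usable = sum(1 for v in pixels if low <= v <= high)
    return usable / len(pixels)


# Hypothetical pixel samples from a dark, a middle and a bright exposure.
stack = {
    "-5EV": [2, 5, 12, 40, 90, 228],
    "-2EV": [15, 30, 70, 150, 210, 250],
    "+0EV": [60, 120, 200, 245, 255, 255],
}
for name, pixels in stack.items():
    print(name, round(usable_fraction(pixels), 2))
```

A hard threshold like this is only a diagnostic; exposure fusion itself uses smooth weights rather than a cut-off.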

I am setting the exposure reference in the darkest frame a little bit higher than 230; experiments have shown that this does not affect the visual quality of the scan at all and I gain a little bit more structure in the dark shadows this way. But I would love to have some slight additional head room here, say 0.5 EV or so. Can’t realize that with my light source, as the DACs have only 12 bit resolution and the LED color changes at different illumination levels pose an additional challenge as well.
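A rough back-of-the-envelope sketch of why 12 bits get tight. It assumes that the exposure steps are realized by dimming the light source and that light output is proportional to the DAC code; both are idealizations for illustration, not a description of the actual driver.

```python
DAC_BITS = 12
FULL_SCALE = 2 ** DAC_BITS - 1  # 4095 for a 12-bit DAC


def dac_code(ev_below_max, full_scale=FULL_SCALE):
    """DAC code for a light level `ev_below_max` stops under full output.

    Assumes light output is proportional to the DAC code (an idealization).
    """
    return round(full_scale / 2 ** ev_below_max)


for ev in (0, 1, 2, 3, 4, 5, 5.5):
    code = dac_code(ev)
    # Relative size of one DAC step at this level, i.e. the finest
    # brightness adjustment that is still possible.
    step_percent = 100 / code
    print(f"{ev:>4} EV below max: code {code:4d}, one step = {step_percent:.2f}%")
```

Under these assumptions only about 128 codes remain at 5 EV below full output, so a single DAC step already changes the brightness by nearly 0.8%, and another half stop leaves even fewer codes to work with.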

I hope the above was not too technical. To summarize: the exposure in my scanner is never set/changed with respect to the film source. It is only set once, with respect to the light source. It is normally not changed from film to film.

ok, out of curiosity, I did a quick rerun of gain-curve estimations for the cameras and source materials I have at hand. Nothing precise, but interesting to look at, I think.

Here are recovered film response curves (using a similar algorithm as described in the 1997-paper by Debevec & Malik, “Recovering High Dynamic Range Radiance Maps from Photographs”) for the 3 camera sensors I have access to:

(I have swapped here the axes with respect to a previous post so that the film response curves have a more familiar shape. “V1” is the response curve of the Raspberry Pi v1-camera, “see3” of the See3CAM_CU135 camera, and “HQ” the new Raspberry Pi HQCam.)

The x-axis of each graph shows the different exposure values (or scene radiances). The y-axis shows the full dynamic range an 8 bits per pixel format can work with (jpeg, most movie formats or a usual display, from 0x00 (0) to 0xFF (255)).
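For reference, the basic relation behind the Debevec & Malik approach can be written as follows. This is the formulation from the cited paper, not necessarily the exact variant used for the curves here. $Z_{ij}$ is the 8-bit value of pixel $i$ in exposure $j$, $E_i$ the scene radiance at that pixel, $\Delta t_j$ the exposure time, and $g$ the logarithm of the inverse response curve:

```latex
g(Z_{ij}) = \ln E_i + \ln \Delta t_j
```

The curve $g$ is then recovered by a least-squares fit over many pixels and exposures, together with a smoothness constraint on $g$.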

The S-shape of these curves is the practical standard for mapping the huge dynamic range found in natural scenes onto the limited dynamic range available for display. If you look at any of the curves, you find an intermediate, more or less linear section, flanked by flatter sections in the shadows (low EVs) and highlights, which compress the information.

Actually, the problem of huge dynamic range was present right from the start of analog photography. In those days, the chemical process of developing film stock led by itself to an S-shaped curve, and the expertise of the photographer consisted in choosing exposure, development times etc. to optimize this transfer function for a given scene. Ansel Adams was very influential in this respect (and put some science into the subject) by developing his zone system.

In electronic times, this whole process is handled by the image processing pipeline which transfers the raw sensor data (digitized to 10 to 14 bits) into the 8 bits available in standard image/video formats and displays. If you work directly with raw files, you have to come up with a suitable transfer function on your own (“developing the raw”); if you work with jpegs or similar image formats as output, the transfer function is fixed by the camera manufacturer.

The exposure fusion algorithm I described above works with 8 bit data (“jpegs”), but intrinsically, mostly data from the middle section of the film response curve is used. This is because there are appropriate weighting functions built into the process which drop the information from pixels that are too dark or too bright. For such outlying pixels, the algorithm simply takes information from an exposure which is better exposed.

Only in the darkest exposure of the sequence, there is simply no additional data available for the brightest highlights. Here, the data has to come from that darkest exposure.
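The weighting described above can be sketched per pixel as follows. This assumes a Gaussian "well-exposedness" weight centered on mid-gray with sigma = 0.2, as in the exposure fusion paper by Mertens et al.; the weights actually used in my pipeline are not spelled out here, and the function names are illustrative. The sketch also leaves out the contrast and saturation measures and the multi-resolution blending of the full algorithm.

```python
import math


def well_exposedness(v, sigma=0.2):
    """Gaussian weight that favors mid-range values; v is 8-bit (0..255)."""
    x = v / 255.0
    return math.exp(-((x - 0.5) ** 2) / (2 * sigma ** 2))


def fuse_pixel(values, eps=1e-12):
    """Weighted average of one pixel across the exposure stack."""
    weights = [well_exposedness(v) + eps for v in values]
    return sum(w * v for w, v in zip(weights, values)) / sum(weights)


# One pixel seen in three exposures: nearly black, mid-gray, burned out.
print(round(fuse_pixel([3, 120, 255]), 1))
```

Because the weights are normalized per pixel, a pixel that is bright in every exposure still gets a value: it is simply dominated by the exposure that is least badly exposed, which for the brightest highlights is the darkest capture.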

That is why in my scanner the darkest exposure is the reference for all the other exposure settings.

In fact, I do not care about the film itself; I only require that in the darkest exposure, the light source itself is mapped into a suitable digital range.

Looking at the response curves, one can see that the curves flatten out at around an 8-bit value of 240 or so. As I do not want the highlights of the film frame to be compressed, that is the value I am aiming for with the full illumination intensity. As anything in the film frame will certainly be darker, I can be sure that I am using only the linear section of my transfer curve, as intended.

… continuing the testing of the Raspberry Pi HQCam further.

Here’s some information on the behaviour of the sensor + processing pipeline with respect to the quality setting.

The sensor used in the HQCam is primarily designed for video applications, not actually for taking still images. That’s probably why it has more video modes available (4) than image modes (2).

Well, that matches my use case, namely capturing massive amounts of data which are only later combined into an image representing the scan of a film frame; I use as camera output neither raw, nor any RGB-format, but straight video.

The camera outputs video as compressed MJPEG frames. There is a quality setting available in the JPEG/MJPEG-format, and it might be interesting to see how the camera performs with various settings.

Here’s a small cutout of a 3456 x 1944 px frame at a quality setting of 60, which I will be using as reference:

It was taken with the Raspberry Pi standard 16mm lens. This lens performs quite well here (f-stop was at 5.6 in the experiment). By the way, forget about the alternative 6mm lens for scanning purposes - the quality of that wide-angle lens is inferior.

Let’s compare the capture above (q=60) to one with a quality reduced to q=5:

Clearly, there are JPEG artifacts showing up here, but it is still an amazingly good result. The file size of such a single frame with q=5 is so low that my image capture pipeline can run the 3456 x 1944 px resolution at the maximum speed the sensor chip is capable of: 10 fps. For comparison, with the q=60 setting, the frame rate achievable is currently about 2 fps. That is mainly caused by my decision to use a client-server setup which transfers the images via LAN - storing the images directly on the Raspberry Pi might be a better approach here. I am currently not doing this, as the Raspberry Pi tends to get quite hot during capturing: 81°C CPU-temperature is easily reached if the Raspberry is not actively cooled.

Well, the Raspberry Pi used in the film scanner is actively cooled and always stays below 50°C.

The effect of the quality setting can be seen more easily by looking at the pure color information of the frames. Here’s this color signal extracted out of the q=5 frame:

One clearly sees now the beloved blocking artifacts of low-quality JPEG compression. Raising the q-factor to 10, the results improve:

One step further, at q=20, the color information

improves further. This is especially noticeable in the top-right corner of the image. But already at that low q-factor, a usable frame is obtained. Comparing with the q=60 image,

one sees especially in the dark area of the trees some further improvements. However, returning to the real image data, the difference between a q-factor of 20 (left image) and 60 (right image) is visually rather low:

The JPEG-encoder of the new HQCam seems to perform much better than the encoders of the old v1- and v2-cameras; here, such low quality settings did not perform well at all. I am currently using a q=10 setting (7 fps) for focus adjustments, and a setting of q=60 for scanning. The above posted exposure fusion examples were actually captured with a low quality setting of 10.
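For anyone who wants to look at the "pure color information" of their own captures, here is one possible way to isolate it. This is an assumption about the method, not necessarily how the images above were produced: each RGB pixel is converted to YCbCr with the JFIF (full-range BT.601) formulas, and the luma is replaced by a constant so that only Cb and Cr vary. The function names are illustrative.

```python
def rgb_to_ycbcr(r, g, b):
    """JFIF (full-range BT.601) conversion used by baseline JPEG."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr


def chroma_only(pixels, luma=128):
    """Replace the luma of each RGB pixel by a constant, keep Cb/Cr."""
    return [(luma, cb, cr) for _, cb, cr in (rgb_to_ycbcr(*p) for p in pixels)]


# Neutral gray has no color signal: Cb and Cr sit at the 128 midpoint.
print(chroma_only([(90, 90, 90), (200, 60, 40)]))
```

JPEG encoders typically store the chroma planes at reduced resolution and quantize them more coarsely than the luma, which is why low quality settings show up there first.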

Finally, here’s a full frame of the scene, with 3456 x 1944 px resolution and a q-setting of 99 (close to the max), for reference (actually, the original resolution was lost - the following image is only 1728x972 px):

… to complement my above experiments with MJPEG-output of the HQCam:

The "Strolls with my Dog" blog has two excellent and detailed investigations concerning raw image capture with the HQCam:

and

Very informative for the technically minded, right to the point - actually, so much that the blog-author got banned on the Raspberry Pi Camera forum for asking too detailed questions…

Hi there

Rare poster here, but eager reader

And please bear with me if I ask stupid questions.

Just was wondering if any of your knowledge might be applicable the other way round, by inserting an image sensor (raspi hq cam or arducam) behind a super 8 camera film gate and have the raspberry take an image every time the shutter opens, i.e. when dark changes to light.

Then write it to a card and get an image sequence.

Would love converting a super 8 cam to digital while retaining the original triggering method.

So just using a video camera would not do the trick, the rolling shutter usually gets really nasty.

Arducam has a global shutter color cam, which could avoid this. Still wondering, if the hq would be able to capture without the rolling shutter effect if it was single-image-triggered by the super8 cam’s shutter at 12 - 24 FPS? After all, the specs for it sensitivity- and resolution-wise speak in favor of the hq cam.

Sorry if this is a little vague, ask me anything you like if you need to.

Also if I’m in the wrong forum…

You should not pair a mechanical shutter with an electronic sensor if it is not necessary. If you do so, you definitely need to use a sensor with a global shutter. With a rolling shutter, you will get funny results, but probably not what you are expecting. The Raspi HQ camera is, like any original Raspberry Pi sensor, a rolling shutter type and will not work with a mechanical shutter in front of the sensor.

Also probably important: you can not cancel the rolling shutter effect by adding a mechanical shutter. The rolling shutter effect is baked into the way the sensor functions, no chance to change that.

If your goal is to convert an old Super-8 camera into an electronic one, there have been various attempts to do so. Look for an example here. Google might bring up a few newer attempts for such a conversion.

Thanks for taking the time to respond

Yeah, of course I’ve combed the net. There’s a lot of vapor ware, like the good old Nolab cartridge which still shows up regularly in various blogs. Then there are numerous contraptions with external gear and processors, stuff sticking out of the cam as in your example, and people just gutting a camera and shooting through the hole where the lens was… not that I dismiss these things, I also think the builders went through a lot of tinkering and mostly did a good job. There’s one promising digital super8 cartridge by some guys in the Netherlands, though that one too relies on external stuff and has kinda stalled for some time now.

I’m aware of the rolling shutter of the HQ cam, but thought capturing single images activated by the light intensity of the opening shutter could avoid that.

On the other hand there’s an Arducam with 2MP that takes color imagery and has a global shutter. The sensor is also pretty close to a super8 frame size wise. What I don’t know is if a raspberry pi would be fast enough to react to the light, shoot an image, write it to memory and be ready for the next one in time. Using the shutter light as a trigger would be convenient for being able to use a selectable FPS from the camera. Plus it would be closer to the roots of film making. Just like your scans. I also thought about using the claw mechanism to trigger, but I guess that has to be removed in order to get the sensor on the focal plane.

My main goal, as probably that of a bunch of other people, is to have a device I can drop into any stock super8 and shoot video with that good old tactile feeling and handling I love about those cameras.

Plus I like the fact that there are many cameras available that have great optics at a great price.

That is simply not the case. On a sensor with rolling shutter, different horizontal lines of the sensor are exposed at different times. Therefore, really fast moving objects get distorted. This is just how rolling shutter sensors work, and there is no workaround for this.
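A simplified model of that distortion, with made-up numbers: each row starts its exposure a little later than the one above it, so a vertical edge moving sideways ends up slanted. The readout time and the subject speed below are hypothetical values for illustration, not HQ camera specifications.

```python
def row_start_times(n_rows, readout_time_s):
    """Start-of-exposure time of each sensor row, top to bottom."""
    line_time = readout_time_s / n_rows
    return [row * line_time for row in range(n_rows)]


def horizontal_skew_px(n_rows, readout_time_s, speed_px_per_s):
    """Sideways offset between the first and last row of a moving vertical edge."""
    times = row_start_times(n_rows, readout_time_s)
    return speed_px_per_s * (times[-1] - times[0])


# Hypothetical numbers: 1000 rows read out in 20 ms, edge moving at 2000 px/s.
print(horizontal_skew_px(1000, 0.020, 2000))
```

In this model the skew grows with the readout time of the sensor and the speed of the subject; a global shutter sensor corresponds to a readout time of zero.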

Instead of ripping out all the wonderful mechanics of an old Super-8 camera to create something in disguise, may I suggest looking at a more modern approach to a cinematic Raspberry Pi camera?

Just followed your link, that’s quite some impressive background info you put there!

See, that camera may be great and feature rich, but to me 1500x1080 would be ok, also could live with a lower resolution. And definitely with a more compact case, like e.g. a stock super8 one

In case you didn’t notice, “ripping out all the wonderful mechanics of an old Super-8 camera to create something in disguise” isn’t exactly what I want. As I wrote, I want to keep as much of the original camera intact as possible. Thus the need to trigger exposure via the light level variation caused by the original shutter. Removing the film gate to better get the chip at the focal plane, which can be reinserted again (at least with some Nizos I know of) and popping in a digital replacement instead of a film roll doesn’t seem too cruel to me.

And if it would help those cameras to get used again instead of lurking in some boxes in collections not doing what they were intended for: To me that would be a great plus.

Just my opinion.

Ah, I now understand your plan slightly better. Well, first: the difference between global and rolling shutter is barely noticeable for usual subjects. Your mileage will vary, depending on your intention. Sensors with global shutter are more complex and thus more expensive than rolling shutter sensors with otherwise similar specifications.

While a lot of available sensors have sizes similar to the Super-8 format, the surrounding support circuits need noticeably more space. Either you will need to rip out the complete film gate, or you need to design a new circuit board for the sensor with an appropriate footprint.

Not all sensors/cameras feature an external trigger input. You would need something like that to make your sensor run in sync with the mechanical trigger. The Raspberry HQ camera actually has one, but it is at the moment largely undocumented, runs at a rather low voltage and needs additional soldering to be usable. Some Arducam clones have at least solder holes instead of small pads, which make operations like this slightly easier. One of the first fixed topics on the Raspberry Pi camera forum discusses the external sync.

Actually, I think it would be possible to design a system which would fit completely into an old Super-8 cassette, so no modifications would be needed to the camera. You would need a transfer optic to get the sensor away from the film gate and use one of the smaller form factor Raspberry Pi computing units. You could actually get the frame trigger directly from the film transport pin with an appropriate sensor. A global shutter sensor with external trigger would be essential. It would be quite a challenging project, with optical design challenges, designing and handling special surface-mounted PCBs and quite some software challenges…

Wow! I love this setup;

Can I ask where you got it from (the models to 3D print) or if you’d be willing to share them (if you did them yourself)?

Hi @oldSkool - welcome to the Kinograph forums!

I do all my designs by myself, from optics over software to 3d-models. However, I share only what I consider to be a somewhat workable solution. So I won’t release (at this point in time) the design files of my xyz-slider, as I do not consider this to be a workable design. But, at the bottom of this specific post, you can find at least the .stl-file for the camera-body of the Raspberry Pi HQ cam, in combination with a Schneider Componon-S.

As far as I remember, I did post two core designs of my scanner, namely the integrating sphere and the 3D-printed film gate. I was able to find the film gate (including stl-files). If you scroll down a little bit in this thread, you will also find links to the stl-files for the integrating sphere.

I was preparing a similar post! So there are very important changes in the camera software with the bullseye release. This release installs a new stack based on libcamera, along with applications equivalent to raspistill, raspivid,… The old applications based on MMAL, including the Python Picamera library, are no longer available (but it might be possible to reactivate them?). A new officially supported python library is said to be under development. So for Picamera users it is absolutely necessary to stay with Buster until all this stabilizes.

– not only that, the libcamera-stack is far from mature. It’s in a state of flux. And of course: no picamera-lib replacement, only an announcement by the Raspberry Pi foundation that a picamera2-lib is “in works” - by the Raspberry Pi foundation itself! …which, from my experience, means that it might probably be ready for rudimentary use by the end of next year. Also, since computations are moved from the GPU to the CPU, expect a drop in frame rate.

Summarizing: at this point in time, I would steer clear of the libcamera-stack. Easiest option: stay with “Buster”.

Looks like there is a preview Python library for bullseye.

As nice as it is to see that there is progress with respect to making the new libcamera available via python, the picamera2 features a totally changed API. This is probably unavoidable because the libcamera stack has a different design philosophy than the old Broadcom stack. But this means that programs based on the old picamera lib have to be redesigned from the ground up. Also, the APIs of both libcamera and picamera2 are expected to change - so there is actually nothing in this announcement which would make me consider upgrading to the new software.