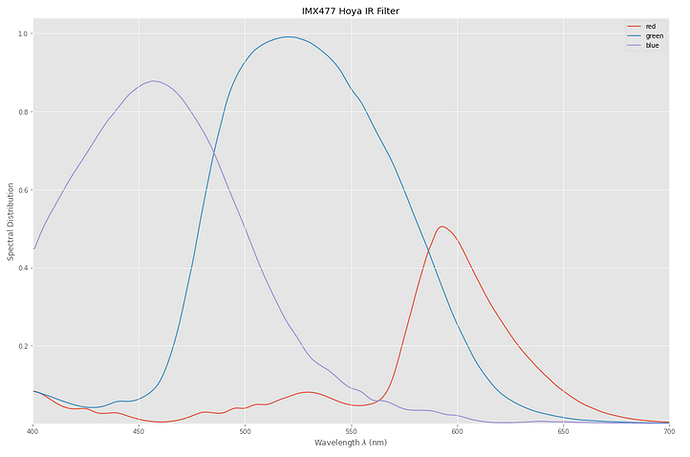

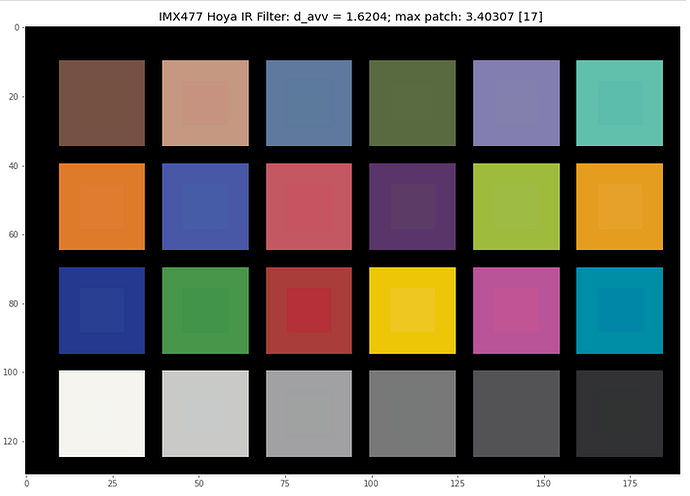

While testing the new Raspberry Pi HQCam, I stumbled upon some odd behaviour. When imaging an average scene with a lot of gray clouds with the HQCam and the standard 16 mm lens, the auto white balance algorithm usually settles on a red gain of about 3.3 and a blue gain of about 1.5.

While the difference between the two gains is striking, I did a few film scans with that “normal” setting and obtained quite satisfactory results (some examples can be seen in the New HQ Raspberry Pi Camera with C/CS-Mount thread).

For these scans, my LED light source is routinely calibrated before the actual scan so that the empty sprocket area, where the light shines straight through, is imaged by the camera as pure white.
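A minimal sketch of this calibration step, assuming a linear sensor response, fixed camera gains, and an LED source whose red, green and blue channels can be dimmed independently. The function names and sample values are hypothetical: the sketch averages the RGB values of the empty sprocket area and returns the factor by which each LED channel would have to be scaled so that all three channels match green.

```python
def mean_rgb(pixels):
    """Average (r, g, b) over a list of pixel tuples taken from the sprocket area."""
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def led_correction(sprocket_pixels, target=None):
    """Return multiplicative factors for the red, green and blue LED drive levels.

    Hypothetical model: each LED channel only affects the matching camera
    channel, and the camera output scales linearly with LED intensity.
    If no target level is given, the green channel is used as the reference.
    """
    r, g, b = mean_rgb(sprocket_pixels)
    if target is None:
        target = g
    return (target / r, target / g, target / b)


# Hypothetical sample: the sprocket hole currently renders slightly cyan.
sample = [(200, 230, 240), (202, 228, 238), (198, 232, 242)]
print("LED factors (R, G, B):", led_correction(sample))
```

Since the LED spectra also leak into the neighbouring color filters of the sensor, a real calibration would probably repeat this measurement a few times until the factors settle near 1.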

Continuing the test scans, I then came across a scene where the colors started to look odd. Here is a cutout of a single frame showing this:

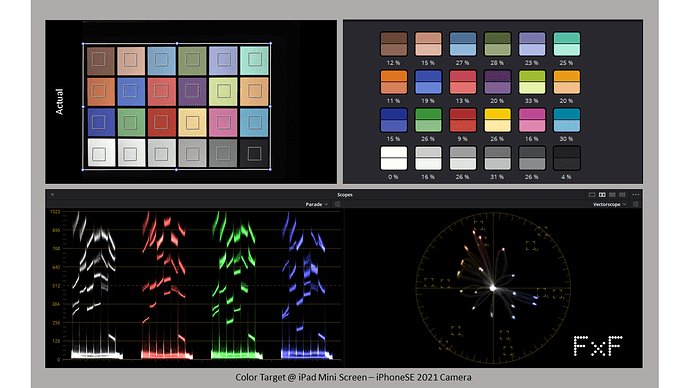

Notice specifically the red color tones, from the reddish strip of the tent (left image border) to the red skirt of the girl and the red shirt of one guy sitting to the right. These colors look weird, and if we compare them to some older scans (with the same technique: setting the whitebalance of the camera to “normal daylight gains” and adjusting the LED-source so that white is white), one can clearly see a difference. Here’s the scan with a Raspberry Pi V1-camera:

And this is the result from a see3Cam_CU135:

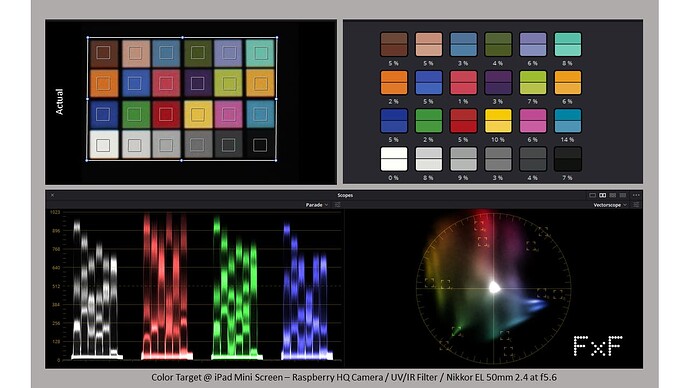

For the last experiment, I set both the red and the blue gain of the Raspi HQCam to one and again adjusted the light source so that it renders as pure white in the camera output. As a result, the LED source shone in a dramatically red light, with little green and blue. Nevertheless, the scan turned out better colorwise, in my opinion.

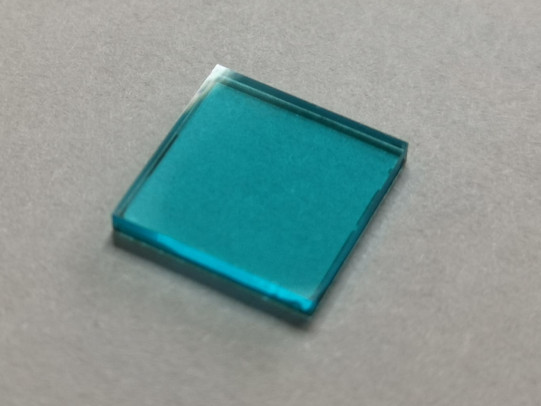

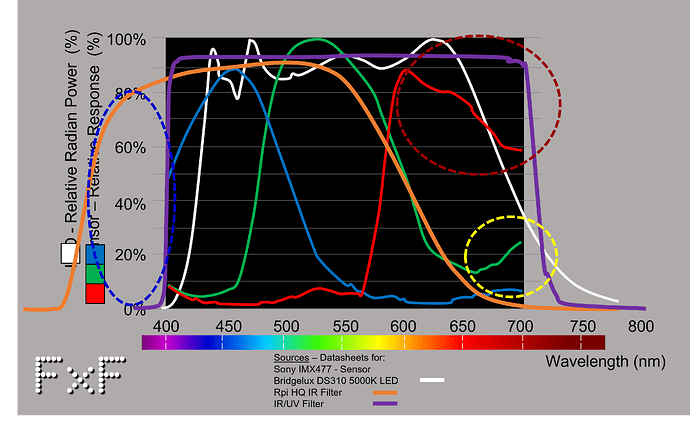
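Why the light source turns so red can be estimated with a rough calculation. It assumes (a simplification that ignores color correction and gamma) that each camera channel outputs gain × LED intensity × sensor sensitivity, with the red and blue gains given relative to green, which stays at 1.0. Keeping the sprocket area white after lowering a gain from its old to its new value then requires scaling that LED channel by old gain / new gain. The helper name is hypothetical.

```python
def led_rebalance(old_gains, new_gains):
    """Return the factor by which each LED channel (red, green, blue) must be
    scaled so the sprocket area still renders white after changing the gains.

    Assumed model: output_c = gain_c * led_c * sensitivity_c. Keeping output_c
    constant means led_c must scale by old_gain_c / new_gain_c.
    """
    return tuple(old / new for old, new in zip(old_gains, new_gains))


# Gains as (red, green, blue); green is the reference and stays at 1.0.
auto_wb = (3.3, 1.0, 1.5)   # typical auto white balance values from above
unity = (1.0, 1.0, 1.0)     # red and blue gains set to one

factors = led_rebalance(auto_wb, unity)
print("LED scale factors (R, G, B):", factors)
print("red/blue ratio:", round(factors[0] / factors[2], 2))
```

Under this model the red LED has to become about 3.3 times, and the blue LED 1.5 times, brighter relative to green, so red ends up roughly 2.2 times stronger than blue, which is in line with the strongly red light observed.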

Looking at the IR-filter of the new Raspberry Pi HQCam, one notices that it has a rather bluish appearance. If it were a good IR-cutoff filter, it would not show any visible color tint.

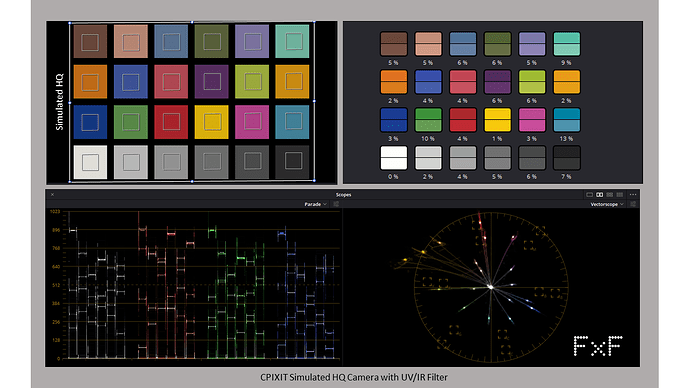

So that might be the reason for the odd relationship between the standard red and blue gain values: the Raspberry Pi HQCam usually operates with a less than perfect IR-cutoff filter, which already filters out part of the visible red tones. The software compensates for this, so the red gain on the HQCam ends up about twice as high as the blue gain (3.3 versus 1.5).

Normally this seems to work fine, but in extreme situations (like lots of slightly different, intense red colors) the processing pipeline that creates the JPEG image is driven into saturation, yielding unrealistic red tones.
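One simplified way to picture such a saturation: after white balance, camera pipelines typically apply a color correction matrix and then clip to the output range. The matrix below is hypothetical (not the HQCam's actual tuning); its rows sum to 1 so that white stays white, and gamma is omitted. Three clearly different intense reds all end up with red clipped at 255 and green clipped at 0, so they become almost indistinguishable in the output.

```python
# Hypothetical color correction matrix (rows sum to 1, so white maps to white).
CCM = [
    [1.6, -0.4, -0.2],
    [-0.3, 1.5, -0.2],
    [-0.1, -0.5, 1.6],
]


def to_8bit(rgb, ccm=CCM):
    """Apply the color matrix to a white-balanced linear RGB triple (0..1),
    clip each channel to 0..1 and quantize to 8 bits (no gamma)."""
    out = []
    for row in ccm:
        value = sum(m * c for m, c in zip(row, rgb))
        out.append(round(min(max(value, 0.0), 1.0) * 255))
    return tuple(out)


# Three hypothetical, clearly different saturated reds (linear, white-balanced).
reds = [(0.90, 0.10, 0.10), (0.80, 0.15, 0.10), (0.95, 0.05, 0.08)]

for red in reds:
    print(red, "->", to_8bit(red))

# White still maps to white.
print((1.0, 1.0, 1.0), "->", to_8bit((1.0, 1.0, 1.0)))
```

In this toy model the differences between the reds survive only in the small blue values, which matches the impression of many distinct reds collapsing into one unreal tone.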

I would be happy about any comments on this, and possibly ideas on how to solve it.