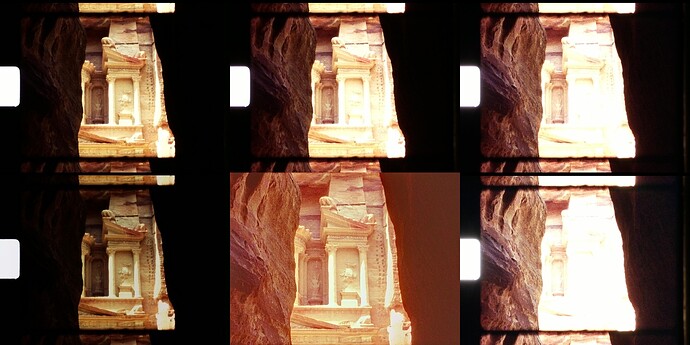

Yes, that is basically the case - of course, within limits. Let me give four further examples to illustrate that. The captures below are from the same run as the example above, with all settings unchanged. Here’s the first, rather extreme example:

Again, the center image in the lower row is the output result of the processing pipeline, slightly color-corrected and cropped with respect to the input images. As one can see, the central region of the film frame is most faithfully recorded in the -5EV image which is the leftmost thumbnail on the lower row. The same region is totally burned out in the +0EV image which is the right one in the lower row.

Let’s focus a little bit more on this central region - here are areas near the columns which were grossly overexposed already during filming. There is no structure visible here; the Kodachrome film stock was pushed to its limits by the autoexposure of the camera. There is however still color information (yellow color) available here. You can see this if you compare these overexposed areas with the sprocket area of the -5EV capture. Here, the full white of the light source shines through. If you use a color picker you will see that in the sprocket area, we have about 92% of the maximal RGB values. That is actually the exposure reference point for the -5EV capture and all the other (-4EV to +0EV) captures, which are referenced to this one.
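To make the exposure referencing a bit more concrete, here is a small sketch of the arithmetic involved. It only assumes the usual convention that one EV step corresponds to a factor of two in exposure; the helper names (`relative_exposure`, `to_8bit`) are made up for illustration and are not part of the actual scanning software.

```python
# Values taken from the text above; the function names are hypothetical.
REFERENCE_EV = -5        # the capture all other captures are referenced to
SPROCKET_LEVEL = 0.92    # fraction of full scale measured in the sprocket area

def relative_exposure(ev, reference_ev=REFERENCE_EV):
    """Exposure factor relative to the reference capture (one EV = factor 2)."""
    return 2.0 ** (ev - reference_ev)

def to_8bit(fraction):
    """Convert a fraction of full scale into an 8-bit code value."""
    return round(fraction * 255)

for ev in range(-5, 1):
    print(f"{ev:+d}EV -> {relative_exposure(ev):4.0f}x the reference exposure")

print("sprocket level as 8-bit value:", to_8bit(SPROCKET_LEVEL))
```

Under that assumption, the +0EV capture receives 32 times the exposure of the -5EV capture, which is why the sprocket area and the brightest parts of the frame are clipped in it.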

In any case, the minimal image information available in the central region of the frame (which is just some structureless yellow color) is detected by the exposure fusion and transferred to the output picture.

Turning now to the right side of the frame, you notice a really dark area. Here, image structure can be seen only in the -1EV (top-right) and +0EV (bottom-right) captures. However, the little structure which is there shows up in the output image as well, albeit with a rather low contrast. Granted, you might get a slightly better result by manual tuning, but for me, the automatically calculated result is usable (and less work).

Remember that the algorithm has to pack the wide contrast range of this image convincingly into the limited 8-bit range used by standard digital video formats.

Another point which is important in this respect is how the algorithm performs when there is actually a low contrast image - will the algorithm in this case just produce an output image with an even lower contrast?

Well, it is actually difficult to find a scene in my test movie which has a low contrast. Here’s the closest example I could find:

Because of the low contrast, the capture with -4EV (top-left) or -5EV (bottom-left) is actually a perfectly valid scan of this frame. You would need only one of these single captures to arrive (with an appropriately chosen mapping) at a nice output image. Some of the other exposures are even worthless, for example the +0EV image (bottom-right), which is mostly burned out in the upper part and can in no way contribute to the output image in this image area.

Now, as this example shows, the output of the exposure fusion turns out to be fine again. There is no noticeable contrast reduction here. This is an intrinsic property of this algorithm.

To complement the above, here’s another example, again with the same unchanged scanning/processing settings:

This example frame was taken indoors with not enough light available, so it is quite underexposed in the original source. Because of that, the -5EV image (bottom-left), which in normally exposed scenes carries most of the image information, is way too dark and more or less useless. However, the +0EV scan (bottom-right) is fine. Because the exposure fusion selects the “most interesting” part of the exposure stack automatically, the output image is fine, again.
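To illustrate how such an automatic selection can work, here is a deliberately simplified sketch. It assumes a weight-based fusion in which each capture is rated per pixel by a "well-exposedness" measure (a Gaussian around mid-grey, with the commonly used width of 0.2). This is not the actual processing pipeline: a full exposure fusion typically also rates contrast and saturation and blends over several resolution levels, all of which is left out here. The pixel values are made up.

```python
import math

def well_exposedness(v, sigma=0.2):
    """Gaussian weight peaking at mid-grey (0.5); v is a value in [0, 1]."""
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))

def fuse_pixel(values):
    """Blend one pixel across the exposure stack using normalized weights."""
    weights = [well_exposedness(v) for v in values]
    total = sum(weights)  # always > 0, since every Gaussian weight is positive
    return sum(w * v for w, v in zip(weights, values)) / total

# Made-up values for one pixel of an underexposed frame,
# ordered from the -5EV capture to the +0EV capture.
stack = [0.01, 0.02, 0.04, 0.08, 0.16, 0.32]

weights = [well_exposedness(v) for v in stack]
normalized = [w / sum(weights) for w in weights]

print("normalized weights:", [round(w, 3) for w in normalized])
print("fused value:", round(fuse_pixel(stack), 3))
```

With these numbers, the nearly black captures get small weights and the brightest capture dominates the blend, which mirrors what happens in the underexposed frame above: the +0EV scan contributes most of the output.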

(One question I would have had after reading this far: what about fades to black in the film source? The answer is that they work fine.)

Well, Super-8 home movies seldom feature a finely balanced exposure setting; usually, they were shot just with the available light, mostly direct sunlight. So here, in closing, is a typical frame a Super-8 scanner will have to work with: dark shadows mixed with nearly burned-out highlights.