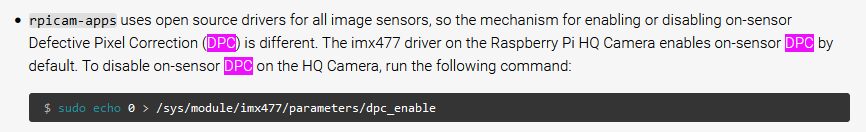

Can someone post an example of what that “digital” noise would look like? Most noise in image sensors is of the analog type. Granted, the “raw” image presented to the user as raw sensor data is nowadays also processed digitally. For example, the HQ sensor (IMX477) features a switchable defect pixel correction (DPC):

There was a time when this feature had four choices to select from, but nowadays you can only switch it on or off.

But I doubt that this is the “digital noise” @verlakasalt and @jankaiser are talking about.

In any case, nowadays basically every sensor chip does some image processing, and the “raw” image is not as raw as one might expect.

Which brings me to this topic:

Well, all .dng files created by the picamera2 software and written to disk are certainly uncompressed. The issue is that the RP guys introduced “visually lossless” compressed raw formats when the RP5 surfaced, and these are the default formats libcamera/picamera2 use when capturing images. So when you request a .dng file from picamera2, something rather odd happens under the hood: the “visually lossless” compressed image is decoded into a full uncompressed image, which is then stored as a .dng raw. I discovered this weird design choice while reading through the picamera2 code, trying to find out why creating a .dng file took about 1 sec on the RP5. As far as I know, it’s not explicitly stated anywhere in the RP documentation. @dgalland confirmed my suspicions in this thread here.

That was some time ago and the picamera2 lib has seen some updates. So I am not sure whether this is still an issue.
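If you want to check whether this still applies to your setup, one option is to inspect the raw stream format picamera2 actually configured (for example via `picam2.camera_configuration()['raw']['format']`). The sketch below assumes that the Pi 5 compressed formats carry `PISP_COMP` in their name (something like `BGGR16_PISP_COMP1`); treat that naming as an assumption to verify against your picamera2 version. The helper name is hypothetical.

```python
def raw_stream_is_compressed(config: dict) -> bool:
    """Return True if the raw stream of a picamera2-style configuration dict
    uses a Pi 5 compressed ("visually lossless") format.

    Assumption: compressed formats contain "PISP_COMP" in their name.
    """
    raw = config.get("raw") or {}
    fmt = str(raw.get("format", ""))
    return "PISP_COMP" in fmt.upper()


# Plain dicts standing in for picam2.camera_configuration():
print(raw_stream_is_compressed({"raw": {"format": "BGGR16_PISP_COMP1"}}))  # True
print(raw_stream_is_compressed({"raw": {"format": "SRGGB12"}}))            # False
```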

In any case: the important point is that you want the frontend of the libcamera software to operate with real, uncompressed raw data. Here’s a quick (partial) snippet of my initialization code which achieves this goal:

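```python
import libcamera
from picamera2 import Picamera2


class ScannerCamera(Picamera2):  # hypothetical name; the original snippet lives inside a larger class
    # image orientation on startup
    hFlip = 0
    vFlip = 0

    # modes for HQ camera (uncompressed raw formats)
    rawModes = [{"size": (1332, 990), "format": "SRGGB10"},
                {"size": (2028, 1080), "format": "SRGGB12"},
                {"size": (2028, 1520), "format": "SRGGB12"},
                {"size": (4056, 3040), "format": "SRGGB12"}]

    # default modes: full-resolution 12-bit raw, ISP noise reduction off
    mode = 3
    noiseMode = 0

    def setup(self):  # hypothetical method name
        print('Creating still configuration')  # stands in for the application's own logStatus() helper
        config = self.create_still_configuration()
        config['buffer_count'] = 4  # instead of the still-configuration default
        config['queue'] = True
        config['raw'] = dict(self.rawModes[self.mode])  # copy, so the mode table stays untouched
        config['main'] = {"size": self.rawModes[self.mode]['size'], 'format': 'RGB888'}
        config['transform'] = libcamera.Transform(hflip=self.hFlip, vflip=self.vFlip)
        config['controls']['NoiseReductionMode'] = self.noiseMode  # 0 = off
        # frame duration limits in microseconds: 100 µs up to 32 s
        config['controls']['FrameDurationLimits'] = (100, 32000000)
        self.configure(config)
```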

As you can see, the startup mode selected is 4056 x 3040 px with format SRGGB12. You can check that this mode is in fact selected by monitoring libcamera's debug messages: one of the last lines of the message block issued right after camera initialization tells you which mode libcamera actually selected. Format selection is a complicated process and might fail, depending on the libcamera version. In my software, the relevant debug messages from libcamera/picamera2 look like this:

```
[0:02:32.329027649] [2233] INFO Camera camera.cpp:1183 configuring streams: (0) 4056x3040-RGB888 (1) 4056x3040-SGRBG16
[0:02:32.329162538] [2264] INFO RPI pisp.cpp:1450 Sensor: /base/axi/pcie@120000/rp1/i2c@88000/imx477@1a - Selected sensor format: 4056x3040-SGRBG12_1X12 - Selected CFE format: 4056x3040-GR16
```

It’s the 4056x3040-SGRBG12_1X12 sensor format which you want.
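Rather than eyeballing the log, the check can be scripted. A minimal sketch, assuming you have captured libcamera's log output as a string (libcamera writes its log to stderr by default); the regular expressions are modelled only on the sample `pisp.cpp` line above and may need adjusting for other libcamera versions. The function name is hypothetical.

```python
import re

# Patterns modelled on the sample pisp.cpp log line ("<width>x<height>-<format>")
SENSOR_RE = re.compile(r"Selected sensor format: (\d+)x(\d+)-(\S+)")
CFE_RE = re.compile(r"Selected CFE format: (\d+)x(\d+)-(\S+)")


def parse_selected_formats(log_text: str) -> dict:
    """Extract the last selected sensor and CFE formats from libcamera log text."""
    result = {}
    for key, pattern in (("sensor", SENSOR_RE), ("cfe", CFE_RE)):
        matches = pattern.findall(log_text)
        if matches:
            width, height, fmt = matches[-1]  # the most recent configuration wins
            result[key] = {"size": (int(width), int(height)), "format": fmt}
    return result


log = ("[0:02:32.329162538] [2264] INFO RPI pisp.cpp:1450 Sensor: "
       "/base/axi/pcie@120000/rp1/i2c@88000/imx477@1a - Selected sensor format: "
       "4056x3040-SGRBG12_1X12 - Selected CFE format: 4056x3040-GR16")
formats = parse_selected_formats(log)
print(formats["sensor"])  # {'size': (4056, 3040), 'format': 'SGRBG12_1X12'}
print(formats["cfe"])     # {'size': (4056, 3040), 'format': 'GR16'}
```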

Note that you cannot switch the DPC on or off from within picamera2; you need to issue a command line as discussed above. Also note that the code snippet above deactivates the ISP's noise reduction through the line

```python
config['controls']['NoiseReductionMode'] = self.noiseMode
```

as self.noiseMode is 0 (off).

Citing myself here…

Well, you probably want to update the link to the script; here’s the correct one.

That script is probably not something you really want to use in your film scanner project. It just gives you an idea of how to achieve something, but it is far from optimal. There is actually a lot to say about this question; I will try to be brief…

First, the example script you are using does not take into account that the OpenCV implementation of exposure fusion can produce intensity values below zero and above one, so its normalization step is wrong. There is a discussion about that here on the forum, but I don't have the link at hand right now.
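To see why this matters: OpenCV's `MergeMertens.process()` returns a floating-point image, and values outside [0, 1] must be handled explicitly before converting to an integer format. Below is a minimal numpy sketch of two options, clipping and min/max rescaling; it is not necessarily the fix settled on in the forum discussion mentioned, and the function names are hypothetical.

```python
import numpy as np


def to_uint16_clipped(fused: np.ndarray) -> np.ndarray:
    """Clip to [0, 1] before scaling: overshoots become pure black/white."""
    return np.round(np.clip(fused, 0.0, 1.0) * 65535).astype(np.uint16)


def to_uint16_rescaled(fused: np.ndarray) -> np.ndarray:
    """Map the actual min..max range onto 0..65535: keeps every value,
    but shifts the overall tonal range of the image."""
    lo, hi = float(fused.min()), float(fused.max())
    if hi <= lo:  # flat image: avoid division by zero
        return np.zeros(fused.shape, dtype=np.uint16)
    return np.round((fused - lo) / (hi - lo) * 65535).astype(np.uint16)


# A fused result with values outside [0, 1], as exposure fusion can produce
fused = np.array([-0.1, 0.0, 0.5, 1.0, 1.2], dtype=np.float32)
print(to_uint16_clipped(fused))
print(to_uint16_rescaled(fused))
```

Clipping preserves the tonal mapping of the in-range values but discards detail in the overshoots; rescaling keeps that detail at the cost of slightly lowering overall contrast.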

Taking a step back, there have been a lot of discussions here on the forum about which way of capturing S8 footage is best. The issue: typical S8 footage requires about 14 bits of dynamic range (if exposure is chosen carefully), which is more than a single raw capture with the HQ sensor can supply. Your Canon, by the way, could do that.
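As a back-of-the-envelope check, counting one stop of dynamic range per bit (an idealization that ignores the sensor's noise floor), the gap between the roughly 14 bits typical S8 footage needs and a 12-bit raw capture is:

$$
\frac{2^{14}}{2^{12}} = \frac{16384}{4096} = 2^{2} = 4 \;\;\Rightarrow\;\; \log_2 4 = 2 \text{ stops}
$$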

That was the reason I initially scanned my footage with a stack of carefully chosen exposures and combined them with my own implementation of the Mertens exposure fusion algorithm (not relying on the OpenCV implementation). My script is a little faster than the OpenCV implementation and yields different results (the implementation differs, and it also aligns the different exposures with subpixel accuracy). Lately, however, I switched to taking just a single raw image of each frame instead of an exposure stack. Again, browse the forum for the pros and cons. In short: for most footage, the 12 bits of the HQ camera are more than sufficient. In extreme cases of high-contrast images, you will keep the shadows dim anyway, which keeps the noise there less visible. If you employ a noise reduction scheme, the advantage of capturing multiple exposures is only minor. On the other hand, you gain substantially in scanning speed. So: I started in the HDR camp and ended up in the single raw capture camp.
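For readers curious about what the Mertens algorithm actually weighs: it scores every pixel of every exposure by contrast, saturation and well-exposedness, then blends the exposures according to those scores. Below is a minimal numpy sketch of just the weight maps, assuming float RGB frames in [0, 1] that are already aligned and the σ = 0.2 used in the original paper. It is neither the script mentioned above nor OpenCV's implementation, and it omits the multiresolution (Laplacian pyramid) blending the full algorithm applies afterwards.

```python
import numpy as np


def mertens_weights(stack, sigma=0.2, eps=1e-12):
    """Normalized Mertens weight maps.

    stack: array of shape (N, H, W, 3), float RGB exposures in [0, 1].
    Returns an array of shape (N, H, W) whose values sum to 1 over N.
    """
    stack = np.asarray(stack, dtype=np.float64)
    gray = stack.mean(axis=3)

    # Contrast: absolute response of a 3x3 Laplacian on each grayscale frame
    p = np.pad(gray, ((0, 0), (1, 1), (1, 1)), mode="edge")
    laplacian = p[:, :-2, 1:-1] + p[:, 2:, 1:-1] + p[:, 1:-1, :-2] + p[:, 1:-1, 2:] - 4 * gray
    contrast = np.abs(laplacian)

    # Saturation: standard deviation across the R, G, B channels
    saturation = stack.std(axis=3)

    # Well-exposedness: Gaussian around 0.5 per channel, multiplied over channels
    well_exposed = np.exp(-((stack - 0.5) ** 2) / (2 * sigma ** 2)).prod(axis=3)

    # Combine (all exponents = 1) and normalize across the exposures
    weights = contrast * saturation * well_exposed + eps
    return weights / weights.sum(axis=0, keepdims=True)


rng = np.random.default_rng(0)
stack = rng.random((3, 4, 5, 3))  # three random 4x5 RGB "exposures"
w = mertens_weights(stack)
print(w.shape)  # (3, 4, 5)
```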

There are some other areas where the exposure fusion algorithm performs better, as it intrinsically performs a local contrast equalisation. That helps with image detail and with bad exposures by the S8 camera used in recording, but I decided that this does not outweigh the improvement in scanning speed (if you closely analyse the example video I posted here, you should notice the difference between exposure fusion and single raw capture).

There is “more behind” it. For starters, a still configuration selects different defaults than a video configuration. If you look at my code snippet above, I select a still configuration and then modify it. Most importantly, I increase the number of buffers to 4, among other things. Only if you increase the buffer count can you be sure that a buffer will always be available when requested. That is actually the default value for video configurations, and it increases your capture speed.

As you can see, the HQ sensor is quite a complicated beast under the hood. Some of the sensor's options are not exposed by the libcamera/RP guys. But some things, for example the whole color science, are accessible to the user for the first time ever. On the other hand, what your Canon does in terms of image improvements is probably unknown to the world, and certainly not at your command. So if you are more pleased with the Canon results, why bother with the HQ sensor?

From my experience, neither the sharpness of the input to your processing pipeline nor the color quality of your initial scan actually matters much, at least not if your main goal is to resurrect historical imagery for today's viewers. You will always heavily postprocess your footage, deviating substantially from the quality/appearance of your initial scan.

Of course, if your goal is to archive fading S8 footage as well as possible (although the most widely used stock, Kodachrome, does not really fade…) so that you can revisit your interpretation of the footage a few years from now (with presumably better post-processing tools available), you might put more weight on achieving the best raw capture possible.