I think it might be interesting for others to get a little bit more detail on the different ways the above clip was recorded and processed.

As already remarked, it was one of my very first Super-8 recordings back in 1976, made with a rebranded Chinon camera on the not-so-great Agfa film stock available at the time.

While I initially started with the Raspberry Pi v1 camera, it soon turned out that the microlenses in front of the camera sensor (a sensor originally designed for mobile phone cameras) did not work well with the Schneider Componon-S 50 mm lens I was using. The result is a color shift: a magenta-tinted center with a greenish tint toward the image edges. This color spill caused by the microlenses is not recoverable. It took me some time and effort to find that out… Upgrading to the RP v2 sensor actually made the color spill worse. At that point, I decided to abandon the idea of basing my scanner on RP sensors and bought a See3CAM_CU135 camera. This is a USB3 camera that delivers an image already “final” for display. The maximum image resolution I could work with, 2880 x 2160 px, was deemed sufficient to capture the full resolution of the Super-8 camera’s medium-range zoom lens.

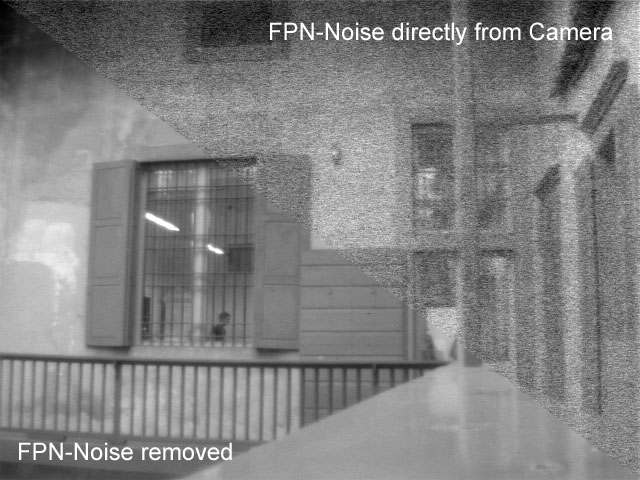

The dynamic range of this camera, however, was nowhere near that of the film stock, as this single capture shows:

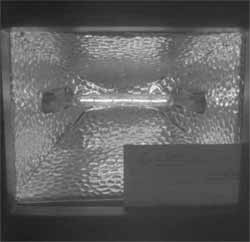

Drawing on my experience with HDR capture, I opted to capture an exposure stack of 5 different exposures for each frame. Initially, I used Debevec’s algorithm to compute a true HDR image from these exposures. Here’s the result (the image spans about 11 EV; for display, it was mapped into an LDR image by linear rescaling, i.e. min/max normalization):

Quite a difference in dynamic range, as hoped. However, the colors are rather washed out. Also, at that time it seemed difficult to map the huge dynamic range of varied footage automatically into the LDR (low dynamic range) image that would finally be video-encoded. So I tried Mertens’ exposure fusion algorithm instead, which directly yields an LDR image.
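For readers unfamiliar with the HDR route, here is a minimal sketch of its two steps: merging an exposure stack into a relative radiance map, then min/max-normalizing that map into an LDR image. It assumes a *linear* sensor response, so the response-curve recovery that is part of Debevec’s method is skipped; the triangular “hat” weight de-emphasizes nearly black and clipped pixels. The function names are hypothetical, and images are plain lists of values in [0, 1]. In practice, OpenCV provides full implementations (`createCalibrateDebevec`, `createMergeDebevec`).

```python
import math


def hat_weight(v):
    """Triangular weight: 1.0 at mid-gray (0.5), 0.0 at pure black and at clipping (1.0)."""
    return 1.0 - abs(2.0 * v - 1.0)


def merge_hdr(stack, exposure_times):
    """Merge an exposure stack into a relative radiance map.

    stack: list of images, each a flat list of pixel values in [0, 1].
    exposure_times: relative exposure time of each image, same order as stack.
    Assumes a LINEAR sensor response (value = radiance * time, until clipping),
    so no response curve is estimated.
    """
    t_min = min(exposure_times)
    shortest = stack[exposure_times.index(t_min)]
    radiance = []
    for i in range(len(stack[0])):
        num = den = 0.0
        for image, t in zip(stack, exposure_times):
            w = hat_weight(image[i])
            num += w * image[i] / t  # this exposure's radiance estimate, weighted
            den += w
        if den > 0.0:
            radiance.append(num / den)
        else:
            # Every exposure is black or clipped: fall back to the shortest one.
            radiance.append(shortest[i] / t_min)
    return radiance


def minmax_normalize(values):
    """Linearly rescale values to [0, 1] for display (min/max normalization)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def dynamic_range_ev(values):
    """Span in EV (stops) between the darkest non-zero and the brightest value."""
    positive = [v for v in values if v > 0]
    return math.log2(max(positive) / min(positive))


# Two exposures two stops apart; the brightest pixel clips in the long exposure.
stack = [[0.05, 0.2, 0.5], [0.2, 0.8, 1.0]]
radiance = merge_hdr(stack, [1.0, 4.0])  # approximately [0.05, 0.2, 0.5]
display = minmax_normalize(radiance)     # approximately [0.0, 0.333, 1.0]
```

Min/max normalization is the simplest possible tone mapping; it preserves relative intensities, which also illustrates why the result can look flat compared with an exposure-fused image.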

Running exposure fusion results in the following image:

Two things stand out: the colors are more vivid, and the overall impression of sharpness is slightly better. Exposure fusion increases local contrast. Sadly, this applies to the grain of this film stock as well: it is noticeably stronger.
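Exposure fusion (Mertens, Kautz and Van Reeth) skips the radiance map entirely: each exposure gets a per-pixel weight built from contrast, saturation and well-exposedness, and the output is the normalized weighted blend of the exposures. The sketch below is a simplified, single-scale version (the published method blends with Laplacian pyramids to avoid seams and halos) working on tiny RGB images stored as nested lists; the function names are hypothetical. Note the contrast term, an absolute Laplacian response: it favors exposures with strong local detail, which plausibly includes grain. OpenCV ships a full implementation as `createMergeMertens`.

```python
import math


def well_exposedness(v, sigma=0.2):
    """Gaussian weight favoring values near mid-gray (0.5)."""
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))


def weight_map(img):
    """Per-pixel weight = contrast * saturation * well-exposedness.

    img: list of rows, each row a list of (r, g, b) tuples in [0, 1].
    """
    h, w = len(img), len(img[0])
    gray = [[sum(px) / 3.0 for px in row] for row in img]
    weights = []
    for y in range(h):
        row_w = []
        for x in range(w):
            # Contrast: absolute 4-neighbour Laplacian of the gray image,
            # clamping coordinates at the borders.
            lap = (gray[max(y - 1, 0)][x] + gray[min(y + 1, h - 1)][x]
                   + gray[y][max(x - 1, 0)] + gray[y][min(x + 1, w - 1)]
                   - 4 * gray[y][x])
            r, g, b = img[y][x]
            mu = (r + g + b) / 3.0
            # Saturation: standard deviation across the R, G, B channels.
            sat = math.sqrt(((r - mu) ** 2 + (g - mu) ** 2 + (b - mu) ** 2) / 3.0)
            # Well-exposedness: product of the per-channel Gaussian weights.
            expo = well_exposedness(r) * well_exposedness(g) * well_exposedness(b)
            row_w.append(abs(lap) * sat * expo)
        weights.append(row_w)
    return weights


def fuse(images, eps=1e-12):
    """Single-scale fusion: per-pixel weighted average with normalized weights."""
    maps = [weight_map(img) for img in images]
    h, w = len(images[0]), len(images[0][0])
    fused = []
    for y in range(h):
        row = []
        for x in range(w):
            ws = [m[y][x] + eps for m in maps]  # eps avoids division by zero
            total = sum(ws)
            row.append(tuple(
                sum(wk * img[y][x][ch] for wk, img in zip(ws, images)) / total
                for ch in range(3)))
        fused.append(row)
    return fused


# A textured, well-exposed exposure and a fully clipped one:
good = [[(0.3, 0.4, 0.5), (0.5, 0.6, 0.7), (0.3, 0.4, 0.5)]]
clipped = [[(1.0, 1.0, 1.0)] * 3]
result = fuse([good, clipped])  # essentially equal to `good`
```

The clipped exposure has zero contrast and zero saturation, so its weight vanishes and the output is taken almost entirely from the well-exposed frame, without ever building an HDR image.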

Anyway, exposure-fused images like this one were the data used to create the 2020 version of the film shown above. Pushing the color saturation a little further, in an attempt to come closer to the impression the film would make when actually projected with a Super-8 projector, enhanced the film grain even more. For my taste, it did so to the point where the dancing grain covers up much of the fine image detail I would like to show my audience.
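Why a saturation boost makes grain worse can be seen with a toy operator: scaling saturation multiplies each pixel’s deviation from its own gray level, so the small color fluctuations introduced by grain are multiplied as well. This is a minimal sketch assuming a simple linear saturation operator around the channel mean, not the specific control of any particular grading tool; the function names and pixel values are hypothetical.

```python
def boost_saturation(rgb, factor):
    """Scale each channel's deviation from the pixel's channel mean by `factor`.

    factor > 1 increases saturation; results are clipped to [0, 1].
    The channel mean (a crude gray level) is preserved unless clipping occurs.
    """
    gray = sum(rgb) / 3.0
    return tuple(min(1.0, max(0.0, gray + factor * (c - gray))) for c in rgb)


def chroma_difference(p, q):
    """Largest per-channel difference between two pixels once their gray levels are removed."""
    gp, gq = sum(p) / 3.0, sum(q) / 3.0
    return max(abs((cp - gp) - (cq - gq)) for cp, cq in zip(p, q))


# Two neighbouring pixels of a uniform area that differ only by grain.
p, q = (0.50, 0.55, 0.60), (0.52, 0.54, 0.60)
before = chroma_difference(p, q)
after = chroma_difference(boost_saturation(p, 1.5), boost_saturation(q, 1.5))
# Without clipping, `after` is 1.5 times `before`: the grain's color noise
# grows in proportion to the saturation boost.
```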

As soon as the Raspberry Pi guys introduced the HQ camera (IMX477 sensor chip), I switched back to a Raspberry Pi setup, since that camera would allow me to test capturing at higher resolutions, up to 4056 x 3040 px, only to discover that the supporting software was less than satisfactory. That got even worse when the Raspberry Pi software switched from the closed-source Broadcom image processing to libcamera (and correspondingly, from picamera to picamera2). I have never seen more badly designed software, and I have seen a lot.

However, it turned out that not all was lost. For starters, the processing from raw image data to human-viewable images is mostly governed by a user-accessible tuning file. So, for the first time ever, it was possible to define and optimize exactly how images are created from the data a sensor chip sees. This resulted in some optimizations which ended up in an alternative tuning file, “imx477_scientific.json”, which is now part of the standard Raspberry Pi distribution (it’s not used by default, but there are various ways to activate it).

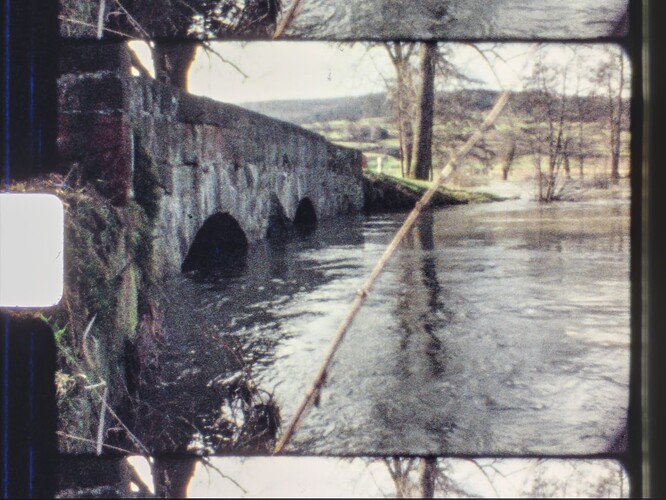

A second important development came along when the RP people added raw image format support to their software. Here’s (nearly) the same frame as above, but captured as a single raw image and developed in RawTherapee, with Highlights and Shadows pushed by +30:

The 12-bit dynamic range of the IMX477 does not quite match the dynamic range available with the HDR exposure stack, but it is close enough: some film stocks have a dynamic range requiring up to 14 bits, but this specific frame needs only 11 bits. I set my exposure so that no highlights are blown out, at the cost of slightly compromising the very dark shadows; you can see this by comparing the dark areas under the bridge in this image with the HDR version.
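The bit counts above follow from the fact that each stop (EV) doubles the amount of light, so a linear N-bit encoding spans just under N stops between its largest code value and its smallest non-zero step (in practice, sensor noise eats into the lowest stops). A small sketch of this arithmetic; the intensity ratios are made-up illustrative values, not measurements of the frames shown here, and the function names are hypothetical.

```python
import math


def stops(brightest, darkest):
    """Dynamic range in stops (EV) between two linear intensities."""
    return math.log2(brightest / darkest)


def bits_needed(brightest, darkest):
    """Bits a linear encoding needs to span the range (ignoring sensor noise)."""
    return math.ceil(stops(brightest, darkest))


def shadow_stops_lost(scene_stops, sensor_bits):
    """Stops clipped in the shadows when exposing so the highlights just don't clip."""
    return max(0.0, scene_stops - sensor_bits)


# Illustrative numbers only: a frame whose brightest area is 2000x its darkest.
print(round(stops(2000, 1), 2), bits_needed(2000, 1))  # 10.97 11
# A stock needing 14 stops on a 12-bit sensor, exposed for the highlights:
print(shadow_stops_lost(14, 12))  # 2
```

Exposing for the highlights fixes the top of the range at the sensor’s white level, so any shortfall in bit depth shows up entirely at the dark end, which is where the area under the bridge loses detail.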

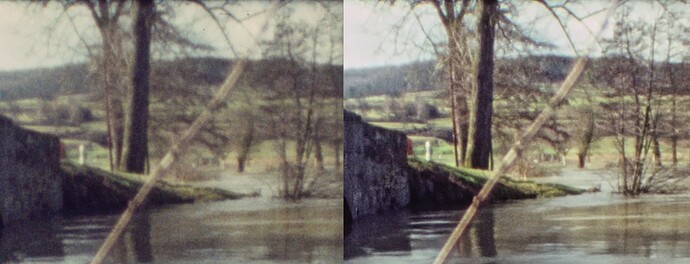

Still, the visual quality of the material falls short of today’s standards. Since my primary goal is to recover the historical footage for today’s viewers, image enhancement is desirable. The basic tool here is spatio-temporal degraining. In a sense, the algorithm works much like human perception: it builds a world model of the scene from the available noisy and blurry data, and then renders a newly sampled digital image from that world model. That works in many circumstances, but not always. In any case, here is the result of that algorithm:
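Real spatio-temporal degrainers estimate motion between frames so that each scene point can be tracked and combined across time. As a much simplified illustration of the temporal part alone, the sketch below takes a per-pixel median over a sliding window of frames: grain is uncorrelated from frame to frame, so the median suppresses it, but only where nothing moves. This is not the algorithm used for the result shown here, and the function name is hypothetical.

```python
from statistics import median


def temporal_median(frames, radius=1):
    """Per-pixel median over a window of 2*radius+1 frames, WITHOUT motion compensation.

    frames: list of frames, each a flat list of pixel values.
    The window is truncated at the start and end of the sequence.
    Only valid where the scene does not move between frames.
    """
    out = []
    for i in range(len(frames)):
        window = frames[max(0, i - radius):i + radius + 1]
        out.append([median([f[p] for f in window]) for p in range(len(frames[i]))])
    return out


# A static gray patch (true value 0.5) with a grain "spike" in the middle frame:
frames = [[0.5, 0.48], [0.9, 0.52], [0.5, 0.50]]
print(temporal_median(frames)[1])  # [0.5, 0.5]
```

On a pan, the same pixel position shows different scene content in neighbouring frames, and this naive filter would smear it; that is exactly why the real algorithm needs a motion-aware model of the scene.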

The chosen processing resolution is 2400 x 1800 px, much lower than that of the initial scan. This time, the raw development was done in DaVinci Resolve rather than in RawTherapee as for the previous image. In both programs, the color science is based on the “imx477_scientific.json” tuning file mentioned before. This data was used as the basis for the 2024 version.

Comparing the two files, the improvement in image resolution should be noticeable. Because this footage contains pans that are too fast for the 18 fps it was recorded at, there is occasional jerky motion, which distracts today’s viewers. So, in a final post-processing step, DaVinci Resolve was used to increase the frame rate to 30 fps. Ideally, both steps (spatio-temporal reconstruction and the creation of intermediate frames) would be combined. However, this would require extensive (mainly GPU-based) software development, for which I currently do not have the time…
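Converting 18 fps to 30 fps needs synthesized frames rather than simple repetition: the ratio is 5:3, so only every fifth output frame coincides with an original frame, while the other four fall between two source frames. The sketch below computes, for each output frame, the pair of source frames it lies between and its fractional position. A naive converter would cross-fade with these weights; motion-compensated interpolation (such as DaVinci Resolve’s optical-flow retiming) uses the same positions but warps the frames instead of blending them. The function names are hypothetical.

```python
import math
from fractions import Fraction


def source_positions(n_out, fps_in=18, fps_out=30):
    """For each output frame, return (index, alpha) in source-frame units.

    Output frame n is shown at time n / fps_out, which corresponds to source
    position n * fps_in / fps_out = index + alpha: between source frames
    `index` and `index + 1`, a fraction `alpha` of the way. alpha == 0 means
    an original frame can be used unchanged.
    """
    positions = []
    for n in range(n_out):
        pos = Fraction(n * fps_in, fps_out)  # exact arithmetic, no rounding drift
        index = math.floor(pos)
        positions.append((index, pos - index))
    return positions


def crossfade(frame_a, frame_b, alpha):
    """Naive intermediate frame by linear blending (produces ghosting on motion)."""
    return [(1 - alpha) * a + alpha * b for a, b in zip(frame_a, frame_b)]


for index, alpha in source_positions(6):
    print(index, alpha)
# 0 0
# 0 3/5
# 1 1/5
# 1 4/5
# 2 2/5
# 3 0
```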

Anyhow, here’s a cropped comparison of the raw scan (left) and the processed image (right), showing the improvement that is possible: