… and here is some further information about the integrating sphere implementation:

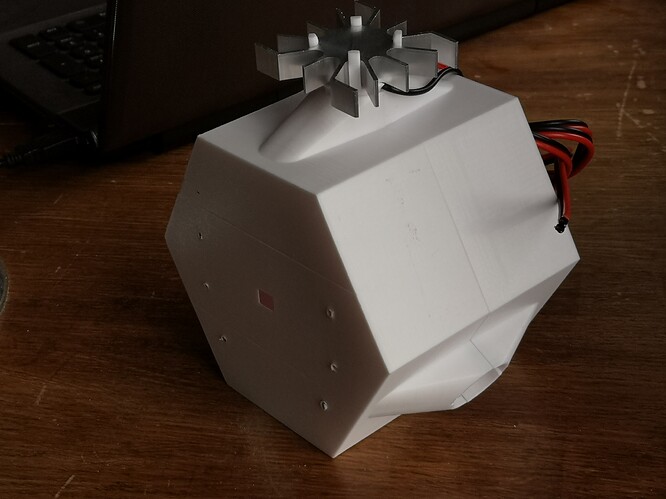

The initial integrating sphere was designed with nearly twice the diameter (d = 98 mm) of the final setup (d = 50 mm), because I really wanted to keep the total port area below the usual 5% limit (relative to the sphere's inner surface). Here's a photo of this initial setup:

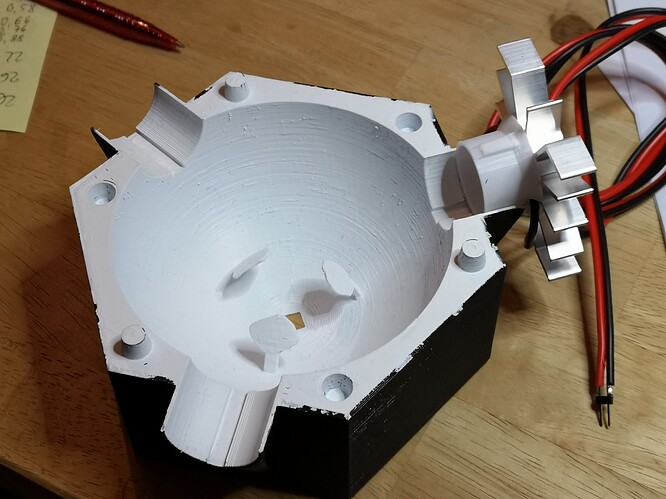
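The 5% rule compares the total port area with the sphere's inner surface area (πD²), so for fixed port sizes the port fraction grows as 1/D². The sketch below illustrates this. The port diameters (three 10 mm LED ports and a 20 mm film-gate opening) are made-up values for illustration, not the dimensions of this build, and each port is approximated as a flat disc.

```python
import math


def port_fraction(sphere_diameter_mm, port_diameters_mm):
    """Fraction of the sphere's inner surface occupied by ports.

    Each port is approximated as a flat disc (pi * r^2); the true
    spherical-cap area is slightly larger, but the difference is small
    for ports much smaller than the sphere.
    """
    sphere_area = math.pi * sphere_diameter_mm ** 2  # 4*pi*R^2 == pi*D^2
    port_area = sum(math.pi * (d / 2) ** 2 for d in port_diameters_mm)
    return port_area / sphere_area


# Hypothetical port sizes (mm): three LED ports plus the film-gate opening.
PORTS_MM = [10, 10, 10, 20]

if __name__ == "__main__":
    for diameter in (98, 50):
        f = port_fraction(diameter, PORTS_MM)
        verdict = "below" if f < 0.05 else "above"
        print(f"D = {diameter} mm: port fraction {f:.1%} ({verdict} 5%)")
```

With the same ports, going from 98 mm to 50 mm multiplies the port fraction by (98/50)² ≈ 3.8, which is why a strict 5% target pushes toward a larger sphere.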

The unit was split into two halves for easy printing. Three entry ports were used for the LEDs, each mounted on a rather large heat sink. These heat sinks were kept outside the unit in free air, so LED temperatures were not an issue in this design.

The next image shows the internals of the second major iteration. Note the three baffles placed around the film-gate opening. These baffles turned out to be important, as they block any light coming directly from the LEDs. Without them, the illumination of the film gate was uneven in color.

The shape of the baffles was calculated so that they have the minimal area necessary to block direct light from the LEDs (compare the size and shape of one baffle's shadow with the film gate).

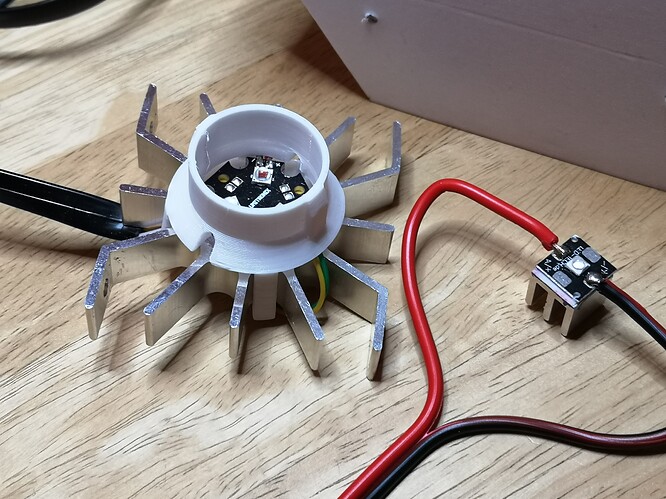
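One way to do such a calculation, assuming the LED can be treated as a point source: the smallest baffle that hides the film-gate opening from an LED is the central projection of the opening, as seen from the LED, onto the plane where the baffle sits. Its shadow then falls exactly on the gate. The coordinates below (LED position, gate size, baffle plane) are hypothetical, and a real LED has a finite emitting area, so a practical baffle needs some margin beyond this outline.

```python
def sub(a, b):
    return tuple(x - y for x, y in zip(a, b))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def intersect_segment_with_plane(source, point, plane_point, plane_normal):
    """Where the segment from `source` (LED) to `point` (gate corner)
    crosses the baffle plane. The plane must lie between the two."""
    direction = sub(point, source)
    denom = dot(plane_normal, direction)
    if abs(denom) < 1e-12:
        raise ValueError("segment is parallel to the baffle plane")
    t = dot(plane_normal, sub(plane_point, source)) / denom
    if not 0 < t < 1:
        raise ValueError("baffle plane is not between LED and gate")
    return tuple(s + t * d for s, d in zip(source, direction))


def minimal_baffle_outline(led_pos, gate_corners, plane_point, plane_normal):
    """Corners of the smallest polygon in the baffle plane that hides a
    convex polygonal gate opening from a point-like LED."""
    return [intersect_segment_with_plane(led_pos, c, plane_point, plane_normal)
            for c in gate_corners]


# Hypothetical geometry in mm: an 8 x 6 mm gate opening centred at the origin
# in the plane z = 0, an LED at (30, 0, 40), and a baffle plane at z = 5.
GATE = [(-4, -3, 0), (4, -3, 0), (4, 3, 0), (-4, 3, 0)]
LED = (30, 0, 40)
outline = minimal_baffle_outline(LED, GATE, (0, 0, 5), (0, 0, 1))
for x, y, z in outline:
    print(f"({x:.3f}, {y:.3f}, {z:.3f})")
```

Because the baffle plane in this example is parallel to the gate, the outline is simply a scaled copy of the opening (factor 0.875) shifted toward the LED; a tilted plane yields a general quadrilateral. Running the same projection for each LED position would give one baffle outline per LED.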

This design had the further advantage that the LEDs could easily be replaced or changed. However, the illumination levels were not really satisfactory. Mounting several LEDs on a single heat sink was one of several options I tried. Finally, I settled on a different, much smaller form factor for both the integrating sphere and the LEDs.

On the left of the image above you can see the old, large design with its external heat sink; a multicolor LED is fitted into the mounting tube of this old design. The right half of the image shows the type of heat sink/LED I am currently using: an Osram Oslon SL 80 LED mounted on a small heat sink measuring 10 × 10 × 10 mm.

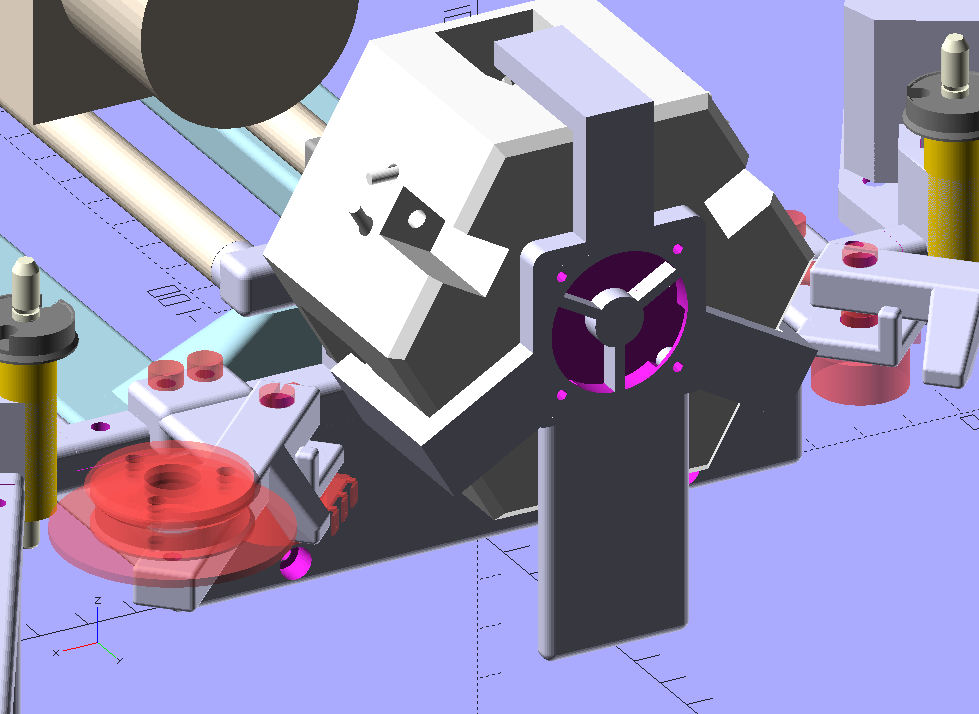

The most important change in the third design was an integrating sphere with about half the diameter of the original one. This increased the illumination intensity by nearly a factor of four, since the same LED flux is now spread over only about a quarter of the wall area. Furthermore, the LED mounting was changed: the LEDs were placed much deeper in the unit and tilted. As a result, the baffles around the film gate of the original design were no longer needed and could be replaced by small tongues near the LED mounting holes (compare the illustration a few posts above).
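A first-order explanation comes from the standard integrating-sphere radiance equation, L = Φ/(π·A_s) · ρ/(1 − ρ(1 − f)), where Φ is the flux entering the sphere, A_s the sphere's inner surface area, ρ the wall reflectance and f the port fraction. Shrinking the diameter from 98 mm to 50 mm reduces A_s by (98/50)² ≈ 3.84, while the larger port fraction of the small sphere lowers the multiplier term somewhat. The sketch below compares the two diameters; the reflectance (0.9) and the port sizes are assumptions, not measured values from this build.

```python
import math


def port_fraction(diameter_mm, port_diameters_mm):
    """Port area / sphere surface area, ports approximated as flat discs."""
    return sum(d ** 2 for d in port_diameters_mm) / (4 * diameter_mm ** 2)


def wall_radiance(flux_w, diameter_mm, reflectance, ports_mm):
    """Sphere wall radiance in W/(m^2 sr) from
    L = flux / (pi * A_s) * rho / (1 - rho * (1 - f))."""
    radius_m = diameter_mm / 2000.0  # mm diameter -> m radius
    area_m2 = 4 * math.pi * radius_m ** 2
    f = port_fraction(diameter_mm, ports_mm)
    multiplier = reflectance / (1 - reflectance * (1 - f))
    return flux_w / (math.pi * area_m2) * multiplier


# Assumed values, for illustration only.
RHO = 0.9                 # wall reflectance of the coating
PORTS_MM = [5, 5, 5, 10]  # three LED ports + film-gate opening
FLUX_W = 0.1              # optical flux entering the sphere (cancels in the ratio)

gain = (wall_radiance(FLUX_W, 50, RHO, PORTS_MM)
        / wall_radiance(FLUX_W, 98, RHO, PORTS_MM))
print(f"area-only gain: {(98 / 50) ** 2:.2f}, with sphere multiplier: {gain:.2f}")
```

With these placeholder values the model predicts a gain somewhat below 3.84. Higher reflectance or larger ports widen the gap, because the multiplier becomes more sensitive to f as ρ approaches 1.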

As it turned out, the heat sinks alone could not keep the LEDs cool enough, so an active cooling system was added: a fan at the back of the unit with air ducts leading to the LED heat sinks.

The current setup performs OK so far. LED temperatures stabilize at about 50 °C, even when the unit runs for days.
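Why the fan helps can be sketched with a simple steady-state series thermal-resistance model: the LED's waste heat P flows from the junction through the heat sink into the air, so T_sink = T_air + P·R_sa and T_junction = T_sink + P·R_js. A fan mainly lowers the heat sink-to-air resistance R_sa. All numbers below (drive power, efficiency, air temperature, thermal resistances) are hypothetical placeholders, not datasheet or measured values for the Oslon SL 80 or this build.

```python
def steady_state_temps(p_electrical_w, wall_plug_efficiency, t_air_c,
                       r_junction_to_sink, r_sink_to_air):
    """Series thermal-resistance model (resistances in K/W).
    Returns (heat sink temperature, junction temperature) in deg C."""
    p_heat = p_electrical_w * (1 - wall_plug_efficiency)  # power not emitted as light
    t_sink = t_air_c + p_heat * r_sink_to_air
    t_junction = t_sink + p_heat * r_junction_to_sink
    return t_sink, t_junction


# Hypothetical placeholder values, not measurements.
P_EL = 1.0        # W electrical input per LED
EFFICIENCY = 0.3  # fraction of electrical power leaving as light
T_AIR = 25.0      # deg C, air around the heat sink
R_JS = 15.0       # K/W, junction -> heat sink (package, solder, pad)

for label, r_sa in (("passive", 45.0), ("fan-cooled", 15.0)):
    t_s, t_j = steady_state_temps(P_EL, EFFICIENCY, T_AIR, R_JS, r_sa)
    print(f"{label:10s}: heat sink {t_s:.1f} degC, junction {t_j:.1f} degC")
```

Which of these temperatures corresponds to a reading like the 50 °C above depends on where and how the temperature is measured; the junction always runs hotter than the heat sink by P·R_js.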

One disadvantage of my latest design is that changing or replacing an LED requires the whole unit to be removed and opened.

By the way, I remember much simpler designs based on the “integrating sphere” idea. For example, in the 1980s I owned a color enlarger that used a simple cube-shaped box for light mixing. At the bottom of the box was a glass plate for the 6x6 cm film format. The other walls of the box were simply lined with styrofoam. Light from three adjustable color filters shone through a small hole in one side of the cube onto the opposite wall, but not directly into the film gate. The performance of this simple setup was quite good.