@johnarthurkelly, @bwrich - yes, an integrating sphere (also called an Ulbricht sphere) helps to reduce the visibility of scratches and the like, and it mixes the light from the different LEDs almost perfectly. But it also lowers the contrast of the film somewhat compared to other illumination systems.

Also, indeed, for an integrating sphere a rule of thumb states that the openings should make up less than about 5% of the sphere's inner surface area, in order not to lose the theoretically perfect mixing properties of an integrating sphere.

But it turns out that, at least for the Super-8 format, the sphere does not need to be that large.

Here’s a photo of my (third) iteration of an integrating sphere:

Internally, the sphere only has a diameter of 50 mm. This results in a ratio of about 6%, slightly larger than what is generally considered the “limit”. But frankly, there is no noticeable difference in performance.
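As a rough sketch of how such a ratio can be estimated: each circular opening removes a spherical cap from the inner surface, and the ratio is the summed cap area divided by the total sphere area. The function name and the port diameters below are placeholders for illustration only, not measurements of the sphere described here.

```python
import math

def port_fraction(sphere_diameter_mm, port_diameters_mm):
    """Fraction of the sphere's inner surface taken up by circular ports.

    Each port diameter must not exceed the sphere diameter.
    """
    radius = sphere_diameter_mm / 2.0
    sphere_area = 4.0 * math.pi * radius ** 2
    port_area = 0.0
    for d in port_diameters_mm:
        # Spherical cap cut out by a circular port of diameter d:
        # area = 2 * pi * R * h, with cap height h = R - sqrt(R^2 - (d/2)^2)
        cap_height = radius - math.sqrt(radius ** 2 - (d / 2.0) ** 2)
        port_area += 2.0 * math.pi * radius * cap_height
    return port_area / sphere_area

# Hypothetical port sizes (one light output plus three LED openings);
# these are assumed values, not taken from the actual design.
example_ports_mm = [12.0, 10.0, 10.0, 10.0]
print(f"port fraction: {port_fraction(50.0, example_ports_mm):.1%}")
```

For small ports the cap area is nearly the same as the flat disc area, so a quick estimate with `pi * (d/2)**2` per opening is usually good enough.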

More important than the ratio of opening area to total sphere area is the inner coating of the sphere. Well, I used simple white paint, which is suboptimal. High-end integrating spheres use barium sulfate as a coating, which has close to ideal reflection properties across the visible spectrum.
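To get a feeling for why the coating matters so much, here is a small sketch using the sphere multiplier from standard integrating sphere theory, M = ρ / (1 − ρ·(1 − f)), where ρ is the wall reflectance and f the port fraction. The two reflectance values are assumptions chosen for illustration (I have not measured my paint), and the function name is made up.

```python
def sphere_multiplier(reflectance, port_fraction):
    """Sphere multiplier M = rho / (1 - rho * (1 - f)).

    M describes how much the multiple reflections inside the sphere
    boost the radiance compared to a single diffuse reflection.
    """
    return reflectance / (1.0 - reflectance * (1.0 - port_fraction))

# Assumed reflectance values, for illustration only
coatings = [
    ("white paint (assumed 0.85)", 0.85),
    ("barium sulfate (assumed 0.97)", 0.97),
]
for name, rho in coatings:
    m = sphere_multiplier(rho, 0.06)  # 0.06 = the ~6% port fraction
    print(f"{name}: M = {m:.1f}")
```

Because the denominator gets small as the reflectance approaches 1, a few percent more reflectance changes the light output far more than a one percent change in port fraction does.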

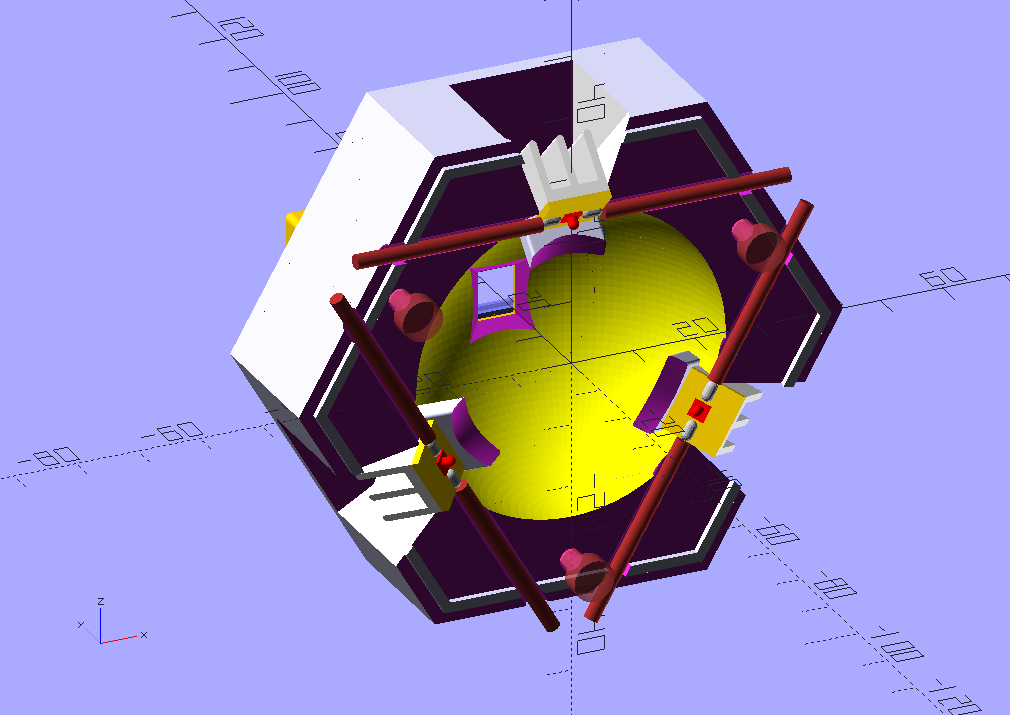

The sphere in the photo uses three LEDs (red/green/blue) which are buried quite deep in the module for optimal performance. On the top part of the sphere, you can see a small part of the heatsinks I used to carry the heat away from the LEDs.

Here’s a design image to illustrate what’s inside the sphere:

The LEDs are angled and shadowed in such a way that they do not shine directly into the film gate. They are driven by programmable constant current sources.

As it turned out, the LEDs were getting too hot (90°C), so a fan was added to the initial design, together with air ducts. These are the gray tongues visible in the photo. With these air ducts, the LEDs stay at about 50°C, cool enough for the PLA which was used in this print. PLA only melts well above 150°C, but it already starts to soften at much lower temperatures, around 60°C.

Note that the larger the integrating sphere, the more power the LEDs need to deliver to reach a given exposure setting. For the 35 mm format, the design would need to be changed substantially, I guess.
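A minimal sketch of that scaling, assuming the coating and the port fraction stay the same: the radiance at the output is then proportional to the LED flux divided by the sphere's surface area, so the flux needed for the same exposure grows with the square of the diameter. The function name is hypothetical and the 100 mm example is just an arbitrary larger sphere, not a proposed 35 mm design.

```python
def relative_led_power(new_diameter_mm, ref_diameter_mm=50.0):
    """LED flux needed relative to a reference sphere, for equal output radiance.

    Assumes identical wall reflectance and port fraction, so that
    radiance ~ flux / sphere_area and sphere_area ~ diameter**2.
    """
    return (new_diameter_mm / ref_diameter_mm) ** 2

# Doubling the diameter from 50 mm to 100 mm
print(relative_led_power(100.0))
```

In practice the extra power also means extra heat, so the cooling would need to scale up along with the LEDs.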

What is also important is that the film is positioned as close as possible to the opening. Otherwise you lose the scratch-reducing effect of an integrating sphere.

Initially, I tried several film gates I ripped from old Super-8 projectors, but they all turned out to leave too large a gap between light source and film. So I ended up designing a 3D-printable film gate, which you can also see in the photograph above. It sits basically right on top of the light output.