Hi, I’m going to build a continuous-motion scanner for 8mm film.

Since I’m planning to scan at least 5 frames per second, what exposure time should I use and how many lumens do I need? What light source would you suggest?

Thanks.

My scanner is stop motion, so take the information as theoretical.

Here is a suggestion on how to unpack the question.

As you may have seen in the forum, the typical lenses used for 8mm give their best sharpness and resolution between f/4 and f/5.6. That’s set.

The film transport speed determines the camera exposure time, since you need to freeze the movement. A global shutter sensor will be required (search the forum for more info on global vs. rolling shutters).
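To put a number on "freeze the movement", here is a minimal sketch that computes the longest exposure for which the film moves at most one sensor pixel. The values are assumptions for illustration only: a Super 8 frame pitch of 4.234 mm, an image width of about 5.79 mm mapped across a 2048-pixel-wide sensor, and a blur limit of one pixel. With a flashed (strobed) light, the flash duration plays the role of the exposure time.

```python
# Sketch: longest exposure that keeps motion blur below a chosen limit during
# continuous film transport. All constants are assumptions for illustration.

SUPER8_PITCH_MM = 4.234        # Super 8 frame pitch (assumed)
SUPER8_FRAME_WIDTH_MM = 5.79   # approximate Super 8 image width (assumed)


def max_exposure_s(fps: float, pitch_mm: float, frame_width_mm: float,
                   sensor_width_px: int, max_blur_px: float = 1.0) -> float:
    """Longest exposure (seconds) during which the film moves at most
    `max_blur_px` pixels, assuming the frame width fills the sensor width."""
    film_speed_mm_s = fps * pitch_mm                 # film transport speed
    mm_per_px = frame_width_mm / sensor_width_px     # film distance imaged per pixel
    return max_blur_px * mm_per_px / film_speed_mm_s


t = max_exposure_s(fps=5, pitch_mm=SUPER8_PITCH_MM,
                   frame_width_mm=SUPER8_FRAME_WIDTH_MM, sensor_width_px=2048)
print(f"max exposure: {t * 1e6:.0f} µs")
```

Under these assumptions the answer is roughly 134 µs at 5 fps; doubling the frame rate halves it.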

Lens aperture (set) + exposure time needed to freeze the motion + sensor sensitivity = light required.
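In the usual photographic notation, that bookkeeping can be written out. This is a simplified sketch that ignores lens transmission, vignetting and close-up effects (for macro work, $N$ should be replaced by the effective f-number). $L$ is the luminance of the light source seen through the film, $N$ the f-number, $t$ the exposure time, $E$ the illuminance on the sensor, and $H$ the sensor exposure needed for a good signal, which is inversely proportional to the sensor sensitivity $S$:

$$
H = E\,t,\qquad E \approx \frac{\pi L}{4N^{2}}
\quad\Longrightarrow\quad
L \approx \frac{4N^{2}H}{\pi\,t}\;\propto\;\frac{N^{2}}{t\,S}
$$

Halving $t$ doubles the required $L$, and stopping down from f/4 to f/5.6 (one stop, $(5.6/4)^2 \approx 2$) doubles it as well.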

Use a good-quality white LED. You will need a lot of light in a short time, and you may need to flash it to avoid overwhelming the sensor. This posting provides some info; his scanner is a great example of continuous motion.

Hi, thank you for your reply.

Yes, I plan to buy a global shutter camera → USB 3.0 Camera | 3 Megapixel IMX265 Color | MER2-302-56U3C

I’ve seen these LEDs that have a very high CRI → YUJILEDS® CRI 98 5W COB LED - 135XL - 5pcs — YUJILEDS High CRI Webstore

When picking an LED, the spectral distribution graphs are an important item. The 3200 K version looks fairly smooth and may be a good option.

I don’t know enough about these cameras. Decide early whether that resolution is enough. You can find both sides of the resolution argument in the forum (more is more vs. less is more), and there are valid use cases on both sides.

To approximate how much light you need, check the camera sensor specifications; there is usually some information there. For the camera above, the sensor is the IMX265. I’m not sure it is this specific version, but let’s use its data for illustration.

In the remarks on the sensor characteristics, the datasheet gives the test conditions: 3200 K, 706 cd/m², 1/30 s accumulation.

The camera specs indicate 56 fps @2048 × 1536 resolution.

Think in photographic terms: when you halve the exposure, you need to double the light. The sensor's reference condition is 3200 K, 706 cd/m², 1/30 s accumulation. At 56 fps the exposure can be at most 1/56 s, roughly half of 1/30 s, so to maintain the same level it probably needs about double that luminance. And if you are going to flash the light (which may be required in this case), the flash duration will determine the required light intensity.
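The "halve the time, double the light" rule applied to the datasheet numbers can be sketched as below. It assumes simple reciprocity and the same sensor signal as the reference condition. The reference f-number is not part of the conditions quoted above, so the aperture correction is optional and both f-numbers are placeholders you would have to supply.

```python
from typing import Optional

# Reference test condition quoted from the IMX265 datasheet remarks above.
REF_LUMINANCE_CD_M2 = 706.0
REF_EXPOSURE_S = 1 / 30


def required_luminance(exposure_s: float,
                       f_number: Optional[float] = None,
                       ref_f_number: Optional[float] = None) -> float:
    """Luminance (cd/m²) giving the same sensor signal as the reference
    condition, assuming reciprocity holds."""
    scale = REF_EXPOSURE_S / exposure_s               # half the time -> double the light
    if f_number is not None and ref_f_number is not None:
        scale *= (f_number / ref_f_number) ** 2       # light scales with N squared
    return REF_LUMINANCE_CD_M2 * scale


print(f"1/56 s : {required_luminance(1 / 56):,.0f} cd/m²")
print(f"134 µs : {required_luminance(134e-6):,.0f} cd/m²")
```

At 1/56 s this gives roughly 1,300 cd/m², about double the reference; at a motion-freezing exposure of around 134 µs it is on the order of 175,000 cd/m², which shows why a lot of light (or a flash) is needed.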

Sorry, I don’t really have practical experience with global shutter cameras to provide more insight. Hope the information is helpful.

You’ll want at least 3-4 of those LEDs to get enough light. I used the same ones with the same sensor size.

I’d put even more light in there than you need, particularly for very dense, underexposed film.

I can’t remember what shutter speed I was using, sorry.

The helicoid/extension tube loses a lot of light when set up for 8mm.
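How much light the extension costs can be estimated with the usual close-up approximation, effective f-number $N(1+m)$ at magnification $m$, which assumes a symmetric lens (pupil magnification of 1). The sensor and frame sizes below are assumptions for illustration: the IMX265 taken as 2048 pixels at 3.45 µm, and a Super 8 frame about 5.79 mm wide.

```python
import math

# Assumed values for illustration only.
SENSOR_WIDTH_MM = 2048 * 3.45e-3   # IMX265 width: 2048 px at 3.45 µm (assumed)
FRAME_WIDTH_MM = 5.79              # Super 8 image width (assumed)


def effective_f_number(f_number: float, magnification: float) -> float:
    """Close-up approximation N_eff = N * (1 + m), symmetric lens assumed."""
    return f_number * (1.0 + magnification)


def extension_loss_stops(magnification: float) -> float:
    """Light lost to the extension, in stops: log2 of the (1 + m)**2 factor."""
    return 2.0 * math.log2(1.0 + magnification)


m = SENSOR_WIDTH_MM / FRAME_WIDTH_MM
print(f"m = {m:.2f}, f/5.6 behaves like f/{effective_f_number(5.6, m):.1f}, "
      f"loss = {extension_loss_stops(m):.1f} stops")
```

With these numbers the magnification is about 1.2, so the lens set to f/5.6 behaves like roughly f/12 and loses a bit more than two stops, compared with the same lens focused at infinity.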

Thanks for the helpful info.

What if I get something like this? It has integrated fans, it’s dimmable, and it seems to have a high CRI.

Has anyone tried using these to make integrating spheres?

I have not personally constructed an integrating sphere. Here are some examples from others using cake molds or bath bomb molds. I would be concerned about the interface between the 2 halves (see the 3rd link.) Do the 2 halves form a perfect sphere or form an ellipsoid? If ellipsoid, how does the deviation affect light uniformity?

@justin: that is probably rather irrelevant. I have seen integrating “spheres” realized as a simple styrofoam box. Some enlargers used in the old days of analog photography worked on that principle.

More important is that the coating inside the sphere is (ideally) Lambertian. Lambertian reflectance produces the desired random mixing of light inside the sphere through multiple reflections. Small deviations from the spherical form will then not make much of a difference.

Finally, the openings of the sphere should be sufficiently small with respect to the sphere’s diameter in order to get an evenly distributed light intensity out of the ports.
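One common way to quantify "sufficiently small" is the port fraction $f$, the total port area relative to the sphere wall, together with the textbook sphere multiplier $M = \rho / (1 - \rho(1 - f))$ for an ideal Lambertian wall of reflectance $\rho$. $M$ grows as $f$ shrinks, which can be read as more wall reflections (more mixing) before light escapes. This is a sketch: ports are approximated as flat discs, and the 55 mm sphere, the port sizes and $\rho = 0.95$ are hypothetical example values.

```python
import math


def port_fraction(sphere_diameter_mm: float, port_diameters_mm) -> float:
    """Total port area / sphere wall area (flat-disc approximation)."""
    sphere_area = math.pi * sphere_diameter_mm ** 2
    port_area = sum(math.pi * d ** 2 / 4 for d in port_diameters_mm)
    return port_area / sphere_area


def sphere_multiplier(reflectance: float, f: float) -> float:
    """Sphere multiplier for an ideal Lambertian wall of given reflectance."""
    return reflectance / (1.0 - reflectance * (1.0 - f))


# Hypothetical example: 55 mm sphere, 8 mm LED port, 6 mm vs 25 mm exit port.
for ports in ([8.0, 6.0], [8.0, 25.0]):
    f = port_fraction(55.0, ports)
    print(ports, f"f = {f:.3f}", f"M = {sphere_multiplier(0.95, f):.1f}")
```

Enlarging the exit port from 6 mm to 25 mm in this example cuts the multiplier noticeably, illustrating why the port-to-diameter ratio matters.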

@agus35monk I am using an oversized 55 mm ping pong ball, which is decent for an 8mm target. No coating was used; I made sure the visible portion has neither the hemisphere seam nor the injection mark.

@justin thank you for sharing these references. The last reference uses these interesting aluminum cake molds, which are available in various sizes. The part looks like a promising half sphere, perfect for light sanding and coating. As mentioned by @cpixip, the ratio between port size and sphere diameter, and the reflecting surface, are critical.

As mentioned earlier, ImageJ is a great tool for measuring the resulting flatness of the whole chain (illuminant + reflector + lens + sensor + library), and its surface plots give a clear visual confirmation that the system is flat.
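If you also want a few numbers next to the ImageJ plots, here is a minimal NumPy sketch (not an ImageJ feature). It averages a single-channel flat-field capture over a grid of blocks and reports the min/max ratio, the falloff in stops and the relative spread. The function names and the 12 × 16 grid are arbitrary choices for illustration.

```python
import numpy as np


def block_means(img: np.ndarray, rows: int = 12, cols: int = 16) -> np.ndarray:
    """Average a single-channel image over a rows x cols grid of blocks."""
    h, w = img.shape
    img = img[: h - h % rows, : w - w % cols]     # crop so the blocks tile exactly
    bh, bw = img.shape[0] // rows, img.shape[1] // cols
    return img.reshape(rows, bh, cols, bw).mean(axis=(1, 3))


def flatness_report(img: np.ndarray, rows: int = 12, cols: int = 16) -> dict:
    """A few numbers summarising how flat a flat-field capture is."""
    m = block_means(np.asarray(img, dtype=np.float64), rows, cols)
    return {
        "min_over_max": float(m.min() / m.max()),          # 1.0 = perfectly flat
        "falloff_stops": float(np.log2(m.max() / m.min())),
        "cv": float(m.std() / m.mean()),                   # relative spread
    }
```

Block averaging suppresses pixel noise, so the report reflects the illumination and lens falloff rather than sensor grain.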

Hi,

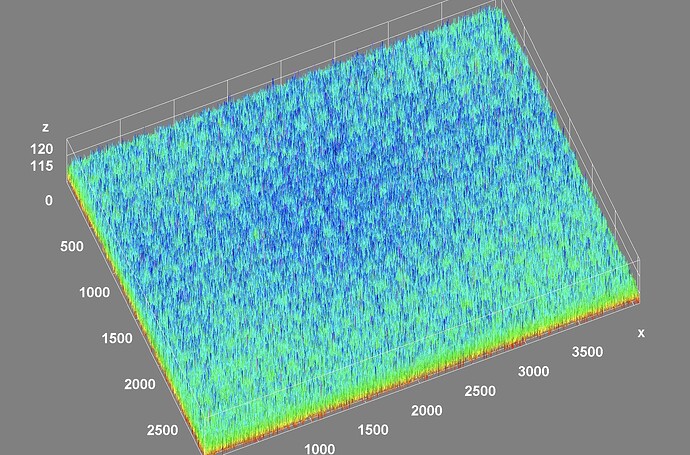

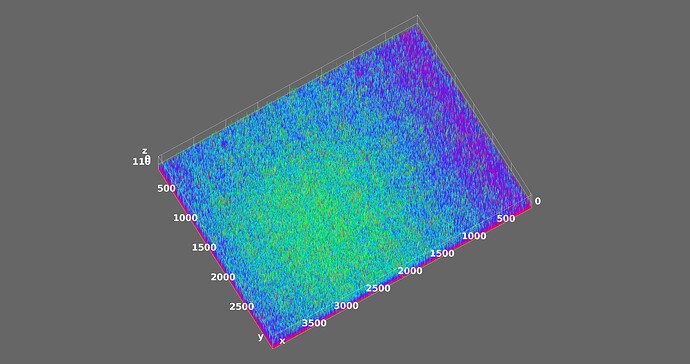

I was just revisiting my flat gray image when I found this pattern, and I’m just not sure what I’m looking at:

This is with the HQ camera at full resolution, using the scientific tuning file, with PNG compression disabled. What could cause such a regular pattern to appear? I don’t think it comes from the lens or the camera (15 × 20 peaks, if I counted correctly). Any hints?
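Counting peaks by eye is error-prone. A 2D FFT reports how many times the strongest periodic component repeats across the image height and width. This is a NumPy sketch, not part of any camera library: it works on one channel, looks only at non-negative frequencies, and discards the lowest frequencies so that smooth shading does not win.

```python
import numpy as np


def dominant_period_counts(img, min_cycles: int = 3):
    """Return (cycles_down, cycles_across) of the strongest periodic component,
    i.e. how many times it repeats over the image height and width."""
    data = np.asarray(img, dtype=np.float64)
    data = data - data.mean()                       # remove the DC level
    spectrum = np.abs(np.fft.fft2(data))
    h, w = spectrum.shape
    quad = spectrum[: h // 2, : w // 2].copy()      # non-negative frequencies only
    quad[:min_cycles, :min_cycles] = 0.0            # ignore slow shading/vignetting
    ky, kx = np.unravel_index(np.argmax(quad), quad.shape)
    return int(ky), int(kx)


# Synthetic check: a 15 x 20 grid of bumps on a flat gray level.
y = np.arange(120)[:, None]
x = np.arange(160)[None, :]
test_img = 100 + 5 * np.cos(2 * np.pi * 15 * y / 120) * np.cos(2 * np.pi * 20 * x / 160)
print(dominant_period_counts(test_img))
```

On a real capture, the same call on the raw data and on the processed PNG shows whether both contain the same periodicity.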

@d_fens - could you elaborate on how you obtained this image? You mention the png format, but how did you get there? Was it taken with one of the libcamera-apps, or with your own (Python?) code? The first thing I would check is whether this pattern is already present in a raw capture (probably not, but who knows?). If it’s not present in your raw capture, the issue might be created by libcamera’s processing pipeline. Did you notice that pattern before? Libcamera gets frequent updates, and there is a slight chance that something broke.

Whatever you used to obtain this data, try to change things systematically. For example, compare the results with the scientific tuning file vs. the standard tuning file. Check if using a different output format has any effect on the result. Try to use alternative capture software - that is, use picamera2 if you captured your data with a libcamera-app and vice versa.
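One way to make the comparison systematic is to generate every combination up front and capture them in one go. The sketch below only builds libcamera-still command lines, using flags that appear in this thread. The path of the standard tuning file (imx477.json next to the scientific one) and the output file names are assumptions.

```python
import itertools
import shlex

# The scientific path is quoted in this thread; the standard imx477.json
# next to it is an assumption about your installation.
TUNING_FILES = {
    "scientific": "/usr/share/libcamera/ipa/rpi/vc4/imx477_scientific.json",
    "standard": "/usr/share/libcamera/ipa/rpi/vc4/imx477.json",
}
ENCODINGS = ("png", "jpg")


def capture_commands(shutters_us=(114, 141), gain=1.0, awbgains="1.58,1.86"):
    """One libcamera-still command per (tuning file, encoding, shutter) combination."""
    commands = []
    for (tuning, path), enc, shutter in itertools.product(
            TUNING_FILES.items(), ENCODINGS, shutters_us):
        commands.append([
            "libcamera-still", "--gain", str(gain), "--shutter", str(shutter),
            f"--awbgains={awbgains}", f"--tuning-file={path}",
            "--encoding", enc, "--output", f"test_{tuning}_{shutter}us.{enc}",
        ])
    return commands


for cmd in capture_commands():
    print(shlex.join(cmd))   # or subprocess.run(cmd, check=True) on the Pi
```

Changing one factor at a time between otherwise identical captures makes it easier to see which setting introduces the pattern.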

I am not aware that anybody else encountered a similar pattern. As the pattern repeats in sync with the image borders, it’s unlikely (but not impossible) that it is caused by intensity fluctuations in your illumination setup.

@cpixip I tried to reliably reproduce it using the example_app from the libcamera2 repository, and I think I may have found the cause: when requesting very short exposure times like 141 µs (that’s 114 “real” µs plus some digital gain), this pattern can be seen.

This is independent of raw/jpg/png, AWB on/off, etc., and of course requires the scientific tuning file; otherwise the image is bowl-shaped rather than flat.

So the simplest test case is something along these lines:

libcamera-still -o test.jpg --analoggain 1 --shutter 141 --tuning-file /usr/share/libcamera/ipa/rpi/vc4/imx477_scientific.json

It would be interesting to know whether you can reproduce it.

Great result! I certainly will look into that, but it will take me one or two weeks. My setup is currently dismantled…

An exposure time of 141 µs is much shorter than the exposure times I usually work with, and I usually make sure that the digital gain is exactly 1.00, as I do not really trust this part of the libcamera approach/implementation. What happens if you request a shutter time the sensor can realize directly, without the digital gain adjustment? Second experiment: simultaneously capture a raw file (I think this can be done with the -r flag) and check whether the pattern is already visible in it. If so, it’s a sensor issue. Otherwise, I would tend to blame libcamera.
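For the picamera2 route, here is a sketch that captures the processed PNG, the raw DNG and the metadata from the same request, with AWB off and fixed colour gains. It assumes a Raspberry Pi with picamera2 installed and uses the `Picamera2.load_tuning_file`, `capture_request`, `save`, `save_dng` and `get_metadata` calls from the Picamera2 manual. The function names and the JSON output are illustrative. The saved metadata should include keys such as `DigitalGain`, `ColourGains` and `ColourCorrectionMatrix`; check your version.

```python
import json


def capture_controls(exposure_us: int, colour_gains=(1.58, 1.86)) -> dict:
    """Reproducible controls: manual exposure, unity analogue gain,
    AWB disabled with fixed red/blue gains (values from the commands above)."""
    return {
        "AeEnable": False,
        "ExposureTime": int(exposure_us),
        "AnalogueGain": 1.0,
        "AwbEnable": False,
        "ColourGains": tuple(colour_gains),
    }


def capture_png_dng_metadata(basename: str, exposure_us: int,
                             tuning_name: str = "imx477_scientific.json") -> dict:
    """Capture one frame as PNG (processed) + DNG (raw) + JSON (metadata)."""
    from picamera2 import Picamera2  # imported here so the helper above loads anywhere

    picam2 = Picamera2(tuning=Picamera2.load_tuning_file(tuning_name))
    try:
        config = picam2.create_still_configuration(
            raw={}, controls=capture_controls(exposure_us))
        picam2.configure(config)
        picam2.start()
        request = picam2.capture_request()
        try:
            request.save("main", f"{basename}.png")
            request.save_dng(f"{basename}.dng")
            metadata = request.get_metadata()
        finally:
            request.release()          # hand the buffers back to the camera
    finally:
        picam2.close()
    with open(f"{basename}.json", "w") as fh:
        json.dump(metadata, fh, indent=2, default=str)
    return metadata
```

Because the PNG and DNG come from the same request, any difference between them comes from processing, not from a different exposure.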

Again, if I understand your experiment correctly, you worked with a digital gain of about 1.24 - I always select exposure times which result in a digital gain of 1.0 (that should be 114 in your case?).
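The arithmetic behind "141 requested = 114 real + digital gain" can be sketched as follows: pick the longest exposure the hardware can realize that does not exceed the request, then make up the difference with digital gain. The list of realizable exposures is hypothetical, chosen only to reproduce the numbers in this thread. It is not the IMX477's actual timing.

```python
# Hypothetical set of hardware-realizable exposures (µs), for illustration only.
HYPOTHETICAL_REALIZABLE_US = [114, 160, 200]


def split_exposure(requested_us: float, realizable_us) -> tuple:
    """Return (realized exposure, digital gain) such that
    realized * gain == requested."""
    candidates = [t for t in realizable_us if t <= requested_us]
    if not candidates:
        raise ValueError("requested exposure is shorter than the sensor minimum")
    realized = max(candidates)
    return realized, requested_us / realized


print(split_exposure(141, HYPOTHETICAL_REALIZABLE_US))   # digital gain ~1.24
print(split_exposure(114, HYPOTHETICAL_REALIZABLE_US))   # digital gain 1.0
```

Requesting an exposure that is already realizable keeps the digital gain at exactly 1.0, which is the practice described above.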

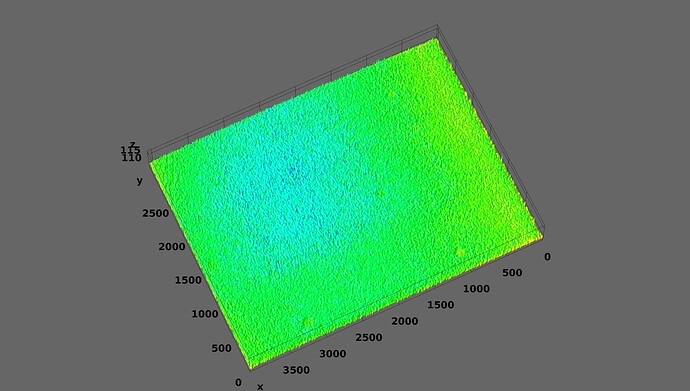

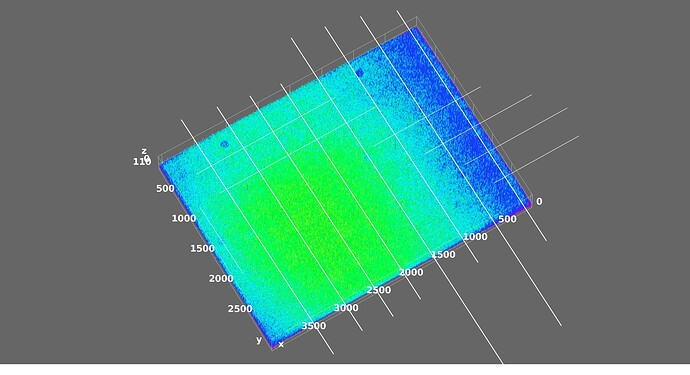

Here is an approximation to your setup, with a couple of limitations:

- The HQ sensor I have does not have the original IR filter.

- Analog gain settings were adjusted for best white balance.

- The white LED is 5000 K.

The command line used was:

libcamera-still --gain 1.0 --shutter 141 --awbgains=1.58,1.86 --awb=daylight --tuning-file=/usr/share/libcamera/ipa/rpi/vc4/imx477_scientific.json --output test.jpg

I adjusted the settings to get approximately the same Z range.

Some known spots are visible in the plot. The lens is at f/2.8, and the white LEDs are set to maximum.

Not sure what the pattern you see is, but it does not appear to be a sensor issue. Hope this helps.

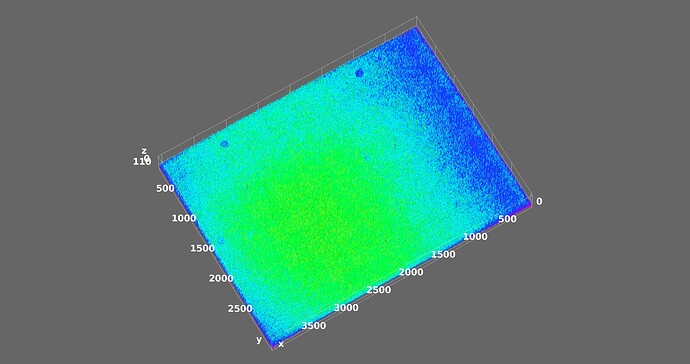

I had more time to play with the settings, and below is another plot of the same capture that I believe is closer to @d_fens’s plot settings above. The pattern is not visible; the known lens spots are.

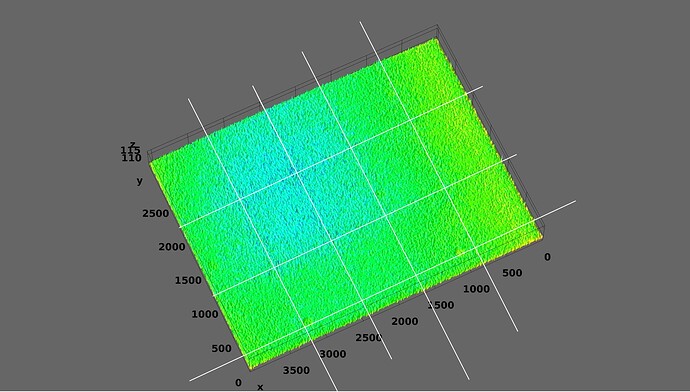

Additionally, I tried the following settings for a new capture.

libcamera-still --gain 1.0 --shutter 141 --awbgains=1.58,1.86 --awb=daylight --tuning-file=/usr/share/libcamera/ipa/rpi/vc4/imx477_scientific.json --denoise off --sharpness=0 --encoding=png --output test2.png

Using the png output file, and using the exact same plot settings, here is the plot.

With these camera settings… there is something resembling a pattern when plotting the png file.

For comparison, below is the plot of the jpg file saved from the same capture… the pattern is not as visible.

Recap: all 3 surface plots above had exactly the same ImageJ settings. The last two plots correspond to the same libcamera-still capture; the only difference is the encoding of the file analyzed.

With denoise off, sharpness off, and png encoding, a slight pattern was visible.

When using @d_fens’s simpler libcamera-still settings, no pattern was visible.

@d_fens, @PM490 - could you upload a raw capture with the exact same capture settings in which you obtained the periodic pattern? Also, it would be interesting to see whether this effect happens also when the digital gain is equal to 1.00 (for this, the exposure time needs to be changed).

For the .jpg or .png image to be reproducible and useful, it is important that no auto white balance algorithm is active (which is not the case with @d_fens’s command line) and that the color gains are fixed to some sensible values.

As one can see from @PM490’s results, the pattern changes slightly when using .jpg vs. .png. That could simply be because the .jpg format compresses the image data with some loss in fidelity, while the .png format does not. So to hunt for the periodic pattern, it is best to use the .png format.

It would also be helpful to record some of the metadata associated with each image. Specifically, the digital gain as well as the gains for the red and blue channel should be important parameters.

To recap what I know about libcamera’s way of producing a .jpg or .png:

- Once the user requests a certain exposure time, libcamera tries to approximate it by a shorter exposure time the sensor can realize in hardware, plus an appropriately chosen digital gain which scales the real exposure up to the value the user requested. I currently do not know whether this is realized in the sensor’s hardware (in that case, the pattern might already be noticeable in the raw image).

- Once the raw image has been acquired, the red and blue color gains are applied to it. This is certainly done in libcamera itself, and of course the output will depend on the color gains applied to the raw image. Since you both have quite different setups (and some images were taken with auto white balance active, some not), your results are a little difficult to compare.

- The next step is to apply a color matrix to the data. This step is actually quite complex, mainly because the chosen color matrix depends on a lot of variables estimated by libcamera. The actual color matrix will be available in the image metadata as well. It will not be identical to any of the matrices encoded in the tuning file, as libcamera computes it from these matrices.

- An appropriate contrast curve (which is Rec. 709 in the case of the scientific tuning file) is applied to the data of step 3. At this point in time, the image is “viewable” to a human observer.

- The image is finally stored in a file on your disk. Using .png should result in a larger file which retains all the information available from step 4; using .jpg will smooth the image data somewhat, since .jpg is not a lossless storage format. Specifically, the pattern visible in your test will be less noticeable when storing the data as .jpg.
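To make the order of these steps concrete, here is a toy version in NumPy. It is not libcamera's code. It starts from already-demosaiced linear RGB in [0, 1], skips black level, lens shading, denoise and sharpening, and uses the standard Rec. 709 transfer curve for the contrast step.

```python
import numpy as np


def rec709_oetf(linear: np.ndarray) -> np.ndarray:
    """Rec. 709 transfer curve: linear segment near black, power law above."""
    linear = np.clip(linear, 0.0, 1.0)
    return np.where(linear < 0.018, 4.5 * linear,
                    1.099 * np.power(linear, 0.45) - 0.099)


def toy_pipeline(rgb_linear, digital_gain, red_gain, blue_gain, ccm, bits=8):
    """Toy version of the steps listed above, on linear RGB of shape (H, W, 3)."""
    img = np.asarray(rgb_linear, dtype=np.float64) * digital_gain   # step 1: digital gain
    img = img * np.array([red_gain, 1.0, blue_gain])                 # step 2: colour gains
    img = img @ np.asarray(ccm, dtype=np.float64).T                  # step 3: colour matrix
    img = rec709_oetf(img)                                           # step 4: contrast curve
    return np.round(img * (2 ** bits - 1)).astype(np.uint16)         # step 5: quantise for storage
```

Every step is a per-pixel operation, so in this toy model a spatial pattern can only enter through the input itself or through a spatially varying gain such as a lens shading table.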

Technically, the periodic pattern could be the result of some overflow or calculation gone wrong in the processing pipeline described above. Another possibility is that the lens shading algorithm behaves strangely if it’s not present at all (as is the case with the scientific tuning file).

If you could post the actual captures (not the ImageJ plots) I could have a look with my tools what the ghost pattern is really all about. Ideally, the combination of a .png output with the raw DNG file would be quite helpful.

@PM490 thanks for checking it out - I do think that this pattern is in all of your images, although with too low a frequency and amplitude to really pop out.

Like someone with a hammer to whom everything looks like a nail, I do see the patterns, as I (badly) annotated here:

I have a suspicion what might cause these patterns. The “rpi.alsc” module, responsible for the “adaptive lens shading compensation”, is missing in the scientific tuning file. For good reasons.

According to the available documents describing how the tuning file works, a module which is not present should never be activated.

For the following, we need to take a closer look at how the ALSC module operates. It gets its compensation data from the tuning file. Specifically, there are low-resolution tables like this one:

    "luminance_lut":
    [
        1.548, 1.499, 1.387, 1.289, 1.223, 1.183, 1.164, 1.154, 1.153, 1.169, 1.211, 1.265, 1.345, 1.448, 1.581, 1.619,
        1.513, 1.412, 1.307, 1.228, 1.169, 1.129, 1.105, 1.098, 1.103, 1.127, 1.157, 1.209, 1.272, 1.361, 1.481, 1.583,
        1.449, 1.365, 1.257, 1.175, 1.124, 1.085, 1.062, 1.054, 1.059, 1.079, 1.113, 1.151, 1.211, 1.293, 1.407, 1.488,
        1.424, 1.324, 1.222, 1.139, 1.089, 1.056, 1.034, 1.031, 1.034, 1.049, 1.075, 1.115, 1.164, 1.241, 1.351, 1.446,
        1.412, 1.297, 1.203, 1.119, 1.069, 1.039, 1.021, 1.016, 1.022, 1.032, 1.052, 1.086, 1.135, 1.212, 1.321, 1.439,
        1.406, 1.287, 1.195, 1.115, 1.059, 1.028, 1.014, 1.012, 1.015, 1.026, 1.041, 1.074, 1.125, 1.201, 1.302, 1.425,
        1.406, 1.294, 1.205, 1.126, 1.062, 1.031, 1.013, 1.009, 1.011, 1.019, 1.042, 1.079, 1.129, 1.203, 1.302, 1.435,
        1.415, 1.318, 1.229, 1.146, 1.076, 1.039, 1.019, 1.014, 1.017, 1.031, 1.053, 1.093, 1.144, 1.219, 1.314, 1.436,
        1.435, 1.348, 1.246, 1.164, 1.094, 1.059, 1.036, 1.032, 1.037, 1.049, 1.072, 1.114, 1.167, 1.257, 1.343, 1.462,
        1.471, 1.385, 1.278, 1.189, 1.124, 1.084, 1.064, 1.061, 1.069, 1.078, 1.101, 1.146, 1.207, 1.298, 1.415, 1.496,
        1.522, 1.436, 1.323, 1.228, 1.169, 1.118, 1.101, 1.094, 1.099, 1.113, 1.146, 1.194, 1.265, 1.353, 1.474, 1.571,
        1.578, 1.506, 1.378, 1.281, 1.211, 1.156, 1.135, 1.134, 1.139, 1.158, 1.194, 1.251, 1.327, 1.427, 1.559, 1.611
    ],

defining multiplication constants for luminance (the table above is taken directly from the standard tuning file) and for the Cr/Cb color channels.

Now, in order to be applied to the full-resolution image, this low-resolution data needs to be up-scaled to the full resolution of the raw image. If this up-scaling is done improperly, a periodic pattern similar to the ones displayed above might appear.
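To illustrate what "improperly" could mean, here is a NumPy sketch that compares nearest-neighbour up-scaling of a 12 × 16 gain table, which leaves steps on a regular grid, with bilinear interpolation, which varies smoothly. How libcamera actually interpolates its tables is not shown here. The gain table in the demo is a made-up bowl shape with the same dimensions as the ALSC table above.

```python
import numpy as np


def upscale_nearest(table: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Blocky up-scaling: every output pixel copies its nearest table cell."""
    h, w = table.shape
    rows = (np.arange(out_h) * h) // out_h
    cols = (np.arange(out_w) * w) // out_w
    return table[np.ix_(rows, cols)]


def upscale_bilinear(table: np.ndarray, out_h: int, out_w: int) -> np.ndarray:
    """Smooth up-scaling: bilinear interpolation between table-cell centres."""
    h, w = table.shape
    # Position of each output pixel centre in table-cell coordinates.
    y = np.clip((np.arange(out_h) + 0.5) * h / out_h - 0.5, 0, h - 1)
    x = np.clip((np.arange(out_w) + 0.5) * w / out_w - 0.5, 0, w - 1)
    y0, x0 = np.floor(y).astype(int), np.floor(x).astype(int)
    y1, x1 = np.minimum(y0 + 1, h - 1), np.minimum(x0 + 1, w - 1)
    wy, wx = (y - y0)[:, None], (x - x0)[None, :]
    top = table[np.ix_(y0, x0)] * (1 - wx) + table[np.ix_(y0, x1)] * wx
    bottom = table[np.ix_(y1, x0)] * (1 - wx) + table[np.ix_(y1, x1)] * wx
    return top * (1 - wy) + bottom * wy


# Made-up 12 x 16 bowl-shaped gain table applied to an ideal flat field.
yy, xx = np.mgrid[0:12, 0:16]
table = 1.0 + 0.002 * ((xx - 7.5) ** 2 + (yy - 5.5) ** 2)
flat = np.full((480, 640), 0.5)
blocky = flat * upscale_nearest(table, 480, 640)
smooth = flat * upscale_bilinear(table, 480, 640)
# Gain jumps along the centre row: a few large steps vs. many tiny ones.
print(np.count_nonzero(np.diff(blocky[240])), np.count_nonzero(np.diff(smooth[240])))
```

With nearest-neighbour up-scaling the gain changes only at the cell boundaries, so a flat field picks up a stepped grid with the period of the table cells.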

Specifically, the correction tables in the IMX477 tuning file are 16 × 12 entries in size; this does not quite match the pattern @d_fens detected initially.

It’s just an idea, and there are some points which argue against such a cause. @d_fens initially counted a semi-periodic pattern of 20 × 15, which does not really match the 16 × 12 table size in the tuning file. Also, according to the documentation, modules that are not present in the tuning file should never be active in libcamera’s processing pipeline. But at the moment, it’s the only point I can see where such a semi-periodic pattern might be created.

To advance this discussion, one should first check whether the pattern is already present in the raw data of the sensor. If not, libcamera’s processing is creating this pattern somehow. In that case, one would need to check each suspicious module in turn. The ALSC module would be a prime candidate for me…
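Checking modules "in turn" can be partly automated by writing one copy of a tuning file per module, each with that module removed, and passing each copy via --tuning-file. This sketch assumes the version-2 tuning format, where "algorithms" is a list of single-key objects such as {"rpi.alsc": {...}}. Some modules may be mandatory, and libcamera may refuse such a file or fall back to defaults.

```python
import copy
import json
from pathlib import Path


def algorithm_names(tuning: dict) -> list:
    """Module names in a version-2 tuning file (format assumed)."""
    return [next(iter(entry)) for entry in tuning["algorithms"]]


def without_module(tuning: dict, name: str) -> dict:
    """Deep copy of the tuning data with one module removed."""
    variant = copy.deepcopy(tuning)
    variant["algorithms"] = [e for e in variant["algorithms"] if name not in e]
    return variant


def write_variants(src: str, out_dir: str = ".") -> list:
    """Write one copy of `src` per module, each with that module removed."""
    tuning = json.loads(Path(src).read_text())
    written = []
    for name in algorithm_names(tuning):
        out = Path(out_dir) / f"{Path(src).stem}_no_{name.replace('.', '_')}.json"
        out.write_text(json.dumps(without_module(tuning, name), indent=4))
        written.append(str(out))
    return written
```

Writing to a separate directory leaves the installed tuning files under /usr/share untouched.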

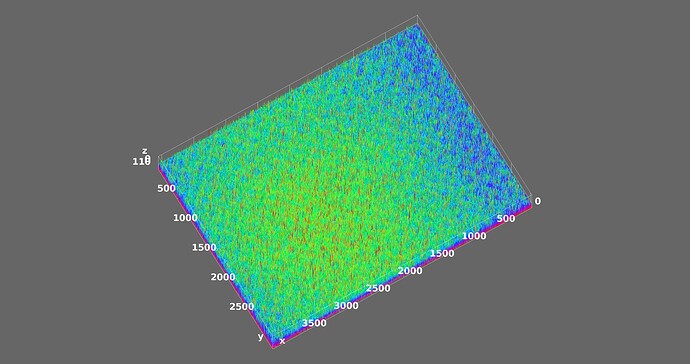

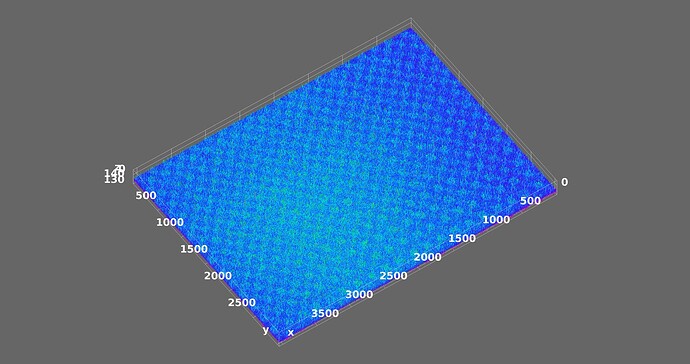

Happy to assist with the captures, since the setup is presently available.

The prior captures were done with a square mask on the sphere output, and the camera was/is not in perfect alignment with the center. In the captures today, I removed the square mask to eliminate the hot square in the plots.

The capture was performed with the following command:

libcamera-still --gain 1.0 --shutter 50 --awbgains=1.58,1.86 --awb=daylight --tuning-file=/usr/share/libcamera/ipa/rpi/vc4/imx477_scientific.json --encoding png --denoise off --sharpness=0 --rawfull 1 --raw 1 --metadata 20231025test1 --output 20231025test1.png

The raw result (dng file) was opened in Darktable (Ubuntu) and exported as an uncompressed 16-bit png. That 16-bit uncompressed png was used to produce the following plot.
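A Darktable export is demosaiced and may include other processing, so as an extra cross-check the DNG mosaic can be read directly and split into its four Bayer planes without any interpolation. This sketch assumes the third-party rawpy package (LibRaw bindings) and its `raw_image_visible`, `raw_colors_visible`, `black_level_per_channel` and `raw_pattern` attributes. The function names are illustrative.

```python
import numpy as np


def bayer_planes(mosaic: np.ndarray, pattern: np.ndarray) -> dict:
    """Split a Bayer mosaic into its four sub-images without demosaicing.
    `pattern` is the 2x2 array of colour indices (as in rawpy's raw_pattern).
    Returns {(row_offset, col_offset): (colour_index, plane)}."""
    return {
        (dy, dx): (int(pattern[dy, dx]), mosaic[dy::2, dx::2])
        for dy in range(2)
        for dx in range(2)
    }


def load_dng_planes(path: str) -> dict:
    """Read a DNG with rawpy and return black-level-corrected Bayer planes."""
    import rawpy  # third-party; imported lazily so bayer_planes works without it

    with rawpy.imread(path) as raw:
        mosaic = raw.raw_image_visible.astype(np.float64)
        colours = raw.raw_colors_visible.copy()
        black = np.asarray(raw.black_level_per_channel, dtype=np.float64)
        pattern = raw.raw_pattern.copy()
    mosaic -= black[colours]          # per-pixel black level, by colour index
    return bayer_planes(mosaic, pattern)
```

Each plane (for example, one of the two green planes) is a plain 2D array that can go straight into a flatness or periodicity check.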

The pattern is not only there, it is quite visible. To aid @cpixip’s request/investigation, all files are available here, with the exception of the 16-bit uncompressed png (whose size GitHub did not accept).

Looks like this confirms that is the case. Good hammer!