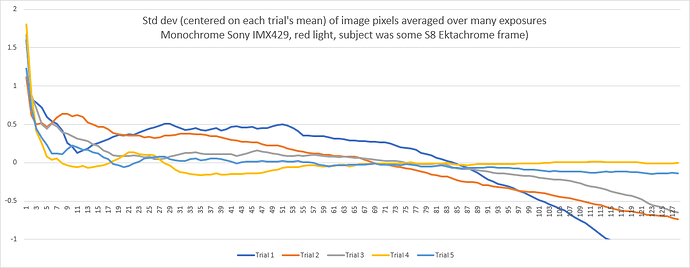

Following up on this, I ran the experiment: after averaging each additional identical exposure into the running result, I measured the standard deviation of the resulting (16-bit, monochrome) image’s pixels, all the way up to 127 combined exposures.
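A minimal Python/NumPy sketch of this kind of measurement, assuming the exposures arrive as 2-D `uint16` arrays. The function name `running_average_stddevs` and the synthetic flat-gray test data are hypothetical stand-ins, not the actual capture code. It keeps an incremental running mean, so all 127 exposures never have to be held in memory at once, and it records the pixel standard deviation after each exposure is folded in.

```python
import numpy as np


def running_average_stddevs(frames):
    """Fold frames into a running average one at a time and return the
    pixel standard deviation of that average after each frame is added."""
    mean = None
    stds = []
    for n, frame in enumerate(frames, start=1):
        frame = np.asarray(frame, dtype=np.float64)  # avoid uint16 overflow
        if mean is None:
            mean = frame.copy()
        else:
            # Incremental mean update: mean_n = mean_{n-1} + (x_n - mean_{n-1}) / n
            mean += (frame - mean) / n
        stds.append(float(mean.std()))
    return stds


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    # Hypothetical test data: a flat mid-gray 16-bit field plus independent
    # Gaussian "sensor noise" (sigma = 200 counts) in every exposure.
    scene = np.full((256, 256), 30000.0)

    def exposure():
        noisy = scene + rng.normal(0.0, 200.0, scene.shape)
        return np.clip(np.rint(noisy), 0, 65535).astype(np.uint16)

    stds = running_average_stddevs(exposure() for _ in range(127))
    for n in (1, 2, 3, 5, 10, 127):
        print(f"{n:4d} exposures: std = {stds[n - 1]:.1f}")
```

With a perfectly flat synthetic scene the only thing left to measure is the noise, so the printed values should fall roughly as 1/√N. A real film frame also contributes its own image content and grain to the standard deviation, and averaging cannot remove those.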

These were exposures of an S8 frame in the ReelSlow8’s upcoming film transport. The film wasn’t under any tension control (just the friction of the unpowered stepper motors on either end, which haven’t been wired up yet), so I think everything seen after the first half-dozen exposures of each trial can be explained by the film loosening ever so slightly over the minute or two it took to run all five trials. (If you look carefully, the droop in each plot is weaker for each successive trial. And in the raw data, before each trial was centered on its median, the trial plots don’t overlap and decrease monotonically.)

In any event, it’s pretty clear that you reach just about the best you’re going to get sensor-noise-wise after only a very few exposures. Three if you’re in a hurry. Or five if you want to be sure.
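A simple back-of-the-envelope model that would produce this kind of early plateau, under assumptions not taken from the measurements above: each exposure carries independent, zero-mean noise with standard deviation $\sigma_n$, while the frame itself (image content, grain, and any fixed-pattern noise, none of which averaging removes) contributes a fixed spatial spread $\sigma_s$. The pixel standard deviation of an $N$-exposure average is then

$$
\sigma_{\text{avg}}(N) = \sqrt{\sigma_s^2 + \frac{\sigma_n^2}{N}}
$$

The averaged noise term shrinks as $1/\sqrt{N}$: by a factor of about 0.577 at $N = 3$, 0.447 at $N = 5$, and 0.089 at $N = 127$. With made-up values $\sigma_s = 1000$ and $\sigma_n = 200$ counts, $\sigma_{\text{avg}}$ goes from about 1019.8 ($N = 1$) to 1006.6 ($N = 3$), 1004.0 ($N = 5$), and 1000.2 ($N = 127$), so most of the achievable improvement has already happened by three to five exposures.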

All of that was great advice, thank you. And now that I’ve seen that the number of exposures it takes to get the sensor noise out is essentially the same as it takes to build a reasonable HDR/Mertens stack for this type of subject, I agree that it seems like we’re able to solve both problems simultaneously. Nice!
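One way to picture “solving both problems simultaneously”: an exposure-fusion blend is, pixel by pixel, a normalized weighted average of the bracketed frames, so independent sensor noise in those frames partly averages out in the same step. The sketch below is a deliberately simplified, single-scale stand-in for Mertens fusion: it uses only a Gaussian well-exposedness weight with an assumed width of 0.2, and omits the contrast weight and the Laplacian-pyramid blending. The function names are hypothetical. For real work, OpenCV’s `cv2.createMergeMertens()` implements the full method.

```python
import numpy as np


def well_exposedness(img, sigma=0.2):
    """Gaussian weight centred on mid-grey (0.5), as in Mertens-style fusion.
    `img` must already be scaled to [0, 1]; sigma is an assumed width."""
    return np.exp(-((img - 0.5) ** 2) / (2.0 * sigma ** 2))


def fuse_single_scale(exposures, sigma=0.2, eps=1e-12):
    """Blend a bracket of monochrome exposures (each scaled to [0, 1]).

    Simplified single-scale sketch: per-pixel weights come only from
    well-exposedness and are normalised to sum to 1 across the bracket,
    so every output pixel is a weighted average of the input pixels.
    """
    stack = np.stack([np.asarray(e, dtype=np.float64) for e in exposures])
    weights = well_exposedness(stack, sigma) + eps  # eps guards against 0/0
    weights /= weights.sum(axis=0, keepdims=True)
    return (weights * stack).sum(axis=0)


if __name__ == "__main__":
    # Hypothetical bracket: synthetic frames already scaled to [0, 1]
    # (e.g. 16-bit counts divided by 65535), each with independent noise.
    rng = np.random.default_rng(0)
    truth = np.linspace(0.0, 1.0, 256).reshape(1, -1).repeat(64, axis=0)
    bracket = [
        np.clip(truth * gain + rng.normal(0.0, 0.01, truth.shape), 0.0, 1.0)
        for gain in (0.5, 0.75, 1.0, 1.5, 2.0)
    ]
    fused = fuse_single_scale(bracket)
    print(fused.shape, float(fused.min()), float(fused.max()))
```

If all the frames happen to share the same exposure, the weights become nearly equal and the blend reduces to a plain average, with the noise falling by roughly 1/√K for K frames.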