Hi Dominique (@dgalland) - here are some comments.

- The “trick” with the rotating exposure times actually came out of a discussion with David Plowman, the maintainer of the picamera2 library. He is the one who came up with the basic idea.
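
Below is one way such a rotating-exposure scheme can be organized, sketched under assumptions. The camera cycles continuously through a fixed list of exposure times. Because a new setting only takes effect a few frames later, each frame is sorted by the exposure time reported with that frame, not by the value requested last. The ladder values, the 10 % tolerance and the names `EXPOSURES_US`, `assign_exposure` and `collect_stacks` are hypothetical. The frame stream is simulated. With picamera2, the reported value would come from the request metadata (the libcamera `"ExposureTime"` entry, in microseconds), but that wiring needs camera hardware and is left out here.

```python
from typing import Iterable, Optional

# Hypothetical exposure ladder in microseconds that the camera cycles through.
EXPOSURES_US = [2000, 4000, 8000, 16000]
# Assumed relative tolerance: the reported exposure rarely equals the request exactly.
TOLERANCE = 0.1


def assign_exposure(reported_us: float) -> Optional[int]:
    """Index of the ladder entry closest to the reported exposure,
    or None if no entry lies within the relative tolerance."""
    best = min(range(len(EXPOSURES_US)),
               key=lambda i: abs(EXPOSURES_US[i] - reported_us))
    if abs(EXPOSURES_US[best] - reported_us) <= TOLERANCE * EXPOSURES_US[best]:
        return best
    return None


def collect_stacks(frames: Iterable[tuple[float, str]]) -> list[dict[int, str]]:
    """Group (reported_exposure_us, image) pairs into complete exposure stacks.

    A stack is emitted once every ladder entry has been seen; frames whose
    exposure matches no entry (e.g. while a new setting is still settling)
    are skipped.
    """
    stacks: list[dict[int, str]] = []
    current: dict[int, str] = {}
    for reported_us, image in frames:
        idx = assign_exposure(reported_us)
        if idx is None:
            continue
        current[idx] = image  # a repeat of the same exposure replaces the older frame
        if len(current) == len(EXPOSURES_US):
            stacks.append(current)
            current = {}
    return stacks


# Simulated stream: the first frame carries an exposure from before the cycle
# started, the others jitter slightly around the requested values.
stream = [(1500.0, "a"), (2010.0, "f0"), (3990.0, "f1"),
          (8050.0, "f2"), (15900.0, "f3"), (2000.0, "g0")]
print(collect_stacks(stream))  # [{0: 'f0', 1: 'f1', 2: 'f2', 3: 'f3'}]
```

Matching on the reported exposure, rather than counting frames, keeps the stacks consistent even if the pipeline drops or delays a frame.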

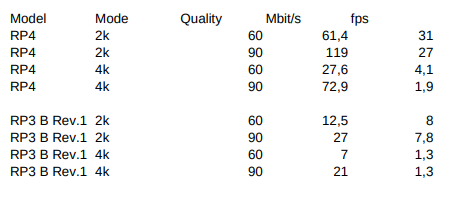

I timed an RP4 and an RP3B, Rev. 1. Here are my results:

Mbit/s is the amount of network traffic. The RP4 is actually my scanner unit, and its camera was looking at a film frame; the RP3B camera was just looking out of the window, and I did not bother to focus it precisely. My fps clocking is not very precise and fluctuates a little. But overall, I consider the numbers not too bad. At least they did not prompt me to try to optimize my code.
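
For anyone who wants to produce comparable numbers, fps and Mbit/s can be derived from a log of frame arrival times and payload sizes. This is a sketch; the function name `throughput` and the log format are hypothetical.

```python
def throughput(timestamps_s: list[float], sizes_bytes: list[int]) -> tuple[float, float]:
    """Return (frames per second, Mbit/s) over the logged interval.

    timestamps_s: arrival time of each frame in seconds (monotonic clock).
    sizes_bytes:  payload size of each frame in bytes.
    The first timestamp only marks the start of the interval, so the bytes
    of that first frame are not counted.
    """
    if len(timestamps_s) < 2 or len(timestamps_s) != len(sizes_bytes):
        raise ValueError("need at least two frames and one size per frame")
    elapsed = timestamps_s[-1] - timestamps_s[0]
    frames = len(timestamps_s) - 1
    fps = frames / elapsed
    mbit_per_s = sum(sizes_bytes[1:]) * 8 / elapsed / 1e6  # bytes -> bits -> Mbit
    return fps, mbit_per_s


fps, mbps = throughput([0.0, 0.1, 0.2, 0.3, 0.4], [250_000] * 5)
print(f"{fps:.1f} fps, {mbps:.1f} Mbit/s")  # 10.0 fps, 20.0 Mbit/s
```

Averaging over a longer log smooths out the kind of fluctuation mentioned above.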

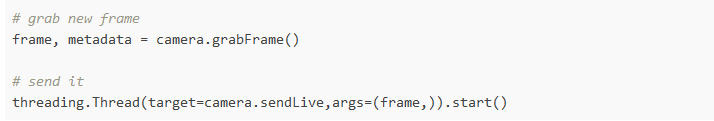

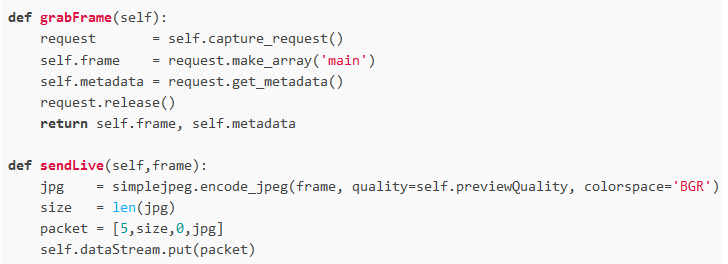

The code is really simple. In the main loop, it looks like this:

`camera` is an object derived from the Picamera2 object, with the two subroutines:

Well, that is exactly the topic of this thread. The short answer for me: a 12-bit raw image is preferable to a multi-exposure capture for several reasons:

- For most scenes, the results of exposure fusion and raw capture are comparable. To my own surprise, that was already the outcome of a test I did in July 2020, and the discussions and test results in this thread point the same way.

- Capturing a single raw is much less demanding than capturing several exposures of a single frame. With several exposures, the frame must stay still to sub-pixel precision across all of them, or elaborate post-processing is required.

- If the multi-exposure capture is used in an exposure fusion setup, you are going to experience tiny color shifts, which will cost you a lot of work in post-processing.

- The drawback of working with only a single 12-bit raw is hidden in the shadows. There, sensor noise spoils the image, especially in the red channel (as this is usually the one with the highest gain). For all practical purposes, this can be resolved by applying a denoising step. But again, this noise hides in the shadows, which are normally not the parts of the image viewers focus on. If you need to boost severely underexposed footage into a viewable result, a single raw capture probably won’t make you happy.
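
As an illustration of such a denoising step, here is a plain 3×3 median filter applied to a single channel (for example, the red one). It is only a stand-in. Dedicated denoisers use far more elaborate methods, and the function name `median3x3` is hypothetical.

```python
from statistics import median_low


def median3x3(channel: list[list[int]]) -> list[list[int]]:
    """Apply a 3x3 median filter to one image channel given as a list of rows.

    Border pixels use only the neighbours that exist, so the output has the
    same size as the input. median_low keeps integer values when a border
    window has an even number of pixels.
    """
    h, w = len(channel), len(channel[0])
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            window = [channel[j][i]
                      for j in range(max(0, y - 1), min(h, y + 2))
                      for i in range(max(0, x - 1), min(w, x + 2))]
            out[y][x] = median_low(window)
    return out


# A flat dark patch with one noisy outlier in the middle.
patch = [[10] * 5 for _ in range(5)]
patch[2][2] = 200
print(median3x3(patch)[2][2])  # 10
```

A median filter removes isolated outliers without blurring edges as strongly as a box average, but it does little against fine-grained noise spread over many pixels.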

A somewhat more detailed discussion follows. First, it is absolutely important to understand that exposure fusion is a way to create nice-looking images from an exposure stack. Nothing in the whole algorithm ensures proper colors. In fact, I have given a few examples above where the exposure-fused result (EXP) differs from the real colors displayed by the developed raw (RAW). Exposure fusion even enhances colors and texture to a certain degree. That is all fine if you like what you achieve with exposure fusion; it’s a personal decision.
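
To make the point about colors concrete, here is a heavily simplified, per-pixel version of the weighting used in Mertens-style exposure fusion. It keeps only the well-exposedness and saturation terms and leaves out the contrast term and the Laplacian-pyramid blending of the real algorithm. The weights reward how "nice" each exposure looks at that pixel, not radiometric correctness. As soon as one exposure in the stack is clipped in some channel, the weighted mix shifts the channel ratios, and with them the hue. The function names and the test pixel are hypothetical.

```python
import math


def well_exposedness(rgb: tuple[float, float, float], sigma: float = 0.2) -> float:
    """Gaussian closeness of each channel to mid-gray (0.5), multiplied over channels."""
    return math.prod(math.exp(-((c - 0.5) ** 2) / (2 * sigma ** 2)) for c in rgb)


def saturation(rgb: tuple[float, float, float]) -> float:
    """Standard deviation across the three channels."""
    mean = sum(rgb) / 3
    return math.sqrt(sum((c - mean) ** 2 for c in rgb) / 3)


def fuse_pixel(stack: list[tuple[float, float, float]], eps: float = 1e-12) -> tuple[float, ...]:
    """Naive per-pixel fusion: weighted average of the exposures, with weights
    well_exposedness * saturation normalised to sum to one."""
    weights = [well_exposedness(p) * saturation(p) + eps for p in stack]
    total = sum(weights)
    return tuple(sum(w * p[c] for w, p in zip(weights, stack)) / total for c in range(3))


# Hypothetical scene pixel with r/g = 2, captured at 1x, 2x and 4x exposure,
# clipped at 1.0 (the 4x exposure clips the red channel).
scene = (0.4, 0.2, 0.1)
stack = [tuple(min(1.0, k * c) for c in scene) for k in (1, 2, 4)]
fused = fuse_pixel(stack)
print(fused[0] / fused[1])  # noticeably below the scene's 2.0
```

Without clipping, all exposures share the same channel ratios and this simplified mix would preserve them. The full algorithm's pyramid blending introduces further local changes on top of that.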

Well, things are complicated. First, there is no concept of “black” in color-reversal film, only a concept of “very dark”. And even that very dark area can be pushed to a medium gray level with a long enough exposure time. I assume that by “black” you mean pixels whose value is zero, or at least close to zero? These pixels are indeed mostly useless, but mainly because sensor noise and quantization noise overwhelm the signal you are interested in, namely the image structure in those dark areas. So in reality, a 12-bit sensor will not give you a 12-bit range to work in. The upper limit is at 4095, and the lower limit depends on how soon you are going to say: “this looks awful”.
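
The lower limit can be put into numbers with a rough estimate. Take a noise floor made of read noise plus uniform quantization noise (1/√12 LSB), and define the darkest acceptable level as some minimum signal-to-noise ratio times that floor. The usable range in stops is then log2((white − black) / (min_snr · noise)). The read noise of 3 DN used below is a placeholder, not a measurement of any Raspberry Pi sensor. Shot noise is ignored, and `black_dn` should be set to the sensor's black-level offset if the raw carries one. The function name `usable_stops` is hypothetical.

```python
import math


def usable_stops(read_noise_dn: float, min_snr: float = 1.0,
                 white_dn: float = 4095.0, black_dn: float = 0.0) -> float:
    """Usable range in stops between clipping and the darkest acceptable level.

    The noise floor combines read noise with uniform quantization noise
    (1/sqrt(12) LSB); shot noise is ignored. The darkest acceptable level
    is min_snr times that floor, i.e. a personal "this looks awful" point.
    """
    noise_floor = math.sqrt(read_noise_dn ** 2 + 1.0 / 12.0)
    return math.log2((white_dn - black_dn) / (min_snr * noise_floor))


print(f"{usable_stops(3.0):.1f}")              # 10.4
print(f"{usable_stops(3.0, min_snr=4.0):.1f}")  # 8.4
```

Each doubling of the demanded SNR costs exactly one stop at the dark end, which is why the answer depends so much on personal taste.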

As you are mapping a roughly 12-bit range into the smaller 8-bit range of your output, you still have some headroom in the shadows which you can use. It’s not large and might not be sufficient for your footage or your taste, but I think I will switch to single raw captures in the future. Before I finally do this, I need to work out the color science behind RP raws; there are still some unanswered questions here.
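
One quick way to see where this shadow headroom comes from is to count how many distinct 8-bit output codes the darkest 64 raw levels end up in. The comparison is between straight linear scaling and a simple power-law curve that lifts the shadows. The plain 2.2 power law is an assumption chosen for illustration. Real raw developers use their own tone curves (sRGB, for instance, has a linear segment near black). The function names are hypothetical.

```python
def to_8bit_linear(v: int, white: int = 4095) -> int:
    """Straight linear scaling of a 12-bit value to 0..255."""
    return round(255 * v / white)


def to_8bit_gamma(v: int, white: int = 4095, gamma: float = 2.2) -> int:
    """Scaling through a power-law curve (exponent 1/gamma) that lifts the shadows."""
    return round(255 * (v / white) ** (1.0 / gamma))


shadows = range(0, 64)  # the darkest 64 of 4096 raw levels
linear_codes = {to_8bit_linear(v) for v in shadows}
gamma_codes = {to_8bit_gamma(v) for v in shadows}
print(len(linear_codes))  # 5
print(len(gamma_codes))   # many more distinct shadow codes
```

Under linear scaling, the darkest 64 raw levels collapse into five output codes. Under the lifting curve, they spread over dozens of codes, and that spread is where the extra bits of the raw pay off.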

PS: Capture your example image above as a raw (with the exposure time set so that no highlights are blown out) and load it in RawTherapee. Click on the left-most tab in the right column to reveal the “Exposure” tab, and play around with the controls. In particular, click on the circle symbol of the “Shadows/Highlights” section: this turns on that processing step, and the symbol changes to an “On” symbol. Then move the slider called “Shadows” (initially zero) to the right and watch what happens to your scan.