@Manuel_Angel @jankaiser: guys, your experiments are very interesting!

A few comments…

A raw capture needs a finely tuned exposure. You need to make sure that the highlights do not burn out (compare this old post here). In fact, the exposure values chosen in your examples take care of that. You want to keep the safety margin as small as possible, because every stop of underexposure leads to missing or noisy data in the shadow regions. Shortening the exposure time introduces a little quantization noise in dark image areas; however, as the noise caused by film grain is much stronger, nobody will notice.
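To put some numbers on the shadow argument, here is a small sketch that counts how many raw code values are available in each stop below clipping. It assumes an idealised linear 12-bit encoding and ignores the sensor's black-level offset and noise, so the numbers are upper bounds, not measurements of the HQ camera.

```python
def codes_per_stop(bits: int, stops: int) -> list[int]:
    """Distinct raw code values in each stop below clipping.

    Idealised linear sensor: stop 1 spans [full/2, full), stop 2 spans
    [full/4, full/2), and so on. Black level and noise are ignored.
    """
    full_scale = 2 ** bits
    return [full_scale >> k for k in range(1, stops + 1)]


def codes_in_darkest_stop(bits: int, scene_stops: int, underexposure_stops: int) -> int:
    """Code values left for the darkest stop of a scene spanning `scene_stops` stops.

    Every extra stop of safety margin pushes the darkest scene stop one stop
    further down, halving the number of code values that describe it.
    """
    return (2 ** bits) >> (scene_stops + underexposure_stops)


print(codes_per_stop(12, 6))            # [2048, 1024, 512, 256, 128, 64]
print(codes_in_darkest_stop(12, 8, 0))  # 16
print(codes_in_darkest_stop(12, 8, 2))  # 4
```

The halving per stop is why the margin should be as small as possible: two stops of extra headroom leave the darkest scene stop with a quarter of the levels.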

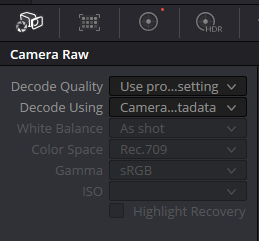

The development of the raw image is a two-stage process. First, you transform the raw color values into “real” color values with camera-specific color matrices. Normally, the .dng file contains at least two color matrices for two different color temperatures. Once you pick a white balance (and a corresponding color temperature), the color matrix actually used during development is interpolated from the two color matrices embedded in the .dng. I have not followed the latest steps of libcamera development too closely, but I think the two color matrices embedded in an HQ camera raw are taken from the data/experiments of Jack Hogan. These should be fine for all practical purposes.
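A minimal sketch of that interpolation step, assuming the DNG convention of weighting the two matrices linearly in inverse color temperature (1/K). The matrices below are placeholders, not the values stored in an HQ camera .dng; 2856 K (Standard Illuminant A) and 6504 K (D65) are simply typical calibration illuminants.

```python
Matrix = list[list[float]]


def interpolate_color_matrix(cct: float, cct1: float, m1: Matrix,
                             cct2: float, m2: Matrix) -> Matrix:
    """Blend two 3x3 color matrices for a correlated color temperature `cct`.

    Weighting is linear in 1/K; outside [cct1, cct2] the nearer matrix is
    returned unchanged. Assumes cct1 < cct2.
    """
    if cct <= cct1:
        return [row[:] for row in m1]
    if cct >= cct2:
        return [row[:] for row in m2]
    g = (1 / cct - 1 / cct2) / (1 / cct1 - 1 / cct2)  # 1 at cct1, 0 at cct2
    return [[g * a + (1 - g) * b for a, b in zip(r1, r2)]
            for r1, r2 in zip(m1, m2)]


# Hypothetical placeholder matrices, NOT real HQ camera calibration data
M_STD_A = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
M_D65 = [[2.0, 0.0, 0.0], [0.0, 2.0, 0.0], [0.0, 0.0, 2.0]]

# Temperature halfway between the two illuminants when measured in 1/K
cct_mid = 2 / (1 / 2856 + 1 / 6504)
print(round(cct_mid))
print(interpolate_color_matrix(cct_mid, 2856, M_STD_A, 6504, M_D65)[0][0])  # approximately 1.5
```

Interpolating in 1/K rather than in kelvin means the halfway point lies near 4000 K instead of near 4700 K; this matches how perceived color shifts are spaced more evenly in inverse temperature.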

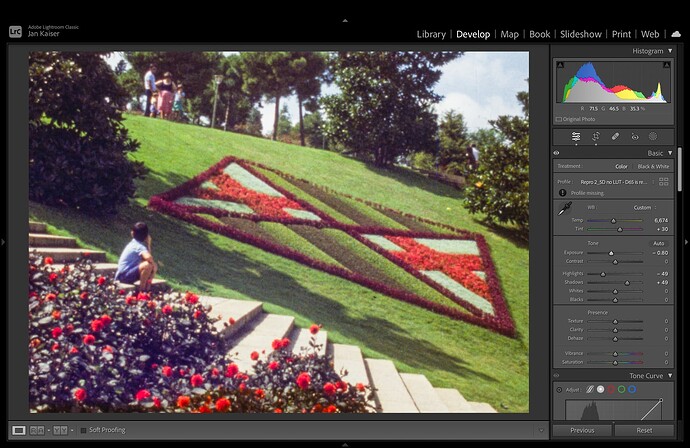

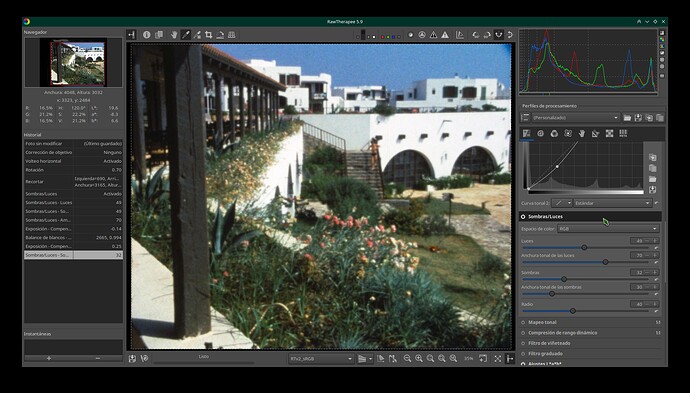

The second stage in the development of the raw image is the intensity mapping: both of you employ a noticeably S-shaped curve by setting the highlight and shadow values appropriately. As a result, both shadow and highlight areas have reduced contrast compared to the mid-tone range, which ensures that you still see image features there without highlights burning out or shadows drowning in the dark.
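To illustrate what an S-shaped mapping does to local contrast, here is a sketch using a rescaled logistic function. This is an assumed, generic curve chosen for clarity, not the curve produced by the highlight/shadow sliders of any particular raw converter.

```python
import math


def s_curve(x: float, strength: float = 6.0) -> float:
    """Map a normalised intensity x in [0, 1] through a symmetric S-shaped curve.

    A logistic function centred on mid-grey, rescaled so that 0 -> 0 and 1 -> 1.
    Larger `strength` steepens the mid-tones and flattens shadows/highlights.
    """
    def logistic(v: float) -> float:
        return 1.0 / (1.0 + math.exp(-strength * (v - 0.5)))

    lo, hi = logistic(0.0), logistic(1.0)
    return (logistic(x) - lo) / (hi - lo)


def slope(x: float, h: float = 1e-4) -> float:
    """Numerical derivative of the curve, i.e. the local contrast gain at x."""
    a, b = max(x - h, 0.0), min(x + h, 1.0)
    return (s_curve(b) - s_curve(a)) / (b - a)


for x in (0.0, 0.5, 1.0):
    print(f"x={x:.1f}  output={s_curve(x):.3f}  contrast gain={slope(x):.2f}")
```

With the default strength the gain at mid-grey comes out above 1 while the gain at both ends drops well below 1, which is exactly the trade described above: more mid-tone contrast, compressed but still visible shadows and highlights.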

The Mertens path (exposure fusion) is quite different. The jpgs used in the exposure fusion are created by libcamera, based on the tuning file for this camera. Typically, the tuning file contains more color matrices than the two stored in the .dng file. Normally, libcamera itself estimates a color temperature and calculates the appropriate color matrix from that estimate. If you work with preset color gains, a fixed color matrix is chosen.
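Here is a sketch of how a color matrix might be picked from a tuning-file table with several entries. I am assuming linear interpolation in color temperature between the two bracketing entries and clamping at the ends; check the libcamera sources for the exact rule. The table values are hypothetical, and the matrices are stored flat as 9 numbers.

```python
def ccm_for_ct(ct: float, table: list[tuple[float, list[float]]]) -> list[float]:
    """Pick a 3x3 color matrix (stored flat, 9 values) for color temperature `ct`.

    `table` holds (color temperature, matrix) pairs sorted by temperature.
    Below the first / above the last entry the end matrix is used; in between,
    the two bracketing matrices are blended linearly in color temperature.
    """
    if ct <= table[0][0]:
        return list(table[0][1])
    if ct >= table[-1][0]:
        return list(table[-1][1])
    for (ct_lo, m_lo), (ct_hi, m_hi) in zip(table, table[1:]):
        if ct_lo <= ct <= ct_hi:
            lam = (ct - ct_lo) / (ct_hi - ct_lo)
            return [(1 - lam) * a + lam * b for a, b in zip(m_lo, m_hi)]
    raise ValueError("table must be sorted by color temperature")


# Hypothetical table, NOT taken from any real tuning file
TABLE = [(2500.0, [1.0] * 9), (4000.0, [2.0] * 9), (6500.0, [3.0] * 9)]

# Automatic mode: the estimated temperature can drift from frame to frame,
# and the matrix drifts with it. Preset gains pin the temperature, so every
# frame gets the same matrix.
print(ccm_for_ct(5250.0, TABLE))
```

This is the reason the two paths already differ in color: the .dng path blends two matrices, while the jpg path chooses from a larger, differently calibrated table.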

So: already the color processing is different in the two scanning paths you are comparing against each other.

The second stage, namely mapping the linear intensities of the raw image data into the non-linear jpg colors, is again handled by a section of the sensor’s tuning file, the “contrast curve”. The curve used in the standard tuning file is non-standard and essentially leads to a very contrasty image with increased color saturation and reduced shadow and highlight detail. That contrast curve was probably chosen to suit the taste of the typical user. There is a second tuning file for the HQ sensor which features a standard Rec.709 contrast curve. Jpgs created with this alternative tuning file have reduced color saturation in the midtones, but better contrast definition in the highlights and shadows.
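For reference, this is the ITU-R BT.709 transfer function (OETF) that a "standard Rec.709 curve" refers to. I am assuming the alternative tuning file approximates this curve; I have not checked its sample points against the formula.

```python
def rec709_oetf(L: float) -> float:
    """ITU-R BT.709 opto-electronic transfer function for linear L in [0, 1].

    A short linear segment near black (slope 4.5) avoids an infinite slope
    at zero; above 0.018 a power law with exponent 0.45 takes over.
    """
    if L < 0.018:
        return 4.5 * L
    return 1.099 * L ** 0.45 - 0.099


for L in (0.01, 0.18, 0.5, 0.9):
    print(f"linear {L:.2f} -> encoded {rec709_oetf(L):.3f}")
```

Linear mid-grey (0.18) maps to roughly 0.409. Because the curve has no S-shape of its own, highlights and shadows keep more gradation than with a contrast-boosted curve, at the price of flatter-looking mid-tones.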

Whatever is used to produce the jpgs, the exposure fusion algorithm is designed to take data from the “best” image for each region and merge these into a single output image. It does so in a way that takes into account how the human visual system processes image data. The result is an image that has image data in every area where at least one of the input scans shows data.
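A heavily simplified per-pixel sketch of the Mertens weighting idea: each exposure of a pixel is weighted by how well exposed and how saturated it is, then the weights are normalised. The contrast term (a Laplacian filter) and the Laplacian-pyramid blending of the full algorithm are deliberately left out, so this naive version would produce seams on real images. OpenCV's `cv2.createMergeMertens` implements the complete method.

```python
import math

SIGMA = 0.2  # width of the well-exposedness Gaussian, as suggested in the Mertens et al. paper

RGB = tuple[float, float, float]


def well_exposedness(rgb: RGB) -> float:
    """Close to 1 for channel values near mid-grey (0.5), near 0 for clipped or black ones."""
    return math.prod(math.exp(-((c - 0.5) ** 2) / (2 * SIGMA ** 2)) for c in rgb)


def saturation(rgb: RGB) -> float:
    """Standard deviation across R, G, B: grey or clipped-white pixels score 0."""
    mean = sum(rgb) / 3
    return math.sqrt(sum((c - mean) ** 2 for c in rgb) / 3)


def fuse_pixel(exposures: list[RGB], eps: float = 1e-12) -> tuple[float, ...]:
    """Naive weighted average of the same pixel taken from several exposures.

    Weight = well-exposedness x saturation (+ eps to avoid division by zero),
    normalised so the weights sum to 1.
    """
    weights = [well_exposedness(p) * saturation(p) + eps for p in exposures]
    total = sum(weights)
    return tuple(sum(w * p[ch] for w, p in zip(weights, exposures)) / total
                 for ch in range(3))


# Same film pixel in a long (clipped) and a short exposure
long_exp = (1.0, 1.0, 1.0)
short_exp = (0.55, 0.45, 0.35)
print(fuse_pixel([long_exp, short_exp]))  # lands very close to short_exp
```

The clipped white pixel has zero saturation and almost zero well-exposedness, so the fused value is taken almost entirely from the exposure that actually recorded detail.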

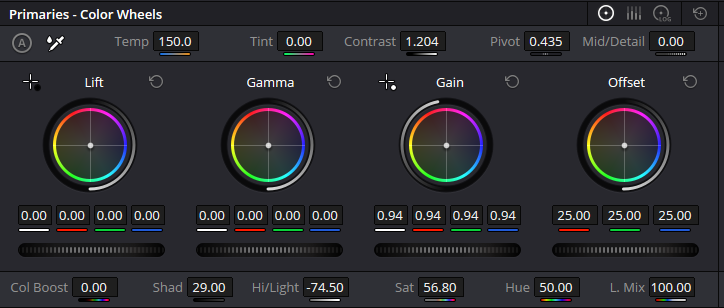

One specific issue with the Mertens exposure fusion algorithm is that the value range of the output image usually exceeds the standard range [0, 1]. Adjusting for this reduces the contrast of the image even further, and it also reduces the color saturation again.
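A small numeric sketch of why bringing the fused output back into [0, 1] costs contrast. It uses a plain min/max rescale, which is only one possible adjustment, and the fused values are made up for illustration.

```python
def rescale_to_unit(values: list[float]) -> list[float]:
    """Linearly map values so that their minimum becomes 0 and their maximum 1."""
    lo, hi = min(values), max(values)
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical fused intensities that overshoot [0, 1] at both ends
fused = [-0.05, 0.40, 0.50, 1.12]
scaled = rescale_to_unit(fused)

midtone_step_before = fused[2] - fused[1]
midtone_step_after = scaled[2] - scaled[1]
# The mid-tone step shrinks by the factor 1 / (1.12 - (-0.05)) = 1 / 1.17
print(midtone_step_before, midtone_step_after)
```

Because the same scale factor also applies to the differences between the R, G and B values of a pixel, color saturation drops by the same factor as the tonal contrast.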

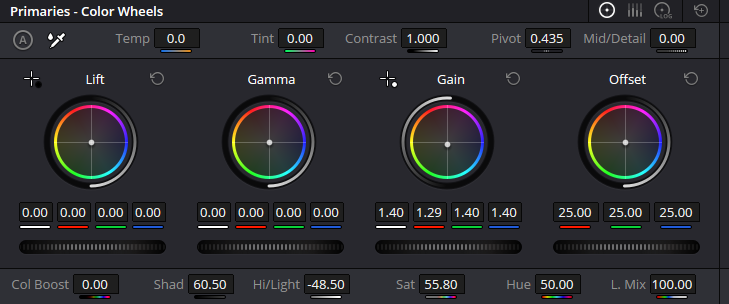

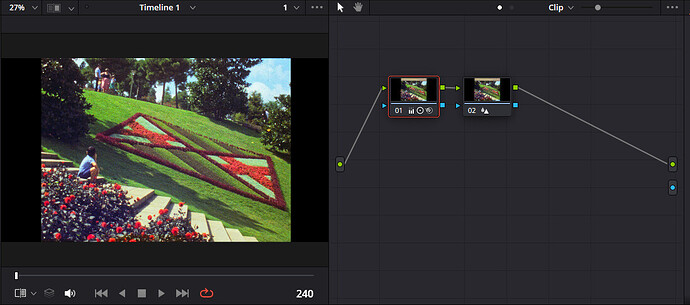

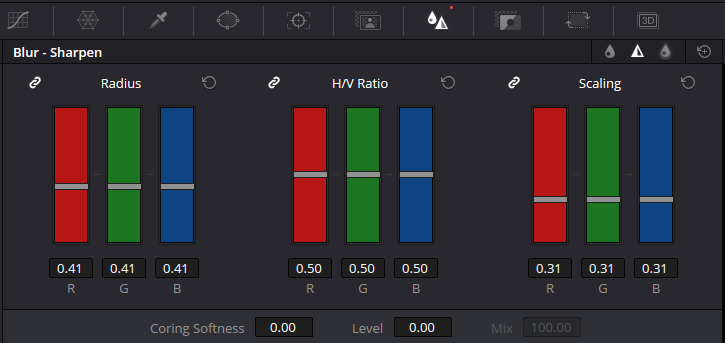

In the end, the output of the Mertens path is more comparable to a log image and needs to be treated appropriately in postproduction (adjusting contrast and saturation, for example). Comparing the two approaches is difficult, and things are constantly changing. The Mertens path is mostly automatic (with appropriate parameter settings), but time-consuming in capture as well as in postprocessing. The raw processing path only became available recently, thanks to improvements in the Raspberry Pi software. It should be much faster, especially if the raw input feature of, for example, DaVinci Resolve is taken into account.
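As a rough idea of what "adjusting contrast and saturation" means for such flat output, here is a per-pixel sketch. The pivot and gain values are hypothetical, and grading tools like DaVinci Resolve use their own, more elaborate operators.

```python
REC709_LUMA = (0.2126, 0.7152, 0.0722)  # Rec.709 luma weights for R, G, B


def grade(rgb: tuple[float, float, float], contrast: float = 1.2,
          saturation: float = 1.3, pivot: float = 0.5) -> tuple[float, ...]:
    """Simple contrast and saturation boost for one RGB pixel in [0, 1]."""
    # Contrast: push each channel away from a mid-grey pivot
    rgb = [pivot + (c - pivot) * contrast for c in rgb]
    # Saturation: push each channel away from the pixel's luma
    luma = sum(w * c for w, c in zip(REC709_LUMA, rgb))
    rgb = [luma + (c - luma) * saturation for c in rgb]
    # Clip back into the displayable range
    return tuple(min(1.0, max(0.0, c)) for c in rgb)


print(grade((0.6, 0.5, 0.4)))  # spread between channels grows
print(grade((0.5, 0.5, 0.5)))  # neutral mid-grey stays (almost exactly) put
```

Because the saturation step works relative to luma, neutral greys are left alone while colored pixels gain chroma, which roughly undoes the loss introduced by the rescaling step.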

Due to its adaptive behaviour, the Mertens approach should provide better detail in highlight and shadow areas; I think in very dark footage, Mertens will have a small advantage over the raw-based path. Whether that is worth the increased workload during postprocessing will probably depend on personal preferences.

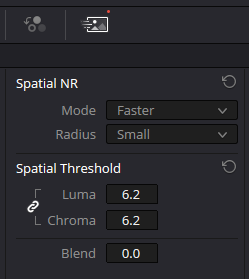

The major drawback of a raw-based approach is the limited dynamic range that 12 bits per channel can accommodate. With a properly chosen exposure value, this will not be noticeable for most of our footage. Even with footage where a multi-exposure scan might have an advantage, the film grain of our small-format stock masks the difference to such an extent that it will not be noticeable. A grain-reduction step, which is recommended anyway for such small formats, will iron out even these small differences.
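The arithmetic behind the 12-bit limit and what bracketing adds can be sketched as follows. These are theoretical upper bounds only; in practice, sensor noise keeps the usable range of a single exposure below the encoding limit. The exposure times in the example are hypothetical.

```python
import math


def encoding_range_stops(bits: int) -> float:
    """Ratio of the largest code value to one code step, in stops (upper bound)."""
    return math.log2(2 ** bits - 1)


def bracketed_range_stops(bits: int, shortest_s: float, longest_s: float) -> float:
    """Upper bound when exposures from `shortest_s` to `longest_s` are merged.

    The longest exposure records shadows log2(longest/shortest) stops deeper
    than the shortest one, so the encodable range grows by that amount.
    """
    return encoding_range_stops(bits) + math.log2(longest_s / shortest_s)


print(f"{encoding_range_stops(12):.2f} stops")                       # single 12-bit raw
print(f"{bracketed_range_stops(12, 1 / 1000, 1 / 250):.2f} stops")   # two exposures, 2 stops apart
```

Whether those extra stops are visible depends on whether the film's density range and grain leave anything for them to record, which is the point made above.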