Hi Pablo (@PM490) - I must confess that I have difficulty properly understanding your approach.

For starters, if I understand your .tiff approach correctly, you are throwing away all the additional information available in the capture (black level, white balance, CCM) in order to hand-tune a processing path so that it looks identical to the standard way of working with raw files? If so, why not just work with the reference?

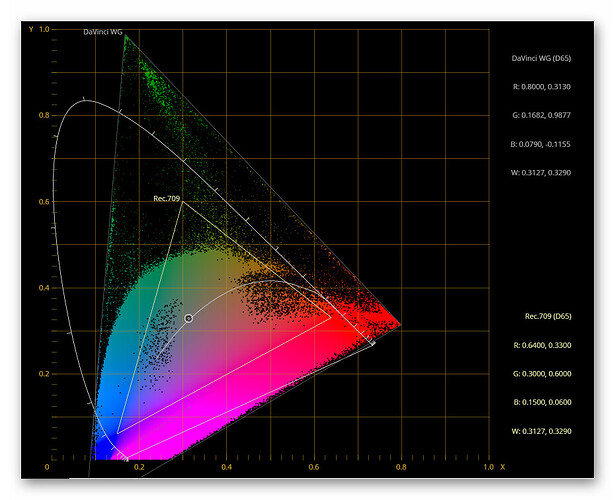

Well, that is actually not the case - DaVinci WG is the larger working space. You can check this by using the “CIE Chromaticity” setting on the “Scopes” tab which allows you to display two different color spaces with their parameters (see below).

Note that in the approach I sketched, the timeline working space matters only mildly. Only the way certain sliders/modules work will change, not so much the overall result. That is built into DaVinci, as it uses 32-bit floats with no clipping (mostly; stay away from LUTs, for example).
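Why the absence of clipping matters can be shown with a tiny numpy sketch. It is a deliberate simplification: the "LUT" is modeled as nothing more than a clip to the range 0 to 1, which is an assumption for illustration only; real LUTs do more than that.

```python
import numpy as np

# Hypothetical linear pixel values: 1.3 is brighter than "white", -0.05 is a
# slightly out-of-gamut component. Both are representable in 32-bit float.
pixels = np.array([0.18, 1.3, -0.05], dtype=np.float32)
gain = np.float32(2.0)  # e.g. an exposure push by one stop

# Float path: push, then pull back; nothing is clipped in between
float_path = (pixels * gain) / gain

# LUT-like path (simplified assumption): the intermediate result is clipped to [0, 1]
clipped_path = np.clip(pixels * gain, 0.0, 1.0) / gain

print(float_path)    # all three values survive unchanged
print(clipped_path)  # 1.3 comes back as 0.5 and -0.05 as 0.0
```

Because every intermediate value survives in the float path, a later node (or the output CST) can still undo or reshape what an earlier node did, which is why the choice of working space mostly changes how the controls feel rather than the final result.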

Continuing:

I would say that it has, actually in two different ways. First of all, it’s what the camera saw through its given Bayer filter array when looking at the scene, so in a sense a very well-defined image. And in fact, the mapping from raw Bayer data to an RGB image is based on that well-defined mapping of spectral data. Granted, it’s complicated:

- Adjust for illumination with appropriate whitebalancing coefficients (red and blue channel gains).

- Transform to the device-independent, observer-centered XYZ space.

- Transform from XYZ to the desired output color space.

(I skipped a few things here…) In fact, the Color Matrix 1 tag hidden in the .dng file describes exactly the transformation from linear sRGB to camera RGB, while the As Shot Neutral tag specifies the transformation from camera RGB to raw Bayer data (together with the black level and white level data in the .dng).
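The chain described above can be sketched in a few lines of numpy. This is a minimal sketch under stated assumptions: it follows the model given in the text (normalized raw = As Shot Neutral × camera RGB, camera RGB = Color Matrix 1 × linear sRGB), skips demosaicing by working on already-interpolated RGB triples, and uses made-up tag values rather than numbers from a real .dng file. The helper `raw_to_linear_srgb` is hypothetical; a full DNG pipeline does more (e.g. dual-illuminant matrix interpolation).

```python
import numpy as np

def raw_to_linear_srgb(raw_rgb, black_level, white_level,
                       as_shot_neutral, color_matrix_1):
    """Hypothetical helper following the model in the text:
        normalized raw = diag(AsShotNeutral) @ camera RGB
        camera RGB     = ColorMatrix1 @ linear sRGB
    raw_rgb holds already-demosaiced R, G, B values, shape (..., 3)."""
    raw = np.asarray(raw_rgb, dtype=np.float64)
    # Black level / white level: map sensor codes to 0.0 ... 1.0
    norm = (raw - black_level) / (white_level - black_level)
    # White balance: dividing by AsShotNeutral applies the red/blue gains
    camera_rgb = norm / np.asarray(as_shot_neutral, dtype=np.float64)
    # Invert ColorMatrix1 (linear sRGB -> camera RGB) to reach linear sRGB
    cam_to_srgb = np.linalg.inv(np.asarray(color_matrix_1, dtype=np.float64))
    # Pixels are row vectors, hence the transpose
    return camera_rgb @ cam_to_srgb.T

# Made-up tag values, NOT from a real .dng file
black, white = 256.0, 4095.0
neutral = [0.5, 1.0, 0.6]            # red gain 2.0, blue gain about 1.67
cm1 = [[0.80, 0.15, 0.05],
       [0.10, 0.80, 0.10],
       [0.05, 0.20, 0.75]]           # rows sum to 1, so white stays white

# An 18 % gray card as the sensor would record it
gray_raw = black + 0.18 * np.array(neutral) * (white - black)
print(raw_to_linear_srgb(gray_raw, black, white, neutral, cm1))  # about [0.18 0.18 0.18]
```

The point of the example: every step is fixed by data already stored in the file, so nothing has to be tuned by eye to get a neutral gray back as neutral.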

So in a way, the raw Bayer data (your .tiff data) is well-defined, both in terms of color gamut and gamma (linear, for that matter). Of course, you can ignore that information…

Also note that you are implicitly imprinting a color space onto your footage as soon as you insert the material into a DaVinci timeline. There’s no way around this: the RGB data will be interpreted as whatever you have set your timeline color space to.

Let’s briefly recap what a color space is. It consists of four parameters: a whitepoint and the color coordinates of the three primaries. The whitepoint specifies the values which correspond to “white”, that is, maximal brightness with no color. The primaries span the space of all representable colors. You can actually see this in DaVinci’s “Scopes” tab when selecting “CIE Chromaticity”:

Here, two color spaces and their properties are displayed: DaVinci WG as well as Rec.709. You can see the coordinates of the primaries (RGB) as well as the whitepoint for both color spaces (which is identical in this case).
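Since a color space (apart from its gamma) is described by its whitepoint and the chromaticities of its three primaries, the linear RGB-to-XYZ matrix can be computed from just those four points. The sketch below does this for Rec.709 and DaVinci WG, both with a D65 whitepoint. The DaVinci WG primaries are assumed to be the values Blackmagic Design publishes for DaVinci Wide Gamut; please check them against the official documentation. The last two prints illustrate that DaVinci WG is the larger space: expressed in DaVinci WG coordinates, the Rec.709 primaries have only non-negative components, while the reverse conversion produces negative ones.

```python
import numpy as np

def xy_to_xyz(x, y):
    """Chromaticity (x, y) -> XYZ, normalized to Y = 1."""
    return np.array([x / y, 1.0, (1.0 - x - y) / y])

def rgb_to_xyz_matrix(primaries, white):
    """Linear RGB -> XYZ matrix from the four parameters of a color space:
    the xy coordinates of the R, G, B primaries and of the whitepoint."""
    # One column per primary, each at Y = 1 for now
    p = np.column_stack([xy_to_xyz(*c) for c in primaries])
    # Scale the columns so that RGB = (1, 1, 1) lands exactly on the whitepoint
    return p * np.linalg.solve(p, xy_to_xyz(*white))

D65 = (0.3127, 0.3290)
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
DWG = [(0.8000, 0.3130), (0.1682, 0.9877), (0.0790, -0.1155)]  # assumed DaVinci WG

m709 = rgb_to_xyz_matrix(REC709, D65)
mdwg = rgb_to_xyz_matrix(DWG, D65)
print(np.round(m709[1], 4))  # Rec.709 luminance weights, about [0.2126 0.7152 0.0722]

# Columns = the Rec.709 primaries expressed in DaVinci WG coordinates.
# All entries are non-negative: Rec.709 lies inside DaVinci WG.
print(np.linalg.inv(mdwg) @ m709)
# The reverse direction has negative entries: DaVinci WG exceeds Rec.709.
print(np.linalg.inv(m709) @ mdwg)
```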

Now if you assign an arbitrary color space to your data, the colors will certainly not be correct. The correct way to go from one color space to another one is to use a CST node (there are also LUTs available, but as they introduce clipping, I am trying to avoid them).
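The gamut part of a CST can be thought of as a 3×3 matrix acting on linear RGB: source RGB to XYZ, a white-point adaptation if the whitepoints differ, then XYZ to destination RGB. The sketch below builds such a matrix for P3 D60 to Rec.709. Assumptions: “P3 D60” means DCI-P3 primaries with the ACES D60 whitepoint, and the adaptation uses the Bradford method; DaVinci’s CST may use a different adaptation internally. Unlike a clipping LUT, the matrix keeps everything: saturated P3 colors simply come out with negative Rec.709 components.

```python
import numpy as np

def xy_to_xyz(x, y):
    """Chromaticity (x, y) -> XYZ, normalized to Y = 1."""
    return np.array([x / y, 1.0, (1.0 - x - y) / y])

def rgb_to_xyz_matrix(primaries, white):
    """Linear RGB -> XYZ from primaries and whitepoint (xy coordinates)."""
    p = np.column_stack([xy_to_xyz(*c) for c in primaries])
    return p * np.linalg.solve(p, xy_to_xyz(*white))

# Bradford cone-response matrix (assumed adaptation method)
BRADFORD = np.array([[ 0.8951,  0.2664, -0.1614],
                     [-0.7502,  1.7135,  0.0367],
                     [ 0.0389, -0.0685,  1.0296]])

def adaptation_matrix(src_white, dst_white):
    """XYZ -> XYZ white-point adaptation, scaling in Bradford space."""
    scale = (BRADFORD @ xy_to_xyz(*dst_white)) / (BRADFORD @ xy_to_xyz(*src_white))
    return np.linalg.inv(BRADFORD) @ np.diag(scale) @ BRADFORD

def gamut_matrix(src_prim, src_white, dst_prim, dst_white):
    """Linear source RGB -> linear destination RGB (the CST gamut step)."""
    to_xyz = rgb_to_xyz_matrix(src_prim, src_white)
    from_xyz = np.linalg.inv(rgb_to_xyz_matrix(dst_prim, dst_white))
    return from_xyz @ adaptation_matrix(src_white, dst_white) @ to_xyz

P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]   # DCI-P3 primaries
D60 = (0.32168, 0.33767)                                 # ACES D60 white
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
D65 = (0.3127, 0.3290)

m = gamut_matrix(P3, D60, REC709, D65)
print(m @ [1.0, 1.0, 1.0])  # P3 D60 white -> Rec.709 white, about [1 1 1]
print(m @ [1.0, 0.0, 0.0])  # saturated P3 red: has negative Rec.709 components
```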

In a CST, there is another selection you have to make which also changes the interpretation of color values: the input and output gamma. Again, you want to use the correct setting here, not something arbitrary. Let’s see in detail why I set up my processing in this post the way I did:

1. I set up the timeline color space to DaVinci WG with the gamma DaVinci Intermediate. That basically instructs DaVinci to do all computations in this space. Choosing other options here will change the way certain sliders/modules work, but most of the time it’s rather irrelevant what you choose.
2. I instructed the raw converter to output P3 D60 as color space. In fact, I could have used Rec.709 here as well; that does not matter too much (but see the discussion below in point 5).
3. I requested Linear as “Gamma” from the converter. Here as well, I could have chosen a different setting and it would not matter (as long as the clip’s CST matches). I chose Linear to have an easier setup on the clip’s CST node.
4. My monitor is calibrated to Rec.709 and I output to it, so on the timeline node graph I inserted a CST towards the output/display space in order to grade and output the correct colors. On this CST, the input is set to “Use timeline” and the output to Rec.709.
5. Finally, in the clip’s node tree, right after the input node (which delivers P3 D60/Linear), I inserted a CST transforming this into the timeline space (“Use timeline”). Important here is that both “Tone Mapping” and “Gamut Mapping” are set to None, and both “Apply Forward OOTF” and “Apply Inverse OOTF” are unchecked. If I had selected the gamma Rec.709 instead of Linear in step 3 when setting up the raw input module, DaVinci would have automatically checked “Apply Forward OOTF” when I set up the clip’s corresponding CST with Rec.709. That’s why I chose Linear in the first place: in this case, “Apply Forward OOTF” is left untouched.
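The “Gamma” settings in a CST are transfer functions, and the clip’s CST has to decode with the same function the raw converter encoded with. The sketch below implements DaVinci Intermediate and the Rec.709 camera OETF (ITU-R BT.709). The DaVinci Intermediate constants are assumed to match Blackmagic Design’s published DaVinci Wide Gamut Intermediate specification; please verify them there. The last line shows a mismatch: linear 18 % gray encoded with Rec.709 gamma becomes about 0.41, and a CST set to Linear would read that as more than twice the actual light level. The OOTF options are not modeled here.

```python
import numpy as np

# DaVinci Intermediate constants (assumed; check Blackmagic's documentation)
DI_A, DI_B, DI_C = 0.0075, 7.0, 0.07329248
DI_M = 10.44426855
DI_LIN_CUT, DI_LOG_CUT = 0.00262409, 0.02740668

def di_encode(lin):
    """Linear light -> DaVinci Intermediate code value."""
    lin = np.asarray(lin, dtype=np.float64)
    safe = np.maximum(lin, DI_LIN_CUT)  # keeps log2 away from invalid input
    return np.where(lin <= DI_LIN_CUT, lin * DI_M,
                    (np.log2(safe + DI_A) + DI_B) * DI_C)

def di_decode(code):
    """DaVinci Intermediate code value -> linear light."""
    code = np.asarray(code, dtype=np.float64)
    return np.where(code <= DI_LOG_CUT, code / DI_M,
                    2.0 ** (code / DI_C - DI_B) - DI_A)

def rec709_oetf(lin):
    """ITU-R BT.709 camera OETF (linear -> Rec.709 code value)."""
    lin = np.asarray(lin, dtype=np.float64)
    return np.where(lin < 0.018, 4.5 * lin,
                    1.099 * np.power(np.maximum(lin, 0.018), 0.45) - 0.099)

gray = 0.18  # linear 18 % gray, as delivered with "Linear" gamma
print(di_encode(gray))             # about 0.336 in DaVinci Intermediate
print(di_decode(di_encode(gray)))  # round trip back to 0.18
# Mismatch: Rec.709-encoded data declared as "Linear" in the clip's CST
print(rec709_oetf(gray))           # about 0.409, misread as linear light
```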

After the clip’s CST, the raw data is in the DaVinci timeline color space, that is, DaVinci WG, which is more than enough for all practical purposes. Due to the timeline’s CST, that data is transformed right before output into the color space I need, Rec.709. That’s the setup I described and am working with. I hope this clarifies things a little.
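To check the claim that the working space matters little as long as nothing clips, the sketch below runs hypothetical linear P3 D60 pixels through both CSTs of this setup (clip CST into DaVinci WG/DaVinci Intermediate, timeline CST out to Rec.709) and compares the result with a direct P3 D60 to Rec.709 conversion. Assumptions: Bradford white-point adaptation, DCI-P3 primaries with the ACES D60 white for “P3 D60”, and DaVinci WG primaries and DaVinci Intermediate constants as published by Blackmagic Design (please verify them); no grading happens in between, and the final Rec.709 OETF is left out because it is the same in both paths.

```python
import numpy as np

def xy_to_xyz(x, y):
    return np.array([x / y, 1.0, (1.0 - x - y) / y])

def rgb_to_xyz(primaries, white):
    p = np.column_stack([xy_to_xyz(*c) for c in primaries])
    return p * np.linalg.solve(p, xy_to_xyz(*white))

BRADFORD = np.array([[ 0.8951,  0.2664, -0.1614],
                     [-0.7502,  1.7135,  0.0367],
                     [ 0.0389, -0.0685,  1.0296]])

def gamut(src_prim, src_white, dst_prim, dst_white):
    """Linear RGB -> linear RGB with Bradford white-point adaptation."""
    scale = (BRADFORD @ xy_to_xyz(*dst_white)) / (BRADFORD @ xy_to_xyz(*src_white))
    adapt = np.linalg.inv(BRADFORD) @ np.diag(scale) @ BRADFORD
    return (np.linalg.inv(rgb_to_xyz(dst_prim, dst_white))
            @ adapt @ rgb_to_xyz(src_prim, src_white))

# DaVinci Intermediate constants (assumed, see Blackmagic's documentation)
A, B, C, M = 0.0075, 7.0, 0.07329248, 10.44426855
LIN_CUT, LOG_CUT = 0.00262409, 0.02740668

def di_encode(x):
    return np.where(x <= LIN_CUT, x * M,
                    (np.log2(np.maximum(x, LIN_CUT) + A) + B) * C)

def di_decode(y):
    return np.where(y <= LOG_CUT, y / M, 2.0 ** (y / C - B) - A)

P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]
D60 = (0.32168, 0.33767)
DWG = [(0.8000, 0.3130), (0.1682, 0.9877), (0.0790, -0.1155)]
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
D65 = (0.3127, 0.3290)

# Hypothetical linear P3 D60 pixels from the raw converter (one row per pixel);
# 1.5 is above "white" and must survive the trip
pixels = np.array([[0.18, 0.18, 0.18], [0.9, 0.2, 0.05], [0.02, 0.4, 1.5]])

# Clip CST: P3 D60 / Linear -> timeline (DaVinci WG / DaVinci Intermediate)
timeline = di_encode(pixels @ gamut(P3, D60, DWG, D65).T)
# ... grading would happen here, in 32-bit float ...
# Timeline CST: DaVinci WG / DaVinci Intermediate -> linear Rec.709
via_dwg = di_decode(timeline) @ gamut(DWG, D65, REC709, D65).T

# Direct conversion without the intermediate working space
direct = pixels @ gamut(P3, D60, REC709, D65).T
print(np.allclose(via_dwg, direct))  # True: the working space cancels out
```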

So, I am not sure what you are trying to achieve with your .tiff processing. The color processing from .dng files to any other desired color space/output gamma is well-defined. The data embedded in the .dng is derived from your scanner settings and the tuning file you are using. It is optimized so that the colors come out very close to the original colors of the scene (provided the white balance is correct). So what would be the advantage of the .tiff processing scheme, with its manual tuning?