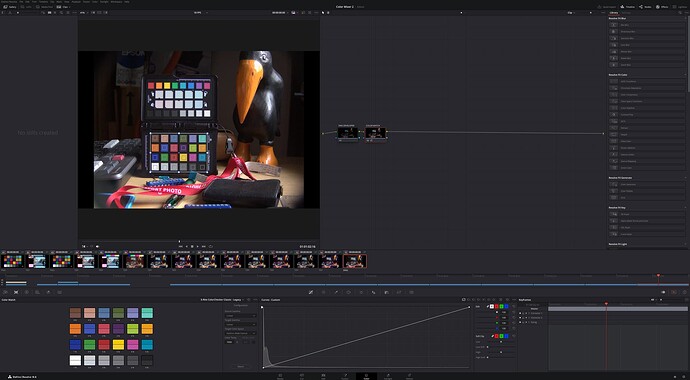

@verlakasalt asked for additional information on the methods I have been using to extract raw values in the experiments shown in these postings.

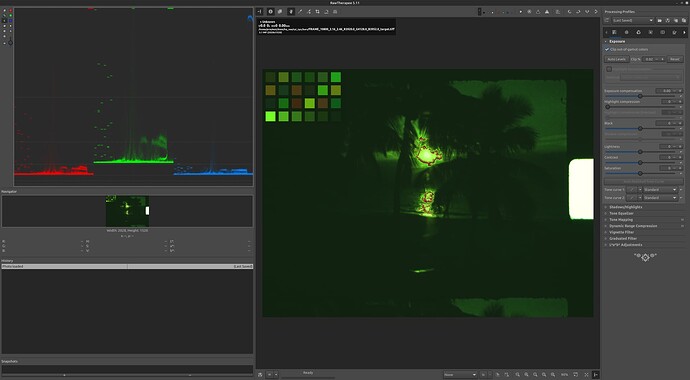

The setup is a Raspberry Pi 4, the Raspberry Pi HQ sensor/camera, and Python.

Keeping it fast

The RPi4 is underpowered for the task. It gets even worse if one is using the Pi via VNC and running a GUI with a waveform monitor.

Sharing every detail on how/why this even runs on an RPi4 would make the post too dense with hard-core Python library details. For now, I am going to share the high-level items that make this possible, so everyone can take advantage of these tools in their own setup.

- Keep the camera running. The camera never stops, and the configuration does not change.

- Core Association. I used the multiprocessing library to assign time-consuming tasks to specific cores: the capture loop to one, the debayering to another.

- Shared Memory. Core association plus alternating buffers of shared memory allow concurrent capturing, debayering, and saving.

- Numpy and Numba. This is a powerful combination. Numba is an open source compiler that translates a subset of Python and NumPy code into fast machine code. The speedup is about an order of magnitude, and it serves well the tasks of debayering, sprocket detection, and waveform monitor processing.

- Picamera2 Configuration oddities. Different modes take different processing times. Using a preview configuration instead of a still configuration shaved tens of milliseconds off the capture.

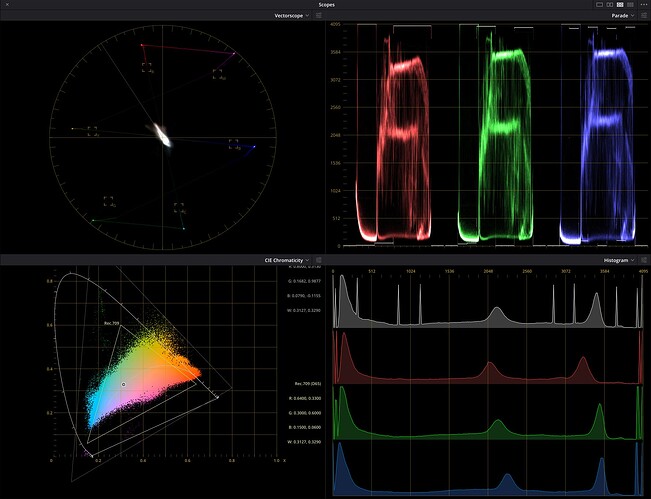

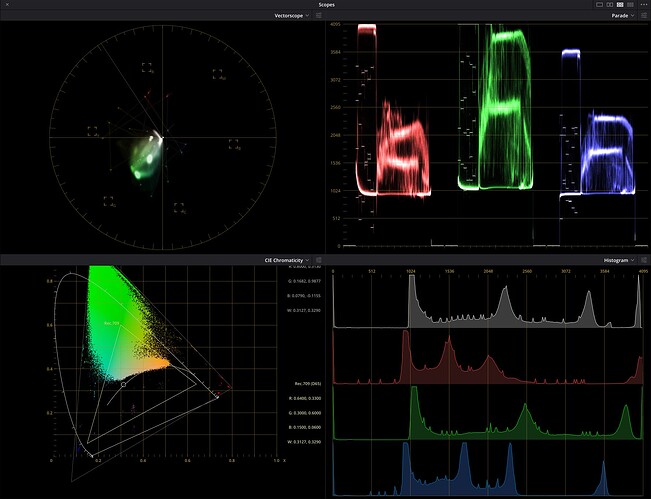

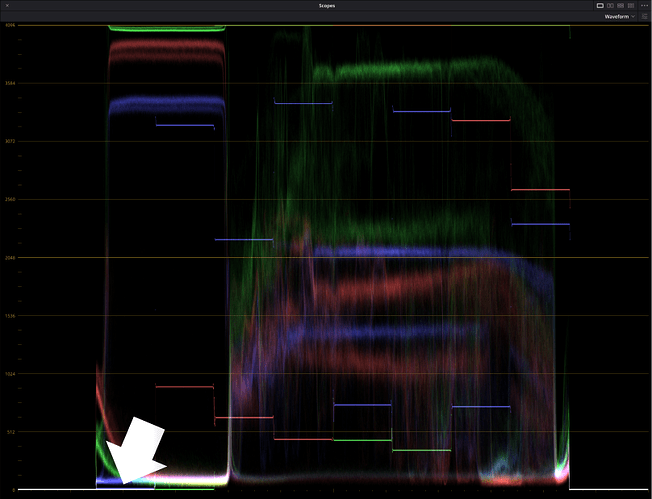

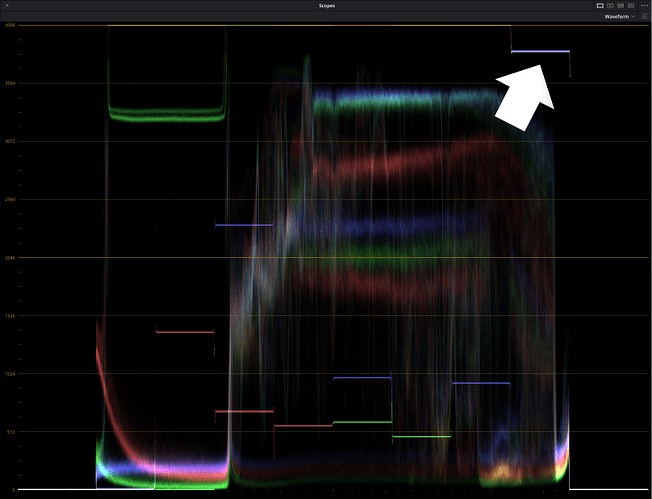

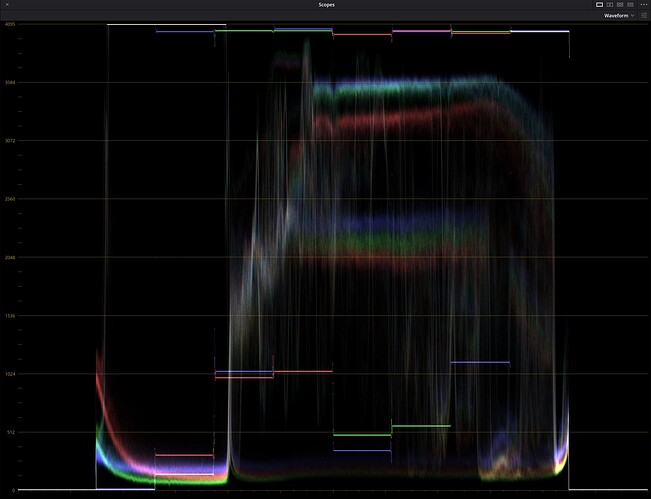

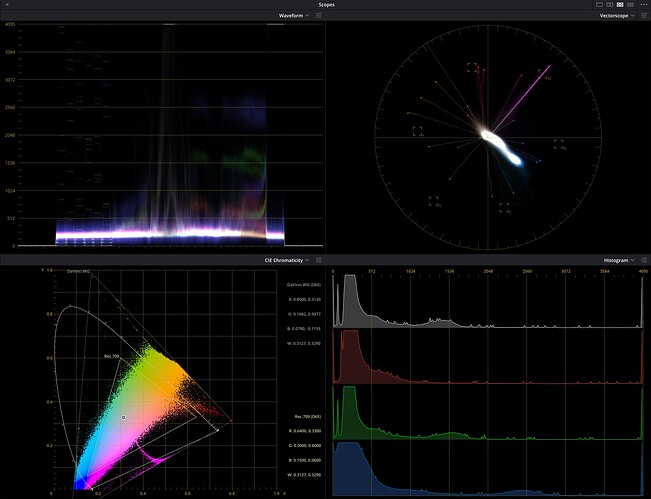

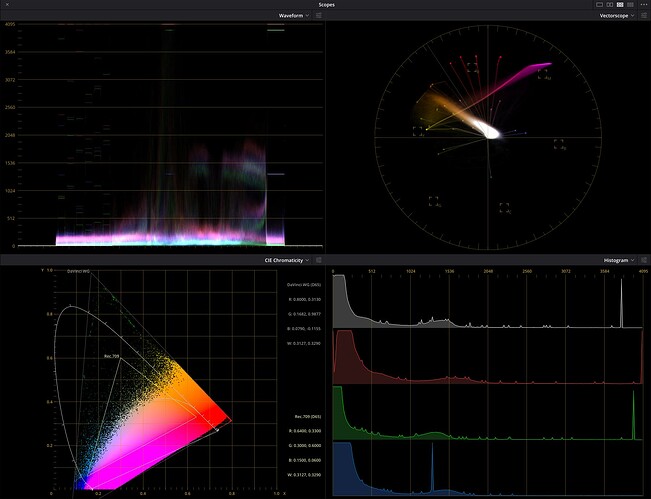

- Tradeoff of Resolution vs Pixel Depth. Instead of debayering to the full resolution of the sensor, which is processing intensive, I chose to bin each RGGB quad into one pixel. The result has half the resolution (2032x1520) of the raw capture (4064x3040) in each dimension. This tradeoff, however, increases the bit depth of the green channel: holding G1 + G2 (each 12 bits deep) requires 13 bits. This item alone could be the subject of much discussion. I have always been in favor of more pixels, but the increased bit depth in green, and having every pixel be true (not interpolated), is for me a worthy tradeoff.
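To make the core association and alternating shared-memory buffers from the list above a bit more concrete, here is a minimal sketch. It is not my actual scanner code: the names (`pin_to_core`, `capture_worker`, `run_demo`), the use of `os.sched_setaffinity` for pinning, the queues used to hand buffers back and forth, and the tiny frame size are all assumptions made for illustration. It also assumes Linux (as on the Pi), since it uses the `fork` start method.

```python
import os
from multiprocessing import get_context, shared_memory

import numpy as np

SHAPE = (4, 6)       # tiny stand-in for the (3040, 4064) raw frame
DTYPE = np.uint16


def pin_to_core(core):
    """Best-effort pinning of the calling process to one core (Linux only)."""
    if hasattr(os, "sched_setaffinity"):
        try:
            available = sorted(os.sched_getaffinity(0))
            os.sched_setaffinity(0, {available[core % len(available)]})
        except OSError:
            pass     # pinning is an optimisation, not a requirement


def capture_worker(names, free_q, full_q, n_frames):
    """Stand-in for the capture loop: write into whichever buffer is free."""
    pin_to_core(0)
    shms = [shared_memory.SharedMemory(name=n) for n in names]
    bufs = [np.ndarray(SHAPE, dtype=DTYPE, buffer=s.buf) for s in shms]
    for frame_no in range(n_frames):
        idx = free_q.get()        # wait until a buffer is free
        bufs[idx][:] = frame_no   # fake "capture" straight into shared memory
        full_q.put(idx)           # hand the filled buffer to the consumer
    full_q.put(None)              # signal end of capture
    del bufs                      # release the views before closing
    for s in shms:
        s.close()


def run_demo(n_frames=5):
    """Capture in one process, consume in another, alternating two buffers."""
    ctx = get_context("fork")     # assumption: Linux, as on the Pi
    previous = os.sched_getaffinity(0) if hasattr(os, "sched_getaffinity") else None
    nbytes = int(np.prod(SHAPE)) * np.dtype(DTYPE).itemsize
    shms = [shared_memory.SharedMemory(create=True, size=nbytes) for _ in range(2)]
    free_q, full_q = ctx.Queue(), ctx.Queue()
    proc = ctx.Process(target=capture_worker,
                       args=([s.name for s in shms], free_q, full_q, n_frames))
    proc.start()
    free_q.put(0)                 # both buffers start out free
    free_q.put(1)
    pin_to_core(1)                # the consumer (debayer/save) uses another core
    bufs = [np.ndarray(SHAPE, dtype=DTYPE, buffer=s.buf) for s in shms]
    seen = []
    while True:
        idx = full_q.get(timeout=10)
        if idx is None:
            break
        seen.append(int(bufs[idx][0, 0]))   # stand-in for debayering/saving
        free_q.put(idx)                     # give the buffer back
    proc.join()
    del bufs
    for s in shms:
        s.close()
        s.unlink()
    if previous is not None:
        os.sched_setaffinity(0, previous)   # undo the pinning of this process
    return seen
```

Calling `run_demo()` returns the frame numbers in capture order. A buffer is only reused after the consumer hands it back, which is what lets the capture of one frame overlap with the processing of the previous one.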

Common Code to both Alternatives

The camera setup is the same regardless of whether you are saving the raw array or saving a DNG. It is important to highlight that the explicit sensor format also determines the debayering order. If a format other than SRGGB12 is used, the debayering lines will change.

```python
# Code Extract
# Imports used by these extracts
from picamera2 import Picamera2
from libcamera import Transform, controls

self.pihqcam = Picamera2(tuning=tune)
self.cam_controls = {
    "AwbEnable": False,
    "AeEnable": False,
    "FrameRate": 10,
    "AnalogueGain": 1.0,
    "Sharpness": 0.5,
    "Saturation": 1.0,
    "ColourGains": (1.0, 1.0),  # (R, B): no effect on the raw array; affects DNG development
    "ExposureTime": 20000,
    "NoiseReductionMode": controls.draft.NoiseReductionModeEnum.Off,
    "FrameDurationLimits": (100, 100000)
}
```

```python
# Code Extract
self.pihqcam.create_preview_configuration(queue=False)
self.pihqcam.preview_configuration.main.size = global_shape_main  # this should be 4056, 3040
self.pihqcam.preview_configuration.main.format = "RGB888"
self.pihqcam.preview_configuration.transform = Transform(hflip=True)  # Camera rotated 90 degrees capturing from emulsion side
self.pihqcam.preview_configuration.raw.size = global_shape_sensor
self.pihqcam.preview_configuration.raw.format = "SRGGB12"  # Sets debayering order
self.pihqcam.configure("preview")
```

ALTERNATIVE 1 - Capture a raw array and save as image

The line used to capture the raw values is:

```python
self.raw_frame_16[:] = self.pihqcam.capture_array("raw").view(np.uint16)  # 12 bit
```

raw_frame_16 is a 16-bit unsigned integer NumPy array of shape (3040, 4064) (height, width). capture_array returns the 12-bit samples as a byte array, and .view(np.uint16) reinterprets that buffer as 16-bit pixels. The values occupy the 12 least significant bits.
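As a small illustration of what `.view` does, here is a sketch on a hand-made byte array. The byte values and the little-endian layout are assumptions chosen for the example, not data taken from the camera.

```python
import numpy as np

# Two rows of four bytes each: every byte pair is one little-endian 16-bit
# word holding a 12-bit sample (made-up values, not real sensor data).
raw_bytes = np.array([[0xFF, 0x0F, 0x34, 0x02],
                      [0x00, 0x01, 0x10, 0x00]], dtype=np.uint8)

# Reinterpret the same memory as 16-bit pixels: nothing is copied or
# rescaled, the last axis simply becomes half as long.
pixels = raw_bytes.view("<u2")

print(raw_bytes.shape, "->", pixels.shape)   # (2, 4) -> (2, 2)
print(pixels)                                # [[4095  564] [ 256   16]]
print(int(pixels.max()) < 2**12)             # True: only the 12 LSBs are used
```

This is why the byte array returned by the capture is twice as wide as raw_frame_16.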

The method used to debayer and bin (including the use of numba) is as follows:

```python
from numba import njit

@staticmethod
@njit
def debayer_rgb16(raw_frame, rgb_frame):
    height, width = raw_frame.shape
    green_channel_1 = raw_frame[0:height:2, 0:width:2]  # Top-left green
    blue_channel = raw_frame[0:height:2, 1:width:2]     # Top-right blue
    green_channel_2 = raw_frame[1:height:2, 1:width:2]  # Bottom-right green
    red_channel = raw_frame[1:height:2, 0:width:2]      # Bottom-left red
    rgb_frame[:, :, 2] = blue_channel << 4  # Shifted to the 12 MSBits
    rgb_frame[:, :, 0] = red_channel << 4   # Shifted to the 12 MSBits
    rgb_frame[:, :, 1] = (green_channel_1 + green_channel_2) << 3  # Shifted to the 13 MSBits
```

Note that with Numba the function is declared as a static method, so the self argument is omitted. If Numba is not used, remove the two decorator lines and add self as the first argument.

rgb_frame is expected to be a NumPy array of shape (1520, 2032, 3) (height, width, channels).
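To check the bit-depth arithmetic, here is a plain NumPy sketch of the same binning (no Numba) run on a tiny synthetic frame filled with the 12-bit maximum. The function name `bin_quads` and the 4x4 test frame are made up for this example.

```python
import numpy as np


def bin_quads(raw_frame):
    """Bin each 2x2 Bayer quad into one RGB pixel (plain NumPy, no Numba)."""
    height, width = raw_frame.shape
    rgb = np.empty((height // 2, width // 2, 3), dtype=np.uint16)
    green_1 = raw_frame[0::2, 0::2]   # top-left green
    blue = raw_frame[0::2, 1::2]      # top-right blue
    red = raw_frame[1::2, 0::2]       # bottom-left red
    green_2 = raw_frame[1::2, 1::2]   # bottom-right green
    rgb[:, :, 0] = red << 4                    # 12 bits moved to the 12 MSBs
    rgb[:, :, 1] = (green_1 + green_2) << 3    # 13 bits moved to the 13 MSBs
    rgb[:, :, 2] = blue << 4
    return rgb


# Worst case: every sample at the 12-bit maximum.
raw = np.full((4, 4), 4095, dtype=np.uint16)
rgb = bin_quads(raw)

print(rgb.shape)            # (2, 2, 3)
print(4095 + 4095, 2**13)   # 8190 8192: the green sum fits in 13 bits
print(rgb[0, 0])            # [65520 65520 65520]
```

Since 8190 << 3 and 4095 << 4 both equal 65520, a saturated pixel lands on the same 16-bit value in all three channels, and nothing overflows the uint16 range.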

Lastly, this is the line that saves the result as an unsigned 16-bit TIFF (same NumPy array dimensions as above).

```python
# Code Extract
tifffile.imwrite(save_name, self.rgb_frame_16, compression=None)
```

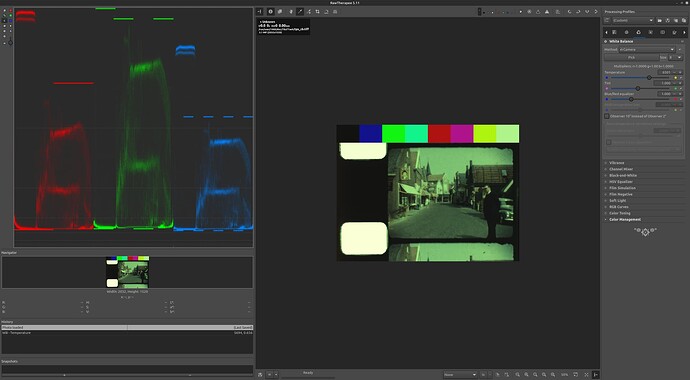

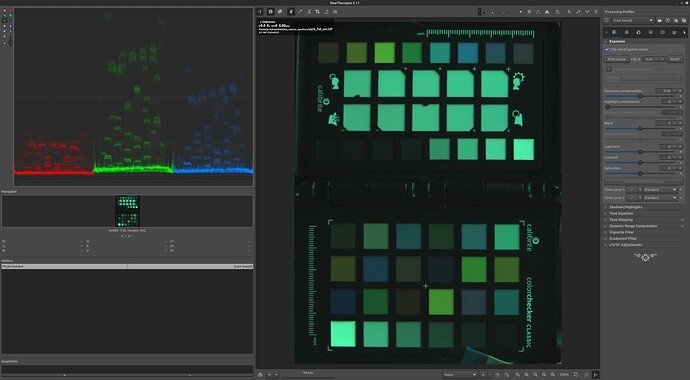

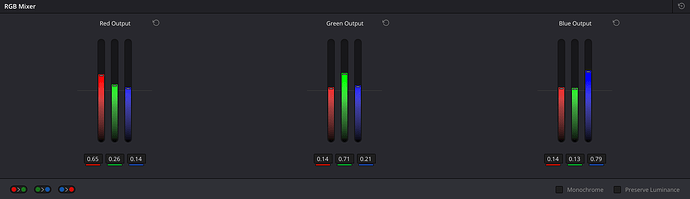

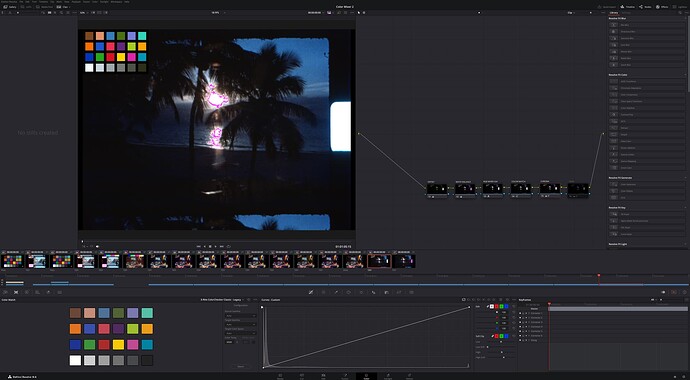

ALTERNATIVE 2 - Save a DNG and extract raw values

This alternative is to save a DNG file, in whichever way is best for your particular situation, and then extract the same raw value image.

The original code below was shared by @cpixip, remixed to add the debayering of the SRGGB12 sensor format pattern.

```python
import numpy as np
import rawpy
import tifffile

path = r'tpx_L0800_church.dng'
inputFile = input("Set raw file to analyse ['%s'] > " % path)
inputFile = inputFile or path
outputFile = inputFile + ".tiff"

# opening the raw image file
rawfile = rawpy.imread(inputFile)
print('Rawfile Pattern', rawfile.raw_pattern[0][0])
print('Rawfile shape', rawfile.raw_image_visible.shape)

# get the raw bayer
bayer_raw = rawfile.raw_image_visible
bayer_raw_16 = bayer_raw.astype(np.uint16)

# binned output: half the visible raw size (assumes a 3040 x 4056 DNG)
rgb_frame = np.zeros((1520, 2028, 3), dtype=np.uint16)

# quick-and-dirty debayer
if rawfile.raw_pattern[0][0] == 2:
    # this is for the HQ camera
    red = bayer_raw_16[1::2, 1::2]     # Red
    green1 = bayer_raw_16[0::2, 1::2]  # Gr/Green1
    green2 = bayer_raw_16[1::2, 0::2]  # Gb/Green2
    blue = bayer_raw_16[0::2, 0::2]    # Blue
elif rawfile.raw_pattern[0][0] == 0:
    # ... and this one for the Canon 70D, IXUS 110 IS, Canon EOS 1100D, Nikon D850
    red = bayer_raw_16[0::2, 0::2]     # Red
    green1 = bayer_raw_16[0::2, 1::2]  # Gr/Green1
    green2 = bayer_raw_16[1::2, 0::2]  # Gb/Green2
    blue = bayer_raw_16[1::2, 1::2]    # Blue
elif rawfile.raw_pattern[0][0] == 1:
    # ... and this one for the Sony
    red = bayer_raw_16[0::2, 1::2]     # Red
    green1 = bayer_raw_16[0::2, 0::2]  # Gr/Green1
    green2 = bayer_raw_16[1::2, 1::2]  # Gb/Green2
    blue = bayer_raw_16[1::2, 0::2]    # Blue
elif rawfile.raw_pattern[0][0] == 3:
    # HQ SRGGB12
    red = bayer_raw_16[1::2, 0::2]     # Red
    green1 = bayer_raw_16[0::2, 0::2]  # Gr/Green1
    green2 = bayer_raw_16[1::2, 1::2]  # Gb/Green2
    blue = bayer_raw_16[0::2, 1::2]    # Blue
else:
    # stop here: the channel arrays below would be undefined
    raise SystemExit('Unknown filter array encountered!!')

# bin to RGB: 12-bit red/blue and 13-bit green, shifted to the MSBs
rgb_frame[:, :, 0] = red << 4
rgb_frame[:, :, 1] = (green1 + green2) << 3
rgb_frame[:, :, 2] = blue << 4

tifffile.imwrite(outputFile, rgb_frame, compression=None)

# creating the raw RGB
#camera_raw_RGB = np.dstack( [red,(green1+green2)/2,blue] )
camera_raw_RGB = np.dstack([red, (green1 + green2), blue])

# getting the black- and whitelevels
blacklevel = np.average(rawfile.black_level_per_channel)
whitelevel = float(rawfile.white_level)

# info
print()
print('Image: ', inputFile)
print()

print('Camera Levels')
print('_______________')
print(' Black Level : ', blacklevel)
print(' White Level : ', whitelevel)
print()

print('Full Frame Data')
print('_______________')
print(' Minimum red : ', camera_raw_RGB[:, :, 0].min())
print(' Maximum red : ', camera_raw_RGB[:, :, 0].max())
print()
print(' Minimum green : ', camera_raw_RGB[:, :, 1].min())
print(' Maximum green : ', camera_raw_RGB[:, :, 1].max())
print()
print(' Minimum blue : ', camera_raw_RGB[:, :, 2].min())
print(' Maximum blue : ', camera_raw_RGB[:, :, 2].max())

# center third of the frame in both directions
dy, dx, dz = camera_raw_RGB.shape
dx //= 3
dy //= 3

print()
print('Center Data')
print('_______________')
print(' Minimum red : ', camera_raw_RGB[dy:2*dy, dx:2*dx, 0].min())
print(' Maximum red : ', camera_raw_RGB[dy:2*dy, dx:2*dx, 0].max())
print()
print(' Minimum green : ', camera_raw_RGB[dy:2*dy, dx:2*dx, 1].min())
print(' Maximum green : ', camera_raw_RGB[dy:2*dy, dx:2*dx, 1].max())
print()
print(' Minimum blue : ', camera_raw_RGB[dy:2*dy, dx:2*dx, 2].min())
print(' Maximum blue : ', camera_raw_RGB[dy:2*dy, dx:2*dx, 2].max())
```

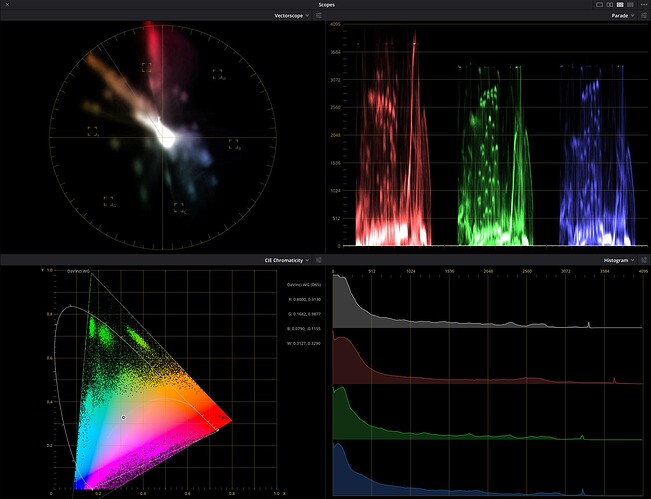

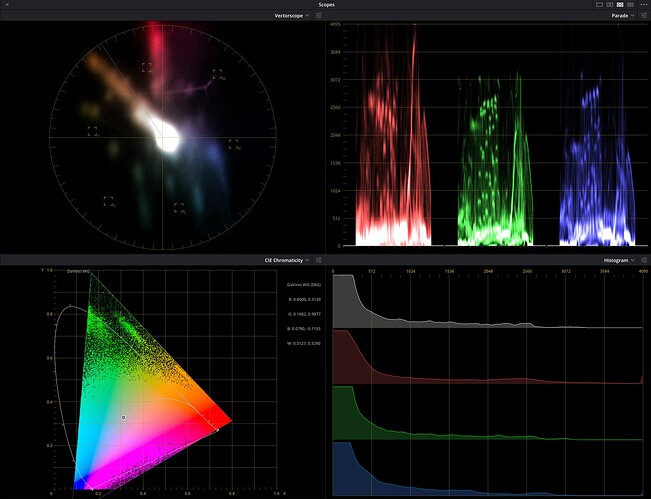

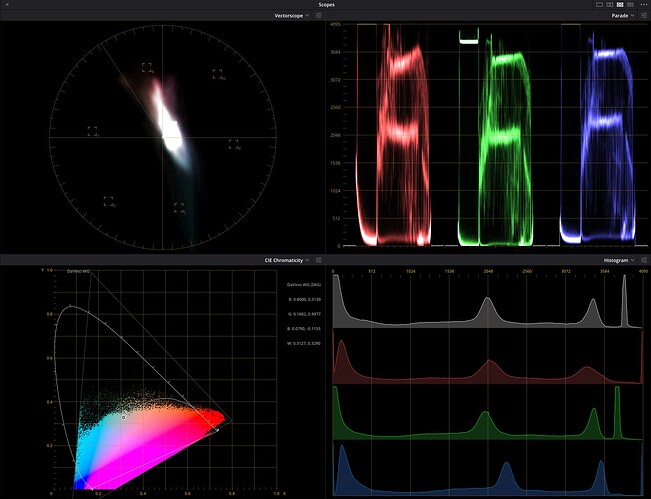

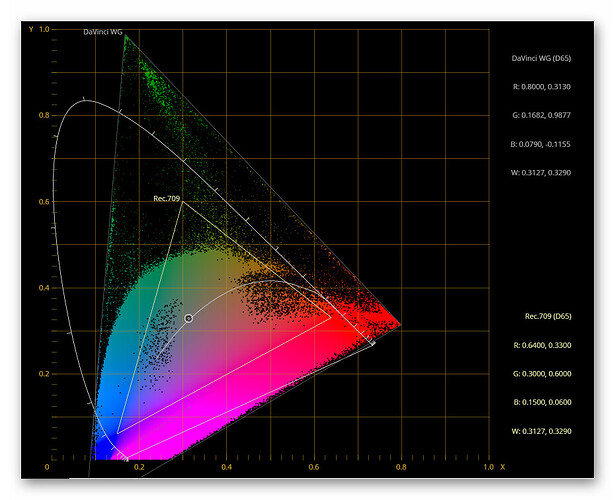

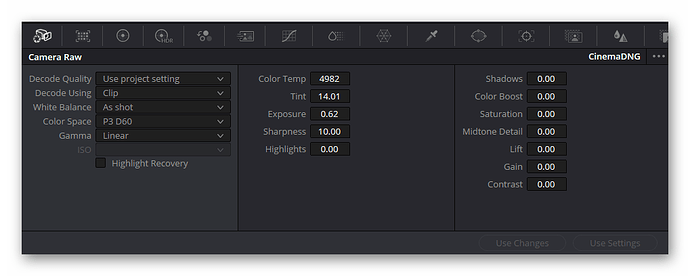

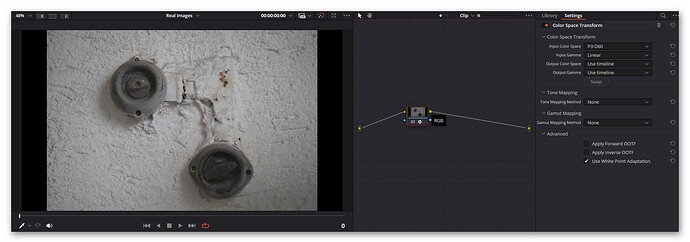

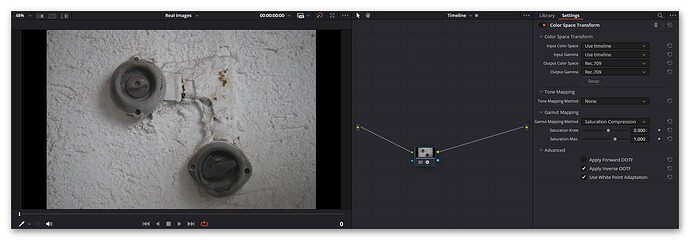

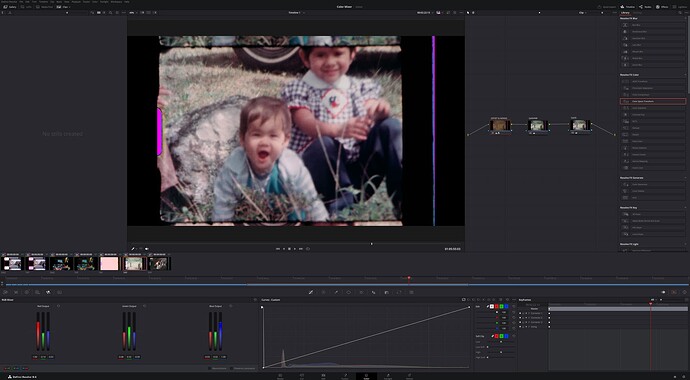

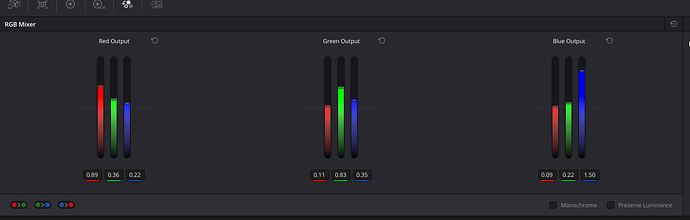

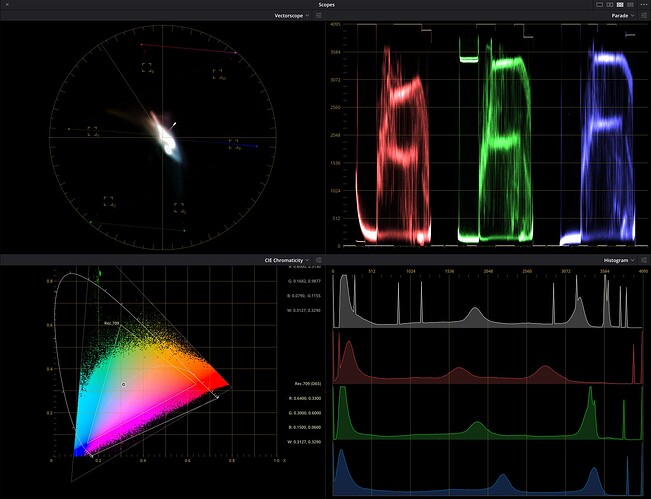

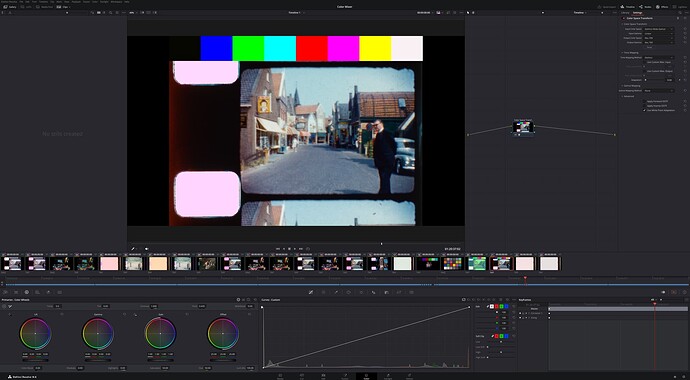

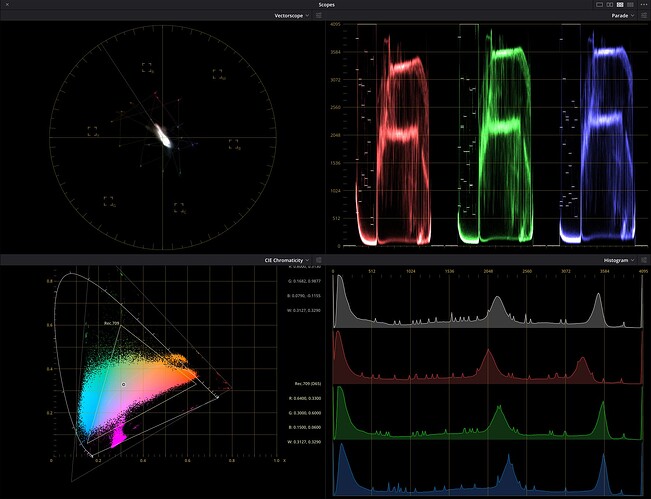

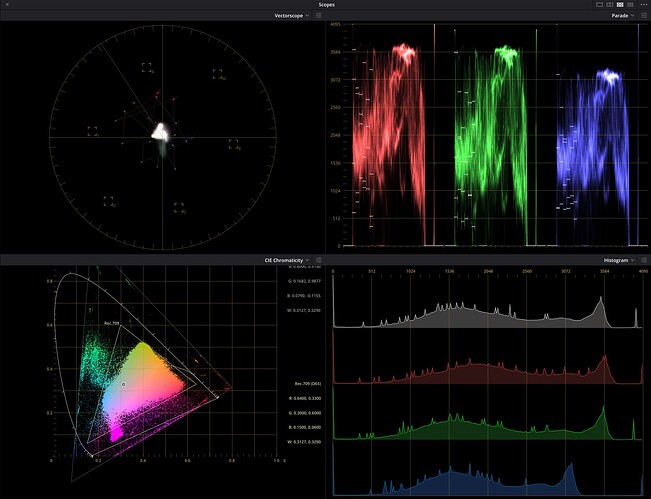

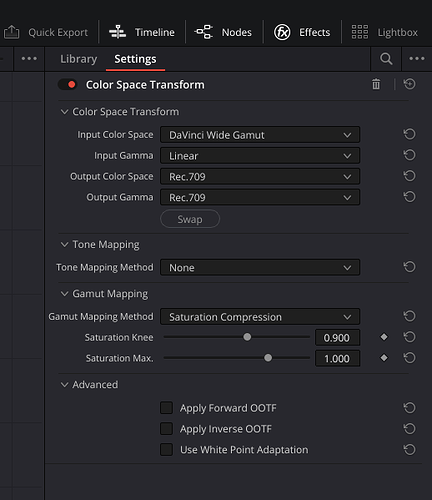

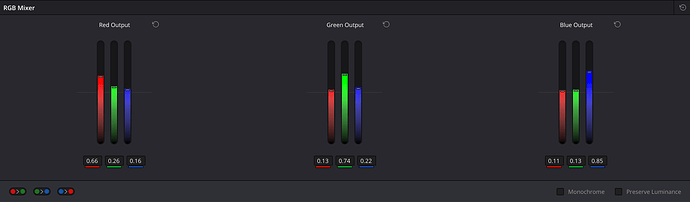

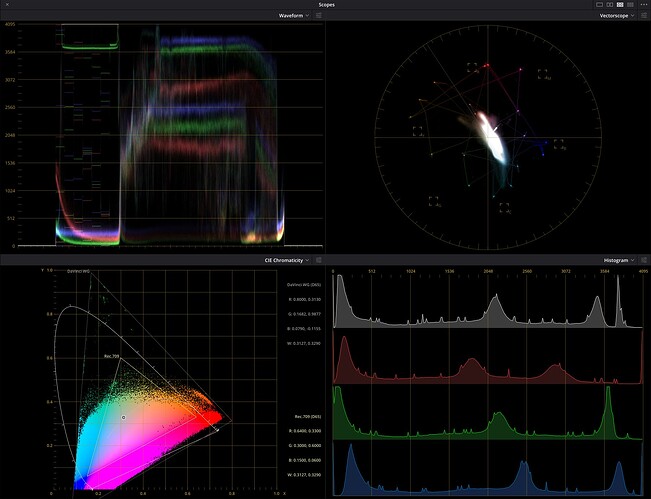

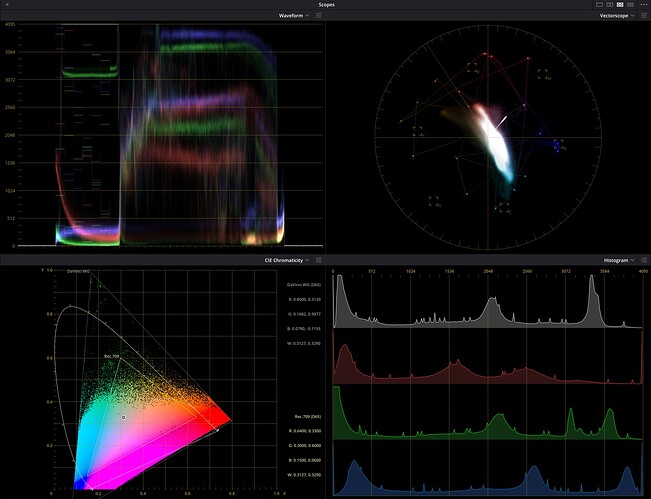

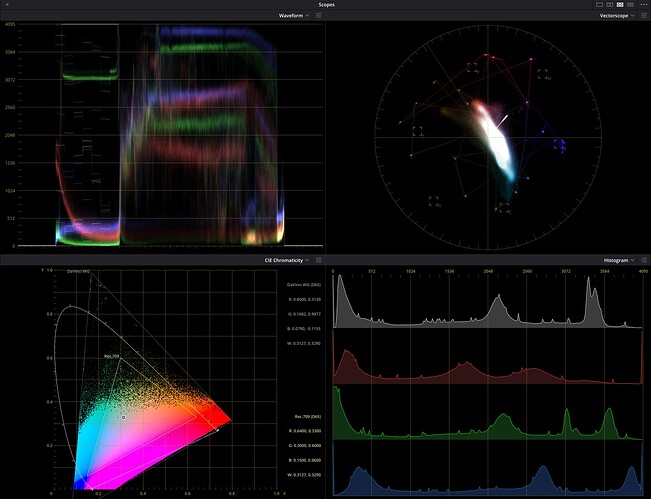

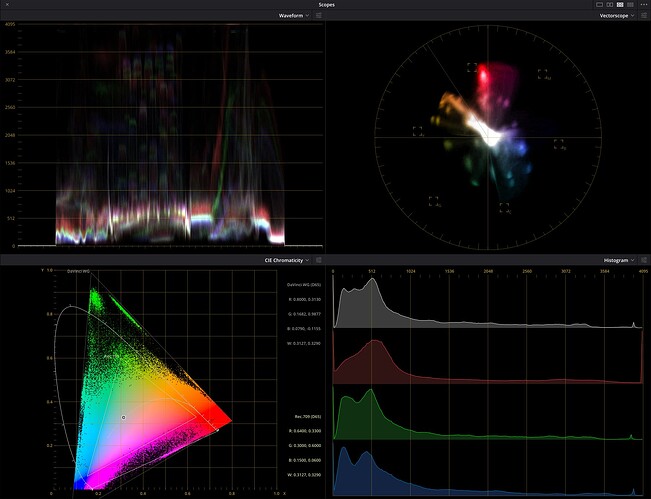

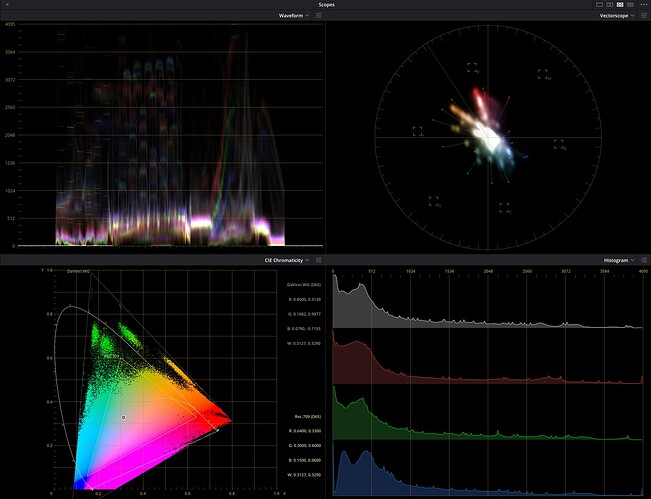

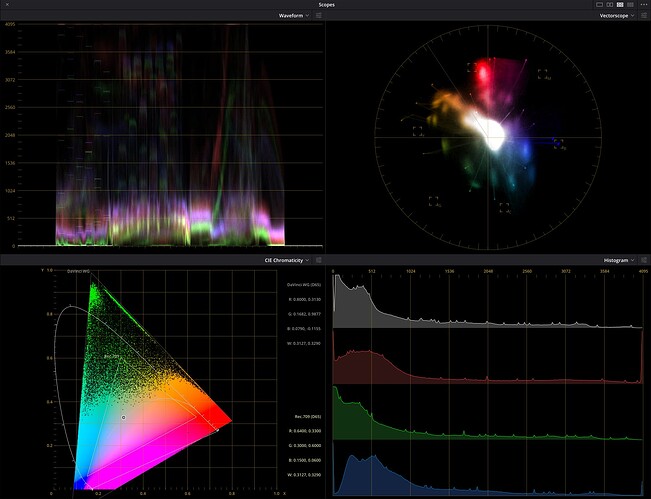

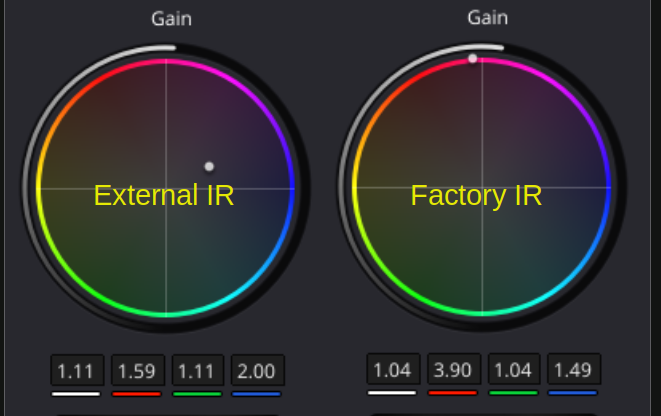

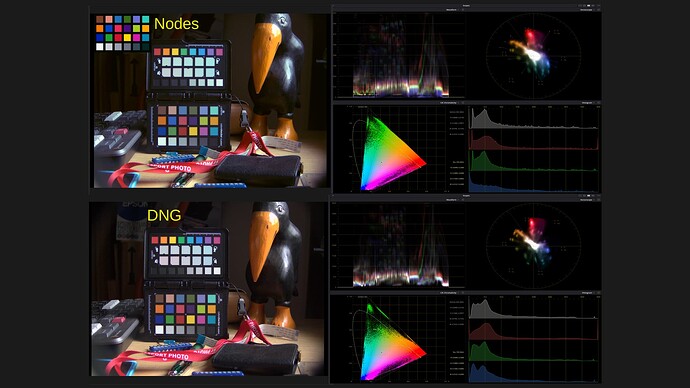

Understanding these alternatives

It is important to understand that in both cases the resulting TIFF completely disregards color science information. It is also important to note that the raw values are what the sensor provided, including a pedestal (an offset at the black level of each channel), and that these are linear values (no gamma). In other words, from here on, whichever processing pipeline one chooses (Python code or something like DaVinci Resolve) has to carefully consider the nature of the source data.
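As one example of what considering the nature of the source data can mean, here is a minimal sketch that removes the pedestal from the binned 16-bit TIFF values and normalizes them to linear 0..1 floats. The function name `to_linear_float` is made up, and the default black level of 256 and white level of 4095 (on the 12-bit scale) are assumptions. Use the levels reported for your own sensor, for instance the black and white levels that the rawpy script prints.

```python
import numpy as np


def to_linear_float(rgb16, black_level=256.0, white_level=4095.0):
    """Remove the pedestal and scale linear sensor values to 0..1.

    black_level and white_level are on the 12-bit sensor scale and are
    ASSUMED values here; take them from your own camera or DNG.
    """
    # Red/blue were stored as raw << 4 and green as (g1 + g2) << 3, so
    # dividing by 16 puts every channel back on the 12-bit scale
    # (for green this is the mean of the two green samples).
    sensor_scale = rgb16.astype(np.float32) / 16.0
    linear = (sensor_scale - black_level) / (white_level - black_level)
    return np.clip(linear, 0.0, 1.0)


# One pixel sitting exactly on the pedestal in red and green,
# and fully saturated in blue.
pixel = np.array([[[256 << 4, (256 + 256) << 3, 4095 << 4]]], dtype=np.uint16)
print(to_linear_float(pixel))
```

The result is still linear (no gamma) and still has no color science applied; it only takes the offset out so that black sits at zero.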

The second alternative preserves the color science and the full-resolution Bayer data (saved as the DNG). Keep in mind that the raw values are subject to the processing that creates (picamera2) and extracts (rawpy) the DNG information.

The first alternative is a practical method to store the values as provided by the sensor, without any color science. Note that Green 1 and Green 2 are combined into a single value, which increases the green channel bit depth but reduces the spatial resolution of the image; the full-resolution data is not saved anywhere else (as it is when the DNG is saved).

@verlakasalt hope this provides the additional information you were looking for.