Yeah, that almost looks like magic. Nice work! I searched back a bit but couldn’t find anyplace where you described the “new color science and calibration”. I’d love to hear more about it, too!

Here’s another transfer I just did on the RobinoScan. It’s a rare Eastman Kodak demo film from 1996 to introduce their new Vision motion picture color negative lineup. Should be of interest for those of you who shoot on film.

The scan is from a release print struck from 5244 EXR Color Intermediate negative film. They push the exposure -5 to +5 stops in some tests so the highlights are hard, but that’s the way the print is and the transfer is not clipping. Enjoy!

Great progress, very interesting film, thank you for sharing.

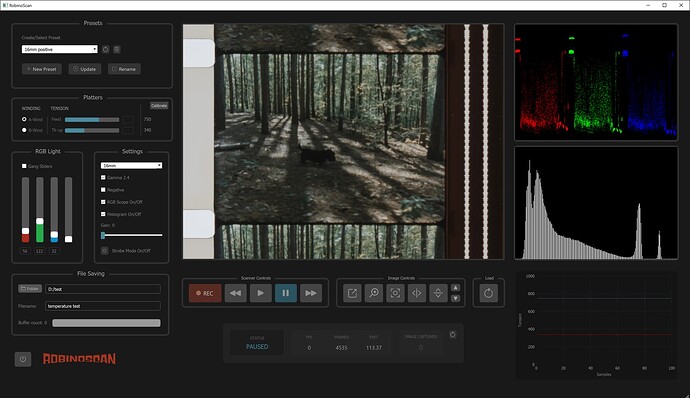

Here’s a screenshot of the working control software so far, hoping to have real-time stabilization by June. I’m going to ditch most physical buttons and only keep 2 (panic and load), everything else is controlled via the GUI.

There’s still a lot to add, I will share a new video of it all in action when it’s ready.

This looks really slick!

Are you doing the software in Python? Where did you get your button icons from, or did you make those yourself? How about the scopes?

Thanks!

The GUI is built with Qt Designer (PyQt) and the code is in Python with OpenCV / Spinnaker SDK. I designed most of the icons myself and got some at https://thenounproject.com/, which is a really great resource for icons!

The scopes were hard to do, they’re real-time and I have a variable ready to increase the resolution when I optimize things further. At the moment with everything turned on + capture, the CPU doesn’t go over 6% so it’s pretty good already.

The feature I’m the proudest of is the focus aid tool that detects small film grain detail and lets you focus to perfection.

I’m so glad to take out the buttons, this is the chunk I just retired.

Nice. Focus is something we’re doing automatically, looks like in a similar way. Basically taking a bunch of images with the lens at different positions and using OpenCV to calculate which is the best focus.

Are you using any GPU acceleration for stuff like the scopes? OpenCV has some functionality that’s already available with CUDA acceleration - you basically just change the function name, I think. Seems like that would help with stuff like the scopes. I’m still trying to decide how I’m going to do those. Histograms are easy, waveforms are a bit more complicated.
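On the waveform question: one way to think about it is as a histogram per image column, so each column of the scope counts how many pixels in that column sit at each level. Here is a minimal, dependency-free sketch of that idea. The function name `waveform` and the `levels` parameter are made up for illustration, and a real-time version would almost certainly do this vectorized in NumPy/OpenCV rather than with Python loops.

```python
def waveform(gray, levels=256):
    """Build a luma waveform from a grayscale image.

    gray: list of rows, each a list of integer pixel values in 0..levels-1.
    Returns a grid with `levels` rows and one column per image column, where
    grid[v][x] is the number of pixels in image column x that have value v.
    """
    height = len(gray)
    width = len(gray[0]) if height else 0
    grid = [[0] * width for _ in range(levels)]
    for row in gray:
        for x, value in enumerate(row):
            grid[value][x] += 1
    return grid


# Tiny 3x2 test image with only 4 grey levels (hypothetical data).
image = [
    [0, 3],
    [0, 1],
    [2, 3],
]
scope = waveform(image, levels=4)
```

For display you would normally flip the grid vertically so level 0 sits at the bottom, and map the counts to brightness.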

Thanks for that link to the icons. I was hoping to find a nice set I could just use for the buttons rather than having to make my own. Most of what I found was pretty incomplete, but it looks like most of what I need is there!

That’s amazing, it’s something that could definitely be added to my setup, but right now you have to adjust a knob manually to slide in/out for focus. I’m curious, how long does it take for your setup to focus? It must be a trip to see the machine find the sweet spot! You should post a video of it working one day, these videos are always so satisfying.

I did compile a version of OpenCV with CUDA, it was a little bit of a pain to do as I’m a newbie with CMake and stuff like that. I did try to swap some of the OpenCV calls for their cv2.cuda equivalents but in most cases it was slower, unsure why - maybe because smaller operations don’t benefit from the GPU and just transferring the data from CPU to GPU makes things worse? I need to dig into the CUDA implementation for the real-time stabilization / post processing and will try some GPU optimization again. I got an RTX 4080 ready to go but right now it’s dormant.
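To make the transfer-overhead guess concrete, here is a rough cost model: the GPU only wins when upload + kernel + download together beat the CPU time. The function names and every timing below are placeholders for illustration, not measurements from any real system.

```python
def gpu_total_ms(upload_ms, kernel_ms, download_ms):
    """Total wall time for one GPU round trip: copy in, compute, copy out."""
    return upload_ms + kernel_ms + download_ms


def gpu_is_faster(cpu_ms, upload_ms, kernel_ms, download_ms):
    """True when the full GPU round trip beats doing the work on the CPU."""
    return gpu_total_ms(upload_ms, kernel_ms, download_ms) < cpu_ms


# Placeholder numbers only (assumptions, not benchmarks).
# A single cheap operation: the copies dominate, so the GPU loses.
small_op = gpu_is_faster(cpu_ms=0.5, upload_ms=1.0, kernel_ms=0.05, download_ms=1.0)

# A long chain of operations kept on the GPU: one upload and one download
# are spread over a lot of work, so the GPU can come out ahead.
chained = gpu_is_faster(cpu_ms=20.0, upload_ms=1.0, kernel_ms=2.0, download_ms=1.0)
```

If this model is roughly right, the thing to try would be keeping frames on the GPU across several steps instead of swapping individual calls one at a time.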

Your software is C++ right?

I’ve got it mapped out but haven’t coded it yet. I wasn’t planning on showing the image, since that would slow things down somewhat. It will run the Laplacian function on each image, then compare the numerical results to get the one that’s sharpest. I’m pretty sure this is what the ScanStation is doing (or something similar) when you auto-focus. It’s always tack sharp and we don’t ever do manual focus as a result (manual, but with software control, vs mechanical). Basically it shows you the initial image when you enter the Focus tool, then shows you the position of the lens for each image it’s taking. Depending on the film it can take between a few seconds and a minute (a minute is only when the film is really messed up, like it’s warped badly or cupped, and it can’t get a good lock on any edges). Once it has found focus it updates the image to the final version.
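For anyone following along, the sweep described here can be sketched roughly like this: score each frame by the variance of its Laplacian and keep the lens position with the highest score. This is a dependency-free illustration, not the actual implementation. The names `laplacian_variance` and `best_focus`, the lens positions and the tiny test frames are all made up, and in practice something like `cv2.Laplacian` would replace the inner loop.

```python
def laplacian_variance(gray):
    """Sharpness score: variance of the 4-neighbour Laplacian over interior pixels."""
    h, w = len(gray), len(gray[0])
    responses = []
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            lap = (gray[y - 1][x] + gray[y + 1][x] + gray[y][x - 1]
                   + gray[y][x + 1] - 4 * gray[y][x])
            responses.append(lap)
    mean = sum(responses) / len(responses)
    return sum((r - mean) ** 2 for r in responses) / len(responses)


def best_focus(frames_by_position):
    """Return the lens position whose frame has the highest sharpness score."""
    return max(frames_by_position,
               key=lambda pos: laplacian_variance(frames_by_position[pos]))


# Hypothetical sweep: three lens positions, one 4x4 frame each.
soft = [[10, 10, 10, 10]] * 4      # flat frame, no detail
medium = [[10, 12, 10, 12]] * 4    # faint detail
sharp = [[0, 20, 0, 20]] * 4       # strong detail
sweep = {100: soft, 150: sharp, 200: medium}
focus_position = best_focus(sweep)
```

Only the numeric scores are compared, so nothing needs to be displayed during the sweep.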

Yesterday I got the camera and lens stages tested with our new firmware, so hopefully I can implement this part soon. We need to lock in the transport first though, and I’m basically starting from scratch because we’re handling the controller in a completely different (much more reliable) way than before.

I don’t really know enough about it to know how/where the limitations are. But I would think it might mainly be in loading the images. If you’re coming from a disk, there may not be much advantage to passing it along to the GPU. But if it’s coming from memory it should be fairly fast.

I’m doing everything in Xojo, which is a rapid application development tool I’ve used for years. It used to be called RealBasic and was originally a flavor of Basic, for Mac only. Over the years they’ve supported lots of other platforms - Mac, Windows, Linux, iOS, Android, Raspberry Pi, etc. Basically you code in one project and compile for whatever platform you want. Sort of. At least it works that way on desktop. Mobile is a little different. In any case, the compiled apps are native, not interpreted like Python, so the performance is as good as anything written in C++, though with some limitations inherent to this kind of environment. For example, you can’t import C++ libraries, so we had to hire a programmer to wrap OpenCV 4.5 in a plain-C library so we can import that into Xojo. Seems to work well so far though, and it’s lightning fast, especially compared with Xojo-native image handling.

That’s how I do it too - both Sobel and Laplacian work, you just need to play around and fine-tune until you’re happy with your detection algorithm. I now realize that before using this tool, I was never at optimal sharpness, especially in 16mm. This is a real game changer for my workflow.
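As a rough illustration of the Sobel flavour, one common focus measure is the mean squared Sobel gradient magnitude (often called Tenengrad): harder edges give bigger gradients and a higher score. This is a plain-Python sketch with a made-up function name and test data, not the code from my tool, where OpenCV does the filtering.

```python
def tenengrad(gray):
    """Sharpness score: mean squared Sobel gradient magnitude over interior pixels."""
    h, w = len(gray), len(gray[0])
    total = 0
    count = 0
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            # Horizontal Sobel response (right column minus left column).
            gx = (gray[y - 1][x + 1] + 2 * gray[y][x + 1] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y][x - 1] - gray[y + 1][x - 1])
            # Vertical Sobel response (bottom row minus top row).
            gy = (gray[y + 1][x - 1] + 2 * gray[y + 1][x] + gray[y + 1][x + 1]
                  - gray[y - 1][x - 1] - 2 * gray[y - 1][x] - gray[y - 1][x + 1])
            total += gx * gx + gy * gy
            count += 1
    return total / count


# Hypothetical 4x4 frames: the same edge, out of focus and in focus.
blurry_edge = [[0, 5, 10, 15]] * 4   # gradual ramp
crisp_edge = [[0, 0, 15, 15]] * 4    # hard step
```

Whichever measure you pick, the fine-tuning is mostly about what region you score and how you deal with noise and grain.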

Everything is done using the camera buffer for real-time viewing, scopes and all the small operations like negative, flips etc, the only time I hit the disk is for writing the raw files. This will change as I implement real-time stabilization.

Thanks, I’ll look at Xojo, never heard of it. Just for fun I was also able to compile my software for Mac and to my surprise, it loaded up without issues - the only thing is it runs like crap on Mac, but so does Spinnaker/SpinView (at least on my big Xeon Mac Pro), which is why I built a dedicated host PC. I went with a Ryzen 7950X. I really would love to be on Mac, I will have to try my program on the new M2 chips and see if it’s better than my Mac Pro. It’s so crazy because that Mac Pro is so powerful with 2 huge GPUs - I think they just didn’t put a lot of love in the Mac SDK.

Honestly, build it on whatever works. I’m doing my dev on the Mac because that’s my preferred platform, but the final thing will run on Windows. The camera drivers do work on the Mac, but thanks to Apple deciding what’s best for me, I can’t add the PCIe card unless I get a monster cheese grater Mac. And it’s not worth spending that kind of money when you can build something on Intel/Windows that’s way faster and cheaper.

In any case, Xojo definitely isn’t for everybody and it’s got limitations, but I’ve been using it for so long for building in-house utility apps, I know it pretty well, and it’s able to do what I want very quickly. But to compile the software you do need to pay for a license, so there’s that.

It’s pretty depressing that the PC I just built for a LOT less money is torching my 16-core Xeon / 96GB RAM / 2x AMD Pro GPUs / cheese grater. That’s just the way it is. I should sell it now before they release the new Mac Pro.

To be fair, Apple’s hardware is generally pretty great, but never cutting edge. We are still using 2009-era Mac Pro machines for capturing HD and SD videotape. They’re solid machines that do not die. Sure, the OS is old, but it all still works!

I am pretty curious to try out the new system I’m building for our Phoenix restoration system. We have been running it on an older dual 14-core Xeon setup, but the clock speed wasn’t great, and Phoenix is heavily CPU-bound. I finally pulled the trigger on a SuperMicro motherboard and Threadripper Pro 5975WX, 128GB RAM, RTX 4070, etc. It should be crazy fast. I’m hoping to try getting Windows installed on that today or tomorrow, when I have some time. I finished the hardware side last week.

To get back on topic though, OpenCV, I’ve found, is pretty quick even on modest hardware. We’re running Sasquatch on an i9-based system and all the initial OpenCV testing I did was almost instantaneous. Based on those tests, we don’t expect any slowdowns from CPU-based processing once I put all the pieces together. (Then again, I’m using a 3.6fps camera, but still - there’s a fair bit of heavy lifting happening in OpenCV, and we should be able to do sequential color RGB scans at about 1fps if I’ve done the math right: three separate exposures, aligned, cropped, color merged, converted to the output format and written to disk.) I’m good with that.
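The math behind that 1fps figure, as a quick sanity check: with three exposures per finished frame, the camera rate alone caps throughput at 3.6 / 3, or about 1.2 frames per second, before any processing or disk time. The helper name below is made up, and it assumes nothing else (light switching, transport, writes) stalls the capture.

```python
def sequential_rgb_fps(camera_fps, exposures_per_frame=3):
    """Upper bound on finished frames per second for sequential-color capture."""
    return camera_fps / exposures_per_frame


ceiling_fps = sequential_rgb_fps(3.6)       # capture-limited ceiling
capture_time_s = 1.0 / ceiling_fps          # seconds spent exposing per finished frame
slack_at_1fps_s = 1.0 - capture_time_s      # time left per frame at a 1fps target
```

So hitting 1fps leaves a bit under 0.2 seconds per frame for everything that can’t overlap with capture.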

A friend of mine has just replaced the host computer for his 4K DIY scanner with this:

https://www.amazon.com/gp/product/B0BSF3FRLV

He reckons it’s incredible value and likes that it’s nice and small, as he doesn’t need another huge tower. He has a real scanner as well (a LaserGraphics ScanStation) but is powering the DIY one back up so he can continue development on it and use it for specific jobs, 9.5mm for example.