OK, here’s an update on 16 bit/channel processing.

The following script does the job, sort of:

threads = 12

SetFilterMTMode("DEFAULT_MT_MODE", MT_MULTI_INSTANCE)

frameStart = 0

frameStop = 3450

frameName = "HDR\HDR_%08d.png"

#####################################################################################

# 8 Bit input, reading RGB24-frames and converting them to YUV

#source = ImageSource(frameName,start=frameStart,end=frameStop,fps=18,pixel_type = "RGB24")

#source = source.ConvertToYV12(matrix="Rec709")

# 16 bit processing, reading RGB48 frames and converting them to various YUV-formats

source = ImageSource(frameName,start=frameStart,end=frameStop,fps=18,pixel_type = "RGB48")

source = source.ConvertToYUV420(matrix="Rec709")

#source = source.ConvertToYUV422(matrix="Rec709")

#source = source.ConvertToYUV444(matrix="Rec709")

denoise = source.TemporalDegrain(sad1=800,sad2=600,degrain=3,blksize=16)

amp = 4.0

size = 1.6

blurred = denoise.FastBlur(size)

TemporalDegrain_C = denoise.mt_lutxy( blurred, " i16 x "+String(amp)+" scalef 1 + * i16 y "+String(amp)+" scalef * -", use_expr=2)

TemporalDegrain_C

Prefetch(threads)

I needed to explicitly ask the ImageSource to read RGB48 and had to introduce the i16 qualifier in the mt_lutxy-call to get a similar output to the above 8 bit/channel script. Also, the needed conversion from 48 bit RGB to YUV requires yet another call (ConvertToYUV...()).
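To make the mt_lutxy expression a bit less opaque, here is what the reverse Polish string works out to per pixel. This is only a sketch of the arithmetic: x is the denoised pixel, y the blurred one, and I am assuming (from my reading of the masktools2 documentation) that with the i16 qualifier the scalef keyword leaves amp unchanged on a 16 bit clip.

$$
\text{out} = x\,(\text{amp}+1) - y\cdot\text{amp} = x + \text{amp}\,(x - y)
$$

$$
\text{amp}=4,\quad x=32768,\quad y=32000:\qquad \text{out} = 32768 + 4\cdot 768 = 35840
$$

The same pixel in 8 bit (x = 128, y = 125) gives 128 + 4 · 3 = 140, and 140 · 256 = 35840, so with an unscaled amp both bit depths sharpen by the same relative amount. If amp were instead scaled up from the 8 bit to the 16 bit range, the difference term would be amplified by roughly that factor as well, which is presumably why the qualifier is needed.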

However, the output of the TemporalDegrain-Filter is not the same as in the 8-bit case.

[Update:]

That is actually expected, as the SAD and other values will need to be adjusted. Also, as the processing path also uses mt_lutxy-magic, I suspect that some of the parameters need to be adapted accordingly. You might want to experiment…

I ran this script on 16 bit/channel source files (.png) with VirtualDub2. In order to get 16 bit/channel output frames, I needed to store the results as .tiff files. Rendering out the footage with the “x264 10-bit” codec was also possible with VirtualDub2.

Reading the image files as RGB24 allows only 8-bit internal processing. With a source resolution of 1600 x 1200 px, I get 7.0 GB memory usage, 64% CPU and 5.2 fps processing speed. Reading the image files as RGB48 gets you 16 bit internal processing. Depending on the YUV colorspace used, memory usage and fps will vary. With ConvertToYUV420 (my best bet), you need about 8.5 GB memory, use 53% CPU and get 3.5 fps. ConvertToYUV422 (probably already overkill in terms of color resolution) results in 9.7 GB memory, 61% CPU and 2.7 fps processing speed. Finally, ConvertToYUV444 gives, on my system, 13.2 GB memory, 44% CPU and 1.9 fps.
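As a rough cross-check of why the formats differ in cost, the size of one uncompressed frame can be estimated from the chroma subsampling. This is a back-of-the-envelope sketch only: it ignores row padding, frame caches and whatever buffers the filters allocate, and the symbols W, H, b (bytes per sample) and c (chroma plane size relative to luma) are my own shorthand, not AviSynth names.

$$
\text{bytes per frame} = W \cdot H \cdot b \cdot (1 + 2c), \qquad c = \tfrac{1}{4}\ (4{:}2{:}0),\quad \tfrac{1}{2}\ (4{:}2{:}2),\quad 1\ (4{:}4{:}4)
$$

$$
\begin{aligned}
\text{8 bit, 4:2:0:}\quad & 1600 \cdot 1200 \cdot 1 \cdot 1.5 = 2\,880\,000 \\
\text{16 bit, 4:2:0:}\quad & 1600 \cdot 1200 \cdot 2 \cdot 1.5 = 5\,760\,000 \\
\text{16 bit, 4:2:2:}\quad & 1600 \cdot 1200 \cdot 2 \cdot 2 = 7\,680\,000 \\
\text{16 bit, 4:4:4:}\quad & 1600 \cdot 1200 \cdot 2 \cdot 3 = 11\,520\,000
\end{aligned}
$$

So a 16 bit 4:4:4 frame carries twice the data of a 16 bit 4:2:0 frame and four times that of the 8 bit frame. The measured memory totals above grow less steeply than that, so they clearly contain more than just frame data.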

[End of Update]

The 8-bit/channel image looks fine, the 16 bit/channel image less so. It seems to me that some scaling in the TemporalDegrain filter is not right. Left is the 8 bit/channel result, right the 16 bit/channel result:

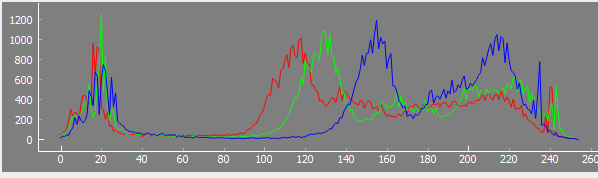
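For anyone who wants to reproduce this comparison, a side-by-side view can be built along the following lines. Treat it as a sketch, not the exact script I used: the clip names src8, src16, out8 and out16 are made up for the example, the sharpening step is left out on purpose so that only TemporalDegrain is compared, and the 16 bit result is reduced to 8 bit purely so that both halves have the same format for stacking.

```
# Hypothetical comparison script: same frames through the 8 bit and the 16 bit path
frameName  = "HDR\HDR_%08d.png"
frameStart = 0
frameStop  = 3450

# 8 bit/channel path
src8 = ImageSource(frameName, start=frameStart, end=frameStop, fps=18, pixel_type="RGB24")
src8 = src8.ConvertToYV12(matrix="Rec709")
out8 = src8.TemporalDegrain(sad1=800, sad2=600, degrain=3, blksize=16)

# 16 bit/channel path with identical filter settings
src16 = ImageSource(frameName, start=frameStart, end=frameStop, fps=18, pixel_type="RGB48")
src16 = src16.ConvertToYUV420(matrix="Rec709")
out16 = src16.TemporalDegrain(sad1=800, sad2=600, degrain=3, blksize=16)

# Reduce to 8 bit for display only, so that both clips share one format
out16 = out16.ConvertBits(8)

# Left: 8 bit result, right: 16 bit result
StackHorizontal(out8, out16)
```

The reduction to 8 bit hides the finer gradations of the 16 bit path, but a gross scaling problem of the kind suspected here should still show up.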

Looking at the histograms, here for the 8 bit/channel image

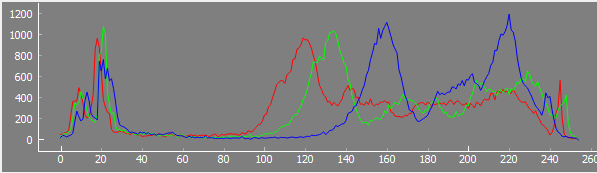

and here for the 16 bit/channel image:

One can see that the 16 bit/channel result looks cleaner in the histograms, as expected.

So, I consider this a “mixed result”. While it is possible to run an AviSynth script with 16 bit/channel, it seems that a lot of detailed rework of the filters employed is necessary to actually succeed.