I beg to differ on this one. The result is three numbers, but what matters is what those numbers capture. In this case I speculate that the resulting Gw will be influenced by the broader-spectrum component Yw, since G is arithmetically the primary component of Y. My hypothesis is that the additional spectrum (information) captured as Yw with the broad illuminant (white in this case) will produce visible changes in the resulting G channel.
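To show the arithmetic I have in mind, here is a minimal sketch. It assumes Y behaves like Rec. 709 luma (weights 0.2126, 0.7152, 0.0722), which is only an approximation of what a sensor records under a white LED. The function names and sample values are hypothetical.

```python
# Assumed Rec. 709 luma weights; a real white-light capture will not match them exactly.
KR, KG, KB = 0.2126, 0.7152, 0.0722

def luma(r: float, g: float, b: float) -> float:
    """Weighted sum of R, G, B; G carries about 72% of the weight."""
    return KR * r + KG * g + KB * b

def derive_g(y: float, r: float, b: float) -> float:
    """Solve the luma equation for G, given a Y capture plus R and B captures."""
    return (y - KR * r - KB * b) / KG

# Hypothetical pixel: Yw from the white pass, R and B from narrow-band passes.
yw, r_narrow, b_narrow = 0.50, 0.30, 0.20
gw = derive_g(yw, r_narrow, b_narrow)
print(f"Derived Gw: {gw:.4f}")
```

Because G has the largest weight, whatever extra spectrum ends up in Yw lands mostly in the derived Gw.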

To make an analogy, when one is digitizing music, each sample is only one number. But if the sound is filtered before capture (which is what the LEDs are doing), the information in the channel (the components of the music) is not preserved.

Which is better, a narrow G or a wide G?

I do not have enough understanding to make that choice, except that, from a signal perspective, there is more signal in Gw (and also more noise).
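As a rough illustration of "more signal and also more noise", here is a minimal sketch assuming the capture is limited by photon shot noise, so that the noise is the square root of the collected signal. The photon counts are made up for the example.

```python
import math

def shot_noise_stats(photon_count: float) -> tuple[float, float]:
    """Return (noise, SNR) for a shot-noise-limited pixel collecting photon_count photons."""
    noise = math.sqrt(photon_count)
    return noise, photon_count / noise

# Hypothetical photons per pixel for a narrow G LED and for the wider Gw.
for label, photons in (("narrow G", 10_000.0), ("wide Gw", 40_000.0)):
    noise, snr = shot_noise_stats(photons)
    print(f"{label}: signal {photons:.0f}, noise {noise:.0f}, SNR {snr:.0f}")
```

Under that assumption the noise does grow with the wider band, but more slowly than the signal, so the ratio improves.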

If time and storage are not an issue, one could achieve something similar for film with a monochrome sensor and multiple exposures under narrower-band LEDs. But to the human eye, all of that would still become three numbers, as you point out.

If that is done, one could blend those exposures to effectively widen the bandwidth of each RGB channel, combining only the exposures whose wavelength falls within that channel. I am not sure it is worth the time and storage.
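A minimal sketch of that blending, for a single pixel. The LED wavelengths, readings, and band edges are all hypothetical, and a plain average is only one possible choice of weights.

```python
# Hypothetical readings of one pixel from a monochrome sensor, one exposure per LED.
# Keys are LED peak wavelengths in nm, values are normalized readings.
exposures = {450: 0.20, 470: 0.25, 520: 0.60, 540: 0.70, 560: 0.50, 620: 0.30, 660: 0.10}

# Assumed channel bands in nm; real edges would depend on the film dyes.
bands = {"B": (400, 500), "G": (500, 600), "R": (600, 700)}

def blend(readings: dict, band: tuple) -> float:
    """Average only the exposures whose LED wavelength falls inside the band."""
    low, high = band
    selected = [value for wavelength, value in readings.items() if low <= wavelength < high]
    if not selected:
        raise ValueError(f"no exposure falls inside band {band}")
    return sum(selected) / len(selected)

rgb = {name: blend(exposures, band) for name, band in bands.items()}
print({name: round(value, 3) for name, value in rgb.items()})
```

Each extra LED inside a band widens what that channel "sees", at the cost of one more exposure per frame.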

Other valid use cases are UV and IR exposures, to detect other physical aspects of the film.

Agree. Here the practical workaround is exposure stacking.

Agree.

Agree. My thought process here is that if the grain is better defined, the digital cleaning process would also be more precise.

Exactly.

The Ektachrome post is 12-bit raw with a white LED.

The film is no longer capable of faithfully reproducing the scene colors. When increasing channel gain in color correction, one should try to have a source with sufficient range to avoid digital artifacts and noise resulting from a limited-range capture.
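To put rough numbers on the range concern, here is a minimal sketch. It assumes a faded channel occupies only a fraction of the capture range and is then stretched linearly to full scale. The 25% figure and the function name are hypothetical.

```python
import math

def stretch_cost(bit_depth: int, used_fraction: float) -> tuple[float, float]:
    """Return (gain needed to fill the range, effective bits left after that gain)."""
    levels_used = (2 ** bit_depth) * used_fraction
    gain = 1.0 / used_fraction
    return gain, math.log2(levels_used)

# A faded channel that uses only a quarter of the available codes (made-up figure).
for depth in (8, 12, 16):
    gain, bits = stretch_cost(depth, 0.25)
    print(f"{depth}-bit capture: gain x{gain:.0f}, about {bits:.1f} effective bits")
```

The gain does not create new levels; it only spreads the captured ones further apart, which is where banding and amplified noise come from.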

In these cases, would white, or a tri-pass of Y-B-R, be a better source image than narrow-band RGB? I think the answer is yes. By source image I mean one that requires processing and correction to render a faithful scene.

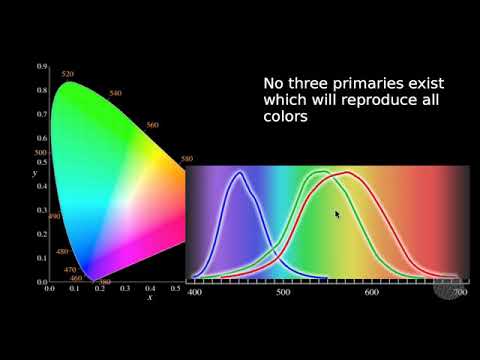

I will certainly read these, although I will probably not understand all of it. I think the key to the narrow-band versus wide-band RGB question is this article

The number that represents each channel captures the power within a given spectral band: the cascaded band of illuminant, film, lens, sensor, processing, correction, and display.

There is a single G number, which represents the power that will be emitted by the display and is derived from the power captured by the sensor.

How is the G number influenced/changed when the power counted by the sensor is received from a chain with a wide or narrow illuminant?

It will still be one number, but I argue it will not be the same number if there is adjacent power at neighboring wavelengths, that is, adjacent signals (what I called information above).

The number may be different from the one obtained when that adjacent power is filtered out by the narrow illuminant.
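A minimal sketch of that argument, treating the G number as the sum over wavelength of illuminant × film transmittance × sensor sensitivity. Every spectrum here is a made-up Gaussian or flat curve, not measured data, and each result is normalized against clear film the way a white balance would.

```python
import math

WAVELENGTHS = range(400, 701, 5)  # nm, coarse sampling grid

def gaussian(wl: float, center: float, width: float) -> float:
    return math.exp(-0.5 * ((wl - center) / width) ** 2)

def sensor_g(wl):    # hypothetical green sensitivity of the sensor
    return gaussian(wl, 530, 40)

def narrow_led(wl):  # hypothetical narrow-band green LED
    return gaussian(wl, 530, 8)

def white_led(wl):   # idealized flat white illuminant
    return 1.0

def clear_film(wl):  # reference: nothing absorbed
    return 1.0

def film_patch(wl):  # hypothetical dye absorbing next to the LED peak
    return 1.0 - 0.8 * gaussian(wl, 550, 15)

def channel_value(illuminant, film) -> float:
    """Power counted in G: illuminant x film x sensor, summed over wavelength."""
    return sum(illuminant(wl) * film(wl) * sensor_g(wl) for wl in WAVELENGTHS)

def normalized_g(illuminant) -> float:
    """G for the film patch relative to clear film under the same illuminant."""
    return channel_value(illuminant, film_patch) / channel_value(illuminant, clear_film)

g_narrow = normalized_g(narrow_led)
g_wide = normalized_g(white_led)
print(f"G with narrow LED: {g_narrow:.3f}, G with white LED: {g_wide:.3f}")
```

With these made-up curves the same film patch yields two different G numbers, because the white illuminant lets the sensor weigh in the transmittance at neighboring wavelengths that the narrow LED never probes.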

I think that narrow blue and narrow red are an acceptable trade-off, given that the eye's ability to see detail at those wavelengths is lower than in green. The payoff is a wide-band green, where most of the detail is perceived by the eye.

Thanks for taking the time to exchange these perspectives. There is certainly no one-size-fits-all in the world of film scanning, and it is good to have a better understanding of the choices one has when assembling a scanner.

I have been watching this presentation to better familiarize myself with color science in film; it is what created the itch for the wider-band illuminant.