Hey everyone,

I’m currently researching how to improve dust and dirt removal in my post-processing, and I would be interested to hear which methods everyone else is using, or any other methods people might be aware of. So please feel free to describe your own approaches, and your opinions of others, at length below. I’m hoping that this thread can become a collection of information on dust and scratch removal.

In the rest of this post, I will describe my own approach, why I’m not perfectly happy with it, and the direction I’m currently researching to improve it.

My current workflow and its problems

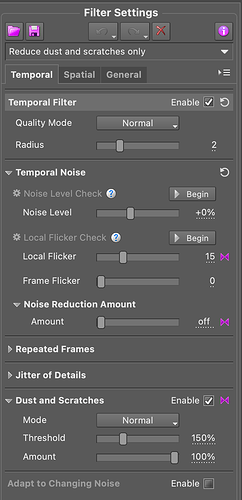

Right now, I am using Neat Video, a plugin for all kinds of denoising tasks that is available for most commonly used video editing programs. However, I use Neat Video primarily for its dust and scratch removal rather than for denoising. I’ve outlined this in a little more detail in my recent post on my own scanner build and in the README of the corresponding GitHub repository. To spare everyone the search, here are the Neat Video settings I’m using:

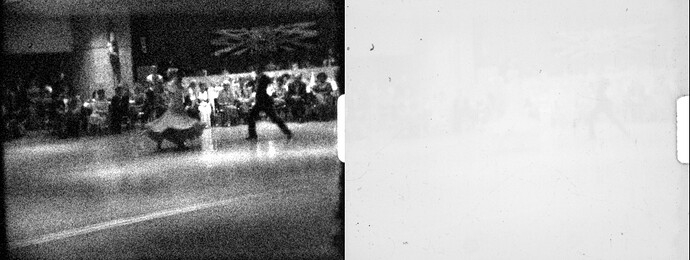

Overall, I’m actually very happy with Neat Video. Most of the time, the software performs great and works as a one-click solution … until it doesn’t! Every now and then, Neat Video fails at its job and produces the weirdest artefacts, such as blotches. Sometimes it also removes people’s limbs or other fast-moving objects, and water reliably ends up looking a bit off. This article points to some of these issues as well. Most of these artefacts are not immediately visible on a first watch-through, but at some later point you will watch your video, spot one and be very annoyed by it. As a result, my current workflow is to apply Neat Video almost by hand, only to the frames, or even parts of frames, that contain obvious and disturbing dust or dirt. The goal here is not to make the film look perfect, but rather to make it look as good as a very clean film would have looked when projected back in the day. Furthermore, I generally go by the principle that “natural” dust or scratches are better than weird digital artefacts.
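Neat Video’s exact algorithm isn’t described in this post, so the following is only a generic illustration of one common principle behind automatic dust removal, not Neat Video’s method. Dust and dirt usually appear on a single frame, while the actual image content persists across neighbouring frames. The minimal Python/NumPy sketch below flags pixels that are much brighter or darker than the same pixel in both the previous and the next frame, then replaces them with the per-pixel temporal median. The function names and the threshold of 30 are hypothetical choices for illustration, and the sketch deliberately does no motion compensation.

```python
import numpy as np


def detect_single_frame_defects(prev, cur, nxt, threshold=30):
    """Flag pixels in `cur` that differ strongly from BOTH neighbouring frames.

    Hypothetical helper: a pixel much brighter (or much darker) than the same
    pixel in the previous AND next frame is treated as a candidate defect.
    No motion compensation is done, so fast-moving content is flagged too.
    """
    c = cur.astype(np.int16)               # avoid uint8 wrap-around
    d_prev = c - prev.astype(np.int16)
    d_next = c - nxt.astype(np.int16)
    brighter = (d_prev > threshold) & (d_next > threshold)
    darker = (d_prev < -threshold) & (d_next < -threshold)
    return brighter | darker


def repair(prev, cur, nxt, mask):
    """Replace flagged pixels with the per-pixel median of the three frames."""
    median = np.median(np.stack([prev, cur, nxt]), axis=0).astype(cur.dtype)
    out = cur.copy()
    out[mask] = median[mask]
    return out


# Case 1: static grey frames, dark dust speck on the middle frame only.
bg = np.full((8, 8), 128, dtype=np.uint8)
cur = bg.copy()
cur[2, 2] = 10
mask = detect_single_frame_defects(bg, cur, bg)
fixed = repair(bg, cur, bg, mask)
print(int(mask.sum()), int(fixed[2, 2]))  # 1 128: speck found and removed

# Case 2: a bright object moving quickly across frames (column 1 -> 4 -> 7).
prev, moving, nxt = bg.copy(), bg.copy(), bg.copy()
prev[4, 1] = moving[4, 4] = nxt[4, 7] = 250
moving_mask = detect_single_frame_defects(prev, moving, nxt)
moving_fixed = repair(prev, moving, nxt, moving_mask)
print(int(moving_mask.sum()), int(moving_fixed[4, 4]))  # 1 128: object erased
```

Case 2 mirrors the failure mode with fast-moving limbs: anything that shows up in a different place on every frame looks like dust to a purely temporal test. Practical tools add motion estimation to reduce this, and content that is hard to track, such as water, is one plausible reason such methods still stumble.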

The issue with this approach is that it takes a LONG time, often hours for just a 15 m roll of Super 8 film. As a result, it slows down my scanning process significantly, which is why I am looking for a better solution.

Goals for the future

What I would like is a workflow that is reliable enough to be applied as a one-click solution, i.e. one that removes most of the dust while producing (almost) no visible artefacts. Compute time is not much of a concern here, at least not for me, since you can just set the software running and do other things in the meantime.

I’m not aware of any other approaches that can deliver this kind of performance. I know that DaVinci Resolve has similar tools to Neat Video (Dust Buster?), but my understanding is that they work in a very similar way and share many of the same strengths and weaknesses. I’m interested in other people’s opinions on this, though.

Otherwise, I am planning to do some research on using neural networks for this. This is my professional background, so to me it seems like the obvious tool for the job. Unfortunately, I’m not aware of any recent publications trying something similar, not even for dust removal on still images (film scans). If a good solution for the latter already exists, it would absolutely be the first thing to try on our motion picture films.

Closing words

With all that said, I’m looking forward to seeing other people’s solutions, and I hope that this thread can become this forum’s collection of information on dust and scratch removal.

![[ECCV 2022] Demo Video: Flow-Guided Transformer for Video Inpainting](https://forums.kinograph.cc/uploads/default/original/2X/9/9acfe074b2e88a825e1d429f7d82bbabb9086e3c.jpeg)