Hi all!

After 3 years of working on this project on and off, it’s finally time to post my finished build.

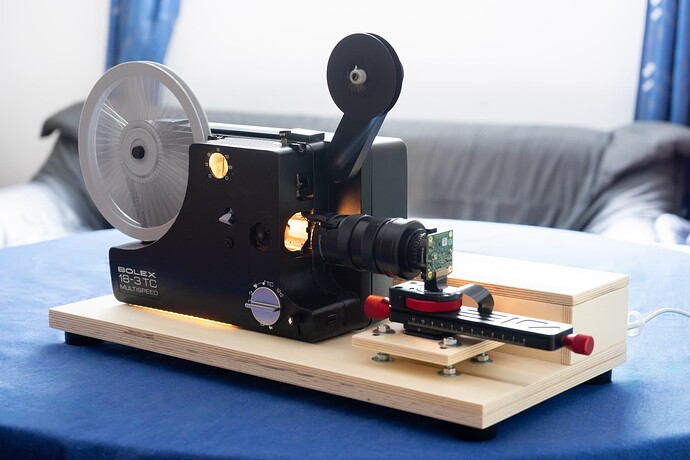

Without further ado, here is the finished 8mm Film Scanner.

TL;DR: All details on how this scanner was built, including the software, are available on GitHub: GitHub - jank324/8mm-film-scanner: Conversion of an old projector to a Regular 8 and Super 8 film scanner. Sample scans and a screenshot of the software can be found below.

I started building this scanner and following the forum over 3 years ago, when I was given my grandfather’s collection of Regular 8mm and Super 8 films. Originally, I wanted to build a Kinograph-style scanner completely from scratch, but I soon realised that this was a tall order. My focus switched to converting an old projector when I found a very beat-up Bolex 18-3 TC in my local area for very cheap, and around the same time, Scott Schiller released an excellent and inspiring video on his projector conversion over on YouTube. With this change in direction, the project was finally making serious progress, and about 1.5 years ago, at the end of 2021, the scanner was finally “finished”, and I could start properly scanning and archiving the extensive collection of film reels I have. Since then, I have scanned over 50 reels, and while some quirks of this machine have made themselves known and some things were never quite finished properly (“Nothing lasts as long as a makeshift solution.”), I can say that I am really happy with the results I’m getting.

The overall concept is very similar to other frame-by-frame machines, such as Scott Schiller’s as well as the build by @Manuel_Angel posted on the forum many moons ago. Similar to them, I have replaced the motor of the projector with a stepper motor that is controlled by a Raspberry Pi. Also similar to many scanner builds, I am using a Raspberry Pi HQ Camera with a Schneider Kreuznach Componon-S 50mm enlarger lens. I have written some more about the camera setup in another thread. The entire scanner is tied together by a wooden base plate that also comes with a case to house the Pi and some other electrical components. This makes the scanner super easy to move around and store. A nice (but probably unnecessary) touch is that the entire scanner can be powered via an off-the-shelf USB-C power supply like the one you might already be using to charge your phone or laptop. It feels good to be able to comply with upcoming EU regulations.

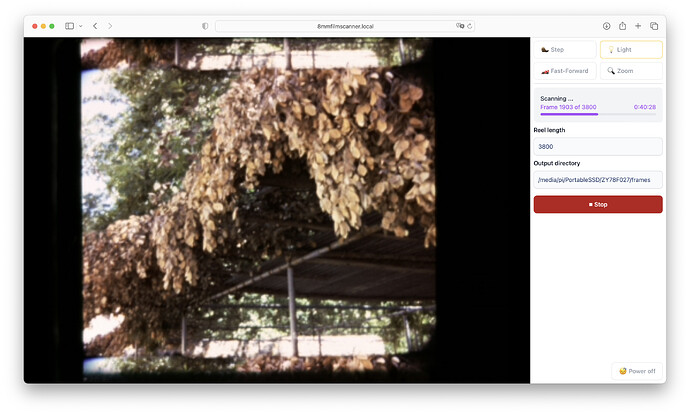

Where I believe this build differs from some of the others I’ve seen on the forum is the software. Of course, most of it is also written in Python, but unlike other scanner software, it does not need a connection to a client PC during scanning. Instead, the Pi provides a web interface (shown below) that can be used to set up the scan, and after that the scanner runs independently, saving frames to an external SSD connected to the Pi.

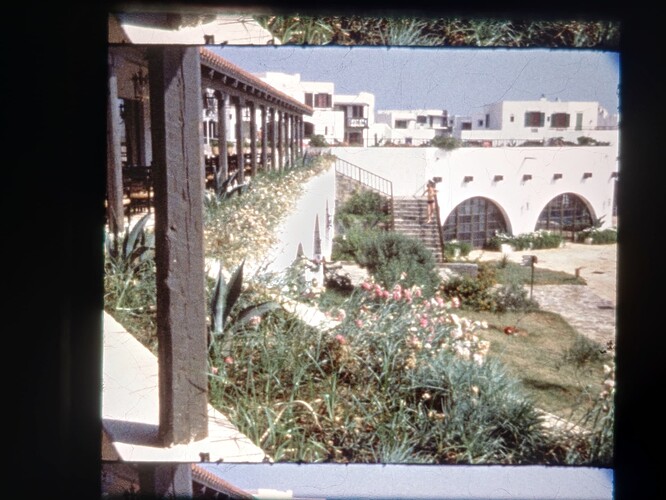

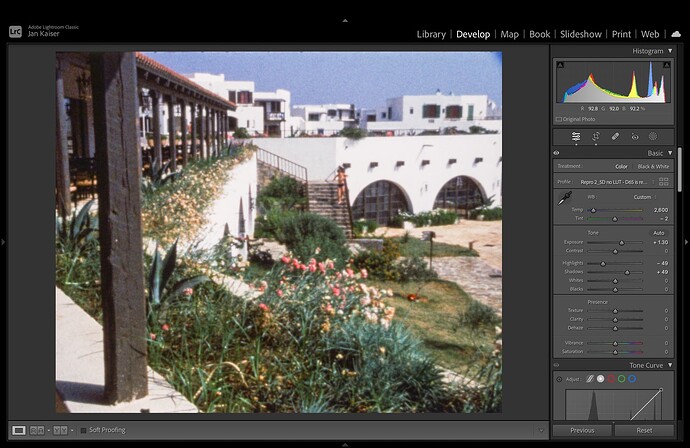

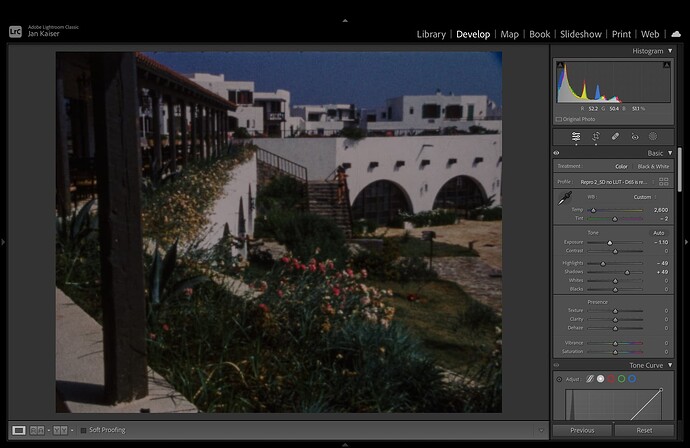
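To illustrate the idea of a scan that runs on its own once it has been set up, here is a minimal sketch. It is not the code from the repository: the function name `run_scan`, the file naming scheme and the two callables `advance_frame` and `capture_frame` are assumptions made up for this example. On the real machine they would stand for stepping the motor by one frame and grabbing one raw image from the camera.

```python
from pathlib import Path
from typing import Callable, List


def run_scan(
    n_frames: int,
    output_dir: Path,
    advance_frame: Callable[[], None],
    capture_frame: Callable[[], bytes],
) -> List[Path]:
    """Capture n_frames images, saving each one before moving the film on.

    advance_frame and capture_frame are hypothetical hooks: the first would
    drive the stepper motor by one frame, the second would return the raw
    bytes of one camera capture.
    """
    output_dir.mkdir(parents=True, exist_ok=True)
    saved: List[Path] = []
    for index in range(n_frames):
        data = capture_frame()
        # Zero-padded names keep the frames in order when sorted by name.
        path = output_dir / f"frame-{index:05d}.raw"
        path.write_bytes(data)
        saved.append(path)
        # Only advance once the frame is safely on disk.
        advance_frame()
    return saved
```

Because the hardware access is passed in as plain functions, the loop can be tried out on any computer with dummy callables, for example `run_scan(3, Path("test-reel"), lambda: None, lambda: b"dummy")`, before it is wired up to a motor and a camera.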

The scanner saves RAW images from the HQ Camera. These are then post-processed on my laptop after the scan. A Python script converts the raw data produced by the HQ Camera into .dng files, which are then edited in Adobe Lightroom via a preset. Next, I use Apple Compressor to produce a video file which is stabilised and cleaned of dust in Final Cut Pro.
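One step of such a conversion can be sketched as follows. The HQ Camera's sensor produces 12-bit values, and a common packed layout (the one the Linux V4L2 documentation describes for packed 12-bit Bayer formats) stores two pixels in three bytes: the first two bytes hold the upper 8 bits of each pixel, and the third byte holds the lower 4 bits of both. The sketch below assumes that layout and ignores headers and row padding that a real capture may contain. The function name `unpack_raw12` is made up for this example and this is not the script from the repository.

```python
from typing import List


def unpack_raw12(packed: bytes) -> List[int]:
    """Unpack 12-bit pixel values stored as two pixels per three bytes.

    Assumed layout per 3-byte group:
      byte 0: bits 11..4 of pixel 0
      byte 1: bits 11..4 of pixel 1
      byte 2: bits 3..0 of pixel 1 (upper nibble), bits 3..0 of pixel 0 (lower nibble)
    """
    if len(packed) % 3 != 0:
        raise ValueError("packed 12-bit data must be a multiple of 3 bytes long")
    pixels: List[int] = []
    for i in range(0, len(packed), 3):
        high0, high1, low = packed[i], packed[i + 1], packed[i + 2]
        pixels.append((high0 << 4) | (low & 0x0F))
        pixels.append((high1 << 4) | (low >> 4))
    return pixels
```

For a full frame, a vectorised version (for example with NumPy) would be much faster. The plain loop is only meant to show the bit layout.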

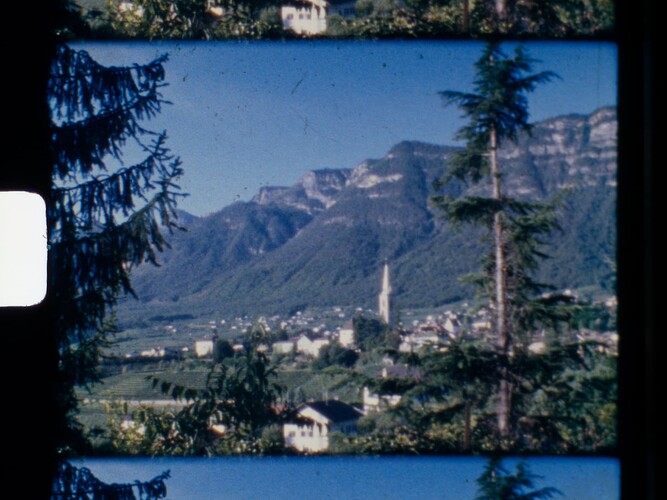

Here is a sample video of what the end result looks like:

If you would like to use the software for your own scanner or simply read up on every technical detail about my build, I would like to point you to the following GitHub repository where I have tried to collect absolutely everything related to this project: GitHub - jank324/8mm-film-scanner: Conversion of an old projector to a Regular 8 and Super 8 film scanner.

Questions, suggestions and comments are highly welcome!

Last but certainly not least, I would like to thank the members of this forum for the amazing corner of the internet that has been created here. The knowledge collected in this forum is outright amazing and was a huge help for me and surely also for others building such a film scanner to preserve works of art, documents of the past or precious memories.