Building the Sphere

Previously, I shared the findings (failures) from the first round of the SnailScan.

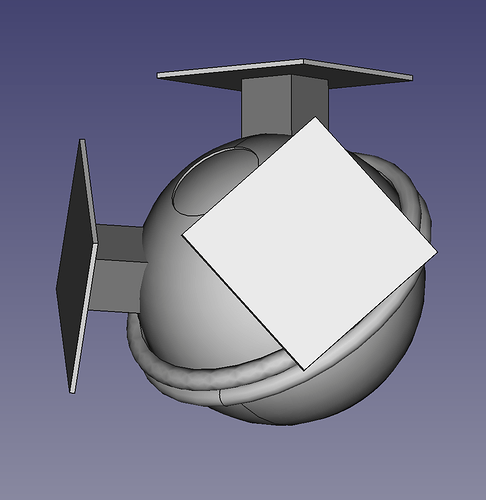

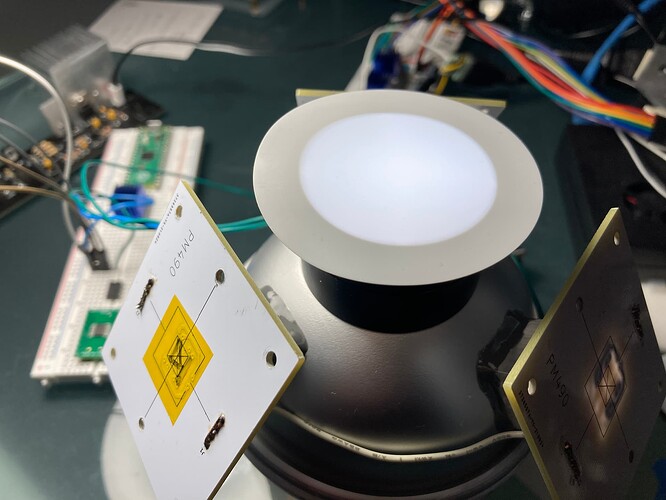

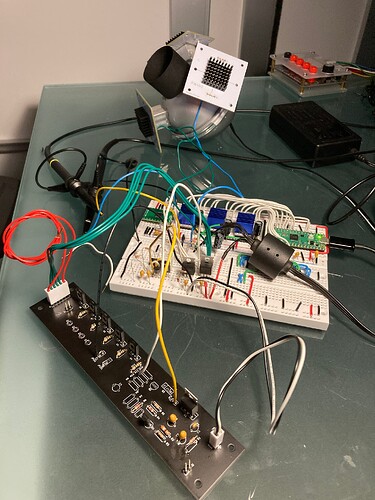

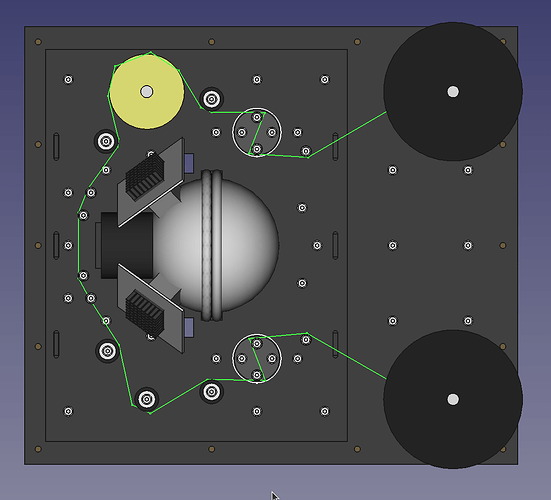

I have been progressing mostly on design choices, and began Frankensteining as much as possible into a prototype: first the transport, now the illuminant. The goal is to button up as much as possible before the next round of PCBing and metal cutting.

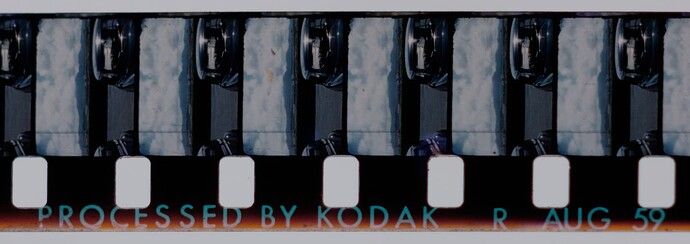

For SnailScan2, the plan was to build a larger sphere that could accommodate at minimum roughly 3 frames of 16 mm film.
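As a rough check of what "about 3 frames" means for the port size, here is a minimal Python sketch. It assumes the standard 16 mm perforation pitch of 7.62 mm per frame and treats the output port as a flat circle (the actual 39 mm port sits on a curved surface); the function names are hypothetical. It computes the diagonal of a strip covering N frames across the full 16 mm film width, and the largest N whose strip fits inside a circular port.

```python
import math

FRAME_PITCH_MM = 7.62   # assumed standard 16 mm perforation pitch (0.300 in)
FILM_WIDTH_MM = 16.0    # full film width, edge to edge


def strip_diagonal(frames: int, pitch: float = FRAME_PITCH_MM,
                   width: float = FILM_WIDTH_MM) -> float:
    """Diagonal of the rectangle covering `frames` frames across the full film width."""
    return math.hypot(width, frames * pitch)


def max_frames_in_port(port_diameter: float, pitch: float = FRAME_PITCH_MM,
                       width: float = FILM_WIDTH_MM) -> int:
    """Largest frame count whose full-width strip fits inside a circular port."""
    n = 0
    while strip_diagonal(n + 1, pitch, width) <= port_diameter:
        n += 1
    return n


if __name__ == "__main__":
    port = 39.0  # output port diameter of the prototype sphere (mm)
    for n in (3, 4, 5):
        d = strip_diagonal(n)
        print(f"{n} frames: {n * FRAME_PITCH_MM:.2f} x {FILM_WIDTH_MM:.0f} mm, "
              f"diagonal {d:.1f} mm, fits: {d <= port}")
    print("max frames:", max_frames_in_port(port))
```

Under these assumptions, three frames need a strip diagonal of roughly 28 mm, so a 39 mm port leaves some margin; four frames would still fit, five would not.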

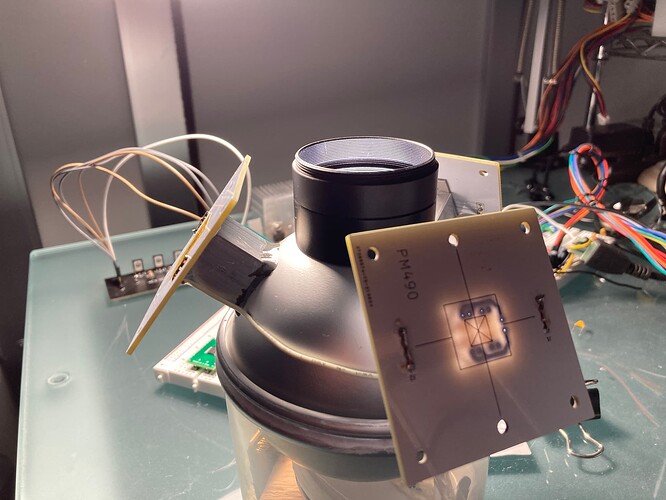

In replies to other posts, I have mentioned the half-sphere aluminum baking mold as an option. The sphere was built from two hemispherical aluminum baking molds (90 mm diameter). On one half, 3 round entry ports (12 mm diameter) were drilled with a step bit, and one large output port (39 mm diameter) was cut. The entry ports were extended with 3/4-inch square aluminum tube, epoxy-welded to the sphere half. The finished parts were sanded with 200-grit paper and painted with multiple coats of a mixture of barium sulfate diluted in distilled water and white acrylic paint.
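The ratio between total port area and sphere wall area is what usually drives sphere efficiency. The minimal Python sketch below computes the port fraction for this build (90 mm sphere, one 39 mm output port, three 12 mm entry ports), using a flat-disc approximation for each port, and then the textbook sphere multiplier M = rho / (1 - rho * (1 - f)). The reflectance rho of the barium sulfate/acrylic paint was not measured, so the values tried here are assumptions.

```python
import math


def port_fraction(sphere_diameter: float, port_diameters: list[float]) -> float:
    """Fraction of the sphere wall taken up by ports (flat-disc approximation)."""
    sphere_area = math.pi * sphere_diameter ** 2          # 4 * pi * R^2
    port_area = sum(math.pi * d ** 2 / 4 for d in port_diameters)
    return port_area / sphere_area


def sphere_multiplier(reflectance: float, f: float) -> float:
    """Integrating-sphere multiplier M = rho / (1 - rho * (1 - f))."""
    return reflectance / (1.0 - reflectance * (1.0 - f))


if __name__ == "__main__":
    # 90 mm sphere: one 39 mm output port plus three 12 mm entry ports
    f = port_fraction(90.0, [39.0, 12.0, 12.0, 12.0])
    print(f"port fraction: {100 * f:.1f} %")
    for rho in (0.90, 0.95, 0.98):   # assumed wall reflectances
        print(f"rho={rho:.2f}: M={sphere_multiplier(rho, f):.1f}")
```

With these dimensions the port fraction comes out at about 6%, slightly above the roughly 5% often quoted as a guideline, so this is a relatively large port for the sphere size. The multiplier is very sensitive to rho, so a real reflectance measurement would change the absolute values.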

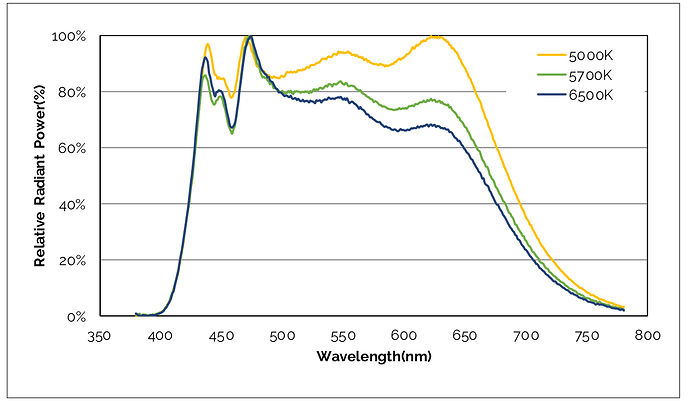

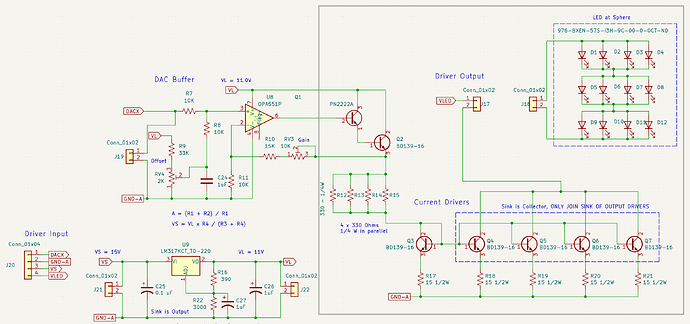

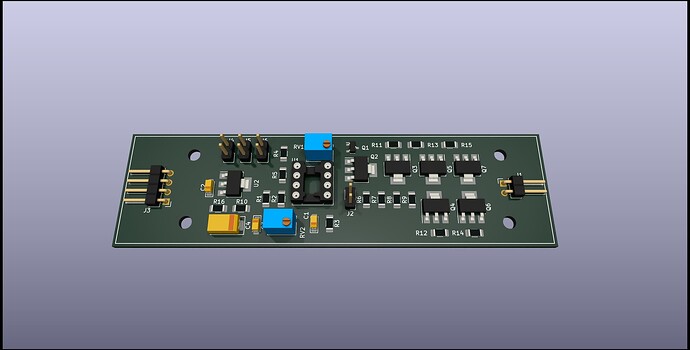

The prototype reuses LED PCBs from a previous design and part of the constant-current driver I shared previously. Each PCB has four LEDs, each driven at about 0.7 W. The LEDs are rated CRI > 95; see the 5000K curve.
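Because the driver is constant-current, the 0.7 W per LED figure corresponds to a drive current that depends on each LED's forward voltage. A minimal sketch, assuming a forward voltage of about 3.0 V (a typical value for white LEDs, not a figure from these boards' datasheet), computes the implied current and the total electrical power for two and three boards. The names are hypothetical.

```python
LEDS_PER_BOARD = 4
WATTS_PER_LED = 0.7      # approximate drive power per LED, from the post
ASSUMED_VF_V = 3.0       # hypothetical forward voltage; check the LED datasheet


def led_current_a(watts_per_led: float, forward_voltage_v: float) -> float:
    """Current implied by P = I * Vf for a single LED."""
    return watts_per_led / forward_voltage_v


def total_power_w(boards: int, leds_per_board: int = LEDS_PER_BOARD,
                  watts_per_led: float = WATTS_PER_LED) -> float:
    """Electrical power for a number of boards, all LEDs at the same drive."""
    return boards * leds_per_board * watts_per_led


if __name__ == "__main__":
    print(f"per-LED current: {1000 * led_current_a(WATTS_PER_LED, ASSUMED_VF_V):.0f} mA")
    for boards in (2, 3):
        print(f"{boards} boards: {total_power_w(boards):.1f} W")
```

Two boards come to about 5.6 W, in line with the "about 6 W" figure in the conclusions; all three boards would be about 8.4 W.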

Plenty of light, and too much to use continuously at full power (unless baking something).

Because I was reusing the existing LED boards, the prototype needed a connector cylinder from the sphere to the gate to keep the light from spilling everywhere. This separation makes clear that the distance between the film and the sphere surface matters: both the intensity and the directionality of the light change slightly.

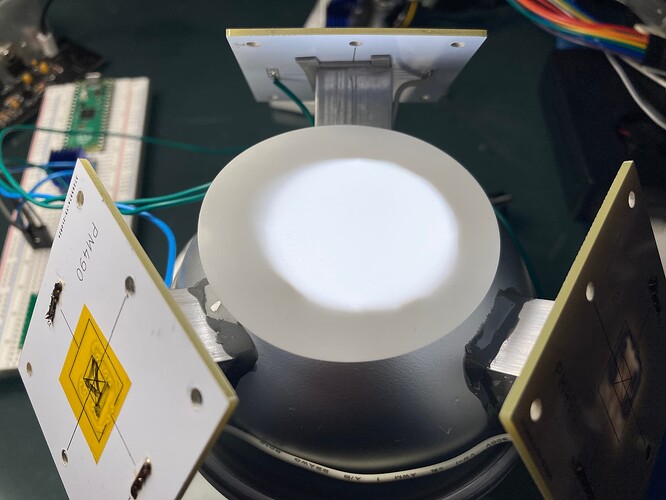

Most of the spheres on the forum keep the film close to the sphere, and, if my interpretation is correct, that also provides more diffuse, less directional light. When the output port is moved away from the film, the same light comes out, but the cylinder narrows the range of angles reaching the film, making the light a bit more directional (collimated) and less diffuse than at the surface. The effect is very subtle.

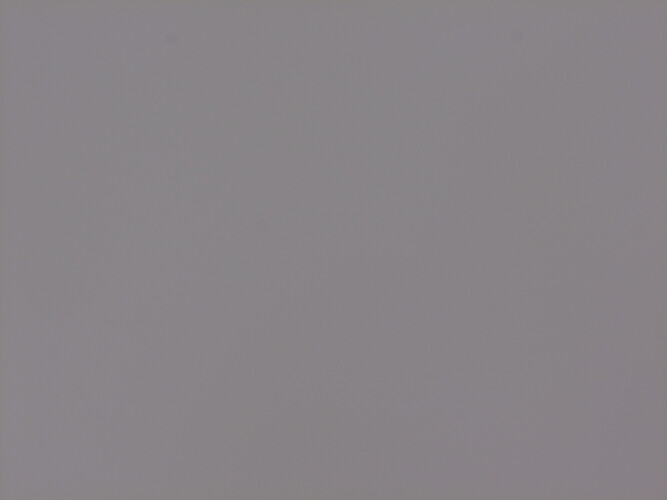

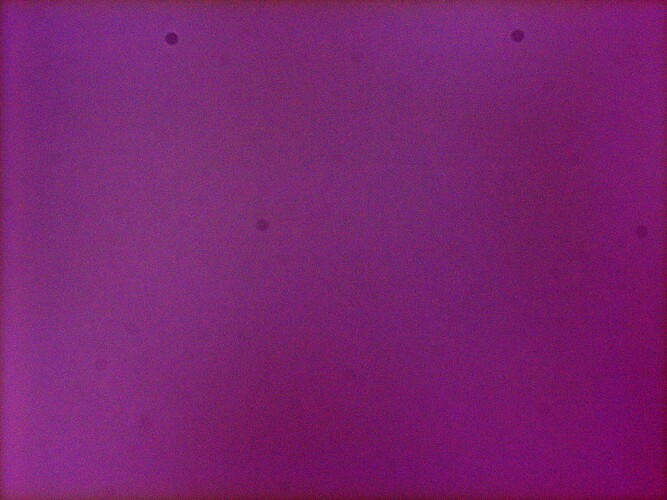

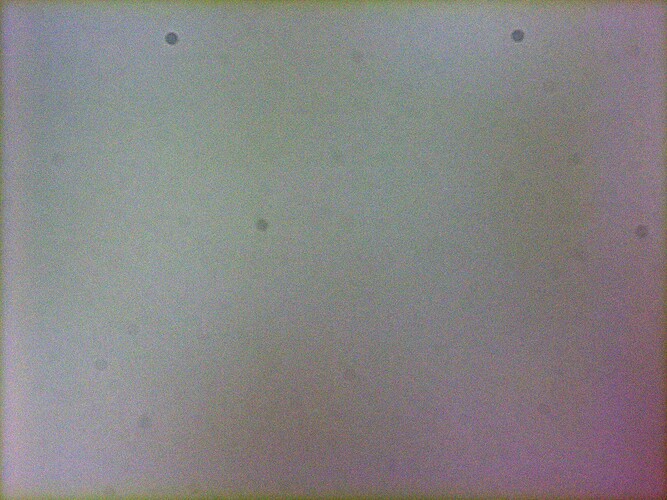

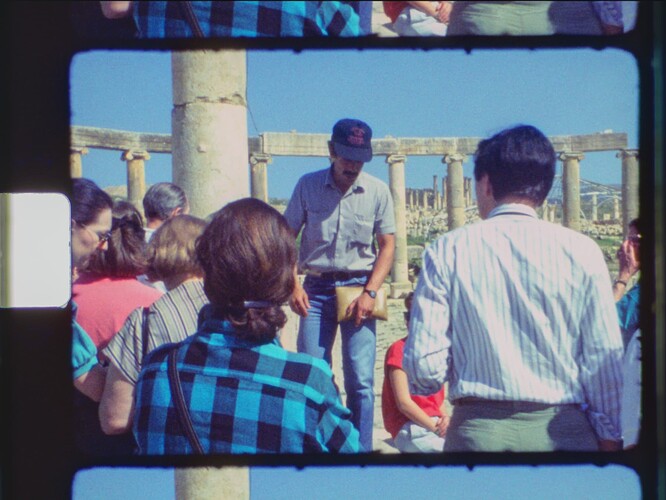

To illustrate, the pictures below were taken at the same LED intensity.

Near the surface:

With a 34 mm long, 42 mm diameter extension cylinder:

For me this was an interesting realization, yet something I should have known already: the distance from the film to the sphere surface slightly changes the nature (diffusion) of the illuminant.
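A simple geometric way to see the narrowing: from the center of the film, light can only arrive from directions inside the cone subtended by the sphere's output port. The minimal Python sketch below computes the half-angle of that cone. It assumes the 39 mm port is the limiting aperture at the far end of the 34 mm cylinder and ignores any light reflected by the cylinder walls (which would widen the real distribution); the function name is hypothetical.

```python
import math


def max_incidence_deg(aperture_diameter_mm: float, distance_mm: float) -> float:
    """Largest ray angle (from the axis) reaching the film center from a
    circular aperture at the given distance. Ignores wall reflections."""
    if distance_mm <= 0:
        return 90.0  # film at the port: light arrives from the whole hemisphere
    return math.degrees(math.atan2(aperture_diameter_mm / 2, distance_mm))


if __name__ == "__main__":
    for d in (0.0, 10.0, 34.0):
        print(f"film {d:4.0f} mm from a 39 mm port: "
              f"rays up to {max_incidence_deg(39.0, d):.1f} deg off-axis")
```

Under these assumptions the widest rays arrive at roughly 30 degrees from the axis with the 34 mm cylinder, versus up to 90 degrees with the film at the sphere surface, which is consistent with a slightly more directional illuminant.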

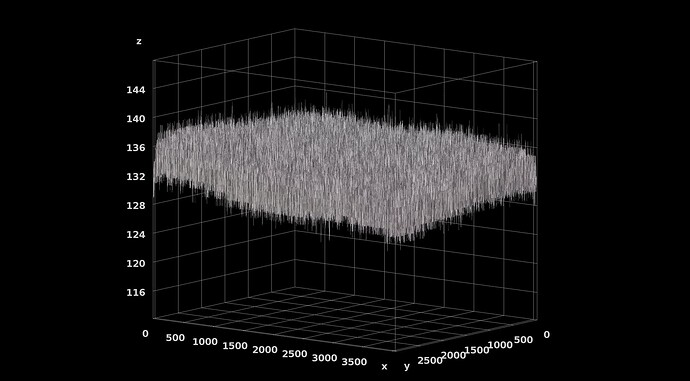

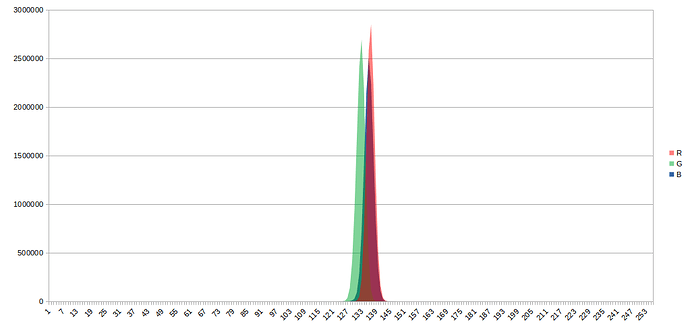

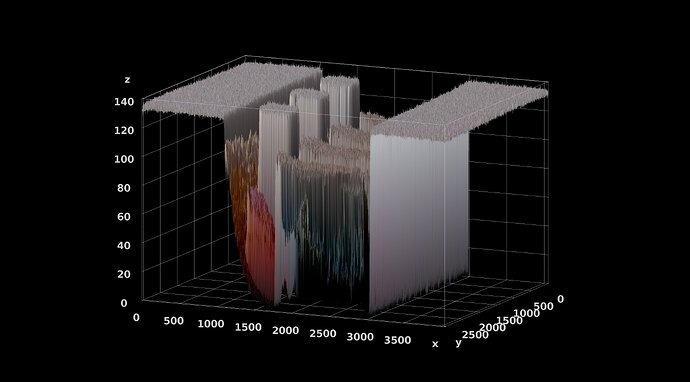

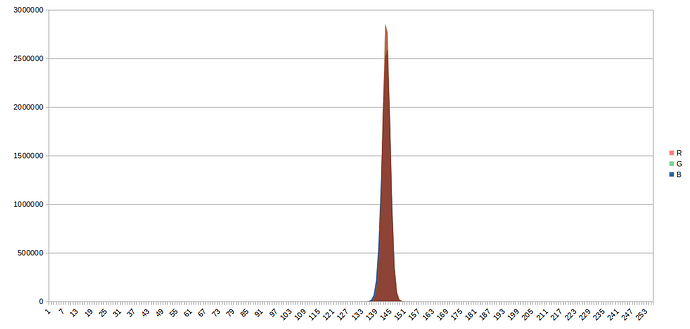

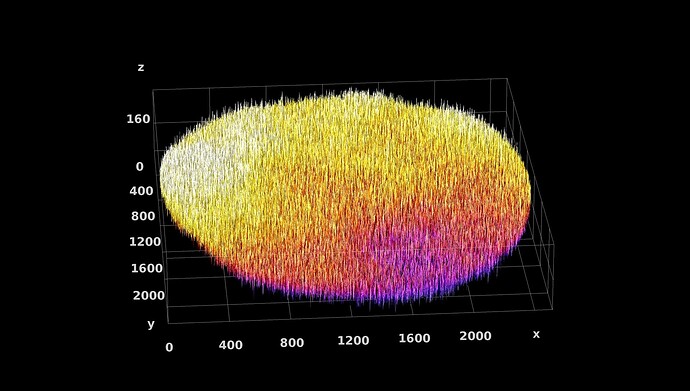

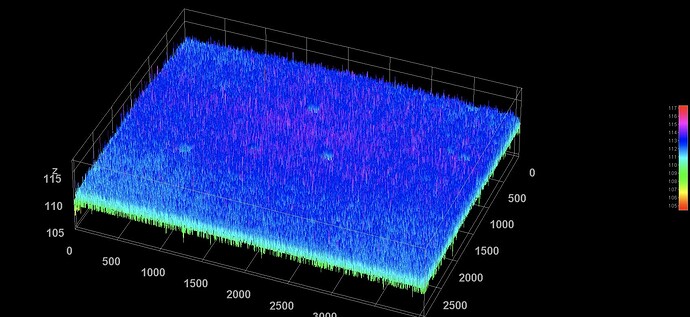

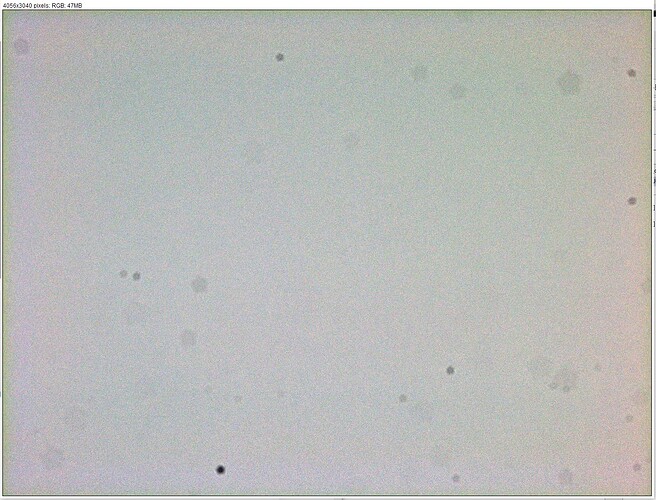

The following captures were taken with the same parameters, with and without the film. The surface plots were made from the PNG files in ImageJ with the Interactive 3D plugin.
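The surface plots give a visual impression of flatness; a couple of numbers can make before/after comparisons easier. The following is a minimal, dependency-free Python sketch, assuming the image has already been reduced to a 2-D grid of single-channel values (for example, one color channel exported from ImageJ). The metric names are my own choice, not an ImageJ feature.

```python
def uniformity(pixels: list[list[float]], margin: int = 0) -> dict[str, float]:
    """Simple flatness metrics for a 2-D grid of pixel values.

    margin: number of rows/columns to drop at each edge before measuring.
    """
    h = len(pixels)
    rows = [row[margin:len(row) - margin] for row in pixels[margin:h - margin]]
    values = [v for row in rows for v in row]
    if not values:
        raise ValueError("no pixels left after applying the margin")
    lo, hi = min(values), max(values)
    if hi <= 0:
        raise ValueError("image is completely dark")
    avg = sum(values) / len(values)
    return {
        "min": lo,
        "max": hi,
        "mean": avg,
        # peak-to-valley spread as a percentage of the mean
        "nonuniformity_pct": 100.0 * (hi - lo) / avg,
        # 1.0 means perfectly flat
        "min_max_ratio": lo / hi,
    }


if __name__ == "__main__":
    # Synthetic 5x5 "flat field" that is brightest in the center.
    demo = [[200 - 4 * ((x - 2) ** 2 + (y - 2) ** 2) for x in range(5)] for y in range(5)]
    print(uniformity(demo))
    print(uniformity(demo, margin=1))
```

The `margin` parameter can exclude the edges of the frame, where the port edge or lens vignetting would otherwise dominate the numbers.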

$ libcamera-still --awb=daylight --tuning-file='imx477_scientific.json' --saturation=1 --awbgains=1.65,1.86 --denoise=off --rawfull=1 --mode=4056:3040:12:P --encoding png --raw -n --shutter=1000 -o filename.png
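Since the same capture is repeated with small changes (with and without film, different gains), it may help to script it. A minimal Python sketch, assuming `libcamera-still` is on the PATH of the Pi; it uses only the options from the command above and passes them as a list, so no shell quoting is involved (typographic curly quotes around the tuning-file name would otherwise become part of the file name). The function names are hypothetical.

```python
import shlex
import subprocess


def still_command(output: str, awb_gains=(1.65, 1.86), shutter_us: int = 1000,
                  tuning_file: str = "imx477_scientific.json") -> list[str]:
    """Argument list for one libcamera-still capture with fixed, manual settings."""
    red, blue = awb_gains
    return [
        "libcamera-still",
        "--awb=daylight",
        f"--tuning-file={tuning_file}",
        "--saturation=1",
        f"--awbgains={red},{blue}",
        "--denoise=off",
        "--rawfull=1",
        "--mode=4056:3040:12:P",
        "--encoding", "png",
        "--raw",
        "-n",
        f"--shutter={shutter_us}",
        "-o", output,
    ]


def capture(cmd: list[str]) -> None:
    """Run the capture; raises CalledProcessError if libcamera-still fails."""
    subprocess.run(cmd, check=True)


if __name__ == "__main__":
    cmd = still_command("no_film.png")
    print(shlex.join(cmd))
    # capture(cmd)  # uncomment on the Pi to actually take the picture
```

Passing `awb_gains=(1.58, 1.86)` reproduces the adjusted gains from the PS at the end of the post.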

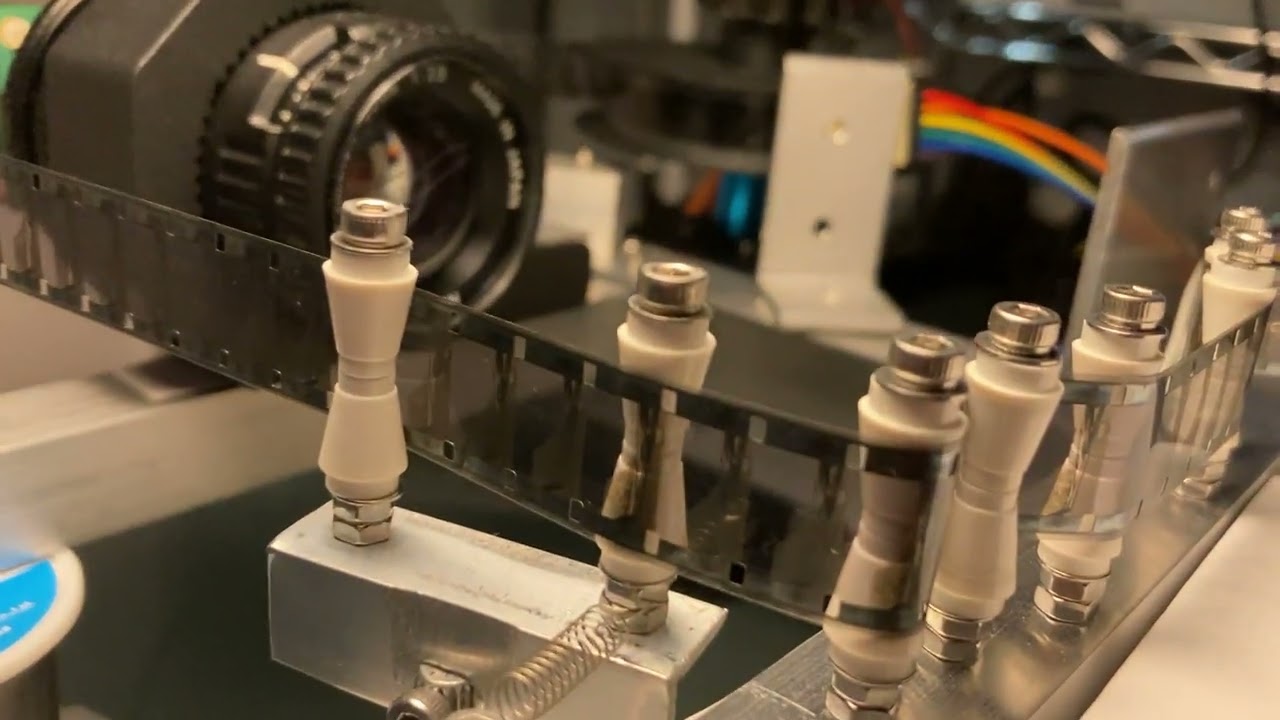

Setup

The test pictures were taken with a Raspberry Pi HQ sensor and a Nikkor EL 50mm f2.4 lens, stopped down to between f4 and f5.6. The IR filter of the HQ sensor was removed (it had spots), and the setup includes an IR/UV filter at the front of the lens. The extension tubes and helicoid from the base of the lens to the sensor mount total 54 mm.
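For reference, the magnification and working aperture follow from the sensor and frame sizes. A minimal Python sketch, assuming the IMX477's 1.55 µm pixel pitch at the 4056 x 3040 mode used above, a 16 mm camera aperture of about 10.26 x 7.49 mm, the goal of filling the sensor with one frame, and a lens with a pupil magnification of about 1 (so that the working f-number is N(1 + m)). None of these values were measured on this setup, and the names are hypothetical.

```python
SENSOR_PX = (4056, 3040)   # full-resolution mode used in the capture command
PIXEL_UM = 1.55            # assumed IMX477 pixel pitch in micrometres
FRAME_MM = (10.26, 7.49)   # assumed 16 mm camera aperture (width, height)


def fit_magnification(frame_mm=FRAME_MM, sensor_px=SENSOR_PX, pixel_um=PIXEL_UM) -> float:
    """Largest magnification that still fits the whole frame on the sensor."""
    sensor_mm = [n * pixel_um / 1000.0 for n in sensor_px]
    return min(s / f for s, f in zip(sensor_mm, frame_mm))


def working_f_number(n: float, m: float) -> float:
    """Effective f-number at magnification m, assuming pupil magnification ~1."""
    return n * (1.0 + m)


def exposure_ratio(n1: float, n2: float) -> float:
    """Relative exposure time needed when stopping down from n1 to n2 at the same m."""
    return (n2 / n1) ** 2


if __name__ == "__main__":
    m = fit_magnification()
    print(f"magnification ~ {m:.3f}")
    for n in (4.0, 5.6):
        print(f"f/{n}: working f-number ~ {working_f_number(n, m):.1f}")
    print(f"f/4 -> f/5.6 exposure ratio ~ {exposure_ratio(4.0, 5.6):.2f}")
```

Under these assumptions the magnification is about 0.61, so f4 behaves like roughly f6.5 at the sensor, and going from f4 to f5.6 roughly doubles the required exposure.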

Results

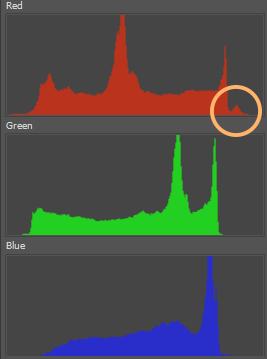

The high-contrast image shows a few spots; these were confirmed to be on the sensor (probably dust).

Both surface plots were made from the PNG files with the ImageJ plugin, without smoothing.

One important detail of the test setup and results: only two of the three LED boards were on.

This was done to test how well the sphere performs (and because I need to order some components to reconfigure the driver to handle all three).

Adding the third board will probably not improve the surface plots or histograms, but it should reduce the exposure time.
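As a rough estimate of the exposure saving: assuming light output scales linearly with the number of LEDs at the same drive current and the sphere efficiency stays the same, the shutter time scales with the ratio of LED counts (8 LEDs with two boards, 12 with three). A minimal sketch; the function name is hypothetical.

```python
def scaled_shutter_us(shutter_us: float, leds_before: int, leds_after: int) -> int:
    """Shutter time (microseconds) for the same exposure after changing the LED count.

    Assumes light output is proportional to the number of LEDs at the same current.
    """
    if leds_before <= 0 or leds_after <= 0:
        raise ValueError("LED counts must be positive")
    return round(shutter_us * leds_before / leds_after)


if __name__ == "__main__":
    # 2 boards (8 LEDs) -> 3 boards (12 LEDs), starting from --shutter=1000
    print(scaled_shutter_us(1000, 8, 12))
```

That would bring a 1000 µs shutter down to about 667 µs, or a 2 ms exposure to about 1.3 ms.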

Conclusions

- Better-than-expected sphere performance, considering the large output port relative to the sphere diameter (a 39 mm port in a 90 mm sphere).

- The distance from the film to the sphere subtly changes the nature of the illuminant.

- RPi HQ sensor: a 2 ms shutter gives a normal exposure with about 6 W of white 5000K LEDs at f4/f5.6.

Special Thanks

The flatness of this test would not have been possible without the extraordinary work by @cpixip on color processing with the new Raspberry Pi camera library and its scientific tuning file (imx477_scientific.json). Thank you for sharing this valuable insight with the community.

PS. Small adjustments to the gains (red AWB gain from 1.65 to 1.58) improved the histogram and the high-contrast image.

$ libcamera-still --awb=daylight --tuning-file='imx477_scientific.json' --saturation=1 --awbgains=1.58,1.86 --denoise=off --rawfull=1 --mode=4056:3040:12:P --encoding png --raw -n --shutter=1000 -o filename.png