This is an interesting discussion. I have a few questions/comments!

The current version of the picamera2 lib reports the following capture formats available for an attached HQ-camera:

```
{'format': SBGGR10_CSI2P,
 'unpacked': 'SBGGR10',
 'bit_depth': 10,
 'size': (1332, 990),
 'fps': 120.05,
 'crop_limits': (696, 528, 2664, 1980),
 'exposure_limits': (31, 667234896, None)},
{'format': SBGGR12_CSI2P,
 'unpacked': 'SBGGR12',
 'bit_depth': 12,
 'size': (2028, 1080),
 'fps': 50.03,
 'crop_limits': (0, 440, 4056, 2160),
 'exposure_limits': (60, 674181621, None)},
{'format': SBGGR12_CSI2P,
 'unpacked': 'SBGGR12',
 'bit_depth': 12,
 'size': (2028, 1520),
 'fps': 40.01,
 'crop_limits': (0, 0, 4056, 3040),
 'exposure_limits': (60, 674181621, None)},
{'format': SBGGR12_CSI2P,
 'unpacked': 'SBGGR12',
 'bit_depth': 12,
 'size': (4056, 3040),
 'fps': 10.0,
 'crop_limits': (0, 0, 4056, 3040),
 'exposure_limits': (114, 694422939, None)}
```

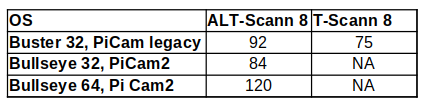

There is only one format (SBGGR10_CSI2P) that gives you frame rates of 120 fps. It delivers a bit depth of 10 bit at a maximum image size of 1332 x 990 pixels. Assuming that the fps values quoted in your table are calculated correctly, you are probably operating your camera in this mode. Note that you might not notice this immediately, as libcamera (which does the actual work under the hood in picamera2) may simply scale the image up to the resolution you request.

On the other hand, I am fairly sure that @Manuel_Angel is using the SBGGR12_CSI2P format instead, which maxes out at 40 fps but operates at a bit depth of 12 bit and a maximum image size of 2028 x 1520 px.

Just to round out the discussion of capture formats: the full-resolution SBGGR12_CSI2P mode delivers images with a bit depth of 12 bit and 4056 x 3040 px, but it is awfully slow; it maxes out at 10 fps.
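To make the fps/resolution trade-off concrete, here is a small sketch that picks, from the mode list above, the largest mode that still reaches a requested frame rate. The `SensorMode` tuple and the `largest_mode_for_fps` helper are hypothetical illustrations, not part of picamera2, and libcamera's own mode selection may weigh things differently.

```python
from typing import NamedTuple, Tuple


class SensorMode(NamedTuple):
    """One entry of the HQ-camera mode list quoted above (subset of fields)."""
    fmt: str
    bit_depth: int
    size: Tuple[int, int]
    fps: float


# Values copied from the picamera2 sensor-mode listing above.
HQ_MODES = [
    SensorMode("SBGGR10_CSI2P", 10, (1332, 990), 120.05),
    SensorMode("SBGGR12_CSI2P", 12, (2028, 1080), 50.03),
    SensorMode("SBGGR12_CSI2P", 12, (2028, 1520), 40.01),
    SensorMode("SBGGR12_CSI2P", 12, (4056, 3040), 10.0),
]


def largest_mode_for_fps(target_fps, modes=HQ_MODES):
    """Return the mode with the most pixels whose max fps is >= target_fps."""
    candidates = [m for m in modes if m.fps >= target_fps]
    if not candidates:
        raise ValueError(f"no sensor mode reaches {target_fps} fps")
    return max(candidates, key=lambda m: m.size[0] * m.size[1])


for fps in (120, 40, 10):
    m = largest_mode_for_fps(fps)
    print(f"{fps:>3} fps -> {m.fmt} {m.size[0]}x{m.size[1]} @ {m.bit_depth} bit")
# 120 fps -> SBGGR10_CSI2P 1332x990 @ 10 bit
#  40 fps -> SBGGR12_CSI2P 2028x1520 @ 12 bit
#  10 fps -> SBGGR12_CSI2P 4056x3040 @ 12 bit
```

Asking for 120 fps leaves only the 10-bit 1332 x 990 mode, which is exactly the situation described above.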

There is no way to obtain higher frame rates within the hardware constraints of the Raspberry Pi (the HQ camera is connected via the two CSI-2 lanes available between camera and main board).
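A back-of-the-envelope check is consistent with this: multiplying width x height x bit depth x fps for each mode gives roughly the same raw data rate, around 1.3 to 1.6 Gbit/s. The sketch below counts active pixels only and ignores blanking intervals and CSI-2 packet overhead, so the real link load is somewhat higher; it merely shows that the modes trade resolution and bit depth against frame rate within a similar budget.

```python
# (width, height, bit_depth, fps) for the four HQ-camera modes listed above
MODES = {
    "1332x990 / 10 bit": (1332, 990, 10, 120.05),
    "2028x1080 / 12 bit": (2028, 1080, 12, 50.03),
    "2028x1520 / 12 bit": (2028, 1520, 12, 40.01),
    "4056x3040 / 12 bit": (4056, 3040, 12, 10.0),
}


def active_pixel_rate_gbps(width, height, bit_depth, fps):
    """Raw active-pixel data rate in Gbit/s (no blanking, no CSI-2 overhead)."""
    return width * height * bit_depth * fps / 1e9


for name, params in MODES.items():
    print(f"{name:>20}: {active_pixel_rate_gbps(*params):.2f} Gbit/s")
# 1332x990 / 10 bit: 1.58, 2028x1080: 1.31, 2028x1520: 1.48, 4056x3040: 1.48
```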

Coming back to the topic: since the 40 fps SBGGR12_CSI2P mode (2028 x 1520 px) uses 2x2 binning at the sensor level, an image obtained in that mode is not as sharp as an image captured in the full-resolution SBGGR12_CSI2P mode (10 fps) and downscaled by libcamera to 2028 x 1520 px.

If you are operating your camera in the SBGGR10_CSI2P mode (120 fps), you are actually grabbing images of 1332 x 990 px, which libcamera enlarges to the requested 2028 x 1520 px. This image will be the least sharp of all three options. Also, your captures will be derived from 10-bit raw data instead of the 12 bits the sensor is capable of delivering.
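The resolution and bit-depth penalty of the 120 fps mode can be put into numbers with a few lines of arithmetic. This is only a rough sketch: `upscale_summary` is a hypothetical helper, and it ignores Bayer demosaicing and how the ISP's scaler actually interpolates. It simply compares pixel counts and the number of raw grey levels per sample.

```python
def upscale_summary(native, requested, bit_depth):
    """Compare a sensor mode's native size with the requested output size."""
    nw, nh = native
    rw, rh = requested
    return {
        "linear_scale_x": rw / nw,
        "linear_scale_y": rh / nh,
        # native pixels per output pixel; < 1 means the image was interpolated up
        "native_pixel_ratio": (nw * nh) / (rw * rh),
        # number of distinct raw values per sample at this bit depth
        "grey_levels": 2 ** bit_depth,
    }


requested = (2028, 1520)
for native, depth in [((1332, 990), 10), ((2028, 1520), 12)]:
    s = upscale_summary(native, requested, depth)
    print(native, f"scale {s['linear_scale_x']:.2f} x {s['linear_scale_y']:.2f},",
          f"native/output pixels {s['native_pixel_ratio']:.2f},",
          f"{s['grey_levels']} levels")
```

In the 120 fps case only about 43% of the output pixels are backed by a sensor pixel, and each raw sample has 1024 instead of 4096 levels.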

Another comment: picamera2 does JPEG encoding in software, whereas the original picamera library (the legacy stack) did it in hardware. That is the reason why picamera2 is slower than the original picamera. The speedup between the 32-bit and 64-bit OS is interesting; I would not have expected it to be so noticeable.

That is certainly an interesting observation. The SD card you linked to is quoted with a write speed of 45 MB/s; a portable Samsung SSD connected to one of the USB 3 ports of the RPi 4 (>300 MB/s) should be faster by a noticeable factor. I plan to do exactly such a comparison in the near future. Can you elaborate on your experience?
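For such an SD-vs-SSD comparison, a minimal sequential-write benchmark could look like the sketch below. It uses only the Python standard library; `measure_write_speed` is a hypothetical helper, the 256 MiB default is arbitrary, and a real capture workload (many separate JPEG files) may behave differently from one large sequential write.

```python
import os
import tempfile
import time


def measure_write_speed(directory, total_mb=256, chunk_mb=4):
    """Write total_mb MiB of random data to a temporary file in `directory`,
    fsync it, delete it, and return the write speed in MiB/s."""
    # Random data, so a compressing storage controller cannot cheat.
    chunk = os.urandom(chunk_mb * 1024 * 1024)
    n_chunks = max(1, total_mb // chunk_mb)
    fd, path = tempfile.mkstemp(dir=directory)
    try:
        start = time.perf_counter()
        with os.fdopen(fd, "wb") as f:
            for _ in range(n_chunks):
                f.write(chunk)
            f.flush()
            os.fsync(f.fileno())  # force the data out of the page cache to the device
        elapsed = time.perf_counter() - start
    finally:
        os.remove(path)
    return n_chunks * chunk_mb / elapsed
```

Calling it once with a directory on the SD card and once with a directory on the SSD mount point (for example `measure_write_speed("/path/to/ssd")`, path hypothetical) gives two directly comparable numbers.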