25 years ago, back in the days of Betamax, I attempted to archive my large collection of 8mm home movies by recording them ‘off the wall’ with my newly acquired (and hugely expensive) Sony SL-F1 system. This was relatively successful, mainly because the camera had a Trinicon imaging tube, which had long persistence by today’s standards and so virtually eliminated flicker. Most TVs in those days were no bigger than 21", and everything was in SD, so the result was quite acceptable. However, I knew that one day I would have to repeat the process, because I anticipated that the digital revolution was bound to reach home movie-making sooner or later.

When I retired in 2014 I thought ‘now is the time’, because the technology to handle every aspect of the job had arrived. By then the pressure was even greater, because I had also inherited a huge archive from my late father and my great-uncle, both of whom had spent their whole lives in the film production industry, with material going back as far as the late 1800s. Now I had not only 8mm to convert, but also 9.5mm, 16mm, and hand-perforated 35mm.

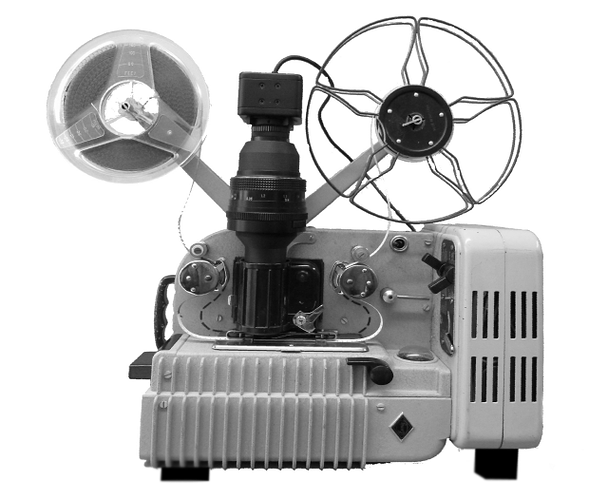

Last year I decided to start with the 8mm reels, and I had great success converting my vintage Eumig projector to do the job. This projector was beautifully engineered, very gentle on the film, and easy to modify using parts made on my 3D printer.

Originally I used a Microsoft LifeCam Studio as the sensor, but the one shown here is an MC500, which I have recently been experimenting with.

However, I did not have a projector for any of the other gauges, so I set about making my own, using an intermittent claw mechanism that I designed and have talked about elsewhere on this forum. I was concerned, however, that this might not be the most sensible way to handle the earliest films, the youngest of which dated from the 1950s and which stretched back through every decade to around 1895.

So I trawled the internet looking for information that would help me move forward. About six weeks ago I stumbled across the Kinograph and this forum, and I knew that this was where I would find the inspiration I needed, and a good place to hang out. I also realised that the machine was still a ‘work in progress’, with a few problems still to address. That was exciting, because it meant I could build a machine of my own and experiment with solving those problems. As a technologist, that is the kind of thing I have done all my working life in various fields, but now I could have fun doing it for myself!

That is what this post is about. I have been chatting privately to Matthew about the direction I am moving in, and he is keen that I share it in this forum and open it to discussion - so here goes…

Firstly, I will show you my proposed version of Kinograph so you can put the details that I am about to describe into context:

About 90% of this machine will be carbon-fibre plastic parts produced on my 3D printer. The chassis consists of six ‘tables’, each 200 mm (~8") square, pinned and bolted together for high rigidity and strength. I call them ‘tables’ because each has four legs, which provide clearance for the motors beneath and make the whole thing aesthetically pleasing. To give some idea of the scale of the drawing, the reels are 10" in diameter.

Here are the main attributes of the design -

- There are no sprockets on the rollers.

- The emulsion side of the film never touches anything.

- A ‘vacuum gate’ gently flattens the film against the ‘shiny side’ during the short period of frame capture.

- The complete transport mechanism is stabilised by a simple, low-cost, closed-loop hybrid (analogue/digital) control system.

- High immunity to missing/broken sprocket holes.

- Film tension is adjustable, and automatically maintained.

- Film speed is adjustable and automatically maintained.

- Longitudinal framing is adjustable without moving the camera, and is accurately maintained.

- Sprocket hole sensing is achieved by reflective laser.

- Provision for optional lateral frame stabilisation (later).

- Provision for gentle rewinding with constant tension.

- Auto shut-down if film breaks or end-of-reel.

- Easy set-up mode for pre-focusing etc.
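Two of the attributes above, immunity to missing sprocket holes and auto shut-down on a film break or end of reel, can share one simple idea: watch the gap since the last sprocket edge. The sketch below is a hypothetical illustration in Python, not the actual controller logic; the class name, the 100 ms period and the 3.5-period limit are all assumptions. A run of k missing holes produces a gap of (k + 1) periods, so a 3.5-period limit rides through up to two consecutive missing holes but trips once the sensor has been silent longer than that.

```python
from dataclasses import dataclass


@dataclass
class SprocketWatchdog:
    """Hypothetical watchdog: trips when the sprocket sensor goes quiet too long."""
    expected_period_ms: float
    max_gap_periods: float = 3.5  # k missing holes -> gap of (k + 1) periods; 3.5 tolerates 2
    last_edge_ms: float = 0.0
    tripped: bool = False

    def on_edge(self, t_ms: float) -> None:
        """Call on every sprocket-hole edge from the sensor."""
        self.last_edge_ms = t_ms

    def check(self, now_ms: float) -> bool:
        """Call periodically; returns True once motors should be shut down."""
        if now_ms - self.last_edge_ms > self.max_gap_periods * self.expected_period_ms:
            self.tripped = True  # latched: stays set until reset() is called
        return self.tripped

    def reset(self, now_ms: float) -> None:
        """Re-arm after the operator has rethreaded or loaded a new reel."""
        self.last_edge_ms = now_ms
        self.tripped = False


wd = SprocketWatchdog(expected_period_ms=100.0)
for t in (0.0, 100.0, 400.0):  # the 100 -> 400 ms gap is two missing holes
    wd.on_edge(t)
print(wd.check(700.0))  # 300 ms of silence: still running -> False
print(wd.check(800.0))  # 400 ms of silence: film broken or reel empty -> True
```

Latching the trip, rather than clearing it on the next edge, is a deliberate safety choice in this sketch: a flapping film end should not restart the motors.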

Before I introduce the control system, I need to briefly mention the sprocket-hole sensor that I am going to use. The existing micro-switch arrangement could possibly still be used, since the control loop would stabilise it, but I have ruled it out because I want the rollers to be free of sprockets.

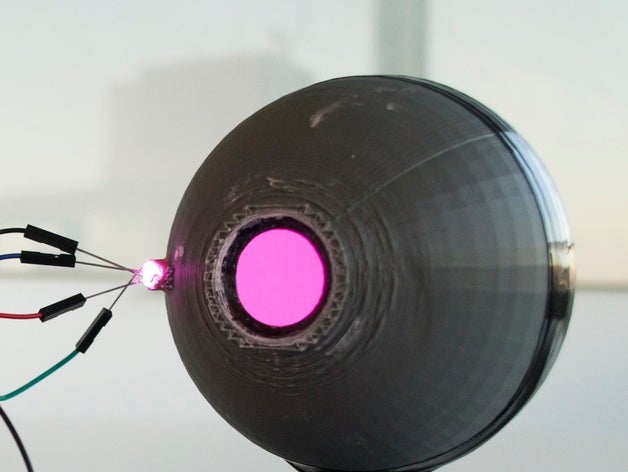

After a period of experimentation with a variety of designs and different types of transmitter and sensor, I finally settled on this one, which I built using a cheap laser diode and a photo-transistor in a reflective layout, as shown here:

Although it is described as ‘reflective’, this is not meant in the ‘mirror’ sense, which is what an LED would rely on. Unlike an LED, a laser emits ‘coherent’ light, which means that the photons are all in phase with each other. When the beam strikes a surface, the scattered light forms a constructive/destructive ‘interference pattern’ (known as laser speckle) that appears to us as a granular, twinkling effect. This happens whether or not the surface is transparent, which is just what we want. To ensure that the beam is narrower than the sprocket hole, it is passed through a small pinhole, and to prevent it from falsely illuminating the sensor, it is caught in a trap, as shown.

Now look at how the sensor is likely to perform -

This diagram is somewhat exaggerated, but it illustrates that the sensor output will be a stream of pulses whose average period exactly matches the spacing between the sprocket holes, while the edge of each individual pulse carries some timing uncertainty. If the camera were triggered directly from these edges, that uncertainty would show up as vertical jitter in the captured video.
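To make the point concrete, here is a minimal Python sketch that models each sensor edge as its ideal position plus a random timing error. The uniform ±2 ms error and the 100 ms hole period are hypothetical numbers, not measurements from this sensor. Averaged over many holes the period comes out almost exactly right, yet individual hole-to-hole intervals wander by several milliseconds, which is exactly the jitter a directly-triggered camera would inherit.

```python
import random


def simulate_sensor_edges(n_holes, period_ms, jitter_ms, seed=0):
    """Edge timestamps (ms): ideal hole positions plus a uniform random edge error."""
    rng = random.Random(seed)
    return [k * period_ms + rng.uniform(-jitter_ms, jitter_ms) for k in range(n_holes)]


def interval_stats(edges):
    """Mean hole-to-hole interval and the spread (max - min) of those intervals."""
    intervals = [b - a for a, b in zip(edges, edges[1:])]
    mean = sum(intervals) / len(intervals)
    spread = max(intervals) - min(intervals)
    return mean, spread


edges = simulate_sensor_edges(n_holes=1000, period_ms=100.0, jitter_ms=2.0)
mean, spread = interval_stats(edges)
print(f"mean interval {mean:.3f} ms, interval spread {spread:.3f} ms")
```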

To overcome this we need something special to clean up these pulses, so now for the interesting bit: the control system. It is based on a ubiquitous building block known as a Phase Locked Loop (PLL). There are billions of them in use around the world in one form or another, and most of us have many spread throughout the electronics in our homes, offices, and cars, not to mention our pockets (cellphones, MP3 players, etc.).

See this for a good primer on PLLs

There are many ways in which a PLL can be used, and here is a conceptual diagram of the configuration we would need to overcome our jitter problem.

In effect, it works like a flywheel, smoothing out the jitter, but unlike a flywheel it locks not only the input and output frequencies but their phases too. Imagine two identical cars travelling side by side on a road. They may be moving at exactly the same speed, but unless they are dead alongside each other, they are not in phase.
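The flywheel behaviour can be sketched in software. The following is a minimal digital PLL in Python, updated once per sensor edge; it is a swapped-in stand-in for the analogue loop in the diagram, and the loop-filter gains `kp` and `ki`, the 100 ms period, the ±2 ms jitter and the deliberate 1% starting frequency error are all hypothetical. The "oscillator" is just a predicted edge time advanced by a period estimate: the proportional term nudges the phase, and the integral term learns the true period, so both frequency and phase end up locked.

```python
import random


def pll_track(input_edges, nominal_period_ms, kp=0.1, ki=0.01):
    """Per-edge software PLL that re-times a jittery pulse train.

    Each input edge gives a phase error against the predicted edge; a PI loop
    filter turns it into a phase nudge (kp) and a period trim (ki).
    Returns the clean (predicted) edge times.
    """
    predicted = input_edges[0]  # start aligned with the first sensor edge
    period_trim = 0.0           # integral term: learned period correction
    clean_edges = []
    for t in input_edges:
        error = t - predicted   # > 0 means the sensor edge arrived late
        period_trim += ki * error
        clean_edges.append(predicted)
        predicted += nominal_period_ms + period_trim + kp * error
    return clean_edges


def interval_spread(edges):
    intervals = [b - a for a, b in zip(edges, edges[1:])]
    return max(intervals) - min(intervals)


rng = random.Random(1)
true_period = 100.0  # hypothetical sprocket-hole period in ms
noisy = [k * true_period + rng.uniform(-2.0, 2.0) for k in range(1000)]
clean = pll_track(noisy, nominal_period_ms=101.0)  # oscillator starts 1% off
settled = slice(300, None)  # skip the initial lock-in transient
print(f"input spread {interval_spread(noisy[settled]):.2f} ms, "
      f"output spread {interval_spread(clean[settled]):.2f} ms")
```

Smaller gains smooth harder but take longer to lock; these values were chosen only so the loop is comfortably stable and well damped in the sketch.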

This will almost certainly help a great deal to improve the trigger performance of the existing Kinograph, but it does nothing to stabilise the frame rate of the film, and without that it is difficult to control the tension, so I wanted more from my system.

It took a couple of days to think this through, but I finally came up with a somewhat unusual solution: use a DC motor for take-up, and turn the PLL on its head by removing the VCO (voltage-controlled oscillator) and replacing it with the motor/film/sensor combination. In effect, this combination responds to changes in motor voltage by changing the pulse rate from the sensor, just like a VCO. Now all we have to do is apply a clean pulse stream to the input of the PLL, and the sprocket holes in the film will be locked to it in both frame rate and position (phase). What is more, because we are using a permanent-magnet DC (PMDC) motor, which has an inverse torque/speed relationship, the loop conditions will not be upset as the diameter of the film on the spool changes. Naturally, the camera will be triggered by the clean pulse generator.
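As a rough illustration of the "inverted PLL", here is a minimal time-stepped Python sketch. It is not the Scilab/Xcos simulation mentioned in this post, and it makes several loud assumptions: the phase comparator is idealised as continuous (a real one only sees edges), the motor/spool is a first-order lag with no load torque or tension, there is no voltage limit, and the plant gain `g` is simply ramped from 2 to 4 holes/s per volt to mimic a filling spool (an exaggerated change, not a model of the PMDC torque/speed argument). The gains, time constant and 10 holes/s reference rate are hypothetical. The point is only that a PI loop around "motor + film + sensor" pulls the sprocket holes into phase with the clean reference and keeps them there.

```python
def simulate_locked_takeup(t_end=20.0, dt=1e-3, ref_rate=10.0, tau=0.05,
                           g_start=2.0, g_end=4.0, kp=4.0, ki=4.0):
    """Inverted PLL: the motor/film/sensor chain takes the place of the VCO.

    Units: film position in sprocket holes, time in seconds.
    g is the plant gain (holes/s per volt), ramped to mimic a filling spool.
    Returns a list of (time, film_position, phase_error) samples.
    """
    x = 0.0      # film position: sprocket holes that have passed the sensor
    v = 0.0      # film speed in holes per second (starts at rest)
    integ = 0.0  # integral of phase error (loop-filter state)
    log = []
    for i in range(int(round(t_end / dt))):
        t = i * dt
        ref_phase = ref_rate * t         # clean pulse generator, counted in holes
        error = ref_phase - x            # phase comparator: > 0 means film lags
        integ += error * dt
        volts = kp * error + ki * integ  # PI loop filter drives the motor
        g = g_start + (g_end - g_start) * t / t_end
        v += (g * volts - v) / tau * dt  # first-order motor/spool response
        x += v * dt                      # film advances past the sensor
        log.append((t, x, error))
    return log


log = simulate_locked_takeup()
worst_late = max(abs(err) for t, _, err in log if t >= 10.0)
print(f"worst phase error after 10 s: {worst_late:.3f} holes")
```

With these numbers the loop is stable as long as `kp > tau * ki`, and the integrator quietly absorbs the changing plant gain, leaving only a small residual phase error.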

A further improvement may be to add a conventional PLL between the sensor and the phase comparator, to reduce the jitter even further and improve immunity to damaged sprocket holes, but I won’t know whether it is necessary until I have built the system for real. However, it may be vital if an electronic ‘framing’ control is introduced, since that is where the ‘phase’ can be conveniently adjusted.
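Electronic framing amounts to adding an offset inside the phase comparator. The hypothetical Python helper below (names and units are assumptions for illustration) measures the phase difference between a reference edge and a sprocket edge in fractions of one sprocket pitch, wrapped to [-0.5, 0.5) so that only the position within a pitch matters. Because the loop drives this error to zero, adding `framing_offset` moves the point where it settles, shifting the frame relative to the trigger without moving the camera. Note that this only adjusts within one pitch.

```python
def phase_error(ref_edge_ms, sensor_edge_ms, period_ms, framing_offset=0.0):
    """Phase difference between a reference edge and a sprocket edge.

    Result is in fractions of one sprocket pitch, wrapped to [-0.5, 0.5).
    Positive means the sprocket edge arrived ahead of the reference edge.
    framing_offset (also in pitches) shifts the point the loop settles on.
    """
    raw = (ref_edge_ms - sensor_edge_ms) / period_ms + framing_offset
    return (raw + 0.5) % 1.0 - 0.5


print(phase_error(100.0, 90.0, 100.0))                         # sprocket edge 10 ms early
print(phase_error(100.0, 10.0, 100.0))                         # wraps to the nearer edge
print(phase_error(100.0, 100.0, 100.0, framing_offset=0.25))   # framing shifted a quarter pitch
```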

So far, all of this is theoretical, although a large part of it has been run through a simulator (Scilab + Xcos) on my PC. Everything seems to be OK, so I will carry on with it.

Other aspects worth mentioning:

- The supply reel will use a motor identical to the take-up motor, to provide constant tension as the film diameter changes, but it will be driven differently because it is essentially working ‘backwards’.

- I have incorporated a gauge to monitor tension, and a screw to calibrate it.

- The film gate is a departure from the norm in that there is no pressure plate. The film is held flat against the frame aperture by vacuum for the brief period of frame capture, but is free to float at other times. The vacuum is provided by two PC case fans and switched by a shutter working on a louvre principle. The precise details of this are still floating in my mind, but I have also toyed with the idea of placing the shutters closer to the film, where they could also shutter the lamp. I may even dispense with the shuttering altogether and leave the vacuum on permanently; I’ll see how it goes.

- Film rewinding will be simple because the two motors are identical and their roles can easily be swapped. Slow rewinding can be done using the normal film path, but fast rewinding, with tension control, can be performed using the back rollers.

- The knobs and switches I show will only appear once I am happy with the system performing its major tasks. They will probably interface to an Arduino, which will also handle safety features and idiot-proof interlocks.
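Why the supply reel needs a varying drive for constant tension comes down to simple arithmetic: reel torque must equal tension times the current radius, and the radius grows as film is wound on. The Python sketch below works this through under stated assumptions: the wound film is treated as a solid annulus (length × thickness = π(r² − r_core²)), and the reel is assumed to be current-driven, since a PMDC motor's torque is proportional to current (torque = Kt × I). That current-control scheme is a common approach swapped in for illustration, not necessarily how this machine's supply motor will be driven, and every number (core radius, film thickness, tension, Kt) is hypothetical. Friction and reel inertia are ignored.

```python
import math


def reel_radius_m(core_radius_m, film_length_m, film_thickness_m):
    """Outer radius after winding a given length of film onto a core.

    The wound film fills an annulus: length * thickness = pi * (r**2 - core**2).
    """
    return math.sqrt(core_radius_m ** 2 + film_length_m * film_thickness_m / math.pi)


def motor_current_for_tension(tension_n, radius_m, torque_constant_nm_per_a):
    """Current needed for a constant film tension: torque = F * r = Kt * I."""
    return tension_n * radius_m / torque_constant_nm_per_a


# Hypothetical values, for illustration only.
CORE_RADIUS_M = 0.025
FILM_THICKNESS_M = 0.00014
TENSION_N = 0.5
KT_NM_PER_A = 0.05

for length_m in (0.0, 40.0, 80.0, 120.0):
    r = reel_radius_m(CORE_RADIUS_M, length_m, FILM_THICKNESS_M)
    i = motor_current_for_tension(TENSION_N, r, KT_NM_PER_A)
    print(f"{length_m:5.0f} m wound: radius {r * 1000:5.1f} mm, current {i:.3f} A")
```

With these numbers the radius, and therefore the required current, roughly triples between an empty core and 120 m of film.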

And what is all this likely to cost?

Ignoring the cost of the motors, the complete electronics system is likely to cost less than $20. The motors may be another $40 or thereabouts, but I’m still working out the optimum model to use.

Overall, without the camera, I guess the machine could be built for less than $100, and this is using components at 1-off prices.

Well, that’s enough for now, maybe too much? Anyway, there are still many details that I have left out for the sake of brevity, but soon I will be putting them down in a PDF, as much for my benefit as for anyone else’s.

Any comments or suggestions?

To be continued…

Jeff