@Manuel_Angel - ok, things are getting clearer…

When you do in the Mertens path the following

img = cv2.normalize (img, None, 0., 1., NORM_MINMAX)

img = np.clip (img * 255, 0, 255) .astype (‘uint8’)

you basically map the pixel with the lowest value onto the 8-bit value 0x00, and the pixel with the highest value to the 8-bit value 0xff. That is ok, but it is sub-optimal.

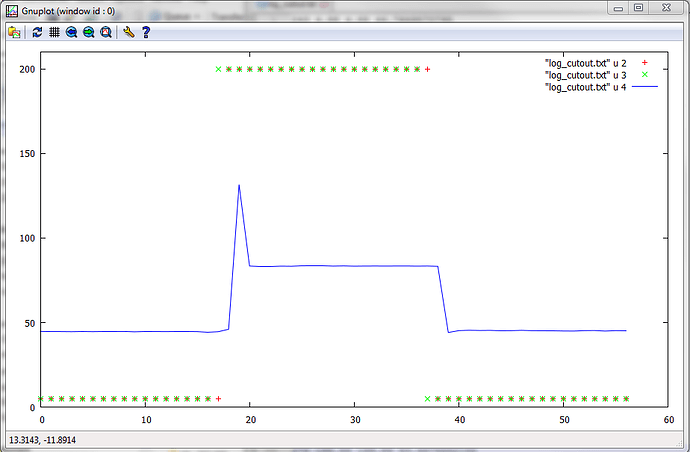

The reason is that sometimes there are only a handful or even less pixels with these extreme values - you fare usually much better if you use a code similar to

minimum = np.percentile(img, 1)

maximum = np.percentile(img, 99.0)

scaler = 1.0/(maximum-minimum+1e-6)

img = scaler*(img-minimum)

img = numpy.clip(img * 255, 0, 255).astype(‘numpy.uint8’)

This code disregards the darkest and brightest 1% of the pixel in the frame and gives you usually better output results. You might want to play with the percentages - even 10% (that would be 10/90 instead of 1/99 in the above code) might be ok. Just do some experiments.

Now, you are treating the Devebec totally different, using your old

img = np.clip (img * 255, 0, 255) .astype (‘uint8’)`

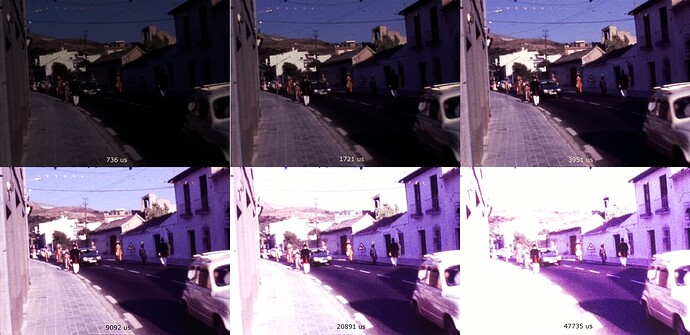

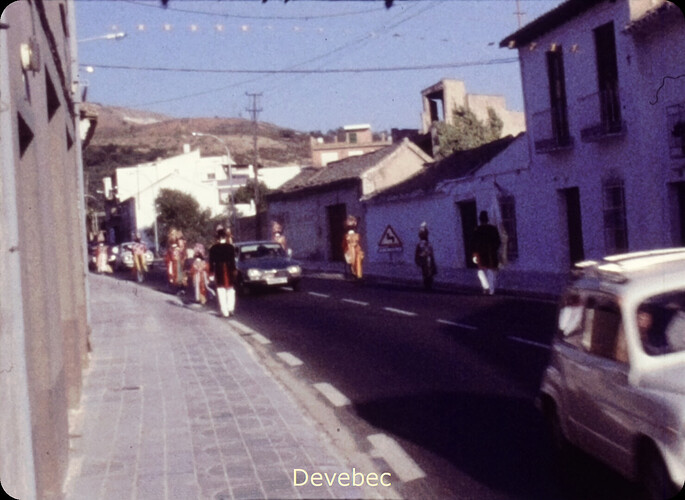

processing step (after tone-mapping). In case the tone-mapping does not map into the [0.0:1.0]-range, you will cut off highlights and dark shadows. I see actually indications in your result that this is happening. Compare the texture of the bright house left-center in your two results. It is certainly better defined in the Mertens result than in the Devebec one.

Furthermore, through the Reinhard tone-mapping, you are increasing the gamma of the image (ReinhardGamma = 1.2), which leads to more contrast in the Devebec case - try to compare with a run where ReinhardGamma = 1.0 and make sure that you are not clipping the result of the tone-mapping.

Finally, setting ReinhardColor = 1 asks the tone-mapping algorithm to independently process the three different color channels, leading to a sort of auto whitebalance, reducing in turn the magenta cast you have in your input images. That’s the reason why the Mertens result shows much more magenta. What happens if you set ReinhardColor = 0?

On closer inspection of the input frames, it seems that all do have a magenta cast. This indicates that your red and blue gains are too high for this movie.