Hi all,

I would like to present to the forum the software I use to scan my Super8 films.

The software is based on the GitHub project jphfilm/rpi-film-capture ("A project to capture 8mm and 16mm films using a raspberry pi & camera, and a modified movie projector"), although it has been much improved and adapted to my tastes and needs.

At first I wrote it for my personal use, in Spanish, my native language, but then I thought it could be useful to other people interested in film digitization. For this reason I have prepared an English version.

The software, which I have called DSuper8 (Digitize Super8), is especially suited to a projector modified with a stepper motor, used together with a Raspberry Pi and a RaspiCam camera. It works with both the old V1 camera and the new HQ camera.

Overall, the system is based on the client-server model.

As a server it uses a Raspberry Pi with a RaspiCam, which performs the following functions:

- Handles the user's commands sent from the GUI of the client program.

- Controls the lighting.

- Controls the movement of the film.

- Captures the digitized images and sends them to the client program.

- Sends information about the process.

- Writes a log to the system console.
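To illustrate how a server like this can send captured images to the client over a network connection, here is a minimal sketch of length-prefixed message framing over TCP. The wire format (a 4-byte big-endian length followed by the image bytes) and the function names `send_message` / `recv_message` are hypothetical; this is not DSuper8's actual protocol, just one common way to delimit whole images on a byte stream.

```python
import socket
import struct

# Hypothetical wire format (an assumption, not DSuper8's actual protocol):
# each message is a 4-byte big-endian length followed by the payload bytes.
HEADER = struct.Struct(">I")


def send_message(sock: socket.socket, payload: bytes) -> None:
    """Send one length-prefixed message (e.g. a JPEG-encoded frame)."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, since recv() may return fewer than requested."""
    chunks = []
    remaining = n
    while remaining > 0:
        chunk = sock.recv(remaining)
        if not chunk:
            raise ConnectionError("socket closed before message was complete")
        chunks.append(chunk)
        remaining -= len(chunk)
    return b"".join(chunks)


def recv_message(sock: socket.socket) -> bytes:
    """Receive one length-prefixed message."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return _recv_exact(sock, length)


if __name__ == "__main__":
    # Local demo: a connected socket pair stands in for the Pi (server) and PC (client).
    server_side, client_side = socket.socketpair()
    fake_frame = b"\xff\xd8" + b"\x00" * 1000 + b"\xff\xd9"  # placeholder bytes, not a real JPEG
    send_message(server_side, fake_frame)
    received = recv_message(client_side)
    print(len(received))  # 1004
    server_side.close()
    client_side.close()
```

The length prefix lets the client know exactly where one image ends and the next begins, which plain TCP does not provide on its own.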

As a client, it uses a PC that performs the following functions:

- Runs the system GUI.

- Receives the images captured by the server.

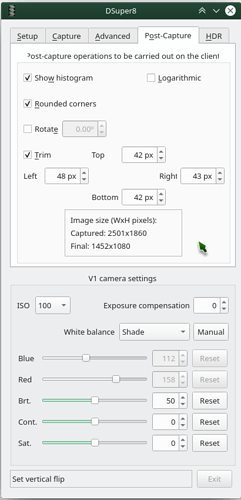

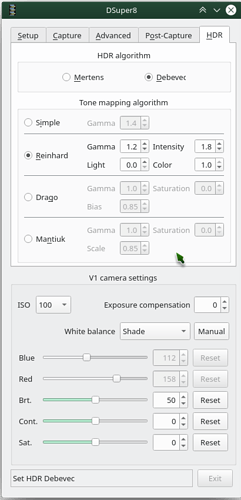

- Performs post-processing operations: calculation and display of the histogram, corner rounding, image rotation, image edge trimming, image fusion …

- Saves the resulting final image to a file.

- Updates the GUI with the information received from the server. In particular, it continuously keeps track of the film's current frame position, so the user can move the film forward or backward to any desired frame at any time.

- Writes a log to the system console.
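Two of the client's post-processing steps, histogram calculation and edge trimming, can be sketched with NumPy as below. This is an illustrative assumption, not DSuper8's code: the function names are hypothetical, the image is assumed to be an 8-bit grayscale array, and the trim margins are arbitrary example values.

```python
import numpy as np


def luminance_histogram(gray: np.ndarray) -> np.ndarray:
    """Count how many pixels have each 8-bit value (0..255)."""
    if gray.dtype != np.uint8:
        raise ValueError("expected an 8-bit grayscale image")
    # bincount over the flattened image; minlength guarantees 256 bins.
    return np.bincount(gray.ravel(), minlength=256)


def trim_edges(img: np.ndarray, top: int, bottom: int, left: int, right: int) -> np.ndarray:
    """Crop a fixed number of pixels from each side of the image."""
    h, w = img.shape[:2]
    return img[top:h - bottom, left:w - right]


if __name__ == "__main__":
    # Tiny synthetic "frame": a 4x6 black image with a bright 2x2 patch.
    frame = np.zeros((4, 6), dtype=np.uint8)
    frame[1:3, 2:4] = 200
    hist = luminance_histogram(frame)
    print(hist[0], hist[200])  # 20 4
    print(trim_edges(frame, 1, 1, 2, 2).shape)  # (2, 2)
```

A real pipeline would typically work on colour images loaded from the received JPEG data; rotation could be done similarly with `np.rot90`.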

Note that the system does not use any kind of sensor to detect the correct position of the frame to be digitized. In my view, the projector already performs this function mechanically. Once the first frame is positioned, it is simply a matter of rotating the projector's main shaft in a controlled way. In my case, one complete revolution of the shaft advances the film by exactly one frame. This approach greatly simplifies both the projector modification and the software development.
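Because one shaft revolution equals one frame, the frame position can be tracked purely by counting motor steps. The sketch below shows the arithmetic under assumed motor parameters: the 200 steps per revolution, 8x microstepping and 1:1 gear ratio are hypothetical example values, and the `FrameTracker` class is illustrative, not part of DSuper8.

```python
from dataclasses import dataclass


@dataclass
class FrameTracker:
    """Track the film position by counting stepper-motor steps.

    Assumes, as described above, that one full revolution of the projector's
    main shaft advances the film by exactly one frame. The motor figures
    below are hypothetical examples, not DSuper8 settings.
    """
    steps_per_rev: int = 200   # typical 1.8-degree stepper (assumption)
    microsteps: int = 8        # driver microstepping factor (assumption)
    gear_ratio: int = 1        # motor revolutions per shaft revolution (assumption)
    frame: int = 0             # current frame number

    @property
    def steps_per_frame(self) -> int:
        return self.steps_per_rev * self.microsteps * self.gear_ratio

    def move(self, frames: int) -> int:
        """Advance (positive) or rewind (negative) by a number of frames.

        Updates the tracked frame and returns the signed number of
        step pulses the motor driver must receive.
        """
        self.frame += frames
        return frames * self.steps_per_frame

    def go_to(self, target: int) -> int:
        """Move to an absolute frame number; returns the signed step count."""
        return self.move(target - self.frame)


if __name__ == "__main__":
    t = FrameTracker()
    print(t.steps_per_frame)    # 1600
    print(t.move(10), t.frame)  # 16000 10
    print(t.go_to(3), t.frame)  # -11200 3
```

The sign of the returned step count would select the motor direction; as long as no steps are lost, the frame counter stays in sync with the film without any sensor.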

With this procedure I have successfully digitized many meters of film. The frames are captured one after another with complete accuracy.

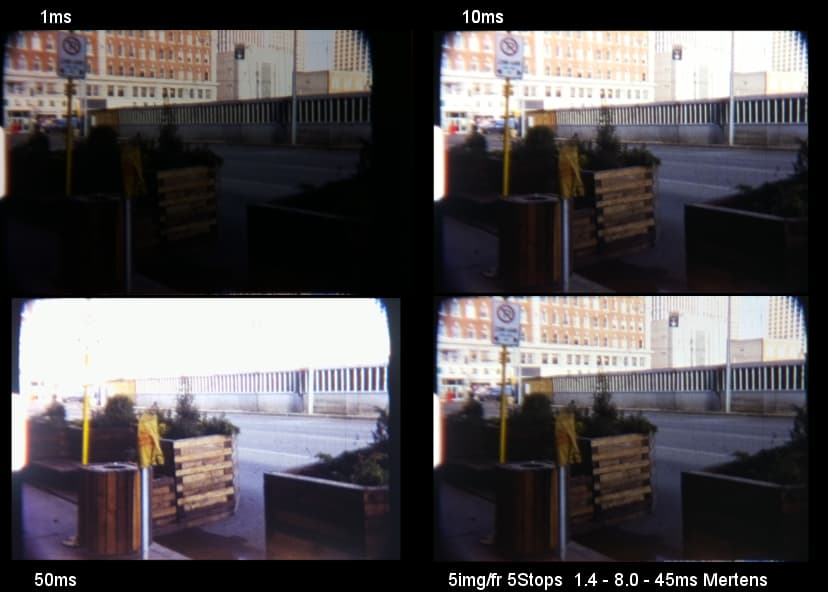

I am attaching some images and screenshots.

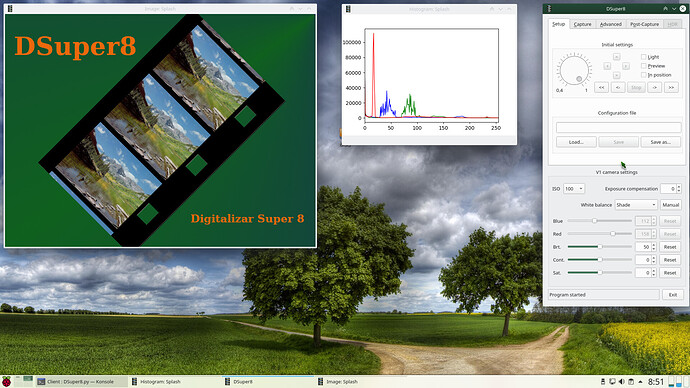

Initial appearance of the GUI after startup.

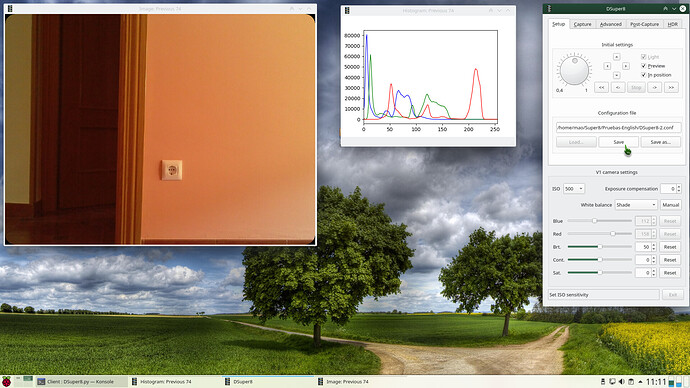

The system previewing images.

These are not film frames but images captured by the V1 camera of a simulator I put together to test the English version. We are spending a few days at our house in a small, quiet town, and I do not have the real device here.

Server simulator (Raspberry Pi 2 + RaspiCam V1)

Client simulator (Raspberry Pi 4)

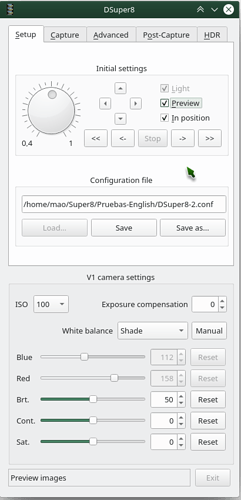

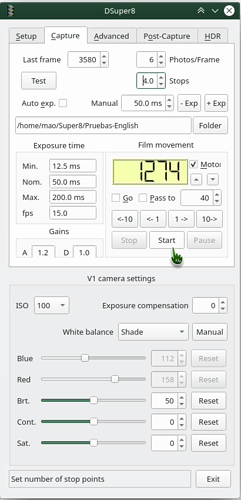

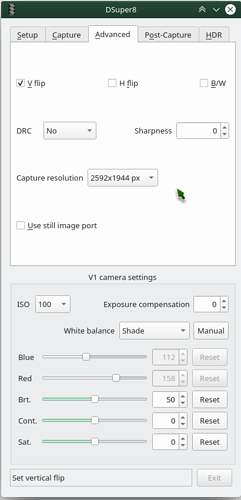

Screenshots of the five GUI tabs.

Instructions.pdf (32.5 KB)

DSuper8-Client.zip (785.6 KB)

DSuper8-Server.zip (14.0 KB)