… and here you can download my raw for your experiments…

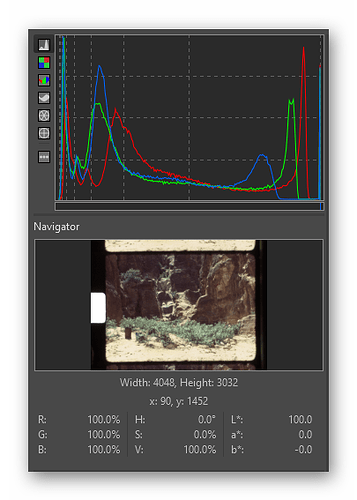

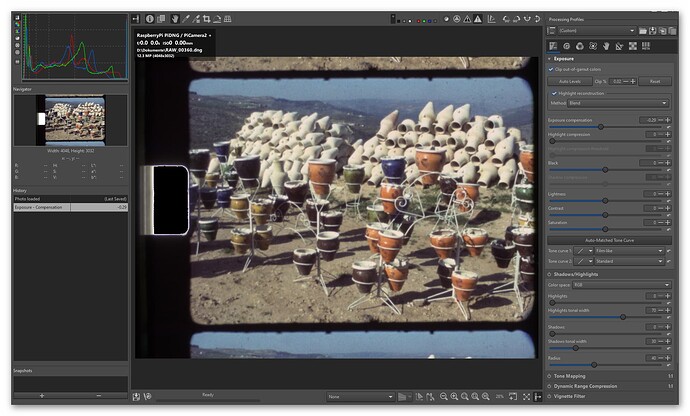

In RawTherapee, this raw image opens like so:

Note the shape of the histogram - the red channel extends all the way to the right side of the histogram. That is: it’s clipping.

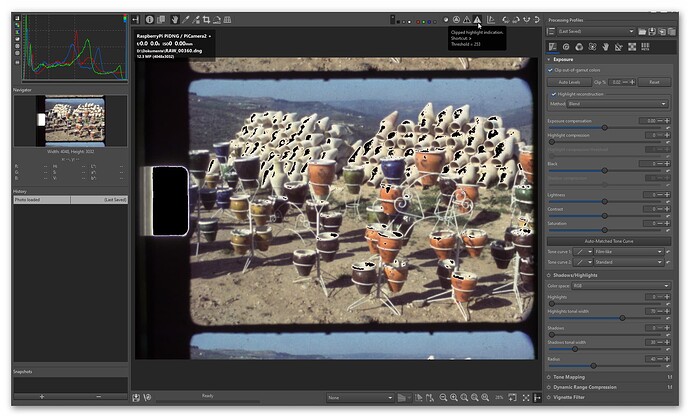

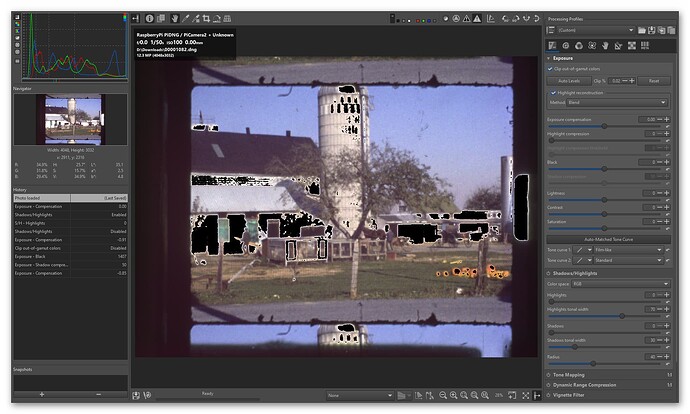

RawTherapee can help you with that issue. Click the triangle with the exclamation mark in the upper right of the window, and all clipped areas will be marked:

Now turn the exposure down until none of the black markings remain inside the frame area. You should end up with something like this:

Two things to note: first, the only area still marked as “clipped” is the sprocket area, and we don’t mind this. Second, the red channel in the histogram runs out before it hits the right border. So we have found the correct exposure setting for this image.

Please note that this procedure is basically identical to the procedure described above for DaVinci - the only difference is that there I used the “Parade” viewer instead of the histogram.
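For anyone who prefers numbers over a visual check, here is a minimal sketch of what the clipping indicator boils down to: counting, per channel, how many samples sit at the white level. The function names and the sample values are made up for illustration; only the white level of 65535 is taken from the file's metadata. In practice you would feed in only the pixels of the frame area and leave out the sprocket hole.

```python
def clipped_fraction(samples, white_level=65535, margin=0):
    """Return the fraction of samples at or above (white_level - margin)."""
    samples = list(samples)
    if not samples:
        return 0.0
    threshold = white_level - margin
    clipped = sum(1 for s in samples if s >= threshold)
    return clipped / len(samples)


def clipping_report(channels, white_level=65535):
    """Map each channel name to its clipped fraction."""
    return {
        name: clipped_fraction(values, white_level)
        for name, values in channels.items()
    }


# Made-up sample values, for illustration only (not read from the actual raw).
frame = {
    "red": [12000, 65535, 65535, 40000],
    "green": [9000, 30000, 52000, 20000],
    "blue": [7000, 21000, 38000, 15000],
}

report = clipping_report(frame)
print(report)
```

A channel with a non-zero fraction inside the frame area corresponds to a histogram that runs into the right border.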

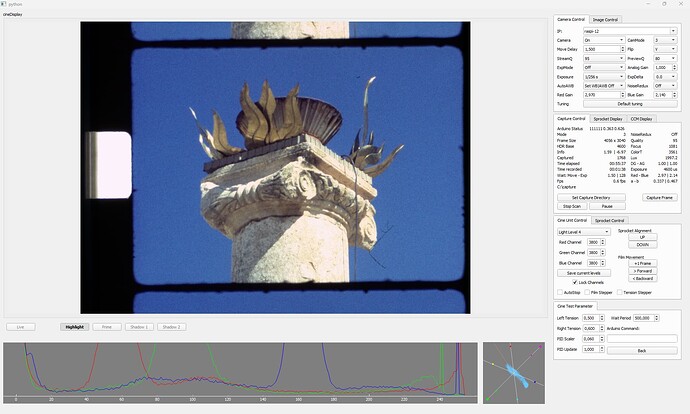

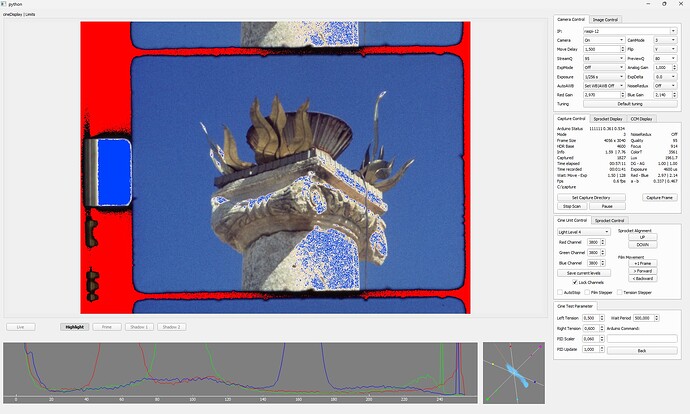

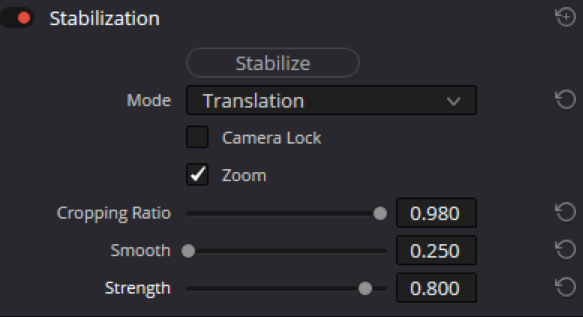

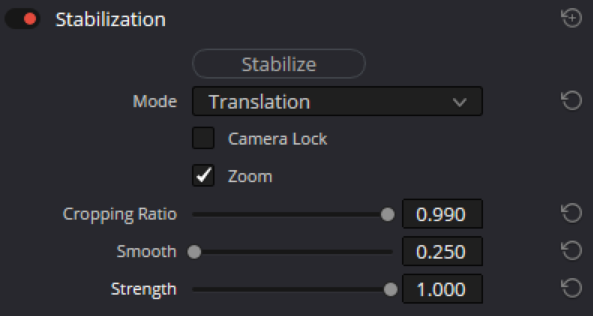

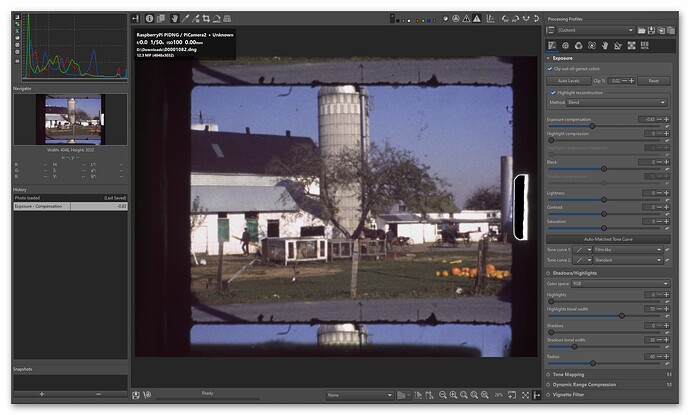

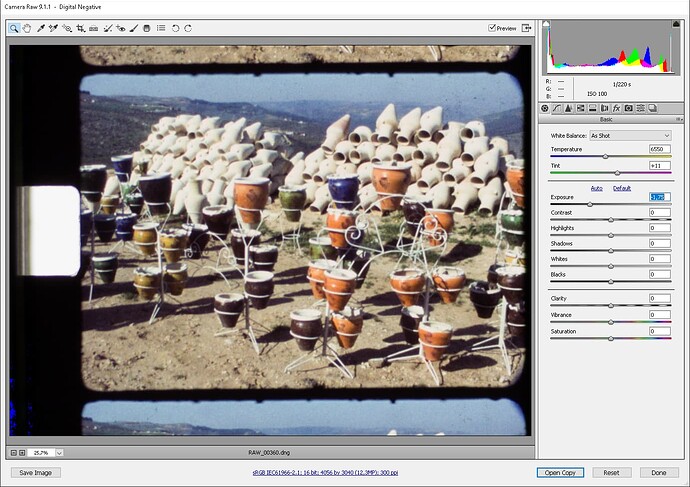

Now let’s see how this procedure would work with your raw. Here’s the initial setting, already with the clipped highlight indicator active:

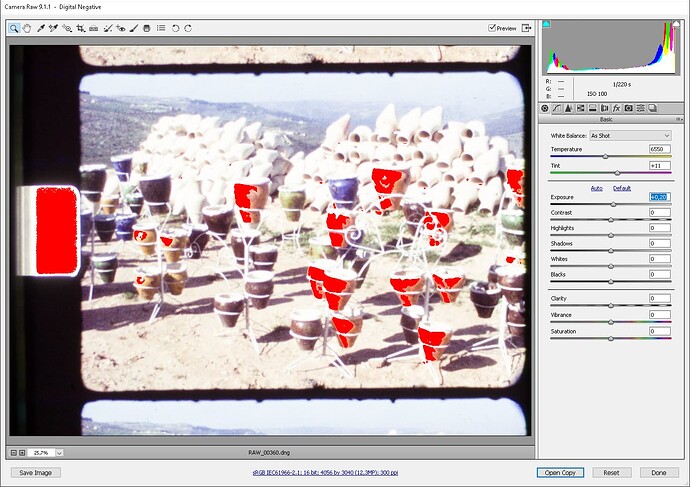

And this is how it should look:

Only the sprocket area clips here. Good!

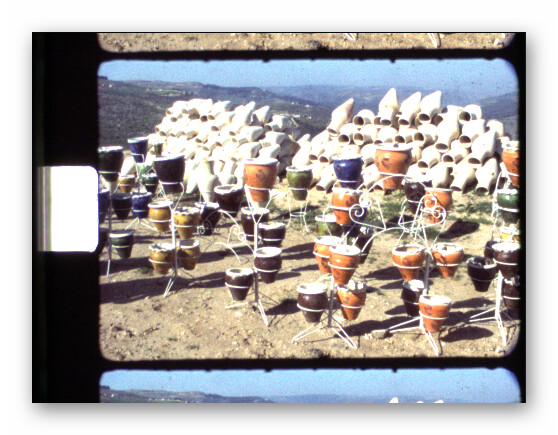

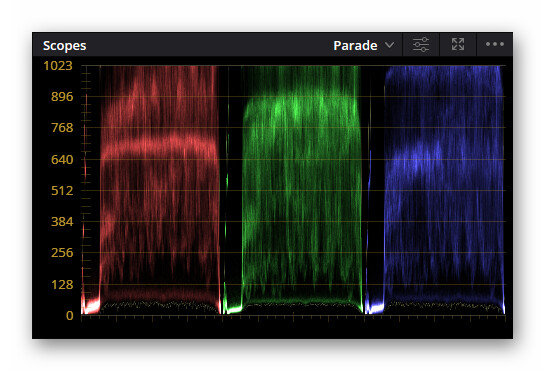

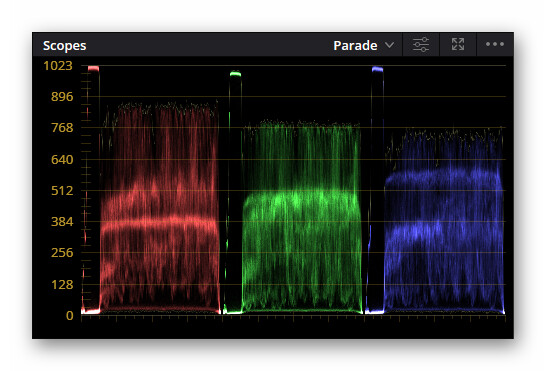

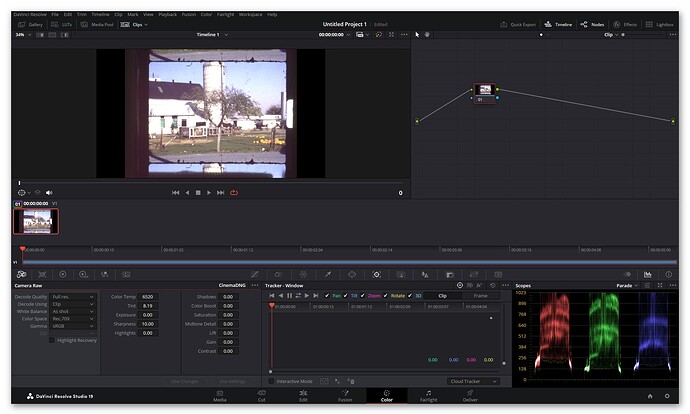

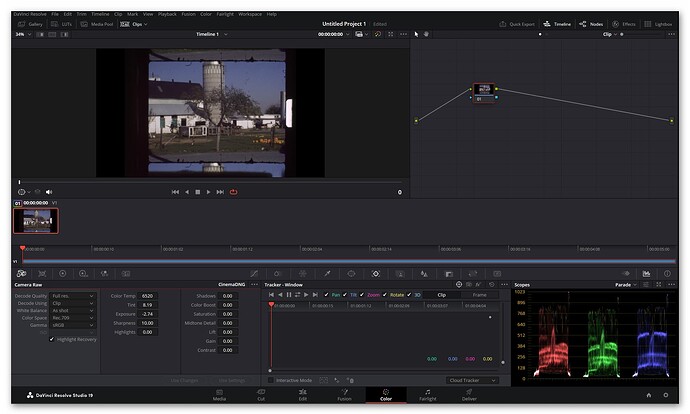

While we’re at it, here is the same in DaVinci. The original image as loaded is way too bright, and the “Scopes → Parade” viewer shows clipping.

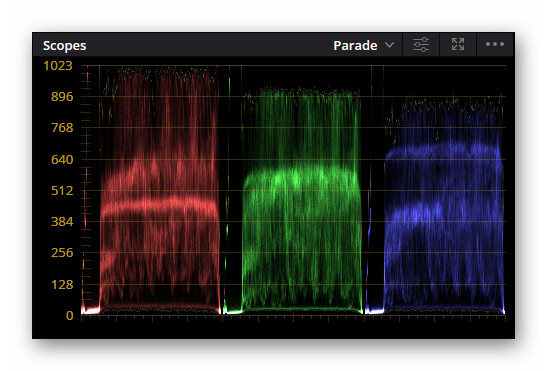

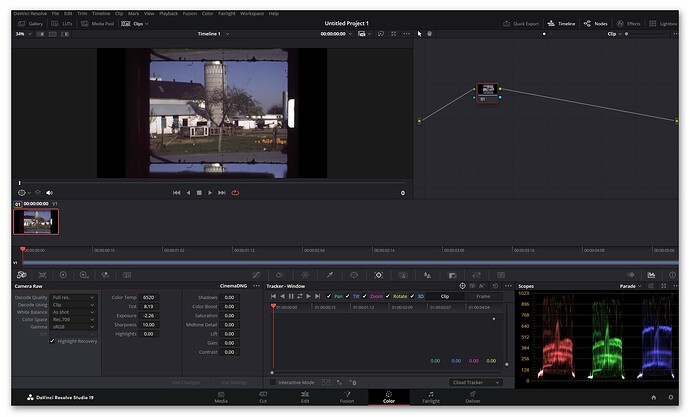

Adjusting so that all data is displayed, including the sprocket area:

Rather dark, as we set Exposure = -2.74. If you look closely, you might notice that your red channel is somewhat brighter than the blue and green channels in the empty sprocket area, but the color amplitudes of the actual frame data have about equal height - that is probably what one would aim for.

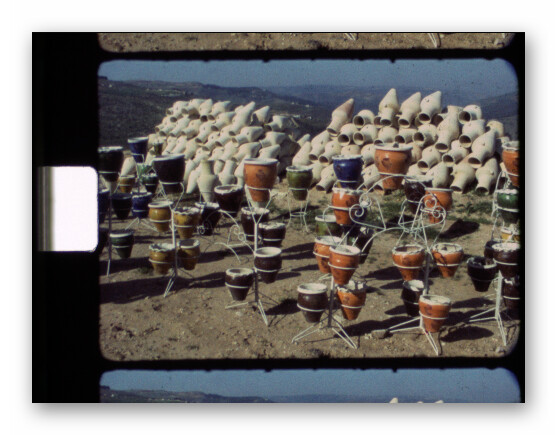

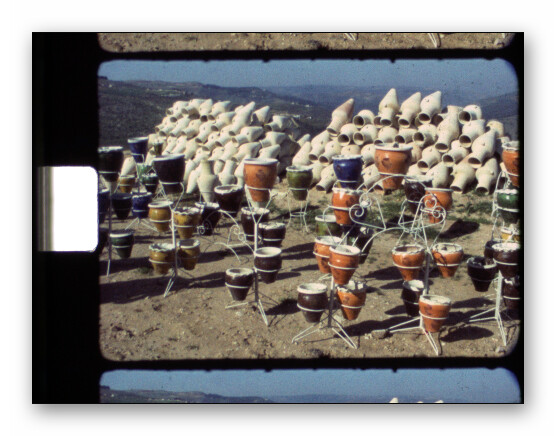

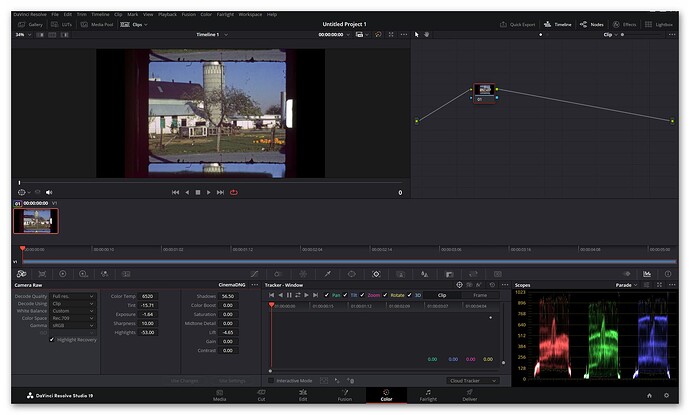

Anyway. We do not care about the sprocket area, only about the frame data. So up with the exposure again! Setting Exposure = -2.26, we arrive at this:

Note how the values of the frame data just touch the upper border of the Parade viewer. Usually, I am done at this stage of processing. I only make sure that the chosen exposure works for all other frames in my capture. If your “exposure setting frame” was chosen carefully, that should be the case.
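To get a feeling for what the two exposure values mean, here is a small sketch that converts them into linear gain factors. It assumes that the Exposure control is expressed in photographic stops, so that each stop doubles or halves the linear signal; the function name `stops_to_gain` is just for illustration.

```python
def stops_to_gain(stops: float) -> float:
    """Convert an exposure offset in stops into a linear gain factor."""
    return 2.0 ** stops


sprocket_safe = -2.74  # setting at which even the sprocket area did not clip
frame_only = -2.26     # setting at which only the frame data is kept in range

print("gain at -2.74:", round(stops_to_gain(sprocket_safe), 3))
print("gain at -2.26:", round(stops_to_gain(frame_only), 3))

# Relative brightening when going from the first setting to the second.
relative_gain = stops_to_gain(frame_only - sprocket_safe)
print("relative gain:", round(relative_gain, 3))
```

Under that assumption, the step from -2.74 to -2.26 is 0.48 stops, which makes the linear data roughly 1.4 times brighter.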

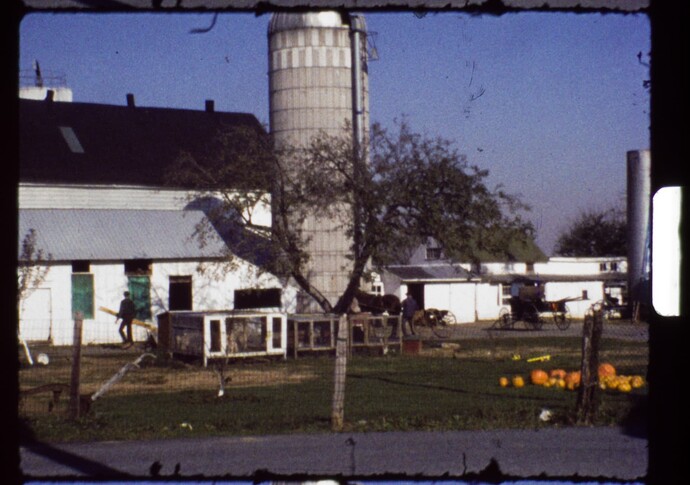

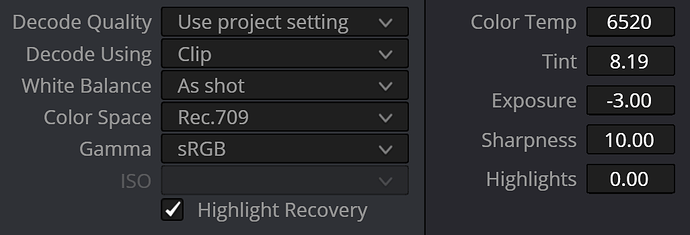

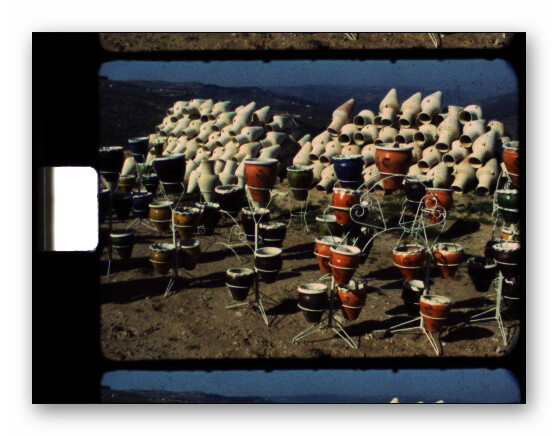

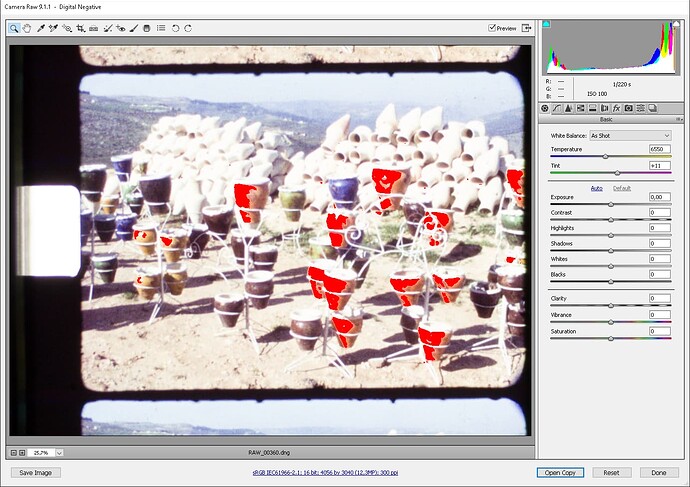

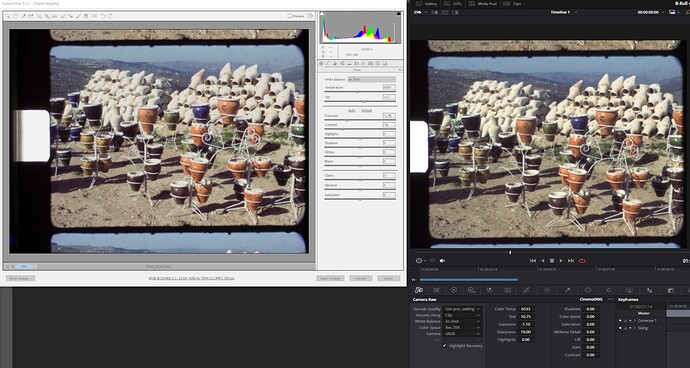

As noted, I defer all the other adjustments to a later processing stage. However, if you really want to optimize your footage further already at the level of the “Camera Raw” module, all controls are there. Here’s an example for your image:

I basically first looked at the “Shadow” setting, followed by the appropriate “Highlights” setting. Then, I readjusted “Exposure” and “Lift”. Once that was done, a little tweak on the “Tint” setting gave me a more autumn-like look of the frame. Of course, all of these settings are also available in the “Primary → Color Wheels” tab, and that’s where I usually do this stuff.

I have the suspicion that the controls of the “Camera Raw” tab work in linear space, whereas the controls in the “Color Wheels” tab work in the timeline’s color space. If so, they would not be equivalent. I still need to investigate that further.
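Why would that matter? A toy example, under loud assumptions: a pure power-law encoding with gamma 2.4 stands in for the timeline color space, and a plain additive offset stands in for a control such as “Lift”. Neither is the actual math DaVinci uses; the sketch only shows that the same numeric setting gives different results depending on the space it is applied in.

```python
GAMMA = 2.4  # assumed power-law encoding, a stand-in for the timeline space


def encode(linear: float) -> float:
    """Linear light -> encoded value (pure power law, assumption)."""
    return linear ** (1.0 / GAMMA)


def decode(encoded: float) -> float:
    """Encoded value -> linear light (inverse of encode)."""
    return encoded ** GAMMA


def offset_in_linear(encoded_value: float, offset: float) -> float:
    """Apply the offset to linear data, then return the encoded result."""
    return encode(decode(encoded_value) + offset)


def offset_in_encoded(encoded_value: float, offset: float) -> float:
    """Apply the same offset directly to the encoded value."""
    return encoded_value + offset


for value in (0.2, 0.8):
    print(
        value,
        round(offset_in_linear(value, 0.05), 4),
        round(offset_in_encoded(value, 0.05), 4),
    )
```

In this toy model, the linear-space offset lifts dark values more and bright values less than the encoded-space offset, so no single setting in one space reproduces the other across the whole tonal range.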

Anyway. Here’s the data hidden in your .dng-file:

---- ExifTool ----

ExifTool Version Number : 12.01

---- File ----

File Name : 00001082.dng

Directory : D:/Downloads

File Size : 24 MB

File Modification Date/Time : 2024:10:06 11:15:26+02:00

File Access Date/Time : 2024:10:06 12:12:19+02:00

File Creation Date/Time : 2024:10:06 11:13:01+02:00

File Permissions : rw-rw-rw-

File Type : DNG

File Type Extension : dng

MIME Type : image/x-adobe-dng

Exif Byte Order : Little-endian (Intel, II)

---- EXIF ----

Subfile Type : Full-resolution image

Image Width : 4056

Image Height : 3040

Bits Per Sample : 16

Compression : Uncompressed

Photometric Interpretation : Color Filter Array

Make : RaspberryPi

Camera Model Name : PiDNG / PiCamera2

Orientation : Horizontal (normal)

Samples Per Pixel : 1

Software : PiDNG

Tile Width : 4056

Tile Length : 3040

Tile Offsets : 756

Tile Byte Counts : 24660480

CFA Repeat Pattern Dim : 2 2

CFA Pattern 2 : 2 1 1 0

Exposure Time : 1/54

Exposure Time : 1/50

ISO : 100

Exif Version : 0221

Shutter Speed Value : 1/50

ISO : 100

DNG Version : 1.4.0.0

DNG Backward Version : 1.0.0.0

Black Level Repeat Dim : 2 2

Black Level : 4096 4096 4096 4096

White Level : 65535

Color Matrix 1 : 0.4528 -0.0541 -0.0491 -0.4921 1.2553 0.2047 -0.149 0.2623 0.5059

Camera Calibration 1 : 1 0 0 0 1 0 0 0 1

Camera Calibration 2 : 1 0 0 0 1 0 0 0 1

As Shot Neutral : 0.3203177552 1 0.6680472977

Baseline Exposure : 1

Calibration Illuminant 1 : D65

Raw Data Unique ID : 323233303034333636303030

Profile Name : PiDNG / PiCamera2 Profile

Profile Embed Policy : No Restrictions

---- XMP ----

XMP Toolkit : Adobe XMP Core 5.6-c011 79.156380, 2014/05/21-23:38:37

Creator Tool : PiDNG

Rating : 0

Metadata Date : 2024:10:04 17:24:15+02:00

Document ID : 6DE917EB3F26A9DCE53ED5A4CD92EBC6

Original Document ID : 6DE917EB3F26A9DCE53ED5A4CD92EBC6

Instance ID : xmp.iid:af0ce410-d71d-5e40-8d00-6e4243ad0bba

Format : image/dng

Raw File Name : 00001082.dng

Version : 9.1.1

Process Version : 6.7

White Balance : As Shot

Auto White Version : 134348800

Saturation : 0

Sharpness : 25

Luminance Smoothing : 0

Color Noise Reduction : 25

Vignette Amount : 0

Shadow Tint : 0

Red Hue : 0

Red Saturation : 0

Green Hue : 0

Green Saturation : 0

Blue Hue : 0

Blue Saturation : 0

Vibrance : 0

Hue Adjustment Red : 0

Hue Adjustment Orange : 0

Hue Adjustment Yellow : 0

Hue Adjustment Green : 0

Hue Adjustment Aqua : 0

Hue Adjustment Blue : 0

Hue Adjustment Purple : 0

Hue Adjustment Magenta : 0

Saturation Adjustment Red : 0

Saturation Adjustment Orange : 0

Saturation Adjustment Yellow : 0

Saturation Adjustment Green : 0

Saturation Adjustment Aqua : 0

Saturation Adjustment Blue : 0

Saturation Adjustment Purple : 0

Saturation Adjustment Magenta : 0

Luminance Adjustment Red : 0

Luminance Adjustment Orange : 0

Luminance Adjustment Yellow : 0

Luminance Adjustment Green : 0

Luminance Adjustment Aqua : 0

Luminance Adjustment Blue : 0

Luminance Adjustment Purple : 0

Luminance Adjustment Magenta : 0

Split Toning Shadow Hue : 0

Split Toning Shadow Saturation : 0

Split Toning Highlight Hue : 0

Split Toning Highlight Saturation: 0

Split Toning Balance : 0

Parametric Shadows : 0

Parametric Darks : 0

Parametric Lights : 0

Parametric Highlights : 0

Parametric Shadow Split : 25

Parametric Midtone Split : 50

Parametric Highlight Split : 75

Sharpen Radius : +1.0

Sharpen Detail : 25

Sharpen Edge Masking : 0

Post Crop Vignette Amount : 0

Grain Amount : 0

Color Noise Reduction Detail : 50

Color Noise Reduction Smoothness: 50

Lens Profile Enable : 0

Lens Manual Distortion Amount : 0

Perspective Vertical : 0

Perspective Horizontal : 0

Perspective Rotate : 0.0

Perspective Scale : 100

Perspective Aspect : 0

Perspective Upright : 0

Auto Lateral CA : 0

Exposure 2012 : -3.00

Contrast 2012 : 0

Highlights 2012 : 0

Shadows 2012 : 0

Whites 2012 : 0

Blacks 2012 : 0

Clarity 2012 : 0

Defringe Purple Amount : 0

Defringe Purple Hue Lo : 30

Defringe Purple Hue Hi : 70

Defringe Green Amount : 0

Defringe Green Hue Lo : 40

Defringe Green Hue Hi : 60

Dehaze : 0

Tone Map Strength : 0

Convert To Grayscale : False

Tone Curve Name : Medium Contrast

Tone Curve Name 2012 : Linear

Camera Profile : PiDNG / PiCamera2 Profile

Camera Profile Digest : 57D05906E51D353BBF302E5EE4A0CC2E

Lens Profile Setup : LensDefaults

Has Settings : True

Has Crop : False

Already Applied : False

Photographic Sensitivity : 100

History Action : saved

History Instance ID : xmp.iid:af0ce410-d71d-5e40-8d00-6e4243ad0bba

History When : 2024:10:04 17:24:15+02:00

History Software Agent : Adobe Photoshop Camera Raw 9.1.1 (Windows)

History Changed : /metadata

Tone Curve : 0, 0, 32, 22, 64, 56, 128, 128, 192, 196, 255, 255

Tone Curve Red : 0, 0, 255, 255

Tone Curve Green : 0, 0, 255, 255

Tone Curve Blue : 0, 0, 255, 255

Tone Curve PV2012 : 0, 0, 255, 255

Tone Curve PV2012 Red : 0, 0, 255, 255

Tone Curve PV2012 Green : 0, 0, 255, 255

Tone Curve PV2012 Blue : 0, 0, 255, 255

---- Composite ----

CFA Pattern : [Blue,Green][Green,Red]

Image Size : 4056x3040

Megapixels : 12.3

Shutter Speed : 1/50

To obtain that data, simply use the tool named in the first line of the listing, “ExifTool”. It works because a .dng-file is at heart a simple .tif-file, just with a few extra tags. There are online tools available for that (search: “exiftool online”) if you do not want to set up this nice program on your computer.
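If you want to work with such a listing programmatically, here is a minimal sketch of a parser for the text format shown above. The group headings and the repeated Exposure Time entry suggest that the listing came from something like `exiftool -a -g 00001082.dng`, but that exact command line is my assumption. The function name `parse_exiftool_listing` is made up; the sample lines are copied from the listing.

```python
import re

# Matches group headings such as "---- EXIF ----".
GROUP_RE = re.compile(r"^-{4}\s*(.+?)\s*-{4}$")


def parse_exiftool_listing(text):
    """Parse 'Tag : value' lines into {group: [(tag, value), ...]}.

    A list of pairs is used instead of a dict so that duplicate tags
    (like the two Exposure Time entries) are preserved.
    """
    groups = {}
    current = None
    for raw_line in text.splitlines():
        line = raw_line.strip()
        if not line:
            continue
        heading = GROUP_RE.match(line)
        if heading:
            current = heading.group(1)
            groups.setdefault(current, [])
            continue
        if current is None or ":" not in line:
            continue
        # Split on the first colon only; values may contain colons (dates).
        name, value = line.split(":", 1)
        groups[current].append((name.strip(), value.strip()))
    return groups


sample = """
---- File ----
File Modification Date/Time : 2024:10:06 11:15:26+02:00
---- EXIF ----
Exposure Time : 1/54
Exposure Time : 1/50
Black Level : 4096 4096 4096 4096
White Level : 65535
"""

parsed = parse_exiftool_listing(sample)
for group, tags in parsed.items():
    print(group, tags)
```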

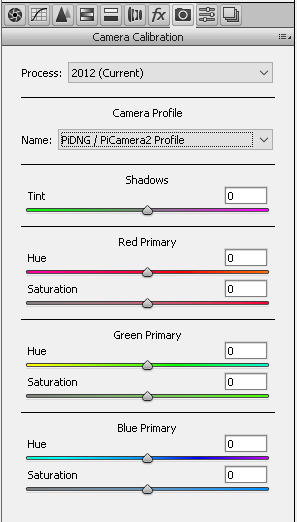

Some final thoughts. What your Camera Raw is indicating is that it is using the profile information embedded in your .dng-file. You could opt to use a different profile, but I would not recommend that in our case.

The “profile” consists of quite a lot of data (see the above listing), but not all of that data is actually used. We already discussed the tags Black Level and White Level, which are the first parameters used in “developing” your raw data. Once these have been applied, color information is used to convert the raw data into a decent color space (say, sRGB or so). A lot of tags come into play here, depending on the type of .dng-file. In our case, a .dng-file created by picamera2, we are working with the simplest type one can imagine: only the As Shot Neutral tag and the Color Matrix 1 tag need to be used. Other tags in the picamera2-dng are nonsense, like the Calibration Illuminant 1. Normal dngs actually feature at least two Color Matrix and Calibration Illuminant tags. This is a wild west area with a lot of uncertainties, so it is not clear whether your raw developer interprets the information in a picamera2-dng correctly. Judging from my tests, at least DaVinci, RawTherapee and Darktable do it right - no idea how Adobe’s stuff performs here.
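To make the role of these four tags a bit more concrete, here is a rough sketch using the values from the listing above. It is not what any particular raw developer does internally. It assumes the usual DNG convention that Color Matrix 1 maps XYZ to camera space (so its inverse maps camera space to XYZ) and that As Shot Neutral is the camera-space response to a neutral color. Demosaicing, chromatic adaptation and the conversion to an output space such as sRGB are left out, and all function names are made up.

```python
BLACK_LEVEL = 4096
WHITE_LEVEL = 65535
AS_SHOT_NEUTRAL = (0.3203177552, 1.0, 0.6680472977)
COLOR_MATRIX_1 = (  # assumed to map XYZ -> camera space
    (0.4528, -0.0541, -0.0491),
    (-0.4921, 1.2553, 0.2047),
    (-0.149, 0.2623, 0.5059),
)


def normalize(raw_value):
    """Map a raw sample to 0..1 using Black Level and White Level."""
    scaled = (raw_value - BLACK_LEVEL) / (WHITE_LEVEL - BLACK_LEVEL)
    return min(max(scaled, 0.0), 1.0)


def white_balance(camera_rgb):
    """Scale each channel so that the as-shot neutral becomes (1, 1, 1)."""
    return tuple(c / n for c, n in zip(camera_rgb, AS_SHOT_NEUTRAL))


def invert_3x3(m):
    """Invert a 3x3 matrix via its adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if det == 0:
        raise ValueError("matrix is singular")
    return (
        ((e * i - f * h) / det, (c * h - b * i) / det, (b * f - c * e) / det),
        ((f * g - d * i) / det, (a * i - c * g) / det, (c * d - a * f) / det),
        ((d * h - e * g) / det, (b * g - a * h) / det, (a * e - b * d) / det),
    )


def mat_vec(m, v):
    """Multiply a 3x3 matrix with a 3-vector."""
    return tuple(sum(m[r][k] * v[k] for k in range(3)) for r in range(3))


CAMERA_TO_XYZ = invert_3x3(COLOR_MATRIX_1)

raw_rgb = (20000, 40000, 30000)  # made-up raw samples, for illustration only
camera_rgb = tuple(normalize(v) for v in raw_rgb)
print("white balanced:", white_balance(camera_rgb))
print("XYZ of as-shot neutral:", mat_vec(CAMERA_TO_XYZ, AS_SHOT_NEUTRAL))
```

The last line gives an estimate of the white point of the capture in XYZ; a full raw developer would adapt from that white point to the one of the output color space.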

EDIT: I noticed that the tags of your .dng-file look different from my raw files.

For example, in your file it’s Tile Offsets : 756 but in my (recently captured) file, it’s Tile Offsets : 760. Also, your .dng has two Exposure Time tags (?) and a Shutter Speed Value tag which is missing in mine. Lastly, there is an XMP Toolkit tag-section with a lot of auxiliary data, which indicates that you ran the original raw file through some converter? Or at least your Adobe Camera Raw sets some additional tags in your original .dng-file without telling you. In any case, the file you linked above is not the original file as created by picamera2. Whether this has an influence on how, for example, DaVinci interprets your raw data I do not know - default behaviour would be to ignore these tags, but you never know…
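Such a comparison is easy to automate once the tags of both files are available as dictionaries. The following is only a sketch: the helper `diff_tags` is made up, and the two dictionaries contain just the few tags mentioned above (assuming that the XMP Toolkit tag is absent from the file straight out of picamera2), not the complete metadata of either file.

```python
def diff_tags(a, b):
    """Return (tags only in a, tags only in b, tags whose values differ)."""
    only_a = sorted(set(a) - set(b))
    only_b = sorted(set(b) - set(a))
    changed = {
        key: (a[key], b[key])
        for key in sorted(set(a) & set(b))
        if a[key] != b[key]
    }
    return only_a, only_b, changed


# Partial tag sets, restricted to what is mentioned in the text above.
yours = {
    "Tile Offsets": "756",
    "Shutter Speed Value": "1/50",
    "XMP Toolkit": "Adobe XMP Core 5.6-c011 79.156380, 2014/05/21-23:38:37",
}
mine = {
    "Tile Offsets": "760",
}

only_yours, only_mine, changed = diff_tags(yours, mine)
print("only in yours:", only_yours)
print("only in mine:", only_mine)
print("different values:", changed)
```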