Hi all,

I would like to invite people to share scan results from their Super-8 scanners in this thread. This should give us some idea of how various old film stocks are holding up, and how well a given sensor/lens combination copes with the high contrast that classic color-reversal film throws at the hardware.

The focus should be on the unprocessed capture coming straight from the camera, not on the end result of a possibly elaborate and finely tuned post-processing scheme. While scans of a SMPTE Super-8 test film are already available here on the forum, in this thread I want to focus on real-world examples. The SMPTE test film is not a color-reversal film; its resolution and density are in no way comparable to what your scanner has to deal with when you digitize old home movies.

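To get a rough idea of the resolution of the lens/camera combination, one can use a frame that includes some dirt. Dust sits directly on the film, so its edges are not softened by the film camera's optics and mainly show what the scanner itself can resolve. A simple way to find such a frame is to look near a cut between scenes.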
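If you want a number rather than an eyeball judgement, one simple proxy (my suggestion, not an established procedure in this thread) is the 10%-90% rise distance of an intensity profile taken across the border of a dust speck: the fewer pixels the edge needs to go from dark to bright, the sharper the sensor/lens combination. Here is a minimal sketch with NumPy, assuming you have already extracted a 1D profile across the edge; the function name `rise_width_10_90` is hypothetical.

```python
import numpy as np

def rise_width_10_90(profile):
    """Return the 10%-90% rise distance (in pixels) of an edge profile.

    `profile` is a 1D sequence of intensities sampled across a single edge,
    e.g. along a line crossing the border of a dust speck. Linear
    interpolation between samples gives sub-pixel crossing positions.
    """
    p = np.asarray(profile, dtype=float)
    if p[0] > p[-1]:              # turn a falling edge into a rising one
        p = p[::-1]
    lo, hi = p.min(), p.max()
    if hi == lo:
        raise ValueError("flat profile, no edge to measure")
    norm = (p - lo) / (hi - lo)   # normalise to the range 0..1

    def crossing(level):
        # first sample index at which the normalised profile reaches `level`
        i = int(np.argmax(norm >= level))
        if i == 0:
            return 0.0
        # interpolate between sample i-1 (below level) and sample i
        x0, x1 = norm[i - 1], norm[i]
        return (i - 1) + (level - x0) / (x1 - x0)

    return crossing(0.9) - crossing(0.1)
```

The result is in scan pixels, so to compare scanners running at different resolutions you would divide it by the number of pixels per mm on the film.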

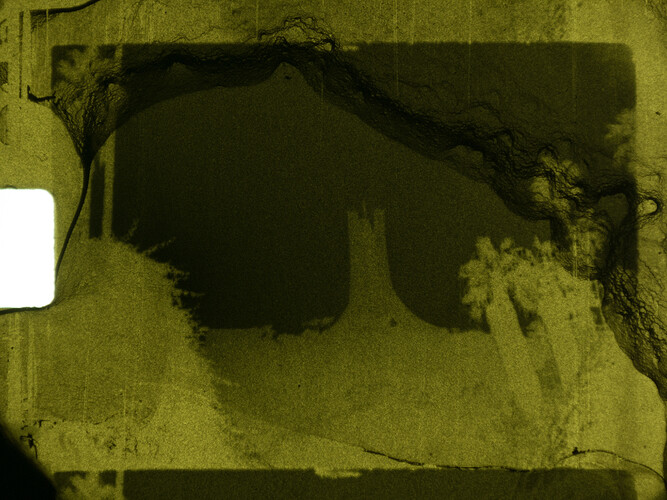

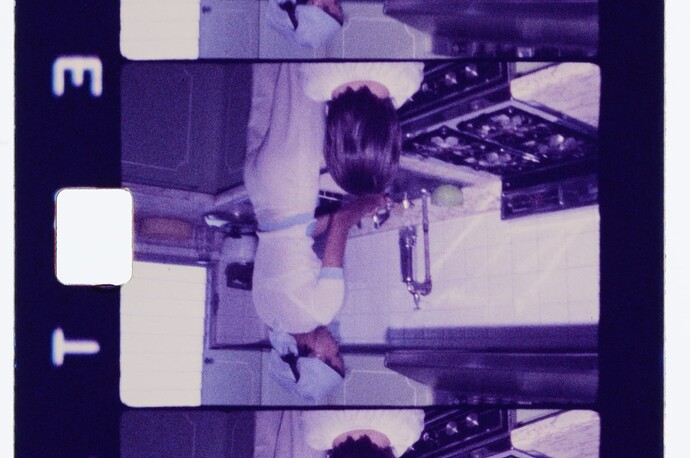

So, here we go: the first example is a Kodachrome 40 film, scanned with a see3cam CU135 and a Schneider Componon-S 50 mm. The film camera was a Revue S10 deluxe, originally made by Chinon (Japan), with a Zoom F 1:1.7 f = 6.5-65 mm lens. This particular film was developed more than a year after the initial exposure, and during that wait it endured freezing temperatures as well as temperatures above 40°C (don’t ask).

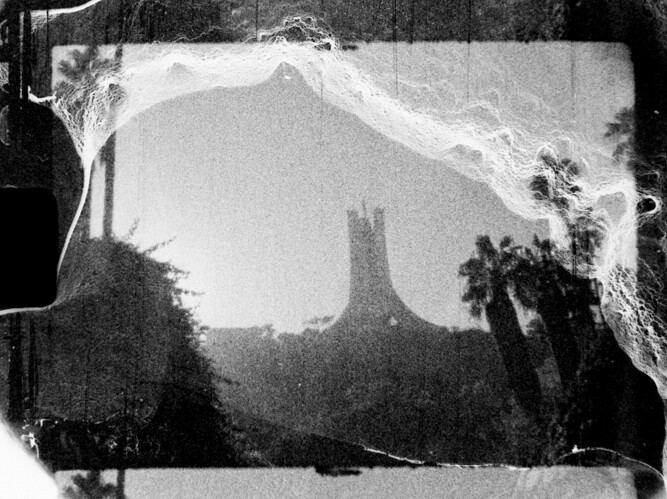

Same parameters as above, only filmed with a “Bauer C Royal 8E” and developed immediately after exposure:

I guess one can notice the difference in film camera resolution as well as the difference in film grain.

Here’s an example scanned again with the see3cam/Schneider combination and filmed with the Revue/Chinon camera, but this time the film is Agfachrome:

In contrast to the very first example above, here the camera was certainly operating at its widest aperture, f/1.7, and this shows in the sharpness of the original frame.

I think it would be interesting to simply share other scan results, ideally together with information about the film camera used, the scanning camera/lens combination and anything else available. The idea is to slowly build a database of scan results that might help to design better systems, for example by showing the minimal requirements as well as the intrinsic limitations of the format. Super-8 color-reversal film is probably one of the most challenging scanning situations, due to the small frame size and the huge dynamic range of color-reversal film.
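To put "huge dynamic range" into numbers: optical density is D = log10(1 / transmittance), so a density range ΔD corresponds to a contrast ratio of 10^ΔD, or ΔD / log10(2) photographic stops. A small sketch of that arithmetic follows; the function names are hypothetical, and the example densities in the usage note are placeholders, not measured values for Kodachrome, Agfachrome or any other specific stock.

```python
import math

def density_range_to_stops(d_min, d_max):
    """Convert a film density range into photographic stops.

    Optical density D = log10(1 / transmittance), so a density difference
    dD means a transmittance ratio of 10**dD, i.e. dD / log10(2) stops.
    """
    if d_max < d_min:
        raise ValueError("d_max must be >= d_min")
    return (d_max - d_min) / math.log10(2)

def contrast_ratio(d_min, d_max):
    """Ratio of light transmitted by the clearest vs. the densest area."""
    return 10 ** (d_max - d_min)
```

With a hypothetical density range from 0.2 to 3.2, `density_range_to_stops(0.2, 3.2)` gives roughly 10 stops and `contrast_ratio(0.2, 3.2)` about 1000:1, which the sensor has to capture in a single exposure unless you resort to multiple exposures.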

By the way - in every frame I posted, there is some dust hiding, as promised, to judge the sensor/lens combination!

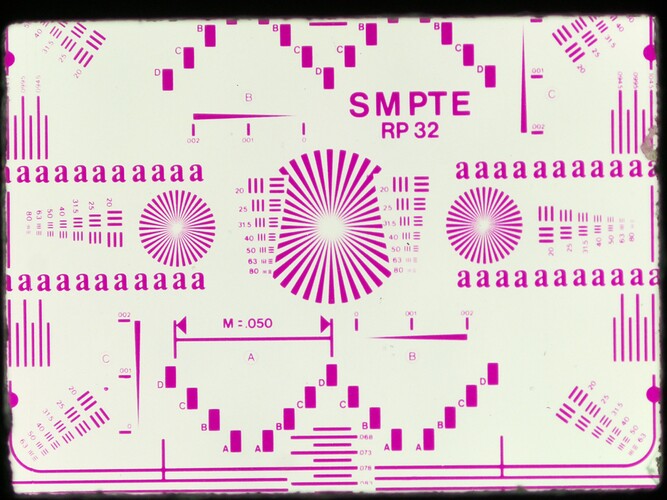

Now here’s a scan comparison without that luxury (I simply could not find a frame with dirt). It compares two different scan sensors, namely a Raspi HQ sensor vs. a lens-shading-compensated Raspi v1 sensor. Both scans used the trusted Schneider Componon-S 50 mm; the film stock is Agfachrome and the camera is the Revue/Chinon. Here’s the scan result of the v1 sensor (original scan resolution only 1292x972 px):

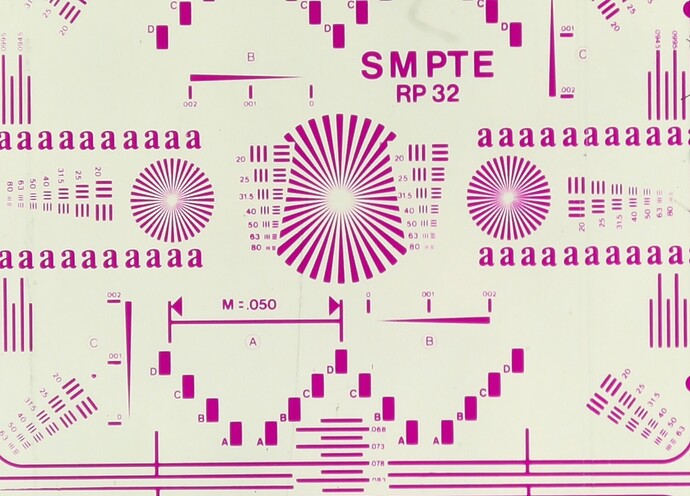

Here, for comparison, is the same frame scanned with the Raspi HQ sensor. The scan resolution is much higher (2028x1520 px), so we start to see the film grain, but the visual resolution seems to be worse. So what happened?
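A quick way to put those two pixel counts in perspective is to convert them into sampling density on the film itself, and from that the Nyquist limit in line pairs per mm. This is only a sketch: it assumes the imaged strip of film is spread evenly over the full sensor width, and the 6 mm field width used below is a hypothetical value (roughly a Super-8 frame plus a little margin), not a measurement of either setup.

```python
def sampling_on_film(width_px, field_width_mm):
    """Return (pixels per mm, Nyquist limit in line pairs per mm) on the film.

    Assumes `field_width_mm` of film is imaged evenly across `width_px`
    pixels (no cropping, square pixels).
    """
    if field_width_mm <= 0:
        raise ValueError("field width must be positive")
    px_per_mm = width_px / field_width_mm
    # one line pair (one dark + one bright line) needs at least two pixels
    return px_per_mm, px_per_mm / 2.0
```

Under that assumption, the v1 scan (1292 px wide) samples about 215 px/mm, i.e. about 108 lp/mm, while the HQ scan (2028 px wide) reaches about 338 px/mm, i.e. about 169 lp/mm. Roughly 1.6 times finer sampling is at least consistent with the grain starting to show up in the HQ scan.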

Well, let’s enlarge a part of the frame. Here’s the v1 version:

Nice, relatively sharp image content, only mildly noticeable grain, right?

And now for the HQ version at the same resolution level:

What happens here? Highly visible film grain and lousy definition of image borders…

Well, the v1 sensor is a mobile-phone camera sensor, while the HQ sensor is geared more towards camcorders. Like typical mobile sensors, the v1 sensor employs heavy noise reduction and image sharpening, either on the sensor itself or during the initial image processing. In our scanning application, this removes not only the sensor noise but also the film grain! And of course, the (adaptive) sharpening also improves the visual appearance of image edges. If you compare both images closely, you will notice the patchy, plastic-like look of the v1 sensor, a sure indication of adaptive smoothing/sharpening.

The HQ sensor, on the other hand, is tuned differently and happily shows the film grain. Sharpening is applied here as well, but it is less noticeable.
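To illustrate the mechanism, here is a toy 1D simulation: a step edge with added white noise standing in for film grain, run through a moving-average "noise reduction" followed by classic unsharp masking. This is not the actual algorithm of the v1 sensor or of any Raspberry Pi software pipeline (those are adaptive and edge-aware, which is what produces the patchy look); the function names, filter radii and noise level are all hypothetical choices for the sketch.

```python
import numpy as np

def box_blur(row, radius):
    """Moving-average smoothing, a crude stand-in for noise reduction."""
    k = np.ones(2 * radius + 1) / (2 * radius + 1)
    padded = np.pad(row, radius, mode="edge")     # avoid darkening at the ends
    return np.convolve(padded, k, mode="valid")   # same length as `row`

def unsharp_mask(row, radius, amount):
    """Classic unsharp masking: add back a scaled (original - blurred) detail."""
    return row + amount * (row - box_blur(row, radius))

def simulate(seed=0, n=200, grain_sigma=0.05):
    """Return the noise std in a flat area before and after processing."""
    rng = np.random.default_rng(seed)
    clean = np.where(np.arange(n) < n // 2, 0.2, 0.8)    # a step edge
    scanned = clean + rng.normal(0.0, grain_sigma, n)     # "grain" as white noise
    processed = unsharp_mask(box_blur(scanned, 3), 2, 1.0)
    flat = slice(10, n // 2 - 10)                         # stay away from the edge
    return scanned[flat].std(), processed[flat].std()
```

Calling `simulate()` shows the grain amplitude in the flat area dropping to well under half of its original value, while the unsharp mask restores some contrast at the edge; on real footage that combination is what makes a low-resolution mobile sensor look deceptively clean.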

One point worth mentioning: this issue is most probably caused by the pipeline that converts the raw sensor data into the JPEGs stored on disk. Most machine vision cameras are not designed to deliver “nice-looking” images in the first place. Also, one can avoid such effects by working directly with raw images. But there is a tendency to apply image processing algorithms already at the sensor level, so your raw image might not be as “raw” as you might imagine (for fun, just google “nikon star eater”).
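To show why such early processing matters, here is a toy hot-pixel (defect) filter of the general kind that can run before "raw" data is written out. I am not claiming this is what Nikon or any Raspi sensor actually does; the function name and threshold are hypothetical. It simply demonstrates that an outlier filter cannot tell a hot pixel from a genuine one-pixel bright detail, which is the "star eater" effect in a nutshell.

```python
import numpy as np

def hot_pixel_filter(img, threshold):
    """Clamp pixels that exceed the max of their 8 neighbours by > `threshold`.

    Such a filter removes defective (hot) pixels, but it equally removes any
    real detail that is only one pixel wide, e.g. a star or a tiny speck.
    """
    img = np.asarray(img, dtype=float)
    padded = np.pad(img, 1, mode="edge")
    h, w = img.shape
    # stack the 8 neighbours of every pixel into one array
    neighbours = np.stack([
        padded[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
        for dy in (-1, 0, 1) for dx in (-1, 0, 1) if (dy, dx) != (0, 0)
    ])
    neigh_max = neighbours.max(axis=0)
    out = img.copy()
    mask = img - neigh_max > threshold
    out[mask] = neigh_max[mask]          # replace outliers by neighbourhood max
    return out
```

An isolated bright pixel on a uniform background is flattened to the background level, while a 2x2 bright spot of the same brightness survives untouched, because each of its pixels has an equally bright neighbour.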

Coming back to the original topic: it would be great to see other examples of Super-8 scans here in this thread. Other film stock, other film cameras, other sensors, other lenses and various scan resolutions, to get an idea what is needed and how much is needed for a great result.