Here’s a little status report on this. The challenge: my scanner is made of 3D-printed plastic parts, which is a bad choice for optical setups. This material is far too wobbly to be of much use. Furthermore, as @npiegdon discovered, even a sturdier setup will show tiny movements whenever somebody anywhere in the house moves or closes a door. At least if your scanner isn’t mounted on a sturdy concrete slab…

@npiegdon detects such movement with a nicely constructed sensor and simply retakes an image if movement is detected. While I even have the parts for building @npiegdon’s sensor, I was wondering whether one could use the camera itself as the sensor.

Having no movement between captures of the same frame is also essential if one wants to average away any of the sensor noise, as discussed above.

So, the idea is simple. Instead of taking a single capture, several are taken in rapid succession (in my case, currently only two). In full-resolution mode the HQ sensor runs at 10 fps, so taking two captures per frame reduces this to only 5 fps. That is the disadvantage of this approach, but if we want to average over a set of captures anyway, it is a price we have to pay regardless.
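To make the trade-off concrete, here is a small sketch on synthetic data (not real scanner captures): averaging N aligned captures of the same frame reduces uncorrelated sensor noise by roughly √N, while the effective scan rate drops to the sensor rate divided by N. The flat test image and the noise level `sigma` are assumptions chosen only for illustration.

```python
import numpy as np


def effective_fps(sensor_fps: float, captures_per_frame: int) -> float:
    """Scan rate left when every film frame needs several captures."""
    return sensor_fps / captures_per_frame


def average_captures(captures):
    """Average aligned captures of one frame in float64 (no uint8 overflow)."""
    return np.mean(np.stack([c.astype(np.float64) for c in captures]), axis=0)


rng = np.random.default_rng(0)
scene = np.full((200, 300), 1000.0)   # flat synthetic frame, not real data
sigma = 20.0                          # assumed per-capture noise level
captures = [scene + rng.normal(0.0, sigma, scene.shape) for _ in range(2)]

single_noise = np.std(captures[0] - scene)
averaged_noise = np.std(average_captures(captures) - scene)

print(effective_fps(10.0, 2))         # 5.0
print(single_noise / averaged_noise)  # roughly sqrt(2), i.e. about 1.41
```

This only holds if the captures are aligned; any movement between them blurs the average instead of just removing noise.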

So the approach is as follows: immediately after capturing a frame, I capture a second one, using code similar to this:

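```python
def grabFrame(self):
    # self is assumed to be a Picamera2 (sub)class instance
    # first capture: processed image, raw buffer and metadata
    request = self.capture_request()
    self.frame = request.make_array('main')
    self.raw = request.make_buffer("raw")
    self.metadata = request.get_metadata()
    request.release()

    # grab a second frame immediately, used only for the movement check
    request = self.capture_request()
    self.frameB = request.make_array('main')
    request.release()

    return self.frame, self.metadata
```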
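If more than two captures per frame are wanted, the same pattern generalizes to a loop. This is only a sketch, assuming `camera` is a Picamera2 instance (or the subclass that owns `grabFrame`); the function name `grab_captures` is hypothetical. Releasing each request in a `finally` block hands its buffers back to the camera even if an exception occurs.

```python
def grab_captures(camera, n=2):
    """Capture n consecutive 'main' images of the same film frame.

    camera is assumed to provide Picamera2's capture_request().
    Returns (frames, raw, metadata); raw and metadata come from the first
    capture only, mirroring grabFrame.
    """
    if n < 1:
        raise ValueError("n must be at least 1")
    frames = []
    raw = None
    metadata = None
    for i in range(n):
        request = camera.capture_request()
        try:
            frames.append(request.make_array("main"))
            if i == 0:
                # raw buffer and metadata from the first capture only
                raw = request.make_buffer("raw")
                metadata = request.get_metadata()
        finally:
            # return the buffers to the camera even if something above raised
            request.release()
    return frames, raw, metadata
```

With `n=2` this does the same as `grabFrame`, apart from storing the results on `self`.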

Before the raw data is sent to the PC client, the following operation is performed:

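```python
import cv2
import numpy as np

# per-pixel absolute difference between the two captures of the same frame
frame = cv2.absdiff(camera.frame, camera.frameB)
# a single movement indicator: mean difference over all pixels and channels
delta = np.average(frame)
```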
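To see how this indicator behaves, here is a NumPy-only sketch of the same metric on synthetic data. For 8-bit images `np.average(cv2.absdiff(a, b))` equals the mean of `|a - b|`; the function name `capture_delta` and the random test texture are assumptions for illustration. Random texture is a worst case, since real film frames are smoother and a one-row shift changes them less.

```python
import numpy as np


def capture_delta(frame_a: np.ndarray, frame_b: np.ndarray) -> float:
    """Mean absolute difference between two captures of the same frame.

    The difference is computed in a wider signed type so it cannot wrap
    around, which matches cv2.absdiff on uint8 inputs.
    """
    diff = np.abs(frame_a.astype(np.int32) - frame_b.astype(np.int32))
    return float(np.mean(diff))


rng = np.random.default_rng(1)
# synthetic textured "film frame", not real scanner data
frame = rng.integers(0, 256, size=(240, 320, 3), dtype=np.uint8)
shifted = np.roll(frame, 1, axis=0)   # whole image moved down by one row

print(capture_delta(frame, frame))    # 0.0 for identical captures
print(capture_delta(frame, shifted))  # large: neighbouring rows are uncorrelated here
```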

Here’s the “normal” result, with no movement in the house:

and here’s someone walking around in the room with the scanner:

Clearly, there are horizontal bands of large movement between the frame and the reference frame. These bands are mainly horizontal because the rolling-shutter sensor reads the image out row by row, and the movements have a rather low frequency compared to that readout: rows read out at nearly the same moment see nearly the same displacement. Speaking of which, a global-shutter sensor won’t save you here; the movement will still be there, just in a global way, that is, the complete frame will be misaligned.

Such a capture should not be used for averaging; you will lose resolution if you do. Luckily, even my simple np.average seems to be a reasonably good indicator. There might be other measures that perform better; that is still a subject for research.
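Since the disturbance shows up as horizontal bands, one candidate alternative to the whole-frame average (an untested idea, not something evaluated on the scanner) is to compute the difference row by row and take the worst band. The band height `band_rows` and all helper names are assumptions; the synthetic frames only illustrate why a global mean dilutes a narrow band.

```python
import numpy as np


def row_profile(frame_a, frame_b):
    """Mean absolute difference of each image row (over columns and channels)."""
    diff = np.abs(frame_a.astype(np.int32) - frame_b.astype(np.int32))
    return diff.reshape(diff.shape[0], -1).mean(axis=1)


def global_delta(frame_a, frame_b):
    """Whole-frame average, equivalent to the np.average indicator."""
    return float(row_profile(frame_a, frame_b).mean())


def band_delta(frame_a, frame_b, band_rows=16):
    """Worst mean difference over any run of band_rows consecutive rows."""
    profile = row_profile(frame_a, frame_b)
    kernel = np.ones(band_rows) / band_rows
    return float(np.convolve(profile, kernel, mode="valid").max())


rng = np.random.default_rng(2)
frame = rng.integers(0, 256, size=(480, 640), dtype=np.uint8)
disturbed = frame.copy()
# simulate a narrow band (rows 200..219) displaced sideways by one pixel
disturbed[200:220] = np.roll(frame[200:220], 1, axis=1)

print(global_delta(frame, disturbed))  # small: the band is diluted over 480 rows
print(band_delta(frame, disturbed))    # large: the band fills the whole window
```

Note that this does not address the dependence on image content; a band measure would still need its own threshold.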

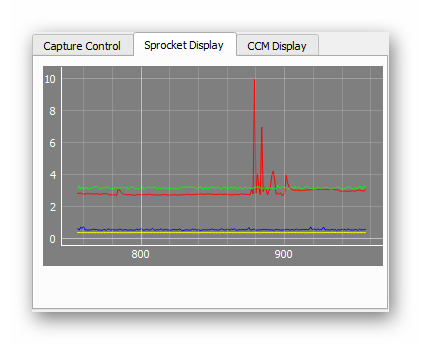

For illustration, here’s a plot of the actual scan operation over time (frame number). The green line indicates the time between captures (here slightly above 3 s), the blue line shows the tension measured at the supply wheel, and the yellow line the tension at the take-up wheel. Finally, the red line shows the differences between the consecutive captures of the same frame. From frame 880 to about 900, I was walking around the room:

(With the approach described, I would currently set the threshold for discarding and redoing a capture at a value of 4.0. There is, however, a slight dependence of the error value on the image content (note the slightly higher average value after frame 900). Maybe something more intelligent than the np.average used here should be employed. We will see.)
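A retry loop around that threshold could look like the following minimal sketch. The value 4.0 is the one mentioned above; `capture_pair`, `max_attempts` and the function name are hypothetical, and the metric is the same mean absolute difference, computed with NumPy instead of `cv2.absdiff`.

```python
import numpy as np

THRESHOLD = 4.0  # discard level suggested above for the mean absolute difference


def capture_until_still(capture_pair, threshold=THRESHOLD, max_attempts=5):
    """Redo a double capture until both images agree closely enough.

    capture_pair: hypothetical callable returning two consecutive captures
    of the same film frame as NumPy arrays (e.g. a wrapper around grabFrame).
    Returns (frame_a, frame_b, delta, attempt) for the first still pair.
    """
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        frame_a, frame_b = capture_pair()
        # for 8-bit images this equals np.average(cv2.absdiff(a, b))
        diff = np.abs(frame_a.astype(np.int32) - frame_b.astype(np.int32))
        delta = float(np.mean(diff))
        if delta <= threshold:
            return frame_a, frame_b, delta, attempt
    raise RuntimeError(
        f"scanner still moving after {max_attempts} attempts (delta {delta:.2f})"
    )
```

Giving up after a fixed number of attempts keeps a persistently shaking scanner from stalling the scan silently.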

So, at least with my scanner and my house, it is essential to monitor the differences caused by scanner movement between captures that are intended to be averaged to improve the noise characteristics. This might be possible by analysing the images from the sensor alone, without additional hardware.