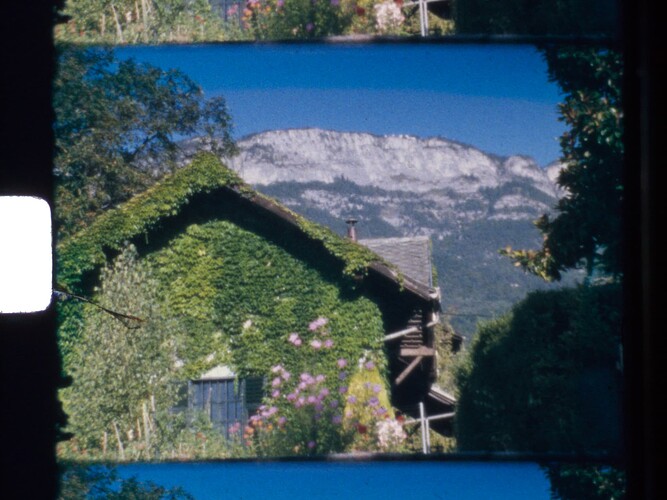

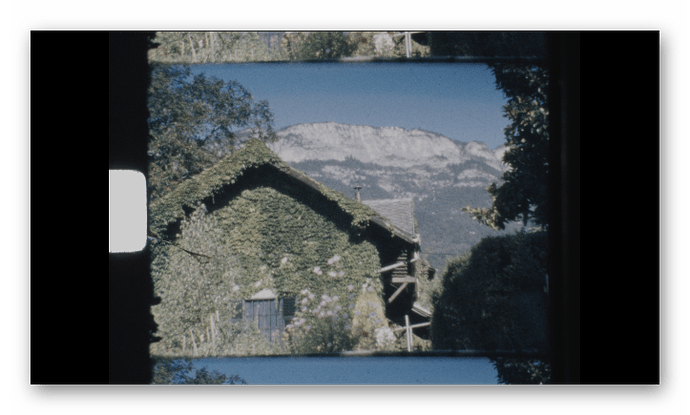

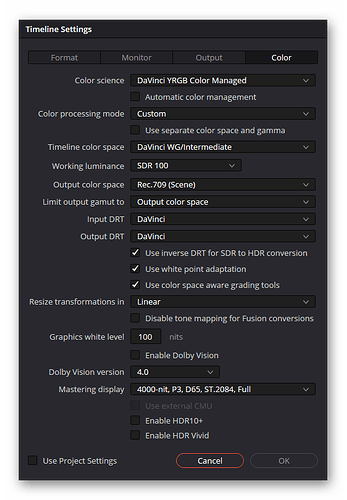

Let’s return to the original question: the strange banding in the blue sky of an Agfa Moviechrome scan (see the first post of the thread).

First a summary, as this is going to be a long post: the colors available in film stock greatly exceed the colors representable on a normal computer display (rec709 or sRGB). Mapping film stock to digital imagery therefore requires careful adjustment of the development process in order to preserve the color content of the original footage.

Now for the details.

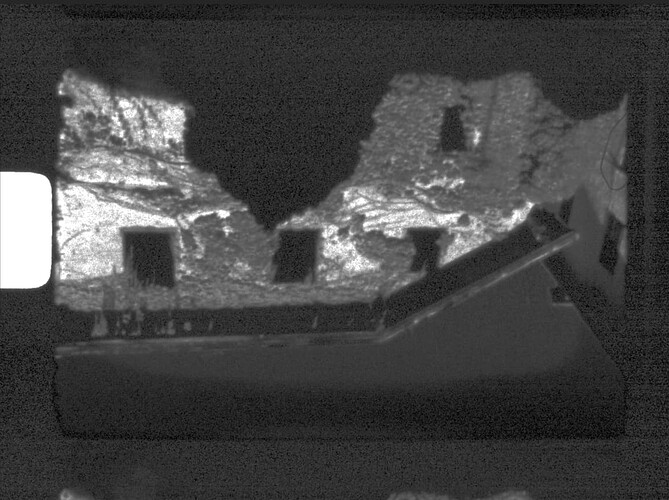

To get a basis for analysis, I created a real HDR from an image stack of 32 different exposures of the frame, with exposure times ranging from 0.24 msec to 0.3 sec. I first used that data with Debevec’s algorithm to estimate the gain curves of my HQ sensor. This is the result:

The plot shows two things: first, the estimate of the gain curves is not too far off (it would be perfect if all curves fell onto each other); and second, your average jpg covers a range of about 8 stops.
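For anyone who wants to try this on their own scans, here is a minimal sketch of the least-squares solve behind such gain curves, modelled on the `gsolve` routine from the Debevec and Malik paper. It is not the code I used; it assumes 8-bit pixel values (`n_levels=256`), a triangle ("hat") weighting and the smoothness weight `lam` as a free parameter. The helper name `hat_weights` is made up for illustration.

```python
import numpy as np


def hat_weights(n_levels=256):
    """Triangle weighting: trust mid-tones, distrust values near black and clipping."""
    z = np.arange(n_levels)
    return np.minimum(z, n_levels - 1 - z).astype(np.float64)


def gsolve(Z, log_t, lam, n_levels=256):
    """Recover the log inverse response g(z) = ln(E) + ln(t) (Debevec and Malik).

    Z      -- (N, P) integer pixel values of N sample pixels in P exposures
    log_t  -- (P,) natural log of the exposure times
    lam    -- weight of the smoothness term
    Returns (g, lnE): g has n_levels entries, lnE one entry per sample pixel.
    """
    n_pix, n_exp = Z.shape
    w = hat_weights(n_levels)
    n_rows = n_pix * n_exp + 1 + (n_levels - 2)
    A = np.zeros((n_rows, n_levels + n_pix))
    b = np.zeros(n_rows)

    k = 0
    # Data term: w(z) * (g(z) - ln E_i) = w(z) * ln t_j
    for i in range(n_pix):
        for j in range(n_exp):
            z = Z[i, j]
            A[k, z] = w[z]
            A[k, n_levels + i] = -w[z]
            b[k] = w[z] * log_t[j]
            k += 1

    # The curve is only defined up to an offset: pin g(middle level) = 0
    A[k, n_levels // 2] = 1.0
    k += 1

    # Smoothness term: lam * w(z) * (second difference of g at z) = 0
    for z in range(1, n_levels - 1):
        A[k, z - 1] = lam * w[z]
        A[k, z] = -2.0 * lam * w[z]
        A[k, z + 1] = lam * w[z]
        k += 1

    x, *_ = np.linalg.lstsq(A, b, rcond=None)
    return x[:n_levels], x[n_levels:]
```

The per-channel curves in the plot would come from running such a solve separately on the red, green and blue samples. The paper's rule of thumb is to pick enough sample pixels N that N·(P−1) exceeds the number of levels, spread over the whole tonal range.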

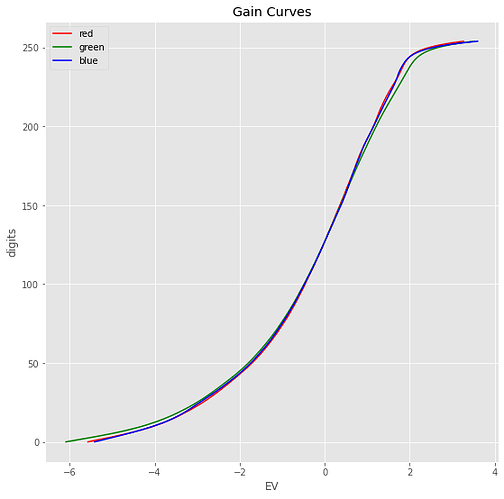

Now, using the gain curves to calculate the real HDR, one ends up with image data having the following histogram:
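The merge step itself can be sketched as a weighted average over the exposures, again following Debevec and Malik: every exposure votes for ln E = g(z) − ln t, and mid-tones get the most weight. This is a hypothetical helper, not my actual pipeline; it also computes the span of the result in stops (EV) as log2(max/min), which is how a figure like "20 EVs" can be read off the radiance map.

```python
import numpy as np


def hat_weights(n_levels=256):
    """Triangle weighting: mid-tones count most, values near 0 or clipping count least."""
    z = np.arange(n_levels)
    return np.minimum(z, n_levels - 1 - z).astype(np.float64)


def merge_hdr(Z, log_t, g):
    """Weighted merge of an exposure stack into a relative radiance map.

    Z     -- (P, ...) integer pixel values, one slice per exposure
    log_t -- (P,) natural log of the exposure times
    g     -- log inverse response curve, g[z] = ln E + ln t
    Pixels that are unusable in every exposure come back as NaN.
    """
    w = hat_weights(len(g))[Z]
    log_t = np.asarray(log_t, dtype=np.float64).reshape((-1,) + (1,) * (Z.ndim - 1))
    num = np.sum(w * (g[Z] - log_t), axis=0)
    den = np.sum(w, axis=0)
    with np.errstate(invalid="ignore", divide="ignore"):
        ln_e = np.where(den > 0, num / den, np.nan)
    return np.exp(ln_e)


def dynamic_range_ev(radiance):
    """Span of the finite, positive radiance values in stops (EV)."""
    r = radiance[np.isfinite(radiance) & (radiance > 0)]
    return float(np.log2(r.max() / r.min()))
```

The radiance map is only relative (it is defined up to a global scale), which is why the EV span, being a ratio, is the meaningful number to quote.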

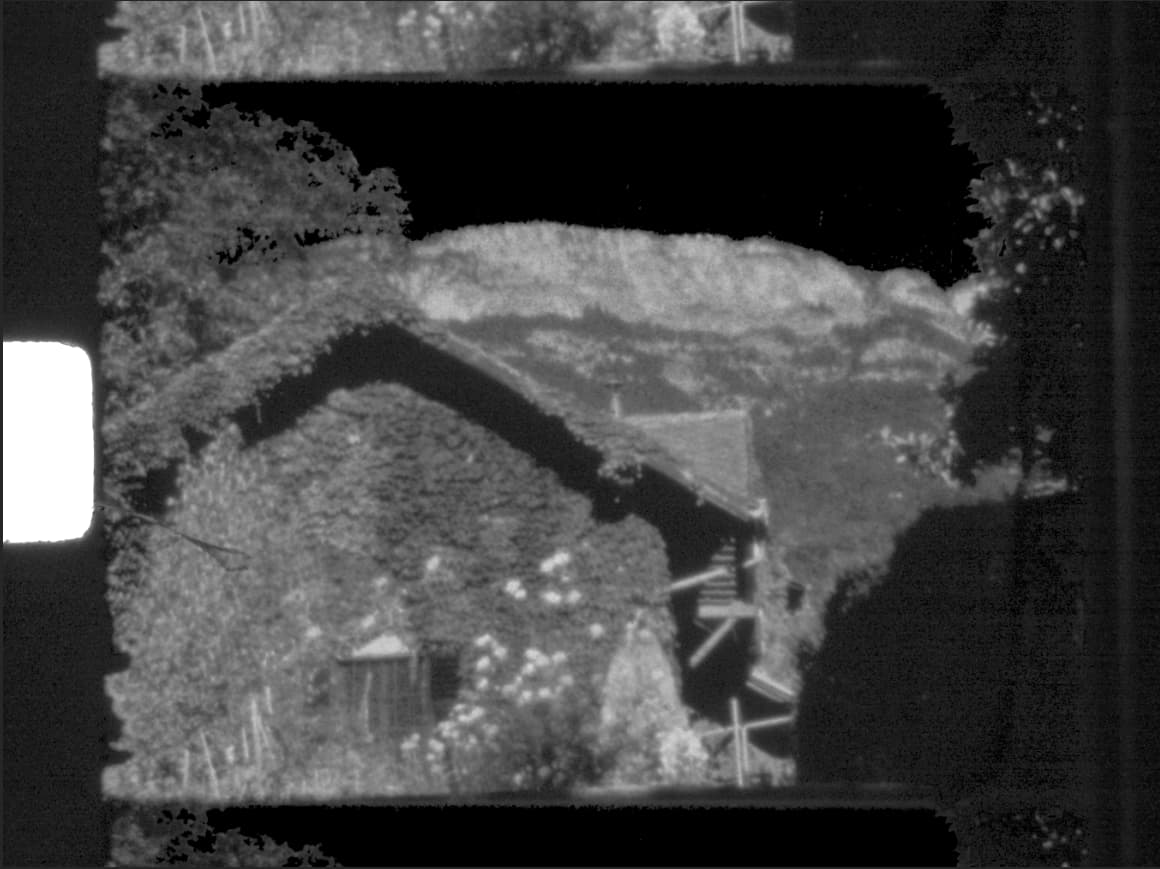

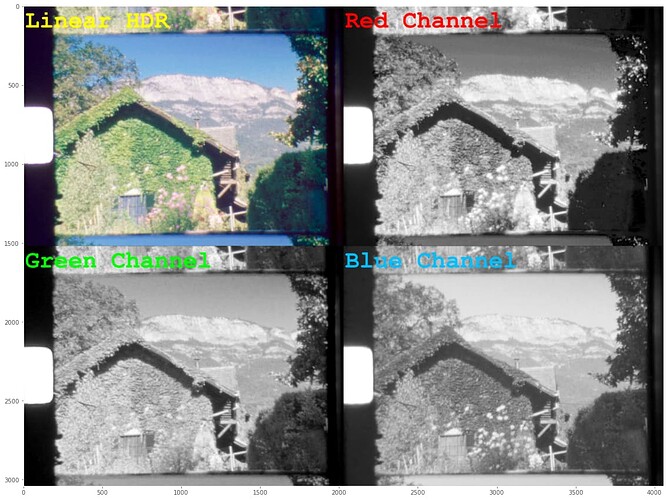

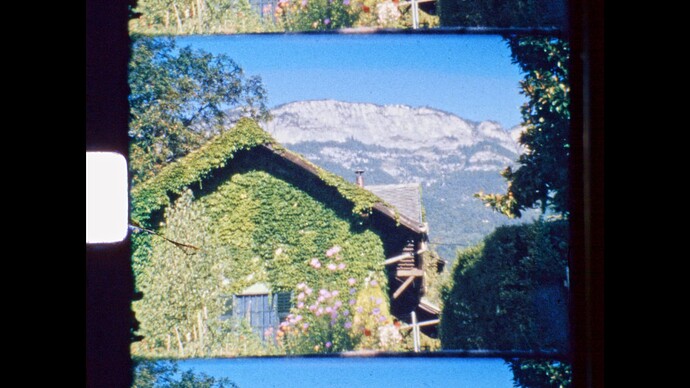

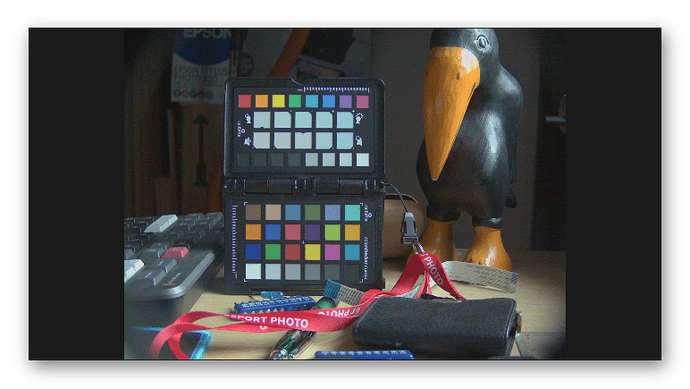

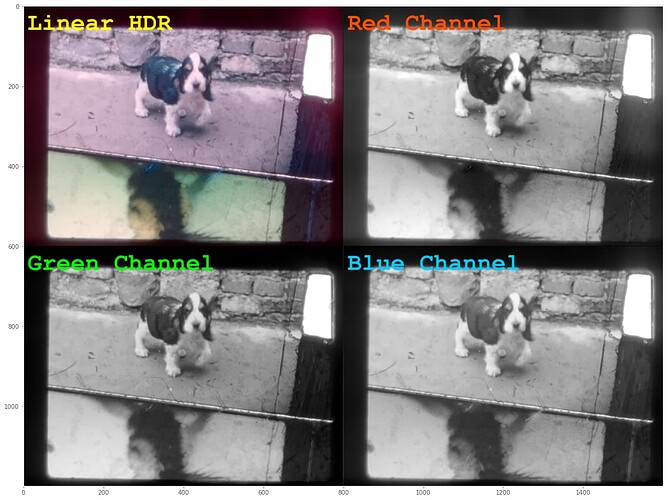

I find this rather impressive: the data of the scan ranges over 20 EVs! Granted, the real image data probably spans only 12 to 15 EVs (stops). Here’s a display of the HDR result:

Looking at the data in the sky, faint bands of different color are visible here as well. So it’s safe to assume that the banding is actually real, sort of. The question is: why is it so much more visible in the .dng-file when developed by the usual raw converters?

To answer this question, I implemented the raw development pipeline step by step and checked the data after each step.

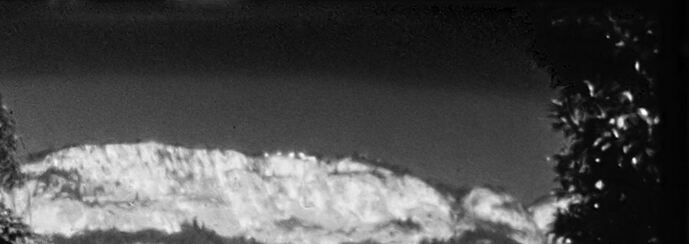

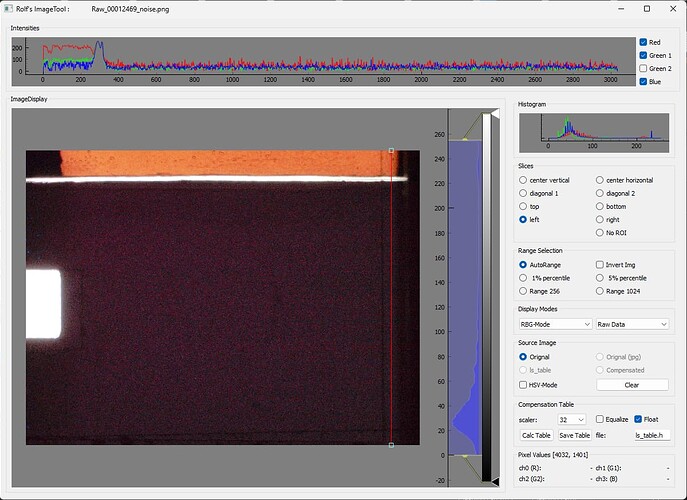

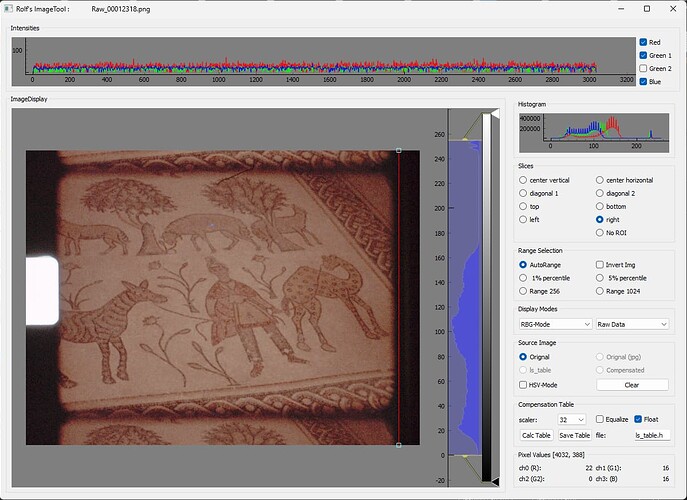

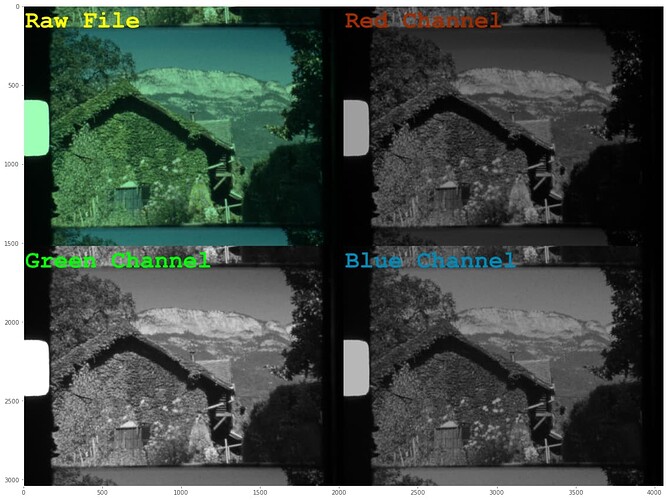

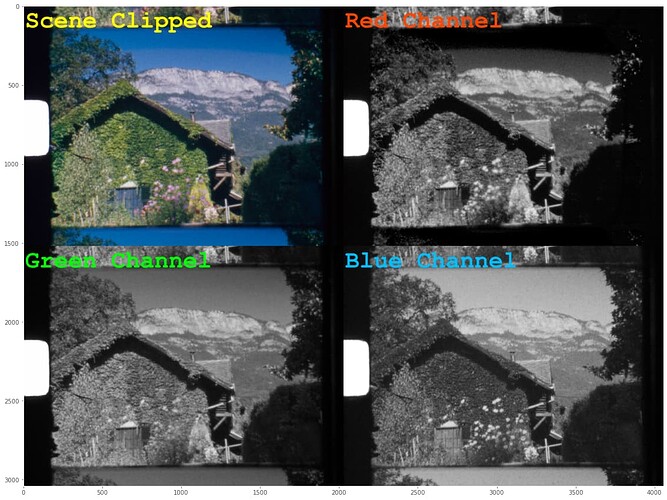

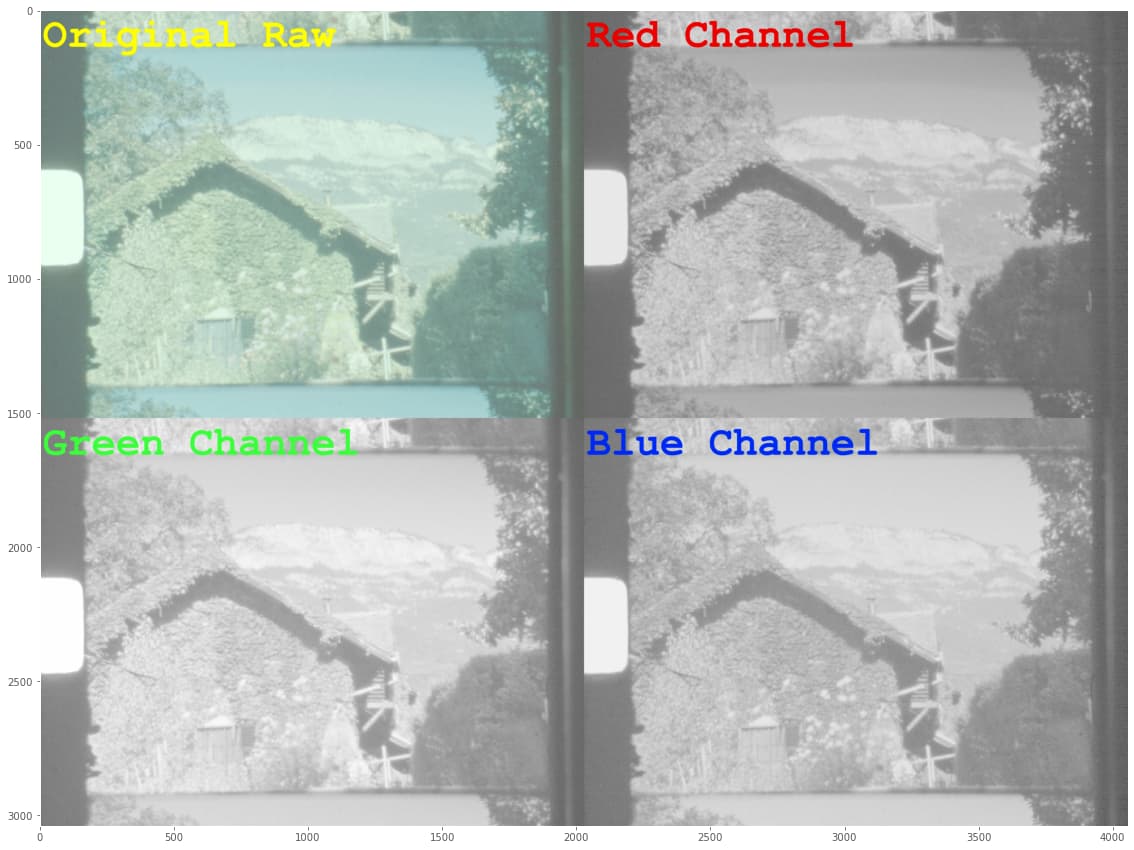

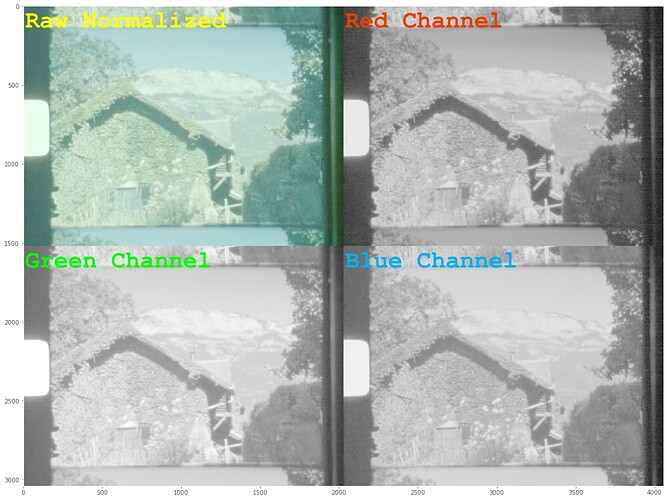

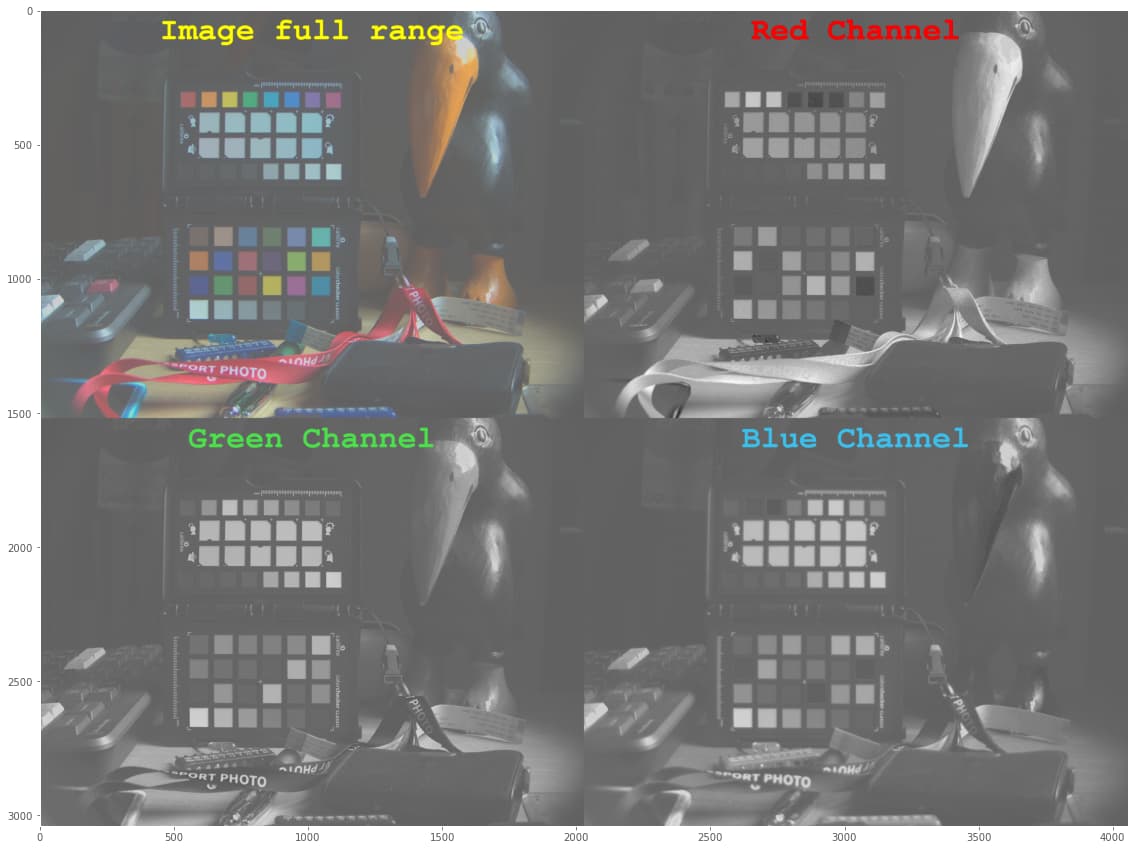

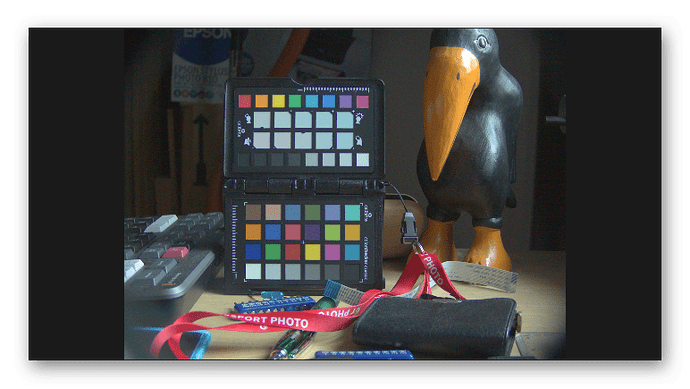

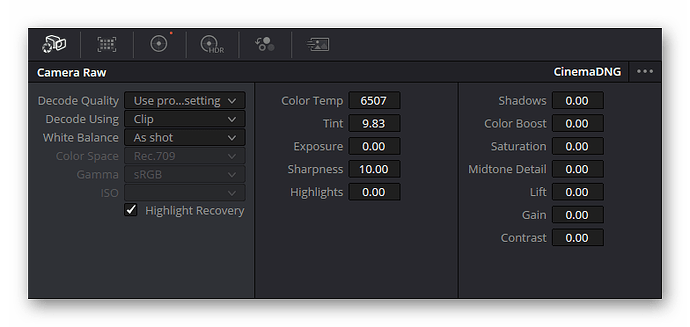

Now, the very first step in raw development is to de-Bayer the image. During that process, the blacklevels and whitelevels are also taken into account, in effect normalizing the image data. Here’s the result of these steps:

On the top left is the resulting image; it features the familiar greenish tint of raw data that is not yet color managed. The other quadrants of the plot show the data in the red, green and blue channels, and in the sky one can see two nice bands in the red channel.
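The normalization part of this step can be sketched as follows. This is a simplified stand-in, not what real raw converters do: it uses a half-size de-Bayer (one RGB pixel per 2x2 tile, greens averaged) instead of full-resolution interpolation. The Bayer layout and the white level are assumptions; check the `CFAPattern` and `WhiteLevel` tags of your own .dng-files. The function name is hypothetical.

```python
import numpy as np

# Position (row, col) of each color site inside the 2x2 Bayer tile.
# Which layout the HQ sensor's .dng uses is an assumption here.
BAYER_OFFSETS = {
    "RGGB": {"R": (0, 0), "G1": (0, 1), "G2": (1, 0), "B": (1, 1)},
    "BGGR": {"B": (0, 0), "G1": (0, 1), "G2": (1, 0), "R": (1, 1)},
}


def normalize_and_debayer(raw, black_level, white_level, pattern="RGGB"):
    """Half-size de-Bayer: one RGB pixel per 2x2 tile, the two greens averaged.

    Values are mapped so that black_level -> 0.0 and white_level -> 1.0.
    Nothing is clipped, so noise below the black level stays negative.
    """
    # Convert to float first, so that subtracting the black level cannot wrap around
    norm = (raw.astype(np.float64) - black_level) / (white_level - black_level)
    off = BAYER_OFFSETS[pattern]

    def plane(name):
        r, c = off[name]
        return norm[r::2, c::2]

    green = 0.5 * (plane("G1") + plane("G2"))
    return np.stack([plane("R"), green, plane("B")], axis=-1)
```

Keeping the result in floating point, rather than clipping at 0, preserves the information that a later step (or a later decision) may still need.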

As a side note: some colors are already clipped at this step. Some clipping is intentional (in order to avoid magenta-tinted highlights), some not so much. Specifically, the blacklevels of the sensor are subtracted from the raw data; in the case of the HQ sensor, these have a value of 4096 units. That information is contained in the .dng-file for the raw converter to use. However, the lowest values actually present in the raw Bayer data are red: 3920, green1: 4032, green2: 4016 and blue: 3986. So subtracting the blacklevel as specified in the .dng-file will yield negative values for some pixels of the image. Not so good, but I think all raw converters handle that situation gracefully. There are no visual artifacts in the above image either.
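To check this on your own files, one can measure per CFA plane how far the data dips below the specified black level. With the minimum values quoted above, red sits 176 units and blue 110 units below 4096. The helpers below are hypothetical. The second one illustrates why simply clamping at zero is not ideal: cutting off the negative half of the noise around black shifts the mean of dark areas upwards.

```python
import numpy as np


def below_black_report(cfa_planes, black_level):
    """Per CFA plane: (lowest value minus black level, fraction of samples below black).

    cfa_planes  -- dict mapping a plane name ("R", "G1", ...) to its raw samples
    black_level -- black level taken from the .dng-file
    """
    report = {}
    for name, plane in cfa_planes.items():
        d = np.asarray(plane, dtype=np.int64) - black_level
        report[name] = (int(d.min()), float(np.mean(d < 0)))
    return report


def clamp_bias(plane, black_level):
    """How much clamping negatives to zero raises the mean of a dark patch (raw units)."""
    d = np.asarray(plane, dtype=np.float64) - black_level
    return float(np.maximum(d, 0.0).mean() - d.mean())
```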

The next step a standard raw converter performs is to apply the whitebalance coefficients used at the time of capture to the raw data. In the case of the HQ sensor, these are either the inverse of the red and blue color gains you specified when taking the capture, or, worse, the inverse of the color gains libcamera came up with at capture time, based on the information available in the tuning file. None of this will happen if you set the whitebalance manually in your raw converter, for example by using the color picker to indicate a grey surface in the image.

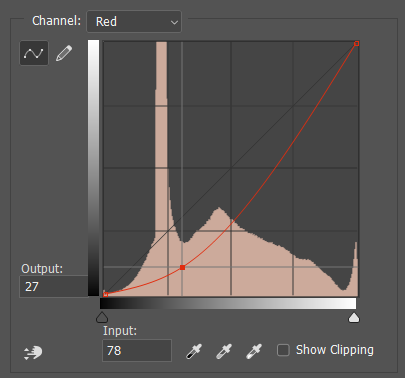

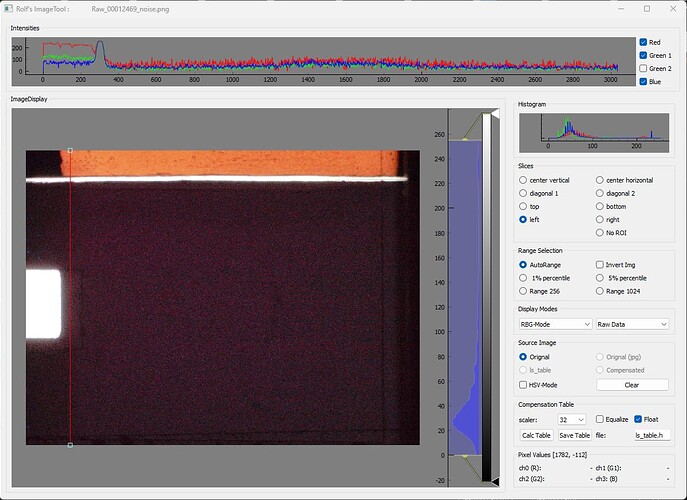

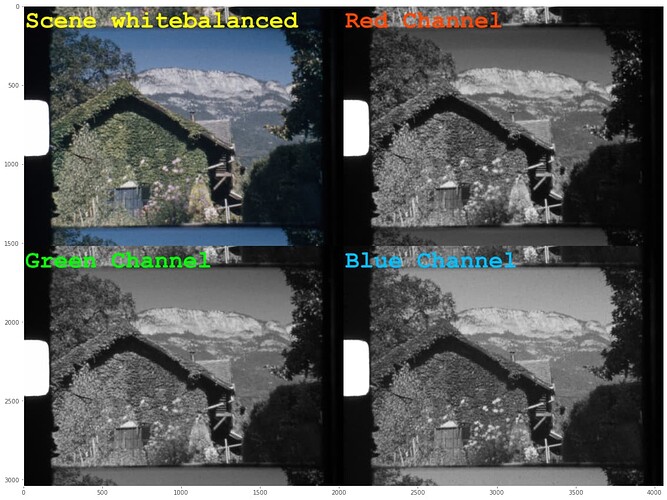

However, if you do not interfere manually at that stage, the whitebalance values from the .dng-file will be used. In my case, these values are fixed and set by my capture software when the capture starts. Applying the whitebalance, we end up with the following image:

In fact, not much has changed from the previous image, as whitebalancing is just a simple multiplication of each color channel. If anything, the banding in the red channel is slightly reduced. The colors in the image already look better, but somehow not yet “right”.
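As a sketch, this step amounts to one multiplication per channel. The function below is hypothetical; it assumes the whitebalance is stored as the DNG `AsShotNeutral` tag, which holds the inverse of the color gains, and it keeps green fixed. The gain values in the example (2.0 for red, 2.5 for blue) are made up.

```python
import numpy as np


def white_balance(rgb, as_shot_neutral):
    """Multiply each channel by the reciprocal of the AsShotNeutral entry.

    AsShotNeutral holds the camera-space value of a neutral (grey) surface,
    i.e. the inverse of the red/blue color gains used at capture time.
    The gains are normalized so that the green channel stays unchanged.
    """
    gains = 1.0 / np.asarray(as_shot_neutral, dtype=np.float64)
    gains = gains / gains[1]
    return np.asarray(rgb, dtype=np.float64) * gains


# A greenish raw pixel becomes neutral grey with gains 2.0 (red) and 2.5 (blue)
balanced = white_balance([[0.1, 0.2, 0.08]], [0.5, 1.0, 0.4])
```

Since every channel is only scaled, bands already present in a channel keep their position and shape; they can become slightly stronger or weaker, but not appear or disappear.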

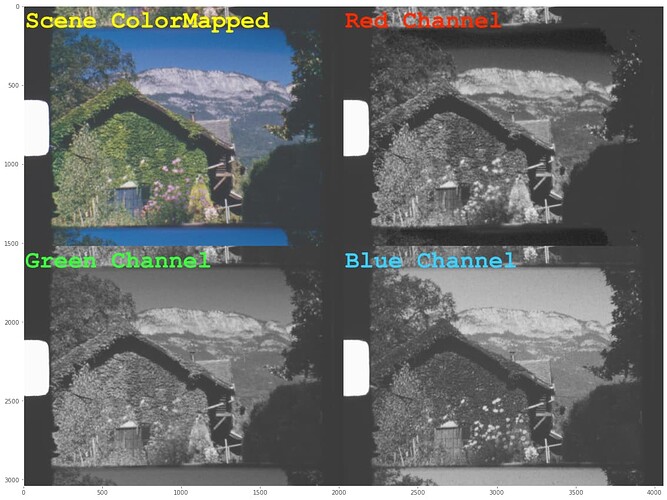

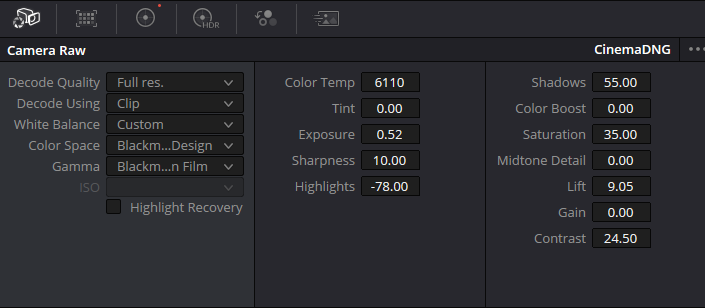

This is where the next step improves things. Here, a CCM (compromise color matrix) is applied to the image data. Like the whitebalancing coefficients, the CCM can come from a variety of places. For starters, there are usually two CCMs embedded in a .dng-file. The picamera2 library is slightly special here, as it includes only one CCM in the .dng-file. If you do not prevent the raw converter from doing so, it will use this CCM to arrive at the next step of development.

Note that this CCM is again calculated by libcamera at capture time, so it will heavily depend on the tuning file you were using at the time of capture. As one can see, the banding in the red channel is now more noticeable. This is probably caused by the CCM mixing negative components of the green and blue channels into the red channel.
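A small sketch makes the mixing effect concrete. The matrix below is invented for illustration and is not the CCM from my .dng-file or from any tuning file. Like typical camera matrices, its rows sum to 1 (greys stay grey) and its off-diagonal entries are negative.

```python
import numpy as np

# Hypothetical CCM for illustration only: rows sum to 1, negative off-diagonals.
CCM_EXAMPLE = np.array([
    [1.8, -0.6, -0.2],
    [-0.3, 1.6, -0.3],
    [0.0, -0.7, 1.7],
])


def apply_ccm(rgb, ccm):
    """Apply a 3x3 color matrix to an (..., 3) image: out = ccm @ pixel, per pixel."""
    return np.asarray(rgb, dtype=np.float64) @ np.asarray(ccm, dtype=np.float64).T


sky = np.array([[0.1, 0.3, 0.6]])   # a bluish, whitebalanced sky pixel (made up)
corrected = apply_ccm(sky, CCM_EXAMPLE)
```

For this pixel the red output is 1.8·0.1 − 0.6·0.3 − 0.2·0.6 = −0.12: the green and blue contributions subtract more than the red channel supplies, which is exactly how a saturated sky ends up with negative red values.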

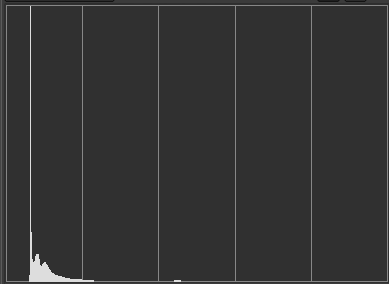

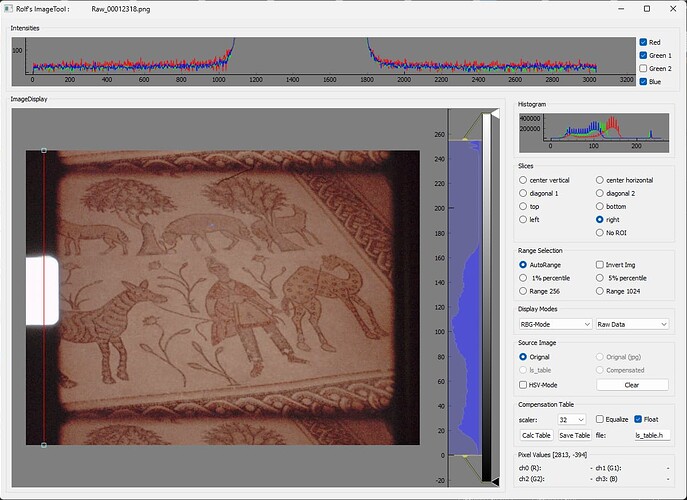

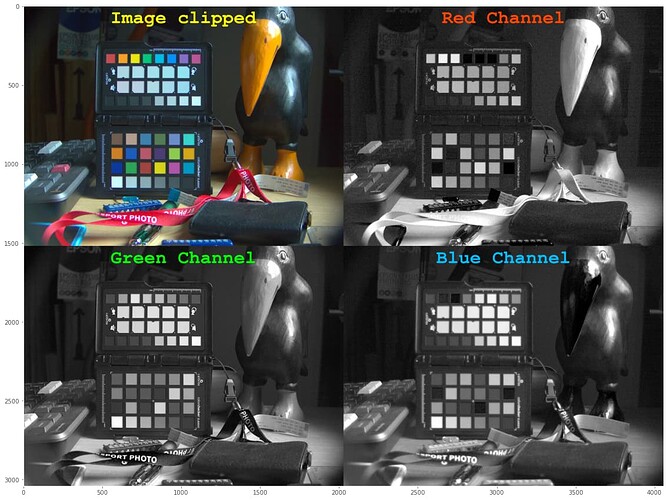

In fact, I cheated somewhat when processing and presenting the above images. All the calculations above were done with floating-point numbers. And indeed, looking at the minimal values in the color channels, one discovers that they are negative, that is, not displayable on any screen. Clipping the above image to the displayable range, we finally end up with this image:

and here the banding in the sky is enhanced by the clipping process!
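Why clipping creates visible band edges can be shown with a one-dimensional toy sky (hypothetical values): a smooth ramp in the red channel that dips below zero becomes a perfectly flat plateau, followed by a hard kink where the ramp re-enters the displayable range. That kink is what the eye picks up as a band border.

```python
import numpy as np


def clip_to_display(rgb):
    """Hard-clip linear RGB to the displayable range [0, 1]."""
    return np.clip(rgb, 0.0, 1.0)


def out_of_range_fraction(rgb):
    """Fraction of pixels with at least one channel outside [0, 1]."""
    rgb = np.asarray(rgb)
    return float(np.mean(np.any((rgb < 0.0) | (rgb > 1.0), axis=-1)))


# Red ramps smoothly from -0.10 to +0.10 across the "sky"; green and blue are constant.
red = np.arange(-10, 11) / 100.0
sky = np.stack([red, np.full(21, 0.3), np.full(21, 0.7)], axis=-1)
clipped = clip_to_display(sky)
```

In this toy, ten distinct input values of red all collapse to 0.0 after clipping, so the gradient information in that part of the sky is lost.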

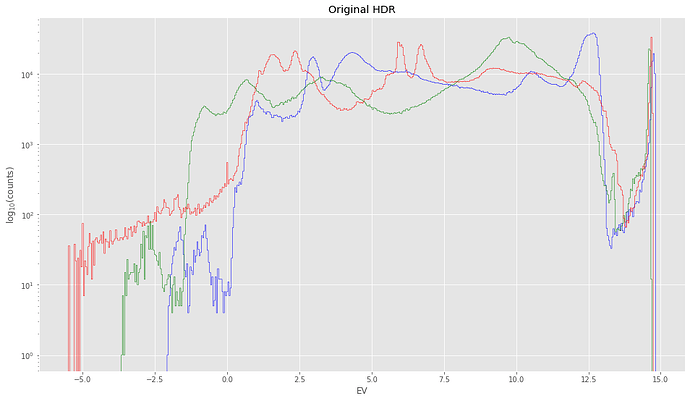

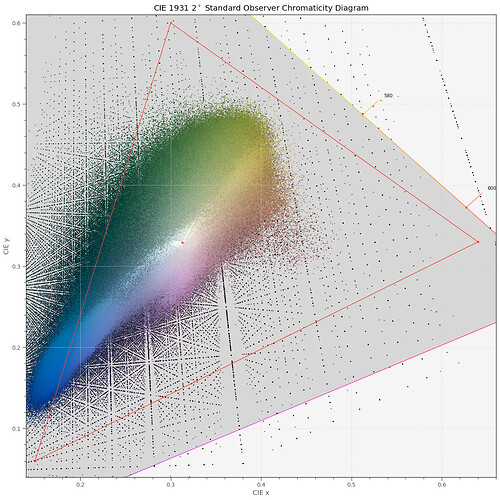

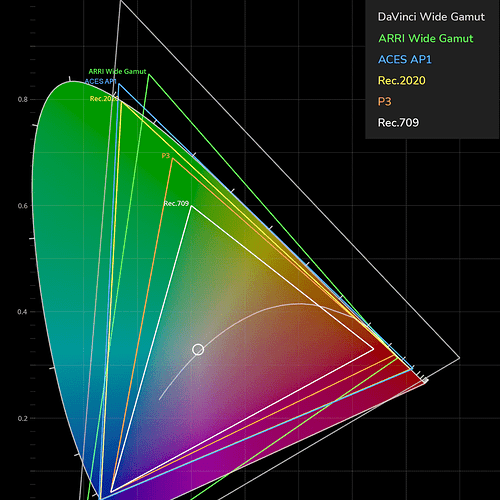

To give you another perspective, here’s the CIE diagram of the image tagged above as “Scene whitebalanced”:

The red triangle indicates the limits of the rec709 color space. All pixels lying outside of it will be clipped, occasionally leading to hard borders in the final image.
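Whether a pixel falls inside that triangle can be checked numerically. The sketch below (hypothetical helper names) converts linear rec709 RGB to CIE xy using the standard rec709/sRGB matrix and then runs a point-in-triangle test against the rec709 primaries. A pixel whose linear rec709 values have mixed signs, such as a negative red, lands outside.

```python
import numpy as np

# Linear rec709 (sRGB primaries, D65 white) to CIE XYZ.
RGB709_TO_XYZ = np.array([
    [0.4124, 0.3576, 0.1805],
    [0.2126, 0.7152, 0.0722],
    [0.0193, 0.1192, 0.9505],
])
# CIE xy chromaticities of the rec709 red, green and blue primaries.
REC709_XY = np.array([[0.64, 0.33], [0.30, 0.60], [0.15, 0.06]])


def xy_from_rgb709(rgb):
    """CIE xy chromaticity of linear rec709 RGB values (..., 3) -> (..., 2)."""
    xyz = np.asarray(rgb, dtype=np.float64) @ RGB709_TO_XYZ.T
    return xyz[..., :2] / xyz.sum(axis=-1, keepdims=True)


def inside_triangle(xy, tri=REC709_XY):
    """True where a chromaticity lies inside (or on) the triangle spanned by tri."""
    xy = np.asarray(xy, dtype=np.float64)

    def edge(p, q):
        # The sign tells on which side of the edge p -> q each point lies.
        return (q[0] - p[0]) * (xy[..., 1] - p[1]) - (q[1] - p[1]) * (xy[..., 0] - p[0])

    d = np.stack([edge(tri[0], tri[1]), edge(tri[1], tri[2]), edge(tri[2], tri[0])])
    return ~(np.any(d < 0, axis=0) & np.any(d > 0, axis=0))
```

Applied to every pixel of an unclipped float image, the share of `False` results is a quick measure of how much of the scan a rec709 export will clip.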

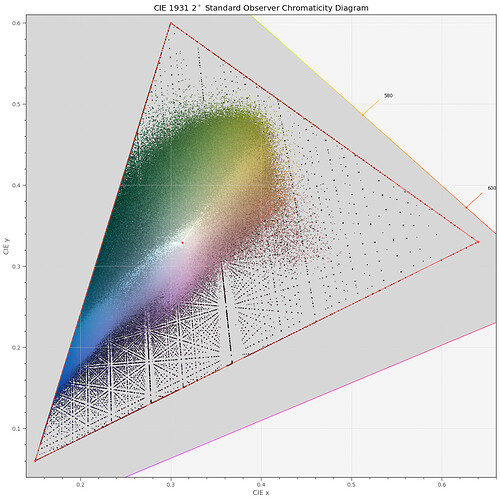

To make this bluntly explicit, these are the pixel values of the image tagged “Scene clipped”, for comparison:

Clearly, rather large areas in the blues and greens are just gone.

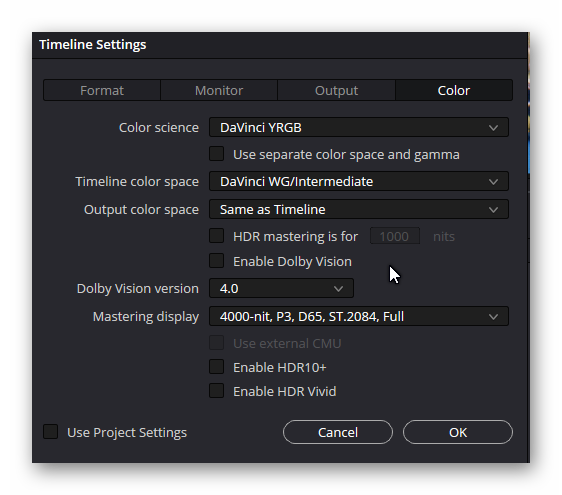

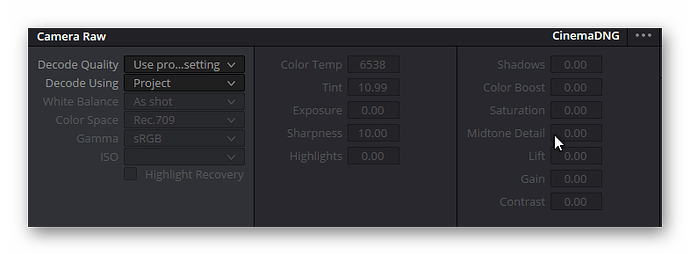

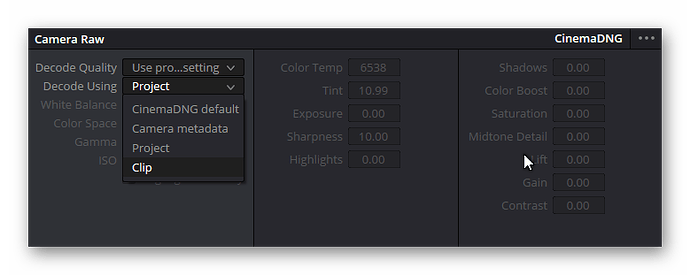

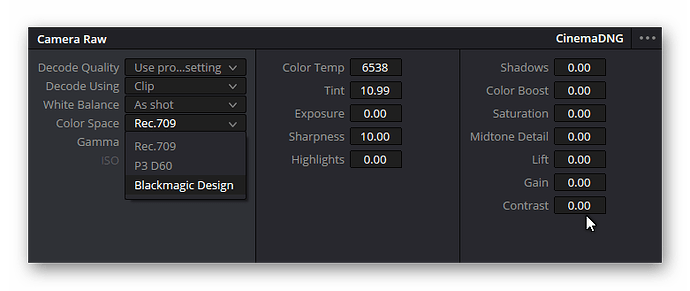

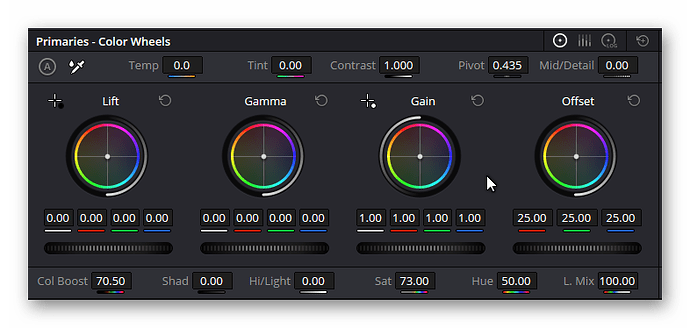

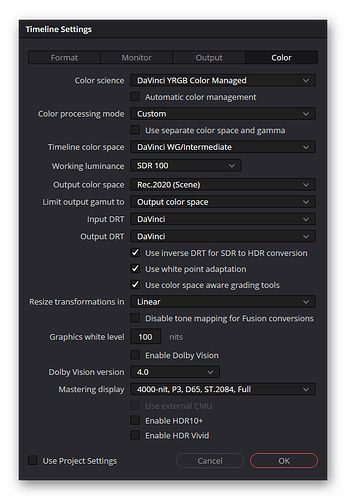

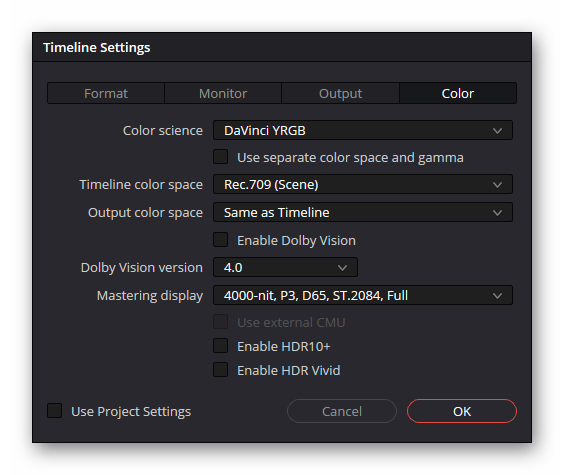

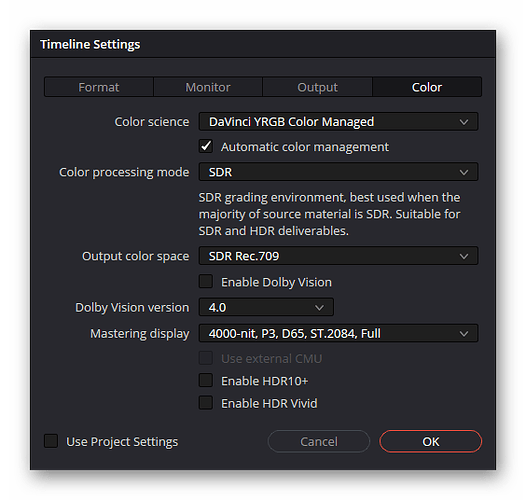

There are two ways of dealing with this: either manually tweaking the settings during raw development, ignoring the data embedded in the .dng (as @npiegdon did above), or changing the color science of the HQ sensor to another, larger color space by default. (Would that really be possible? Which primaries would be best? Would the RP5 log-like raw encoding be usable? Or another log color space?)
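To illustrate the second option: a color that needs a negative red value in rec709 can have all-positive coordinates in a wider space. The sketch below uses rec2020 and the linear rec709-to-rec2020 matrix from ITU-R BT.2087 purely as an example; it is not an answer to the question of which primaries would be best. The conversion only helps if it is applied to the unclipped floating-point data, before anything is clipped to rec709.

```python
import numpy as np

# Linear rec709 -> linear rec2020 (ITU-R BT.2087, both D65).
REC709_TO_REC2020 = np.array([
    [0.6274, 0.3293, 0.0433],
    [0.0691, 0.9195, 0.0114],
    [0.0164, 0.0880, 0.8956],
])


def rec709_to_rec2020(rgb):
    """Re-express linear rec709 RGB (..., 3) in linear rec2020 primaries."""
    return np.asarray(rgb, dtype=np.float64) @ REC709_TO_REC2020.T


# A saturated sky blue that is out of gamut in rec709 (negative red)...
sky_709 = np.array([-0.12, 0.27, 0.81])
# ...has only positive components in rec2020, so it would survive clipping there.
sky_2020 = rec709_to_rec2020(sky_709)
```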

Tweaking the processing in libcamera itself seems an interesting option to me, but I have not yet looked into it in greater detail.