@PM490 / @npiegdon: …as I remarked several times, I am still in the experimental phase on the issue which started this thread. Nevertheless, I think it’s about time to summarize my thoughts so far. The title of this thread is:

“Strange encounter in the red channel/RPI HQ Camera”

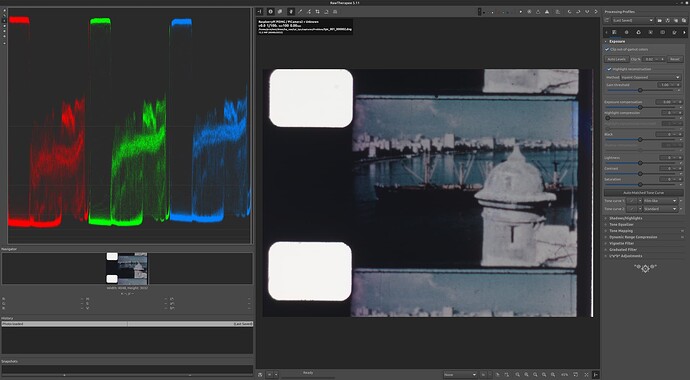

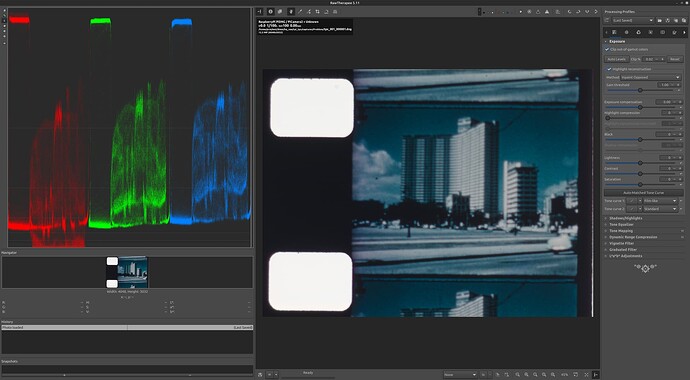

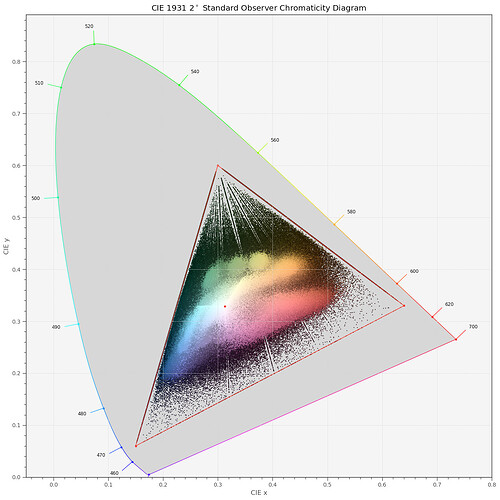

This was specifically about .dng-files, and the answer is hidden in the way these .dng-files work. The short answer: the camera sensor is able to see colors which fall outside the gamut of whatever color space you are working in. These out-of-gamut colors cause the issues discussed here.

A .dng-file contains a lot more information than just the raw intensity values recorded by the sensor. This metadata is used by raw development software to make a good guess at what colors the sensor actually saw, in an effort to come up with mostly lifelike colors when capturing a normal scene. When we capture old, faded film stock, we are operating the camera in a way which is very different from capturing normal photos. More on that later.

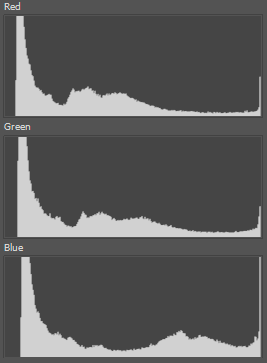

Coming back to the normal use case of a camera - capturing, for example, a market scene on a sunny morning. There are two ingredients here which define the colors we as human observers would notice: the spectrum of the light (early morning sun) and the absorption spectrum of the vegetables on the market stands. Their interaction produces yet another spectrum, which is interpreted by our visual system’s sensitivity curves as a certain color. Due to the nature of this process (complex spectra are reduced to only three independent color values “per pixel”), quite different spectra can yield identical colors. The goal of an ideal digital camera/display combination is to reproduce exactly the color impression we human observers would have when watching this scene. Of course, that’s not possible - current display technology in particular falls short of the necessary capabilities, both in terms of the available color gamut and the dynamic range. But we can get quite close, for practical purposes.
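The reduction of a full spectrum to three numbers can be illustrated with a toy calculation. This is only a sketch: the four wavelength bands and the sensitivity values below are invented so that the arithmetic works out exactly, they are not measured curves of the eye or of any sensor.

```python
# Toy sensitivity curves sampled at four wavelength bands (made-up numbers).
SENSITIVITY = {
    "red":   [0, 0, 1, 1],
    "green": [0, 1, 2, 1],
    "blue":  [2, 2, 0, 0],
}


def tristimulus(spectrum):
    """Reduce a spectrum to three numbers by weighting it with each curve."""
    return tuple(
        sum(s * p for s, p in zip(SENSITIVITY[channel], spectrum))
        for channel in ("red", "green", "blue")
    )


spectrum_a = [2, 2, 2, 2]  # flat spectrum
spectrum_b = [3, 1, 3, 1]  # clearly different, "spiky" spectrum

print(tristimulus(spectrum_a))
print(tristimulus(spectrum_b))
```

Both calls print the same triple, although the two spectra differ in every band. With real curves the same effect occurs whenever the difference between two spectra is invisible to all three channels.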

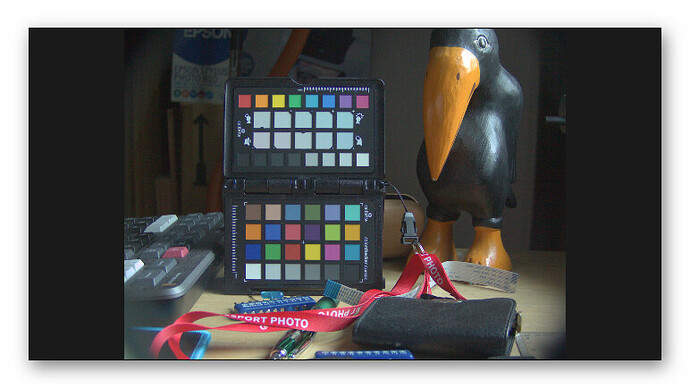

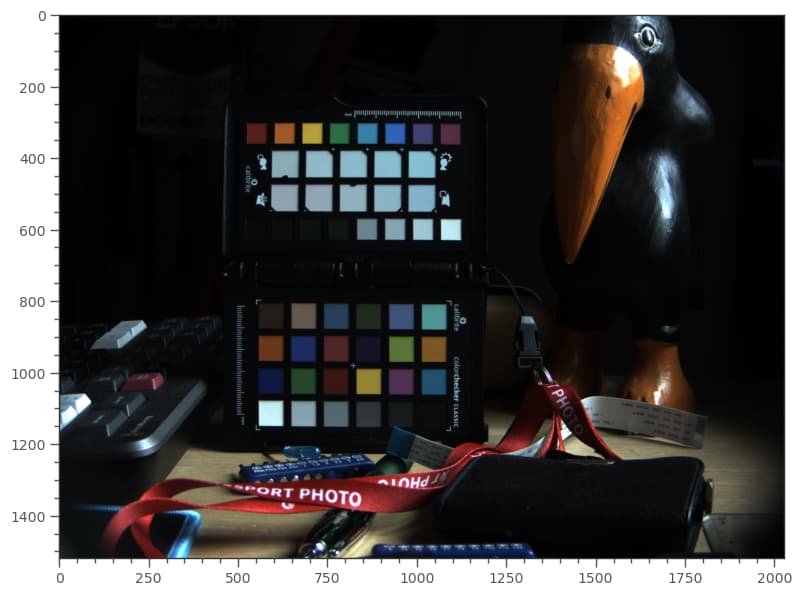

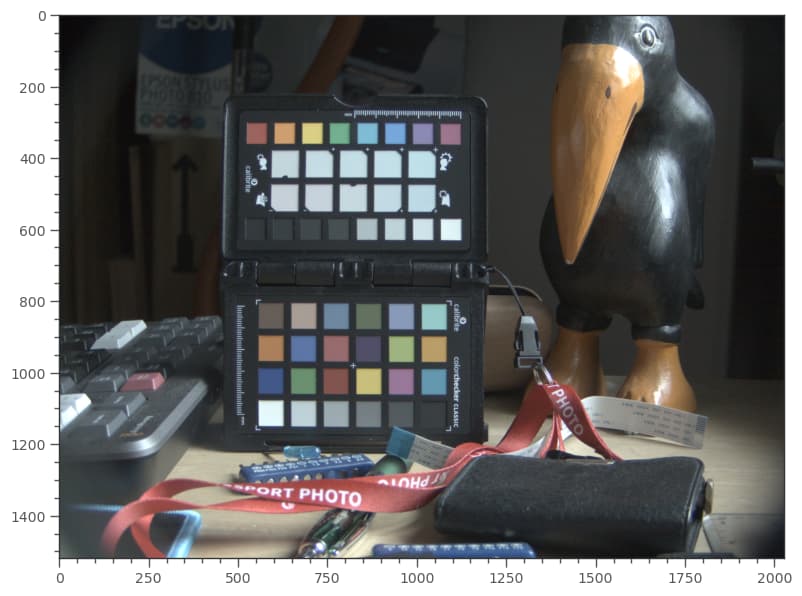

In order to achieve that goal, the camera needs to be calibrated. Simply speaking, you introduce a bunch of colors of known appearance (spectral responses), image the scene and try to come up with a way to obtain matching colors in your digital image. Typically, images like this one are used for that:

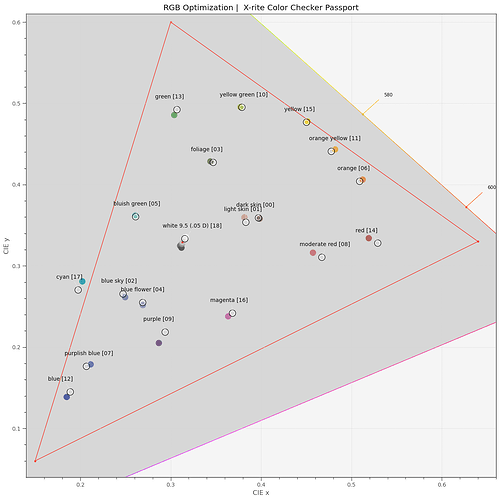

As the precise color appearance of every color patch is known, you can optimize a color matrix in such a way that all patches end up more or less where they should end up. Here’s the result of such an optimization:
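A minimal sketch of such a fit, assuming a plain linear least-squares problem in RGB. The patch values and `TRUE_MATRIX` are hypothetical, and the targets are generated from that matrix, so the fit recovers it exactly. A real calibration works with measured patches and may well minimize a different (for example perceptual) error measure, so the patches will only land near their targets.

```python
def transpose(m):
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]


def inverse3(m):
    """Inverse of a 3x3 matrix via the adjugate."""
    (a, b, c), (d, e, f), (g, h, i) = m
    det = a * (e * i - f * h) - b * (d * i - f * g) + c * (d * h - e * g)
    if abs(det) < 1e-12:
        raise ValueError("patches do not span the color space")
    adj = [
        [e * i - f * h, c * h - b * i, b * f - c * e],
        [f * g - d * i, a * i - c * g, c * d - a * f],
        [d * h - e * g, b * g - a * h, a * e - b * d],
    ]
    return [[x / det for x in row] for row in adj]


def fit_color_matrix(camera, target):
    """Least-squares 3x3 matrix M with M applied to camera[i] ~ target[i]."""
    ct = transpose(camera)             # 3 x N
    gram = matmul(ct, camera)          # C^T C
    cross = matmul(ct, target)         # C^T T
    x = matmul(inverse3(gram), cross)  # solves camera @ x ~ target
    return transpose(x)                # M is the transpose of x


# Hypothetical data: four patches as seen by the camera (one row per patch) ...
camera = [[0.8, 0.2, 0.1], [0.2, 0.7, 0.2], [0.1, 0.2, 0.9], [0.5, 0.5, 0.5]]
# ... and a made-up "true" matrix that generates the known target colors.
TRUE_MATRIX = [[1.5, -0.3, -0.2], [-0.2, 1.4, -0.2], [-0.1, -0.5, 1.6]]
target = [[sum(k * v for k, v in zip(row, patch)) for row in TRUE_MATRIX]
          for patch in camera]

fitted = fit_color_matrix(camera, target)
for row in fitted:
    print([round(v, 3) for v in row])
```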

The dark circles indicate, in an abstract color space, how the colors of the color checker should look, and the filled colored circles indicate where they actually ended up. Not too bad, in this case.

The red triangle in the plot above indicates all the colors an sRGB/rec709 display can faithfully reproduce - note that the “cyan [17]” patch actually is outside the rec709 color gamut!

Now, this color matrix is valid only for the specific illumination spectrum with which the image was taken. For perfect colors, you would use another color matrix in the evening than in the morning, for example. This is where, in our use case, the libcamera tuning file comes into play. The tuning files usually contain a full set of different color matrices, indexed mainly by the color temperature of the illumination source. If it’s candle light, a different matrix will be used than for plain daylight. The important point here is that the color matrix libcamera/picamera2 comes up with at the time of capture is one of the important metadata entries in our dng-file. And it’s going to be used in your raw development software!

That is, by the way, the reason why you want to make sure to use the correct tuning file for your purposes even when capturing in dng. The color matrix is selected from the matrices embedded in the tuning file on the basis of your red and blue color gains (or, if you are working with auto white balance, on the basis of your image content).
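How a matrix might be picked from such a set can be sketched as a lookup indexed by color temperature. Everything here is an assumption made for illustration: the table `CCMS` and its numbers are invented rather than copied from a real tuning file, and the linear blend between the two nearest entries is just one plausible scheme, not a description of what libcamera does internally.

```python
# Hypothetical table: color temperature (K) -> 3x3 matrix, flattened row-major.
# The numbers are invented for illustration, not taken from a real tuning file.
CCMS = [
    (3000, [1.8, -0.6, -0.2, -0.4, 1.7, -0.3, -0.1, -0.9, 2.0]),
    (6000, [1.6, -0.5, -0.1, -0.3, 1.6, -0.3, 0.0, -0.6, 1.6]),
]


def matrix_for(ct, table=CCMS):
    """Return a matrix for color temperature `ct`, blending nearest entries."""
    table = sorted(table, key=lambda entry: entry[0])
    if ct <= table[0][0]:          # below the calibrated range: clamp
        return list(table[0][1])
    if ct >= table[-1][0]:         # above the calibrated range: clamp
        return list(table[-1][1])
    for (ct_lo, m_lo), (ct_hi, m_hi) in zip(table, table[1:]):
        if ct_lo <= ct <= ct_hi:
            w = (ct - ct_lo) / (ct_hi - ct_lo)
            return [(1 - w) * a + w * b for a, b in zip(m_lo, m_hi)]


print(matrix_for(4500))
```

Whatever the exact selection logic, the matrix that ends up in the dng depends on the estimated color temperature, and therefore on the white balance settings at capture time.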

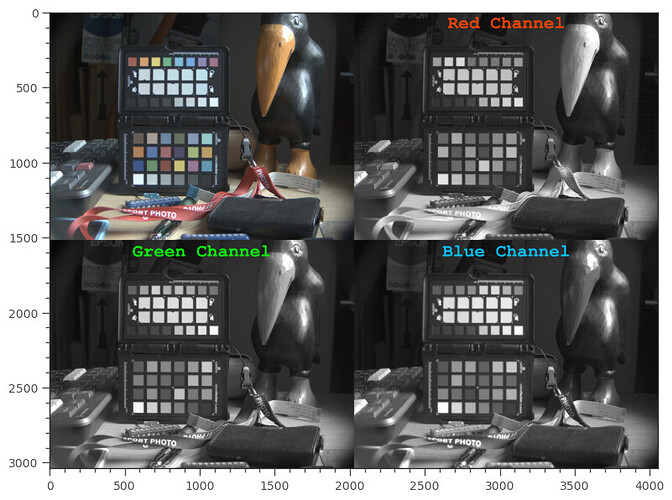

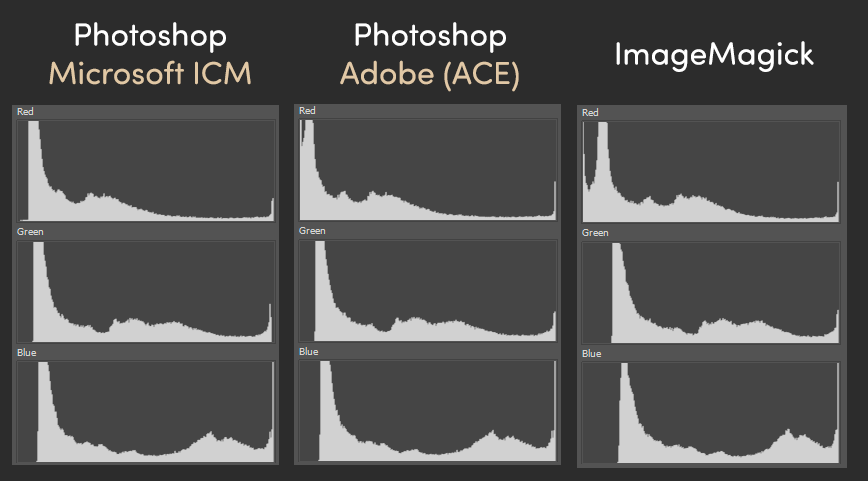

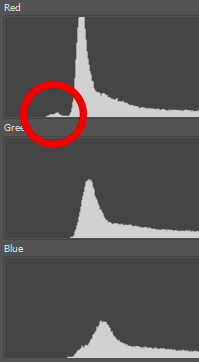

The color matrices in the tuning file are the result of the optimization sketched above - and the color matrix embedded as metadata in your dng is derived from these matrices. Most notably for our discussion, they mix the camera’s original color channels in additive, but also in subtractive ways. So extremely saturated original colors (colors outside of the color gamut you are working in) will end up with RGB components which are negative (less than zero) or oversaturated (larger than one). This causes the “Strange encounter…” issue this thread started with.
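The effect of the subtractive terms is easy to reproduce with a small calculation. The matrix `CCM` below is a made-up example with the typical structure (positive diagonal, negative off-diagonal entries, rows summing to one), not the matrix of any real camera.

```python
# Hypothetical color matrix: positive diagonal, negative off-diagonal terms,
# each row sums to 1.0 so that neutral grays stay neutral.
CCM = [
    [1.8, -0.6, -0.2],
    [-0.4, 1.7, -0.3],
    [-0.1, -0.9, 2.0],
]


def apply_ccm(rgb, ccm=CCM):
    """Mix the camera channels with the 3x3 matrix."""
    return [sum(k * v for k, v in zip(row, rgb)) for row in ccm]


def out_of_gamut(rgb):
    """True if any component is negative or larger than one."""
    return any(v < 0.0 or v > 1.0 for v in rgb)


neutral = [0.5, 0.5, 0.5]          # mid gray in camera RGB
saturated_red = [0.9, 0.05, 0.05]  # strongly saturated camera color

for name, rgb in (("neutral", neutral), ("saturated red", saturated_red)):
    result = apply_ccm(rgb)
    print(name, [round(v, 3) for v in result], "out of gamut:", out_of_gamut(result))
```

The gray patch passes through essentially unchanged, while the saturated red comes out with a red component above one and negative green and blue components.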

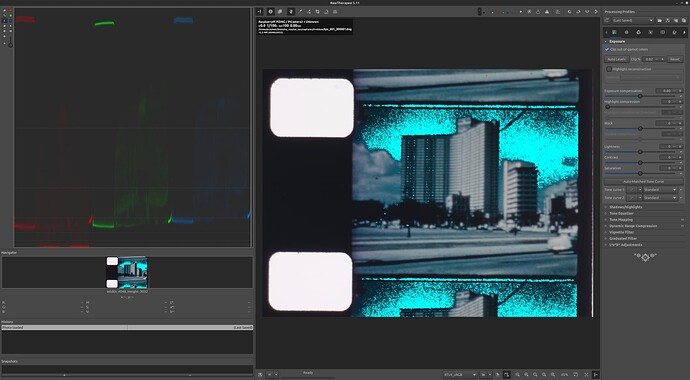

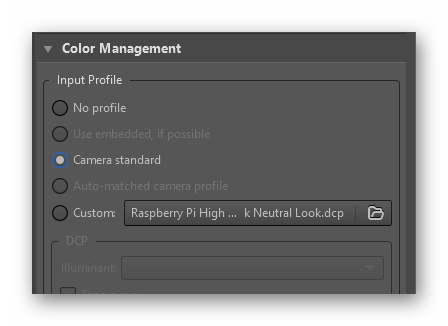

One obvious way to deal with this is not to work with this embedded matrix. How to do this depends on your software; within RawTherapee, it’s this section

“Camera standard” means using the color matrix which is embedded in the dng. @PM490 - what you are doing with your .tif-based approach is probably equivalent to selecting “No profile” here when using dngs.

Now, after this rather technical detour, let us discuss this in a little more detail. The color matrices in the scientific tuning file have been optimized based on various daylight illuminations with varying color temperature. They should give you nice colors when taking outside images under normal daylight conditions. However, for starters, not a single one of our scanners works with such a daylight spectrum. Granted, a nice whitelight LED with a high CRI will get pretty close to it. (Not so sure about the combination of three narrowband LEDs (red/green/blue) in this respect…)

So, with a good film stock with negligible fading (Kodachrome), we might actually get rather close to the actual colors of the scene recorded decades ago. But that is probably not the standard case we are dealing with when digitizing old footage (various reasons have been discussed at the end of this post).

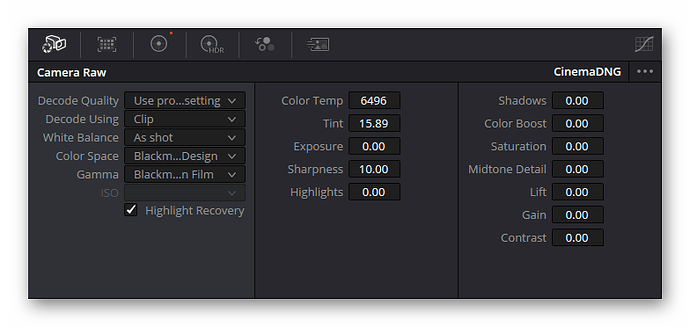

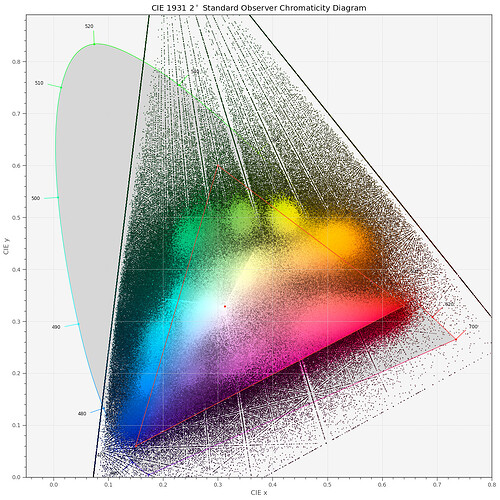

In my experience, I usually end up color grading each and every scene of a given footage according to my taste, trying to equalize colors at least across scenes that belong together. With the kind of faded scenes @PM490 displayed above, there is not even a slight chance of getting the colors right - you can only improve the overall appearance in such a way that the result looks ok. One way is certainly the one Pablo (@PM490) described above with tif-files. In DaVinci, one might get close to the same result by setting up the raw input like so:

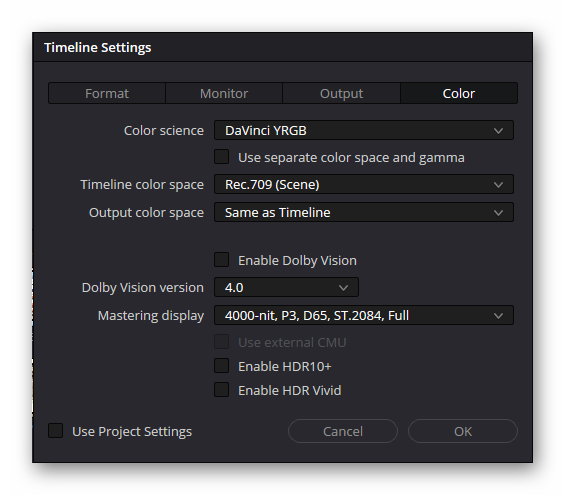

which is only possible in unmanaged color science - so your timeline should be set up with something like

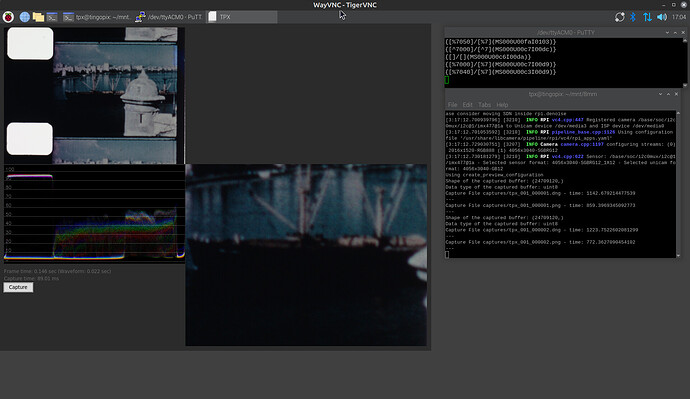

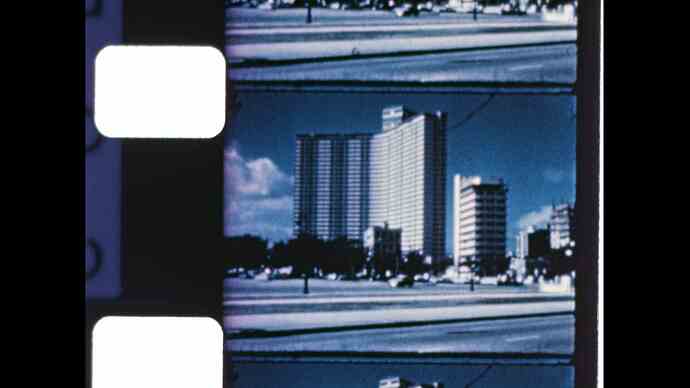

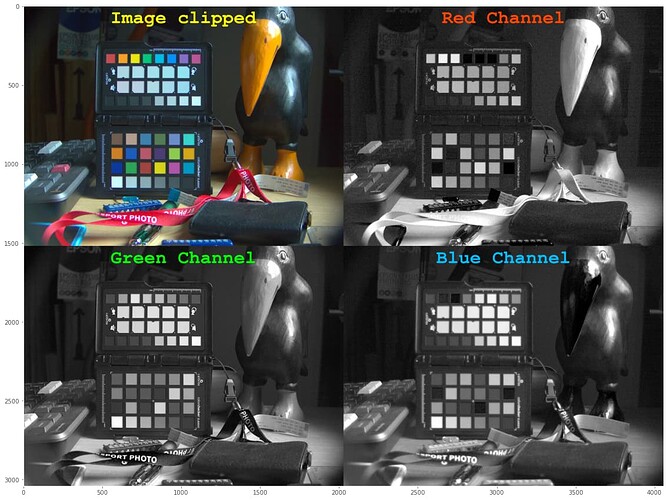

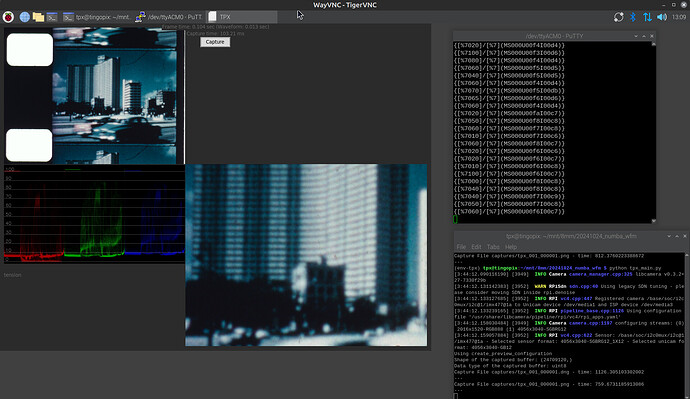

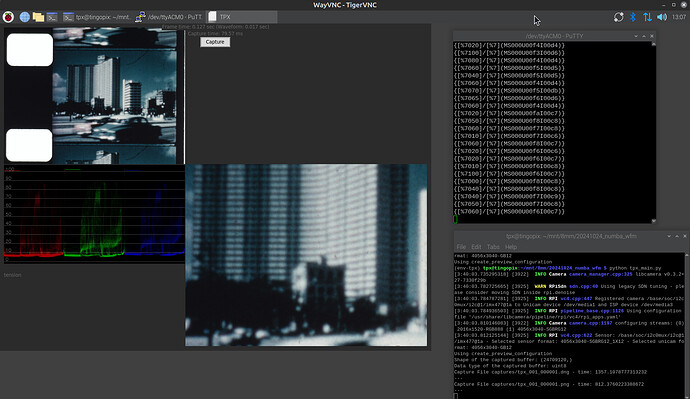

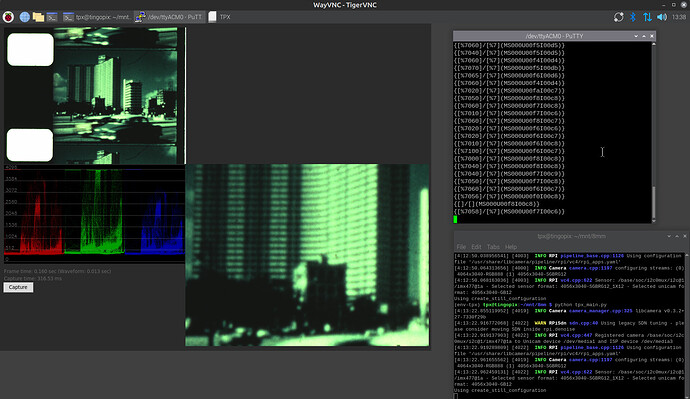

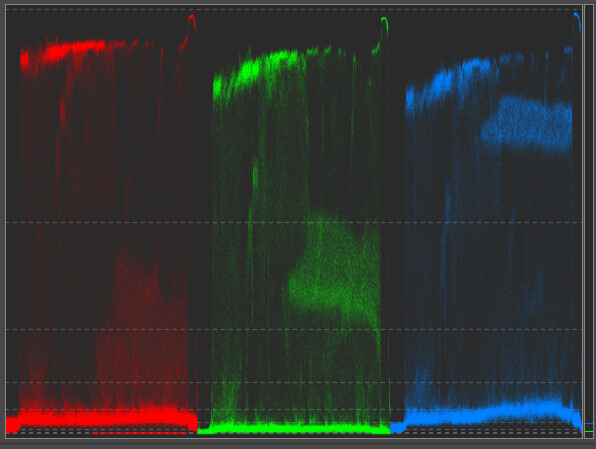

In doing so, I get initially the following data:

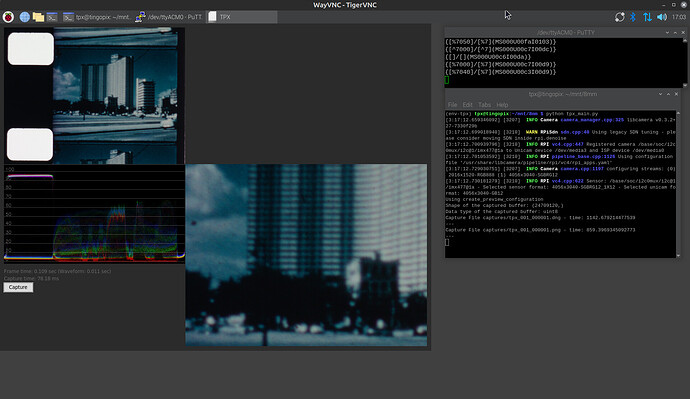

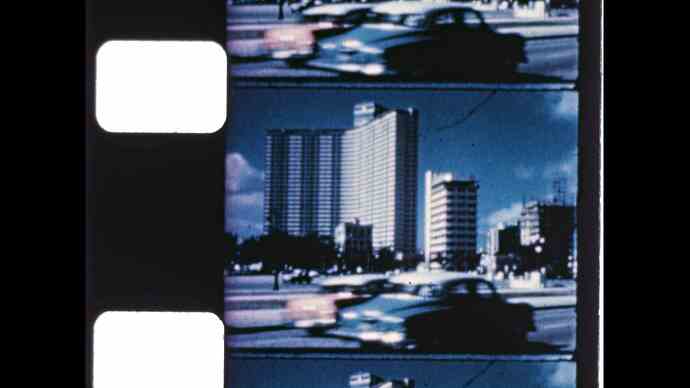

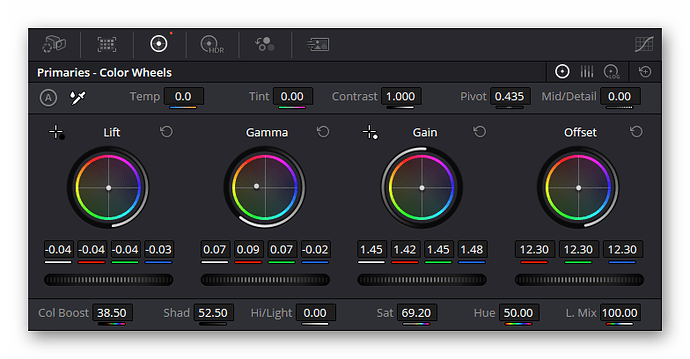

A quick node adjustment results in the following:

Here are the settings I came up with:

Of course, there’s no color science involved here at all - only our own visual system (and personal taste)…

(Note: I do not usually work like this. I use a whitelight LED with a sufficient CRI and my film stock usually shows no fading, so I simply load the dng-files into DaVinci using the embedded camera matrix for a rec709 transformation. That works ok most of the time and already gets me very close to a final grade.)

Finally, let me comment on the way to set up the camera’s exposure and/or the light source for such severely faded material. If you have separate red/green/blue LEDs (a setup which I used previously, but have since moved away from), you can and should use this facility to set up the illumination in such a way that all your color signals use the maximum dynamic range possible. Colors in the capture will look weird, but you should be able to recover that in post, at least when using DaVinci (the internal processing of DaVinci is based on 32-bit floats).

Try to set your exposure as well as you can. For example, the dng of yours I used above features a maximum intensity value of 3859 in the green1-channel. It could easily be ramped up to 4095 - otherwise, you are giving up a tiny piece of dynamic range in your capture. As this maximum value occurs in the sprocket hole - and nobody is interested in this part of your capture - you could ramp up your exposure even more. The goal would be to place burned-out parts of the original image just below the 4095 limit. This will give you a few more bits where you need them, in the dark shadow areas, but will of course also burn out the sprocket area. Which, again, nobody should be interested in.
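To put a number on the unused headroom, the ratio between the 4095 limit and the observed maximum can be converted into stops. This is a rough sketch that assumes a linear sensor response and ignores the black level offset; the function names are made up for this example.

```python
import math

FULL_SCALE = 4095  # upper limit of the raw values mentioned above


def exposure_scale(max_value, full_scale=FULL_SCALE):
    """Factor by which exposure could be raised before clipping."""
    return full_scale / max_value


def headroom_stops(max_value, full_scale=FULL_SCALE):
    """The same headroom expressed in photographic stops (factors of two)."""
    return math.log2(exposure_scale(max_value, full_scale))


observed_max = 3859  # maximum found in the green1 channel of the example dng
print(f"scale exposure by {exposure_scale(observed_max):.3f}")
print(f"unused headroom: {headroom_stops(observed_max):.2f} stops")
```

For the value of 3859 this comes to roughly 6 % more exposure, or a bit under a tenth of a stop. The larger gain comes from letting the sprocket hole clip and measuring the maximum inside the actual film frame instead.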