That is kind of interesting, considering that you replaced the stock IR-blockfilter on your system. Accordingly, you are working with color gains redGain = 1.62 and blueGain = 1.9. While the blueGain is similar to what @verlakasalt and I are operating with, your redGain is nearly half of the values we are using. That’s similar to my old setup, where I had replaced the IR-blockfilter as well. However, as mentioned earlier, I think the color science gets screwed up by this.

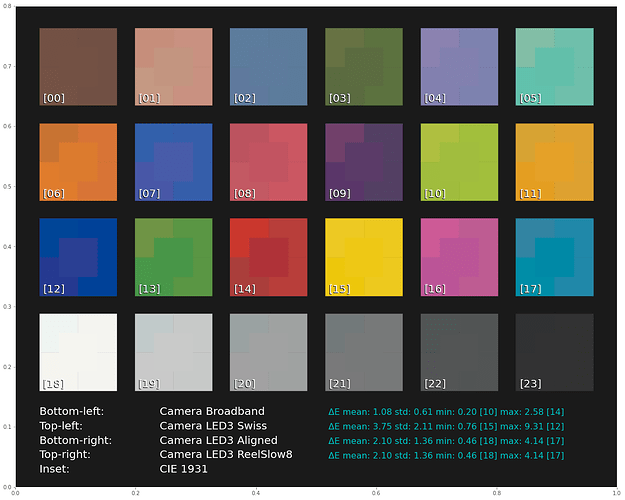

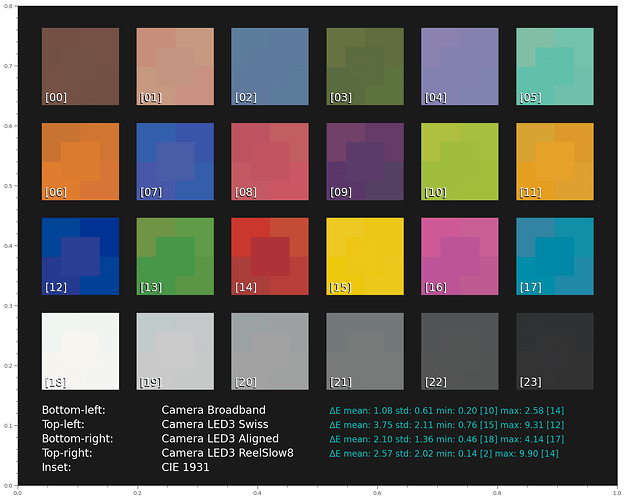

In fact, I think at some point it would be worthwhile to analyze this in detail, especially if we want to optimize for the case of severely under-exposed or badly faded film stock. But: that is a lot of work. One of the first things to check would be how well the combination of light source and modified IR-blockfilter performs with respect to mapping the raw RGB values of the sensor into the XYZ space describing human vision. The original IR-blockfilter performs quite well here. That is, it recreates the human eye’s sensitivity curves rather well, so the color metamerism of our camera is quite similar to the metamerism we experience. This might not be the case with the changed IR-blockfilter.

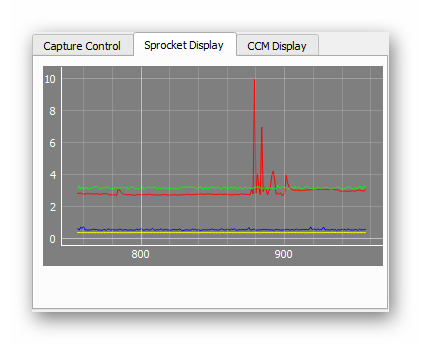

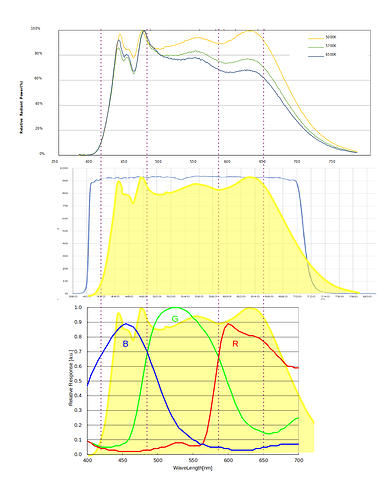

The light source also plays a role here. A whitelight LED with a high CRI is probably close enough to the spectrum assumed during camera calibration (D65, if I remember correctly), and such a light source samples the spectrum of the film dyes broadly enough that everything should work out well. I am not so sure about this if we switch to sampling the spectra only at the three rather discrete, narrowband positions of an illumination created by separate red, green and blue LEDs. I used such a setup at the time I was still using v1 or v2 RP sensors, and back then it was not possible to capture raw data. So at the moment, I have nothing to experiment with. And rebuilding my RGB-LED setup (and/or replacing the IR-blockfilter) would require quite some effort. Too much work for me at the moment - I am currently looking into ways to detect tiny movements which happen during capture. There exists a perfect solution by @npiegdon, and I even have the hardware for building one, but I want to see whether there are alternative approaches.

Reflecting again upon the noise stripes - I think they are caused by too high gains in the red and blue color channels. Under certain circumstances, they are present in all color channels, even the green one. They are also noticeable in normal imagery taken by the HQ sensor, and they are content related. It seems to me that we are “seeing” here the workings of an internal noise optimization in the HQ sensor’s imaging chip, the IMX477. In my approach, namely degraining as much as possible in order to recover image information which is normally covered by the excessive film grain of S8-material, and even retiming the 18/24 fps footage of S8 to 30 fps, the noise stripes are gone. Barely, but sufficiently gone. They are no issue at all within the context of the film footage I am processing.

If the goal is to keep as much of the film grain visible as possible, one could try to optimize the light source in order to lower the necessary red gain, maybe by using a light source with a lower color temperature (more Tungsten-like) - this should be handled well by the scientific tuning file. Or resort to capturing multiple exposures, either with different exposure settings for highlights and shadows (@dgalland’s way) or with the same exposure speed (@npiegdon’s way), and combine them appropriately. Or optimize the exposure times/light sources to take full advantage of the HQ sensor’s dynamic range (@PM490’s way). For very heavily faded material, the idea of developing a suitable color science is certainly out of the question anyway.
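To illustrate why combining several exposures taken with the same exposure speed can help, here is a minimal Python sketch. It assumes purely random, Gaussian noise that is independent from frame to frame, which is an idealization: the noise stripes seem to be content related, so real captures may gain less than this suggests. The function names and the numbers (signal level 100, sigma 8, 4 frames) are made up for illustration and are not taken from anyone's actual capture software.

```python
import random
import statistics


def simulate_frame(signal, noise_sigma, n_pixels, rng):
    """Return one synthetic 'frame': a flat patch plus Gaussian noise (assumed model)."""
    return [signal + rng.gauss(0.0, noise_sigma) for _ in range(n_pixels)]


def average_frames(frames):
    """Average several frames pixel by pixel."""
    n = len(frames)
    return [sum(pixels) / n for pixels in zip(*frames)]


rng = random.Random(0)  # fixed seed so the run is repeatable
frames = [simulate_frame(100.0, 8.0, 10_000, rng) for _ in range(4)]

single_sigma = statistics.pstdev(frames[0])
stacked_sigma = statistics.pstdev(average_frames(frames))

print(f"noise of a single frame: {single_sigma:.2f}")
print(f"noise after averaging 4: {stacked_sigma:.2f}")
print(f"improvement factor:      {single_sigma / stacked_sigma:.2f}")
```

Under these assumptions the noise drops by roughly the square root of the number of frames, so four exposures give about a factor of two.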

Yep, that could be one way to “improve” things: fiddling around with the black level. In essence, this would put the noise back into the dark. However, that is difficult to do directly, for example in DaVinci.
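As a rough sketch of what raising the black level does numerically, consider the following Python snippet. It assumes linear pixel values normalised to the range 0..1. The function name `raise_black_point` and the threshold of 0.02 are hypothetical choices for illustration, and this is not a description of how DaVinci handles it internally.

```python
def raise_black_point(values, black, white=1.0):
    """Map `black` to 0 and `white` to 1, clipping everything outside that range.

    Values at or below `black` (where the noise stripes sit, by assumption)
    end up at exactly 0, i.e. they are pushed back into the dark.
    """
    if not black < white:
        raise ValueError("black must be smaller than white")
    scale = white - black
    return [min(max((v - black) / scale, 0.0), 1.0) for v in values]


# Hypothetical shadow values with a little noise, plus a mid-tone and a highlight.
pixels = [0.0, 0.01, 0.02, 0.51, 1.0]
print(raise_black_point(pixels, black=0.02))
```

The price is that any real shadow detail below the chosen black point is clipped together with the noise.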

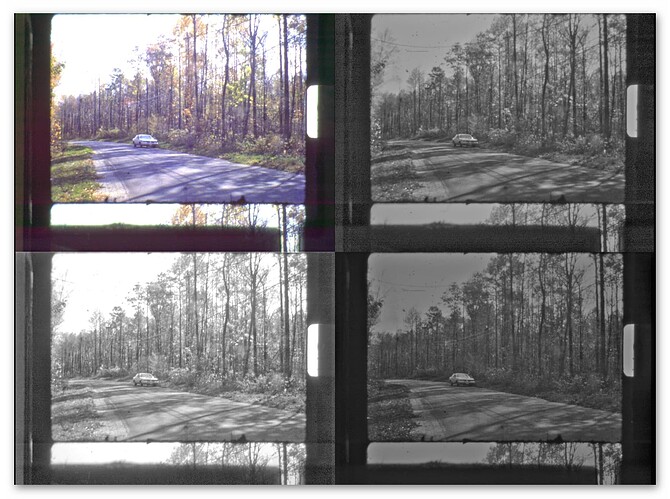

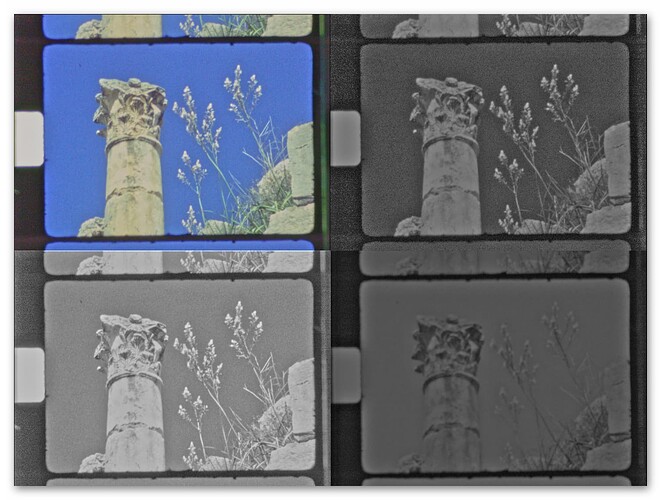

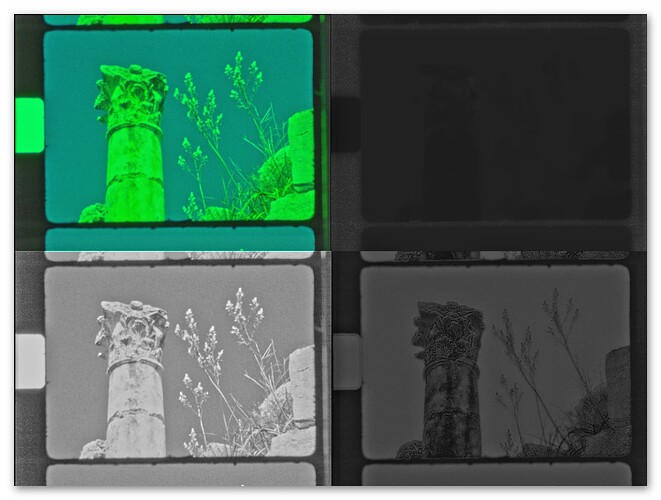

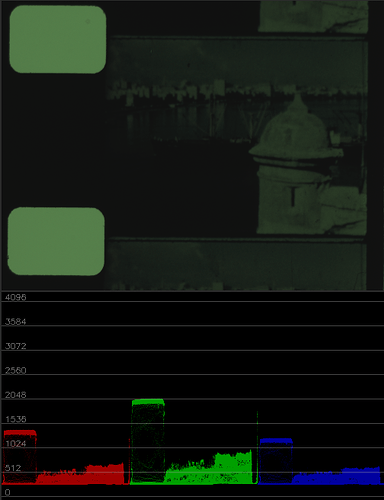

Update: I had a look at a few .dngs posted here. Comparing @verlakasalt’s “cob led”

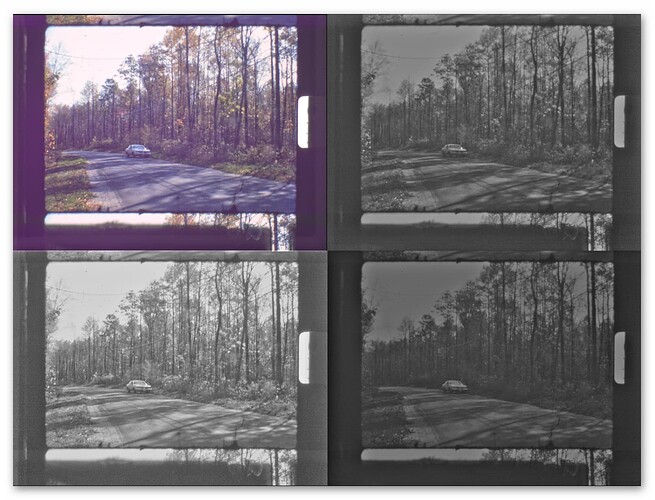

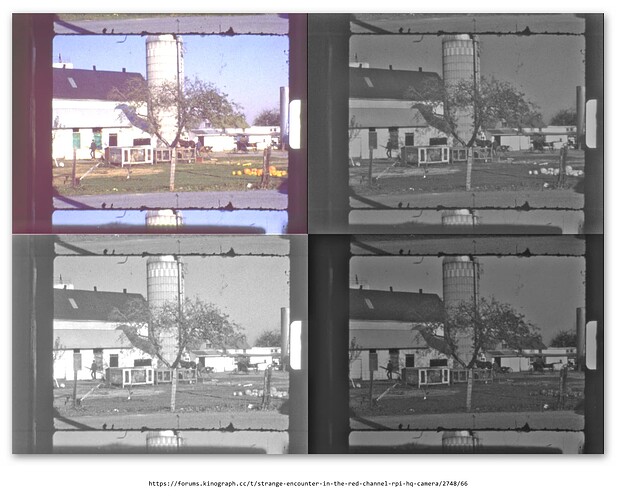

with his “lepro led” (top left: RGB image, top right: red channel, bot left: green channel, bot right: blue channel):

one can notice that the latter shows fewer of the noise stripes. Now, for both images the quoted exposure time is the same, namely 1/603. But the whitebalance coefficients are different:

cob led - 0.3203177552 1 0.6680472977

lepro led - 0.4945598417 1 0.3575387036

which translates into the following color gains at the time of capture (red/green/blue gains):

cob led - 3.12 1.0 1.5

lepro led - 2.02 1.0 2.8
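The conversion from whitebalance coefficients to color gains can be sketched in a few lines of Python. This assumes the coefficients are stored the way the numbers above imply, namely as reciprocals of the gains, normalised so that green is 1.0. The function name `gains_from_wb_coefficients` is made up for this example.

```python
def gains_from_wb_coefficients(coefficients):
    """Convert (R, G, B) whitebalance coefficients into (R, G, B) color gains.

    Assumption: each gain is the reciprocal of its coefficient,
    normalised so that the green gain is 1.0.
    """
    r, g, b = coefficients
    return (g / r, 1.0, g / b)


cob_led = gains_from_wb_coefficients((0.3203177552, 1.0, 0.6680472977))
lepro_led = gains_from_wb_coefficients((0.4945598417, 1.0, 0.3575387036))

for name, gains in (("cob led", cob_led), ("lepro led", lepro_led)):
    print(name, "-", " ".join(f"{g:.2f}" for g in gains))
```

Run on the two sets of coefficients, this gives a red gain of about 3.12 for the cob LED and about 2.02 for the lepro LED, and blue gains of about 1.50 and 2.80 respectively.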

So the red color gain of the lepro LED is lower than the one used with the cob LED, and it seems that the noise stripes are less pronounced. This is a tiny hint that an appropriate light source with sufficient power in the red part of the spectrum could help in obtaining imagery with fewer noise stripes. Of course, one would really need to look into this in a more systematic way to arrive at a real solution. Now the question is: where do I get 10x10 mm LEDs with a good approximation of a Tungsten spectrum?