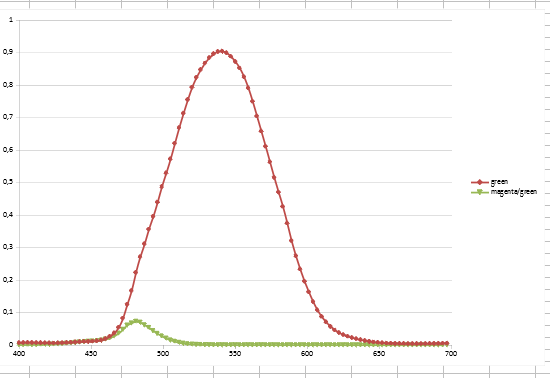

@johnarthurkelly: well, that was long ago (2018) and did not result in anything usable. What I did was basically the following: I searched the internet for characteristic curves of movie film stock and of the camera I was using at the time (a Raspberry Pi v1 camera). I then digitized these curves from 400 nm to 700 nm and combined them. For a specific LED, this allowed me to calculate the response in the intended color channel as well as the cross-talk into the other channels. Here’s an example:

The red graph is the expected main response; the green line is a slight cross-talk. The idea was to maximize the difference between the color channels in order to capture most of the colors. However, the set of LEDs I ended up with did not perform well. Specifically, as far as I can remember, the following issues arose:

- The chosen LEDs had quite different efficiencies. I only had access to relative intensity graphs, and there was no easy way for me to correct for that.

- The red LED turned out to be too close to the IR edge, actually enhancing a fixed dark red pattern which might have been caused by uneven coverage of the daylight filter in the Super-8 camera. This was only visible in my scans of a test movie I bought via eBay, not during normal projection of that movie. However, it prompted me to abandon that approach. I no longer have a good example available, but here you can notice a dark red glow in the lower right corner of the frame, in the shadows. The pattern is fixed to the film frame and has a shape suggesting a filter that is too small.

- I discovered that the cross-talk of the Raspberry Pi v1 camera chip, combined with my Schneider 50 mm lens, is much more noticeable than the cross-talk I was trying to minimize with my transfer calculations anyway…

So in the end, I simply bought a bunch of LEDs and swapped them around until I got something which worked…
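The transfer calculation described at the start of this post can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not the original 2018 code: the Gaussian curves are hypothetical stand-ins for the digitized film and sensor curves, and the names `gaussian`, `integrate`, `channel_responses` and `crosstalk` are made up for this example. Each channel's response is taken as the integral over 400-700 nm of LED spectrum × film transmission × channel sensitivity, and cross-talk is expressed as a ratio to the main channel.

```python
import numpy as np

# Wavelength grid for the 400-700 nm range used in the post, in 1 nm steps.
WAVELENGTHS = np.arange(400.0, 701.0, 1.0)


def gaussian(center_nm, fwhm_nm, wl=WAVELENGTHS):
    """Hypothetical bell-shaped curve standing in for a digitized spectral curve."""
    sigma = fwhm_nm / (2.0 * np.sqrt(2.0 * np.log(2.0)))
    return np.exp(-0.5 * ((wl - center_nm) / sigma) ** 2)


def integrate(y, wl=WAVELENGTHS):
    """Trapezoidal integral of y over the wavelength grid."""
    return float(np.sum((y[1:] + y[:-1]) * np.diff(wl)) / 2.0)


def channel_responses(led, film, sensor, wl=WAVELENGTHS):
    """Response of each sensor channel to one LED shining through the film."""
    light = led * film
    return {name: integrate(light * curve, wl) for name, curve in sensor.items()}


def crosstalk(responses, main_channel):
    """Response of every other channel relative to the main channel."""
    main = responses[main_channel]
    return {name: value / main for name, value in responses.items() if name != main_channel}


# Example with made-up curves: a red LED at 630 nm seen through clear film.
sensor = {"red": gaussian(600, 100), "green": gaussian(540, 100), "blue": gaussian(460, 100)}
clear_film = np.ones_like(WAVELENGTHS)
responses = channel_responses(gaussian(630, 20), clear_film, sensor)
main = max(responses, key=responses.get)
ratios = crosstalk(responses, main)
print(main, {name: round(r, 3) for name, r in ratios.items()})
```

Because the cross-talk ratios are normalized to the main channel, they do not depend on the absolute brightness of the LED. That is why relative intensity graphs are enough for this part of the calculation, but not for balancing LEDs of different efficiencies against each other.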

Ok. Now for the camera/lens setup. Initially, I started with a Raspberry Pi v1 camera. I was aware that there is a slight color shift introduced by the mismatch of the microlens array of the sensor and the lens I was going to use: a Schneider Componon-S 50 mm.

But I developed a technique to compensate for that: basically taking several exposures, calculating an HDR image from them, and then compensating in HDR space. However, that was a slow process.
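The HDR-space compensation could look roughly like the following sketch. The post does not describe the exact procedure, so this is an assumption: the exposures are merged into a relative radiance image by averaging value / exposure time over well-exposed pixels, and the color shift is then removed by dividing, per channel, by a flat-field radiance map captured the same way through the empty film gate. `merge_exposures` and `compensate` are hypothetical names, and the frames are assumed to be linear (no gamma) floats in [0, 1].

```python
import numpy as np


def merge_exposures(frames, exposure_times, low=0.05, high=0.95):
    """Merge linear frames (floats in [0, 1]) into a relative radiance image.

    Each pixel becomes the average of value / exposure_time over all frames in
    which it is neither too dark (noisy) nor too bright (clipped). Pixels that
    are never well exposed end up as 0.
    """
    num = np.zeros(np.shape(frames[0]), dtype=np.float64)
    den = np.zeros_like(num)
    for frame, t in zip(frames, exposure_times):
        f = np.asarray(frame, dtype=np.float64)
        valid = (f > low) & (f < high)
        num += np.where(valid, f / t, 0.0)
        den += valid
    return num / np.maximum(den, 1e-12)


def compensate(radiance, flat_radiance):
    """Flat-field correction in radiance space, separately per color channel.

    The gain map is normalized so that each channel keeps its mean level; only
    the spatial variation (vignetting, color shading) is removed.
    """
    flat = np.maximum(np.asarray(flat_radiance, dtype=np.float64), 1e-12)
    gain = flat.mean(axis=(0, 1), keepdims=True) / flat
    return radiance * gain
```

Doing the division in radiance space rather than on the individual 8-bit exposures avoids amplifying clipped or quantized values, at the cost of capturing several frames per film frame, which matches the slowness mentioned above.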

When the v2 version of the Raspberry Pi camera came out, I immediately tried switching to it, only to find out that the mismatch between the microlens array and a long focal length lens (compared to the standard lenses these cheap sensors are designed for) is even worse. However, at that time the Raspberry Pi camera software introduced the possibility of a lens shading table. I implemented that in my software, using a modified picamera library, and got reasonable results with the v1 camera sensor. It was much faster than my HDR-space based compensation.
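A lens shading table is, in essence, a coarse grid of per-channel gains measured from a flat-field frame (for example, the empty, evenly lit film gate). The sketch below shows the idea only. The 32-pixel cell size, the float gains and the nearest-cell lookup are simplifying assumptions; they do not reproduce the actual table format of the Raspberry Pi firmware or of the modified picamera library, and real ISPs typically interpolate between grid points. The function names are hypothetical.

```python
import numpy as np


def shading_table(flat, cell=32):
    """Coarse per-channel gain grid from a linear flat-field frame (H x W x C)."""
    h, w, c = flat.shape
    rows, cols = -(-h // cell), -(-w // cell)  # ceiling division
    means = np.empty((rows, cols, c))
    for r in range(rows):
        for q in range(cols):
            block = flat[r * cell:(r + 1) * cell, q * cell:(q + 1) * cell]
            means[r, q] = block.reshape(-1, c).mean(axis=0)
    # The brightest cell of each channel gets gain 1.0; shaded cells get > 1.0.
    return means.max(axis=(0, 1), keepdims=True) / np.maximum(means, 1e-12)


def apply_table(image, table, cell=32):
    """Multiply every pixel by the gain of the grid cell it falls into."""
    h, w, _ = image.shape
    gains = np.repeat(np.repeat(table, cell, axis=0), cell, axis=1)[:h, :w]
    return image * gains
```

Since the table is measured once and then applied as a simple per-pixel multiplication (in the Pi's case, inside the camera pipeline), this is much cheaper per frame than the multi-exposure HDR approach.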

With the newer v2 camera sensor, however, the results remained disappointing. I could compensate for the color shifts, but the cross-talk resulted in a noticeable loss of saturation towards the edges of the image frame. So I reverted to the v1 sensor chip.

I finally abandoned the Raspberry Pi setup altogether because I discovered that certain colors were not reproduced well enough: brown tones in particular still tended to be saturated in the center of the frame but desaturated at the edges.
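To put a number on this kind of edge desaturation, one could measure the mean saturation in concentric rings around the frame center on a capture of an evenly colored patch. This is a hypothetical diagnostic, not something from the original setup; it uses the HSV-style saturation (max - min) / max on linear RGB values, and the function name and ring count are made up for illustration.

```python
import numpy as np


def saturation_vs_radius(image, n_bins=5):
    """Mean HSV-style saturation in rings, from the center (bin 0) to the corners."""
    img = np.asarray(image, dtype=np.float64)
    h, w, _ = img.shape
    mx = img.max(axis=2)
    mn = img.min(axis=2)
    sat = np.where(mx > 0, (mx - mn) / np.maximum(mx, 1e-12), 0.0)
    # Normalized distance from the frame center: 0 at the center, 1 at the corners.
    yy, xx = np.mgrid[0:h, 0:w]
    r = np.hypot(yy - (h - 1) / 2.0, xx - (w - 1) / 2.0)
    r /= r.max()
    bins = np.minimum((r * n_bins).astype(int), n_bins - 1)
    return np.array([sat[bins == b].mean() for b in range(n_bins)])
```

For a well-corrected setup the returned profile should be roughly flat; a profile that drops towards the last bins reflects the center-saturated, edge-desaturated behavior described above.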

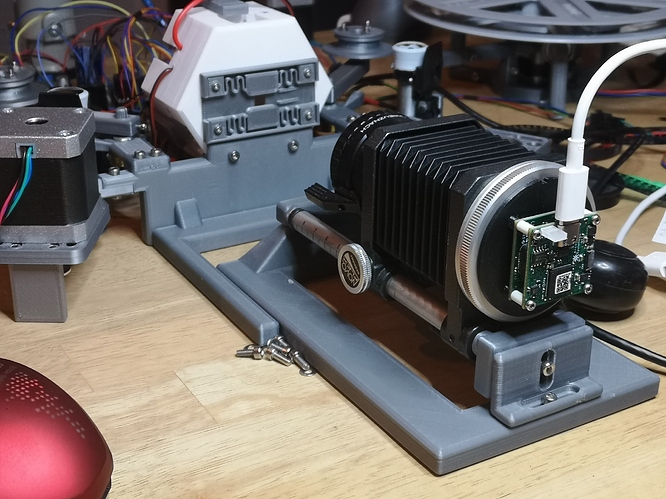

My current setup now consists of a See3CAM_CU135 camera, mounted onto a Novoflex bellows with a custom 3D-printed attachment. The Schneider lens is mounted on the other end of the bellows, fixed at f/5.6; supposedly, this is the f-stop that gives the sharpest image. The whole assembly currently sits on a freely movable platform which is moved as a single unit to bring the frame into sharp focus. There is certainly room for improvement here.
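Why a middle aperture such as f/5.6 tends to be the sharpest can be seen from the diffraction limit: stopping down reduces lens aberrations, but diffraction blur grows with the f-number. A common estimate for the Airy disk diameter (to the first dark ring) is given below; the effective f-number at magnification m is approximated assuming a pupil magnification of about 1, which is an assumption for this lens:

$$
d \approx 2.44\,\lambda\,N_\mathrm{eff}, \qquad N_\mathrm{eff} \approx N\,(1 + m)
$$

For λ = 550 nm and N = 5.6, this gives d ≈ 7.5 µm on the sensor side near infinity focus (m ≈ 0), and d ≈ 15 µm at 1:1 (m = 1, N_eff = 11.2). On a bellows, the diffraction blur is therefore larger than the marked f-stop alone suggests.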

Here’s an image for illustration:

In the background, you can see the film gate attached to an integrating sphere, which I described in detail above. A note about the See3CAM_CU135 camera: if you consider this camera, it is best to use a Linux-based host computer and v4l2. At least, I was not able to interface the camera reliably enough with Win7 or Win10 systems to use it. Example captures can be seen in my post in this thread: HDR / Exposure Bracketing
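On Linux, a single frame can be grabbed from a V4L2 device such as this camera with the `v4l2-ctl` tool from v4l-utils. The sketch below only assembles and runs such a command from Python. The device path, resolution and `UYVY` pixel format are assumptions and should be checked against the output of `v4l2-ctl --list-formats-ext` for the actual camera; the helper names are hypothetical, and this is not the capture code used in the setup described here.

```python
import subprocess


def v4l2_capture_cmd(device="/dev/video0", width=1920, height=1080,
                     pixelformat="UYVY", outfile="frame.raw", count=1):
    """Build a v4l2-ctl command that writes `count` raw frames to `outfile`."""
    return [
        "v4l2-ctl",
        f"--device={device}",
        f"--set-fmt-video=width={width},height={height},pixelformat={pixelformat}",
        "--stream-mmap",
        f"--stream-count={count}",
        f"--stream-to={outfile}",
    ]


def uyvy_frame_bytes(width, height):
    """UYVY is packed 4:2:2 with 2 bytes per pixel."""
    return width * height * 2


def capture(**kwargs):
    """Run the command; raises CalledProcessError if v4l2-ctl fails."""
    subprocess.run(v4l2_capture_cmd(**kwargs), check=True)
```

Calling `capture(width=..., height=...)` requires a connected camera and v4l-utils; the resulting raw file should then contain `uyvy_frame_bytes(width, height)` bytes per captured frame, which is a quick sanity check that the requested format was actually applied.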

And finally: thanks @johnarthurkelly for the link to the paper. Indeed interesting!