Recently, the Raspberry Pi Foundation released an official alpha release of a new Python library, “picamera2” (alpha means that things might still change). Quite a few film scanner projects use the Foundation's HQ camera together with the old “picamera” library. This old library was based on what was available at the time, namely the proprietary Broadcom stack, and it has not seen any updates for about two years.

The new “picamera2” library uses the open-source “libcamera” stack instead of the Broadcom stack. It is also an official library supported by the Raspberry Pi Foundation. In the following, I report a few insights for people considering upgrading their software to the new library.

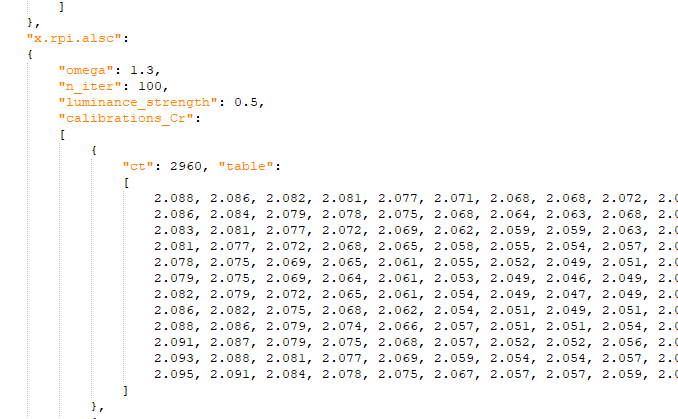

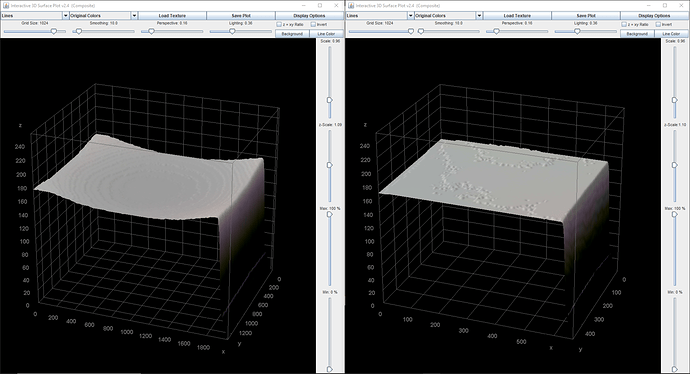

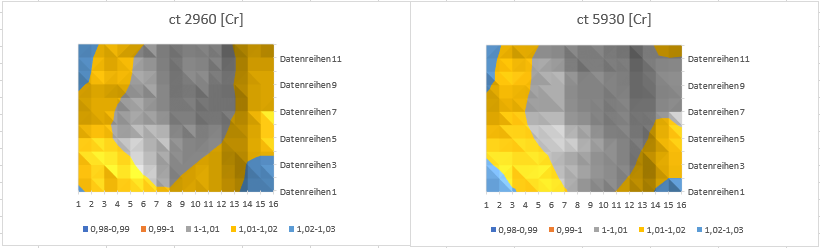

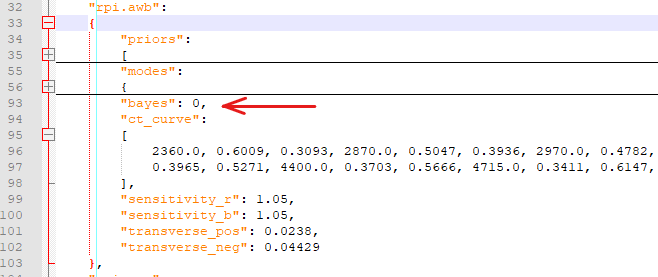

As the “picamera2” library is based on “libcamera”, there is now a tuning file for several sensors, including the v1/v2 cameras and the HQ camera of the Raspberry Pi Foundation. In principle, this tuning file allows the camera characteristics to be fine-tuned for the task at hand. Because “libcamera” handles things quite differently from the old Broadcom stack, the Python interface is also based on a totally different philosophy.

Accessing the camera with the new software is simple, as a lot of functionality is taken care of behind the scenes. Here’s an example taken directly from the “examples” folder of the new library’s GitHub repository:

```python
#!/usr/bin/python3

import time

from picamera2.encoders import JpegEncoder
from picamera2 import Picamera2

picam2 = Picamera2()
video_config = picam2.video_configuration(main={"size": (1920, 1080)})
picam2.configure(video_config)
picam2.start_preview()

encoder = JpegEncoder(q=70)
picam2.start_recording(encoder, 'test.mjpeg')
time.sleep(10)
picam2.stop_recording()
```

After the necessary imports, the camera object is created and configured for 1920x1080 video. Next, a preview is started (which in this case also silently starts a loop capturing frames from the camera). Then an encoder (here a JPEG encoder set to a quality level of 70) is created and attached to the image pipeline with picam2.start_recording(encoder, 'test.mjpeg'). The camera keeps running and encoding to the file ‘test.mjpeg’ in the background for 10 seconds, after which the recording is stopped.

For a film scanning application, one probably wants to work closer to the hardware. Central to libcamera’s approach are requests. Once the camera has been started, you can get the current image from the camera with something like:

```python
request = picam2.capture_request()
array = request.make_array('main')
metadata = request.get_metadata()
request.release()
```

From acquiring the request until you release it, the data belongs to you. You have to hand it back with request.release() so that libcamera can use it again.
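A minimal sketch of this acquire/release pattern, assuming `picam2` is a Picamera2 object that has already been configured and started. The helper name `grab_frame` is hypothetical (it is not part of picamera2); the `try`/`finally` simply guarantees that `request.release()` runs even if converting the image raises an exception, so the request is always handed back to libcamera.

```python
def grab_frame(picam2, stream="main"):
    """Capture one frame plus its metadata, always releasing the request.

    Hypothetical helper: `picam2` is assumed to be a configured and
    started Picamera2 object.
    """
    request = picam2.capture_request()
    try:
        # Convert the image of the chosen stream to a numpy array and read
        # the per-frame metadata while the request still belongs to us.
        array = request.make_array(stream)
        metadata = request.get_metadata()
    finally:
        # Hand the request back to libcamera in every case, even on errors.
        request.release()
    return array, metadata
```

Used as `array, metadata = grab_frame(picam2)`, the caller no longer has to remember the release step.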

Besides the image data (which you can get as a numpy array via request.make_array('main'), as well as in a few other formats), every request also carries a lot of metadata related to the current image. This metadata is basically a dictionary, which is retrieved by the request.get_metadata() line. Libcamera (via picamera2) works with three different camera streams; one of them is ‘main’ (which was used in the above example). These streams feature different output formats: the one called ‘lores’ delivers only YUV420, while the one called ‘raw’ delivers just that: the raw sensor data.
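Because the metadata is a plain dictionary, the exposure-related entries can be read out directly. A small sketch, assuming the keys `ExposureTime` (in microseconds), `AnalogueGain` and `DigitalGain` as reported by libcamera; defaulting a missing gain to 1.0 is an assumption made for illustration. The product of the three values is a rough measure of the total exposure a frame actually received, which is what matters when comparing frames taken with different exposure/gain combinations.

```python
def exposure_summary(metadata):
    """Return (exposure_us, analogue_gain, digital_gain, effective_us).

    Key names follow libcamera's metadata; missing gains default to 1.0
    (an illustrative assumption).
    """
    exposure_us = metadata["ExposureTime"]
    analogue = metadata.get("AnalogueGain", 1.0)
    digital = metadata.get("DigitalGain", 1.0)
    # Total amplification applied to this frame, expressed as an
    # equivalent exposure time at unity gain.
    effective_us = exposure_us * analogue * digital
    return exposure_us, analogue, digital, effective_us


md = {"ExposureTime": 2400, "AnalogueGain": 1.0, "DigitalGain": 2.0}
print(exposure_summary(md))  # (2400, 1.0, 2.0, 4800.0)
```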

Some (but not all) of the values returned in the metadata dictionary can not only be read but also set. For example, AnalogueGain is available in the metadata; this is usually the gain the AGC has selected for you. It can also be set to a desired value with the command:

```python
picam2.set_controls({'AnalogueGain': 1.0})
```

One notable example of a value that cannot be set is the digital gain. More about that later.

Some properties of the camera also cannot be set in the way described above, mainly because changing them requires a reconfiguration of the sensor. One example is switching on the vertical image flip, which can only be done in the initial configuration step, like so:

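```python
import libcamera

# The flip is part of the configuration, so it is applied by (re)configuring
video_config["transform"] = libcamera.Transform(hflip=0, vflip=1)
picam2.configure(video_config)
```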

Another important deviation from the old picamera library is the handling of digital and analogue gain. In the new picamera2 library, there is actually no way to set the digital gain. As the maintainer of the library explained to me, a requested exposure time is realized internally by choosing a hardware exposure time close to the requested value plus an appropriate digital-gain multiplier, so that the user gets an approximation of the exposure time requested.

An exposure time of, say, 2456 µs is not realizable with the HQ sensor hardware. The closest available exposure time (due to hardware constraints) is 2400 µs. So libcamera/picamera2 realizes the requested exposure by selecting: (digital gain = 1.0233) × (exposure time = 2400 µs) ≈ (effective exposure time = 2456 µs).
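The arithmetic of this 2456 µs example can be sketched as follows. This only illustrates the idea and is not libcamera's actual algorithm: the list of realizable exposure times passed in is purely hypothetical (the real set depends on the sensor mode and its line timing), and the function picks the largest realizable time that does not exceed the request, so that the digital gain making up the difference stays at or above 1.0.

```python
import bisect


def split_exposure(requested_us, realizable_us):
    """Split a requested exposure into (hardware exposure, digital gain).

    `realizable_us` is a hypothetical, sorted list of exposure times the
    sensor is assumed to be able to produce.
    """
    # Index of the largest realizable exposure that is <= the request.
    i = bisect.bisect_right(realizable_us, requested_us) - 1
    if i < 0:
        raise ValueError("request is shorter than any realizable exposure")
    hw_us = realizable_us[i]
    # Digital gain makes up the remaining difference.
    return hw_us, requested_us / hw_us


hw, dg = split_exposure(2456, [1200, 2400, 4800])
print(hw, round(dg, 4))  # 2400 1.0233
```

A request that is itself realizable, such as 4800 µs here, comes back with a digital gain of exactly 1.0.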

This behaviour somewhat spoils serious HDR work, as the data from the sensor is only an approximation of what you actually requested. And there are differences between frames taken, for example, with exposure time = 2400 / digital gain = 2 (a combination the AGC will occasionally give you when you request exposure time = 4800) and with exposure time = 4800 / digital gain = 1.0 (which is the setting actually desired): the digital gain only scales the pixel values after readout, so it collects no additional light and amplifies the noise along with the signal.

One approach to circumvent this is to choose exposure times that are realizable in hardware and to request the chosen exposure time repeatedly (thanks go to the maintainer of the new picamera2 library for this suggestion) until the digital gain has settled to 1.0. For example, the sequence 2400, 4800, 9600, etc. should eventually give you a digital gain of 1.0. Usually, it takes between 2 and 5 frames to obtain the desired exposure value.
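Generating such a bracket is trivial. The sketch below doubles a base exposure that is assumed to be realizable on the hardware (2400 µs, as in the example above), on the assumption stated in the text that its power-of-two multiples are realizable as well. The helper name and the default of five exposures are illustrative choices.

```python
def exposure_bracket(base_us=2400, count=5):
    """Return `count` exposure times in microseconds, each double the previous.

    Assumes `base_us` is realizable by the sensor, so that its doublings
    are too (an assumption, not something libcamera guarantees).
    """
    return [base_us * 2 ** k for k in range(count)]


print(exposure_bracket())  # [2400, 4800, 9600, 19200, 38400]
```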

By the way, the old picamera library had a similar issue: with the old library, it sometimes took several frames until the exposure time settled to the requested value.

I am currently experimenting with a few approaches to get around this challenge. Following a suggestion from the maintainer of the library, namely to request a single exposure time until it is actually delivered before switching to the next one, I get a new exposure value every 5 frames on average.
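A minimal sketch of that strategy, assuming `picam2` is a started Picamera2 object whose analogue gain has already been fixed with `set_controls`. It requests one exposure time once, then inspects the metadata of successive frames until the reported `ExposureTime` is within a small tolerance of the request and `DigitalGain` has dropped to (almost) 1.0. It returns that frame together with the number of frames it took. The function name, the tolerances and the frame limit are hypothetical values chosen for illustration.

```python
def capture_settled(picam2, exposure_us, max_frames=30,
                    exposure_tol_us=50, gain_tol=0.01):
    """Request `exposure_us` and return (array, metadata, frames_used)
    for the first frame that actually delivers it.

    Hypothetical helper; tolerances and max_frames are illustrative.
    """
    picam2.set_controls({"ExposureTime": exposure_us})
    for frames_used in range(1, max_frames + 1):
        request = picam2.capture_request()
        try:
            metadata = request.get_metadata()
            exposure_ok = abs(metadata["ExposureTime"] - exposure_us) <= exposure_tol_us
            gain_ok = abs(metadata.get("DigitalGain", 1.0) - 1.0) <= gain_tol
            if exposure_ok and gain_ok:
                # The image is converted before `finally` releases the request.
                return request.make_array("main"), metadata, frames_used
        finally:
            # Every inspected frame is handed back to libcamera.
            request.release()
    raise TimeoutError(f"{exposure_us} us not delivered within {max_frames} frames")
```

Looping over a bracket of realizable exposures and calling this once per exposure gives the "one exposure at a time" behaviour; the returned frame count is a convenient way to measure how long settling takes on a given setup.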

With this approach, my current scan speed is about 3 seconds per movie frame (2016x1502 px). This includes taking five different exposures and a waiting period of 0.5 s to let mechanical vibrations of the scanner settle. Not too bad.

As the maintainer of the library indicated, some work might be done to improve the library's performance with respect to sudden (and large) changes of exposure values. However, as this might require changes in libcamera, the library picamera2 is based upon, it might take some time.

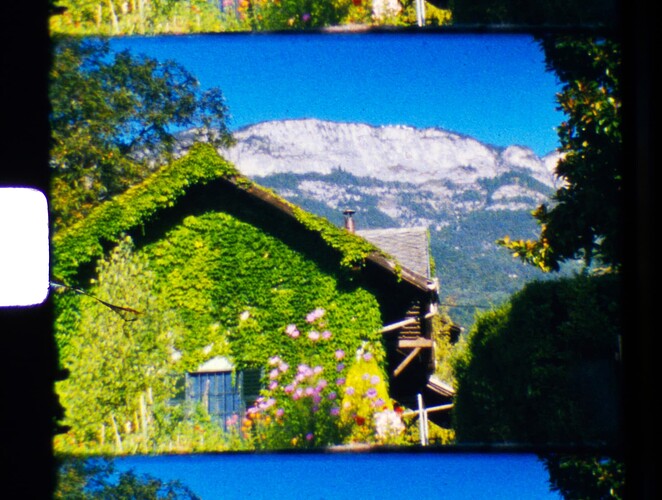

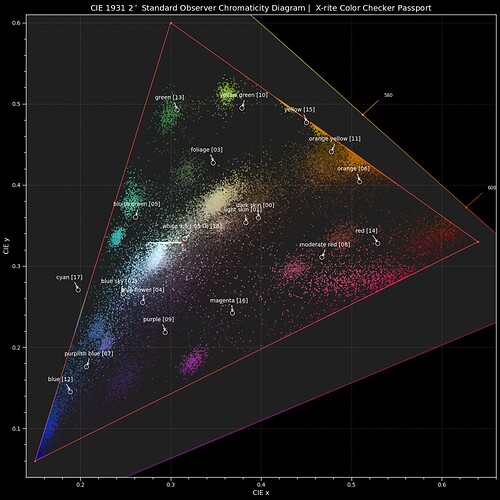

Finally, here’s a comparison of the color differences between the old and the new library. The old picamera-lib/Broadcom-stack delivered, for example, the following frame:

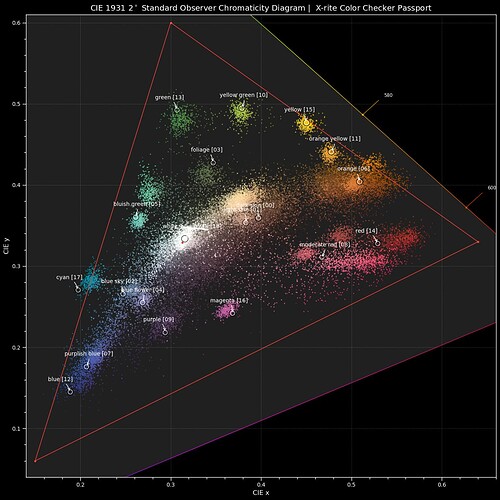

The new picamera2-lib/libcamera-stack gets the following result from the same frame:

This capture is from Kodachrome film stock. Here’s another capture, from Agfa Moviechrome material, made with the old picamera/Broadcom-stack combination:

and this is the result of the same frame, captured with the new picamera2/libcamera-stack:

Two things are immediately noticeable. First, the new picamera2 library delivers images with better color definition: the yellow range of colors is more prominent, which should help the rendering of skin tones. Second, the new picamera2-lib/libcamera-stack produces a stronger saturation, which creates more intense colors. In addition, the image definition in the shadows seems to be improved.

One last note: JPEG/MJPEG encoding is currently done in software, with the simplejpeg library. So the quality settings of the old library, which used hardware encoding, are not directly transferable. Also, the system load is higher, especially when using the available multi-threaded encoder.