@Roland: Well, we all have different opinions, emphases and experiences. I worked with Super 8 in the 1970s and 1980s, and high-quality cameras and projection equipment were used then - admittedly not by everyone.

Now to the issue of scanning. As certain types of film stock are fading away over time, one of my goals is to preserve what’s left - 100%, today. Ideally, such an archival scan should capture the content of the film in question in full glory. That is not directly possible with current hardware. As most camera sensors max out at about 14 bits per color channel, you will need to work with multiple exposures - or at the very least with precisely chosen exposure settings, metered individually for every frame, that use the full dynamic range of a 14-bit camera. Anything less will leave parts of frames either blown out or pitch-black, and the image information in those areas will be lost. Or quantization effects become noticeable (see below).
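To make the bit-depth argument concrete, here is a small sketch that counts how many code values a linear sensor spends on each stop, and roughly how far a bracketed stack extends the range. It assumes a purely linear sensor and ignores noise, so "14 bits = 14 stops" is a nominal upper bound; the 5-exposure, 2-stop bracket is a hypothetical example, not a recommended setting.

```python
"""Code values per stop for a linear sensor, and the nominal range of a bracket.

Assumptions (illustrative only): output is linear in light, each stop down
halves the signal, and sensor noise is ignored.
"""


def codes_per_stop(bits: int) -> list[int]:
    """Number of distinct code values in each stop, brightest stop first."""
    counts = []
    upper = 2 ** bits
    while upper > 1:
        lower = upper // 2
        counts.append(upper - lower)  # codes in [lower, upper)
        upper = lower
    return counts


def stack_range_stops(bits: int, n_exposures: int, spacing_stops: float) -> float:
    """Nominal range covered by n exposures spaced `spacing_stops` apart."""
    return bits + (n_exposures - 1) * spacing_stops


counts = codes_per_stop(14)
print(len(counts), counts[0], counts[-1])  # 14 8192 1
print(stack_range_stops(14, 5, 2.0))  # 22.0 (hypothetical 5-shot, 2-stop bracket)
```

The brightest stop gets 8192 levels while the darkest gets a single one, which is why deep shadows in a single exposure show quantization (banding) once they are brightened.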

Any of the more elaborate approaches mentioned above (plus a few more) can deliver an archival scan. That is, the information captured by the scan is sufficient to reconstruct the full HDR data contained in the movie frame (such a setup has to meet other conditions as well, such as scan resolution, which I won’t discuss here).

In any case, the ultimate goal is to obtain a true HDR image (unlimited dynamic range) for every movie frame.
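As an illustration of how a stack of exposures can be turned into HDR data, here is a minimal sketch of a weighted radiance merge. It assumes linear (raw-like) pixel values normalized to [0, 1] and known relative exposure times; the "hat" weighting and its 0.02/0.98 thresholds are illustrative choices in the spirit of Debevec and Malik's method with a linear response, not the author's pipeline.

```python
def hat_weight(v: float, low: float = 0.02, high: float = 0.98) -> float:
    """Trust mid-tones; give zero weight to near-black (noisy) or near-clipped values."""
    if v <= low or v >= high:
        return 0.0
    mid = (low + high) / 2
    return 1.0 - abs(v - mid) / (mid - low)


def merge_exposures(stack: list[list[float]], times: list[float]) -> list[float]:
    """stack[k][i] is pixel i of exposure k (linear, in [0, 1]); times[k] is its exposure time."""
    radiance = []
    for i in range(len(stack[0])):
        num = den = 0.0
        for image, t in zip(stack, times):
            w = hat_weight(image[i])
            num += w * image[i] / t  # each exposure's own estimate of the radiance
            den += w
        if den > 0.0:
            radiance.append(num / den)
        else:
            # No usable exposure: fall back to the one closest to mid-grey.
            k = min(range(len(times)), key=lambda j: abs(stack[j][i] - 0.5))
            radiance.append(stack[k][i] / times[k])
    return radiance


# Simulated 3-pixel scene, bracketed 2 stops apart; values clip at 1.0.
true_radiance = [0.01, 0.2, 3.0]
times = [0.25, 1.0, 4.0]
stack = [[min(r * t, 1.0) for r in true_radiance] for t in times]
print([round(v, 4) for v in merge_exposures(stack, times)])  # [0.01, 0.2, 3.0]
```

No single exposure in this toy stack holds all three values (the middle one clips the bright pixel and buries the dark one near black), yet the merge recovers the full range.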

What matters to me is that my archival scan is future-proof. I do not plan to rescan a film at some point in the future; by then it might already have faded away.

Note that the archival scan is not intended for presentation. That is the purpose of a secondary output; let’s call it the presentation scan. The presentation scan might have its colors corrected, unsteady camera motion compensated, film grain added or removed, and so on.

From a technical point of view, the presentation scan needs to adapt to the limits of current display technology and of transmission and distribution channels. Today’s standard is displays with 8 bits per color channel, which are slowly being replaced by displays and other technology approaching the contrast ratios needed for true HDR. But we are not there yet.

So generally, one of today’s goals is to map the archival HDR (or an intermediate representation of it) to the limited dynamic range (usually 8-10 bits) available in today’s transmission formats and display hardware. Within the HDR context, this task is known as the magical area of “tone-mapping”. A lot of effort has been spent on developing appropriate algorithms for it (i.e., adapting true HDR for display on limited-contrast screens), and I think I have studied (= read the original papers) and tested most of them.
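For readers new to tone-mapping, here is a sketch of one classic global operator, the Reinhard et al. photographic operator L/(1+L), followed by a simple gamma encode to 8 bits. It is shown only as an example of the family of algorithms mentioned above, not as the method the author prefers; the key value 0.18 and the plain 2.2 power curve (instead of the exact sRGB transfer function) are assumptions.

```python
import math


def reinhard_global(radiance: list[float], key: float = 0.18) -> list[float]:
    """Compress scene radiance in [0, inf) into display values in [0, 1)."""
    eps = 1e-6  # avoids log(0) for black pixels
    log_avg = math.exp(sum(math.log(eps + r) for r in radiance) / len(radiance))
    out = []
    for r in radiance:
        scaled = key * r / log_avg  # map the scene's log-average to mid-grey
        out.append(scaled / (1.0 + scaled))  # highlights roll off, never clip
    return out


def to_8bit(values: list[float], gamma: float = 2.2) -> list[int]:
    """Gamma-encode [0, 1) display values into 8-bit code values."""
    return [round(255 * v ** (1.0 / gamma)) for v in values]


hdr = [0.01, 0.2, 3.0, 50.0]  # hypothetical radiances spanning about 12 stops
print(to_8bit(reinhard_global(hdr)))
```

A global operator like this applies the same curve to every pixel, so local contrast in the shadows and highlights is reduced; that limitation is one reason local methods (and exposure fusion) exist.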

With respect to non-manual operation, the Mertens algorithm outperforms every other algorithm I know of. This can be traced directly to the structure of the algorithm, which mimics processes similar to those of the human visual system (I spent over 10 years researching neural algorithms of the human visual system). However, my favorite point is again: exposure fusion is fully automatic, with no manual tuning required.
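To show why exposure fusion needs no manual tuning, here is a deliberately simplified sketch of the per-pixel weighting idea behind Mertens et al.: each exposure is weighted by local contrast (absolute Laplacian) and well-exposedness (a Gaussian around mid-grey with sigma 0.2), and the weights are normalized per pixel. Two simplifications are assumptions of this sketch: it works on grayscale, so the paper's saturation term is dropped, and it blends naively per pixel instead of with the Laplacian pyramid the paper uses to avoid seams. For real work, OpenCV's `MergeMertens` implements the full method.

```python
import math

Image = list[list[float]]  # grayscale, values in [0, 1]


def laplacian_abs(img: Image) -> Image:
    """|4-neighbour Laplacian| with edge replication: a local-contrast measure."""
    h, w = len(img), len(img[0])

    def px(y: int, x: int) -> float:
        return img[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    return [
        [abs(px(y - 1, x) + px(y + 1, x) + px(y, x - 1) + px(y, x + 1) - 4 * px(y, x))
         for x in range(w)]
        for y in range(h)
    ]


def well_exposedness(v: float, sigma: float = 0.2) -> float:
    """Gaussian centred on mid-grey 0.5."""
    return math.exp(-((v - 0.5) ** 2) / (2 * sigma ** 2))


def naive_fusion(stack: list[Image], w_contrast: float = 1.0, w_exposure: float = 1.0) -> Image:
    """Per-pixel weighted average of the exposures (no pyramid blending)."""
    h, w = len(stack[0]), len(stack[0][0])
    contrasts = [laplacian_abs(img) for img in stack]
    eps = 1e-12  # keeps the normalization defined where all weights are zero
    fused = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            weights = [
                contrasts[k][y][x] ** w_contrast * well_exposedness(stack[k][y][x]) ** w_exposure + eps
                for k in range(len(stack))
            ]
            fused[y][x] = sum(wk * img[y][x] for wk, img in zip(weights, stack)) / sum(weights)
    return fused


dark = [[0.05, 0.10, 0.05], [0.10, 0.20, 0.10], [0.05, 0.10, 0.05]]
bright = [[min(1.0, 4 * v) for v in row] for row in dark]  # same scene, 2 stops brighter
print(naive_fusion([dark, bright])[1][1])  # about 0.68: the higher-contrast exposure dominates
```

Every output pixel is a convex combination of the input pixels, so no parameter has to be set per film or per frame; this is the property the post values.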

Of course, once full HDR display and transmission technology has arrived, tone-mapping will no longer be necessary. Since a true HDR image can actually be computed from the archival scan described above (a stack of multiple exposures for each frame), this process is as future-proof as it can be.

In closing, thanks for your nice description of manually tuning the original scan towards the automatic exposure fusion result. I do have a program that does exactly this automatically (it matches a source histogram to a reference histogram):
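The author's program is not shown, so here is the textbook CDF-based histogram matching as a stand-in sketch: each source level is mapped to the smallest reference level whose cumulative distribution reaches the source's. It assumes one 8-bit channel given as a flat list; applying it per color channel is a common simplification, not necessarily what the author does.

```python
def cdf(values: list[int], levels: int = 256) -> list[float]:
    """Cumulative distribution of integer pixel values in [0, levels)."""
    hist = [0] * levels
    for v in values:
        hist[v] += 1
    out, running = [], 0
    for count in hist:
        running += count
        out.append(running / len(values))
    return out


def match_histogram(source: list[int], reference: list[int], levels: int = 256) -> list[int]:
    """Remap `source` so its histogram approximates that of `reference`."""
    src_cdf = cdf(source, levels)
    ref_cdf = cdf(reference, levels)
    lut = []
    j = 0
    for i in range(levels):
        # Both CDFs are non-decreasing, so a single forward pointer suffices.
        while j < levels - 1 and ref_cdf[j] < src_cdf[i]:
            j += 1
        lut.append(j)
    return [lut[v] for v in source]


print(match_histogram([10, 10, 20, 20], [100, 100, 200, 200]))  # [100, 100, 200, 200]
```

Because the lookup table is monotonic, the tonal order of the single-exposure scan is preserved; matching can align intensities but cannot restore levels that quantization has already merged, which is what the comparison below shows.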

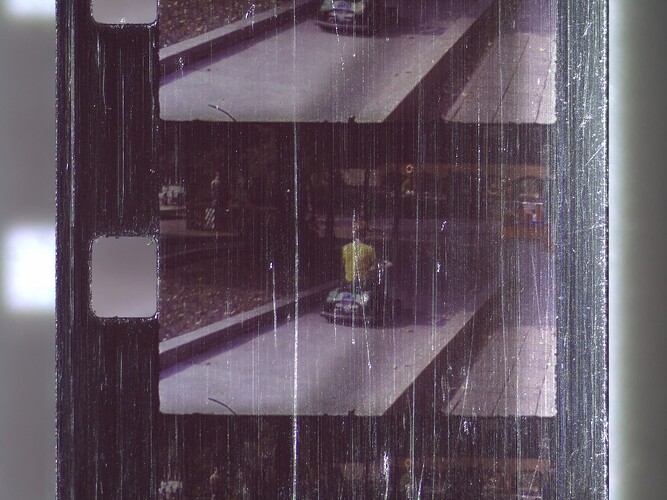

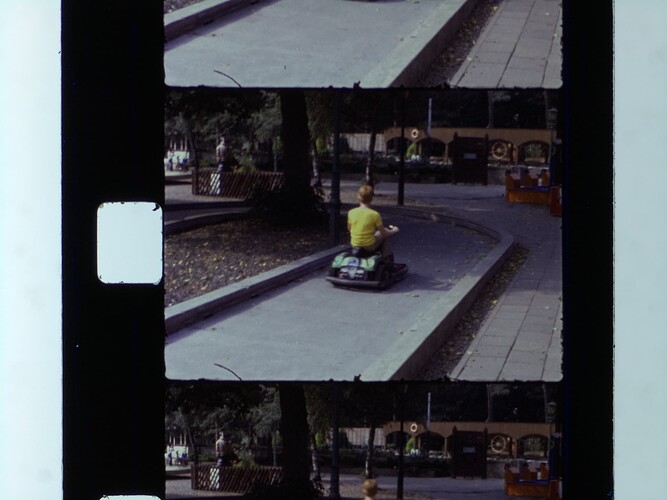

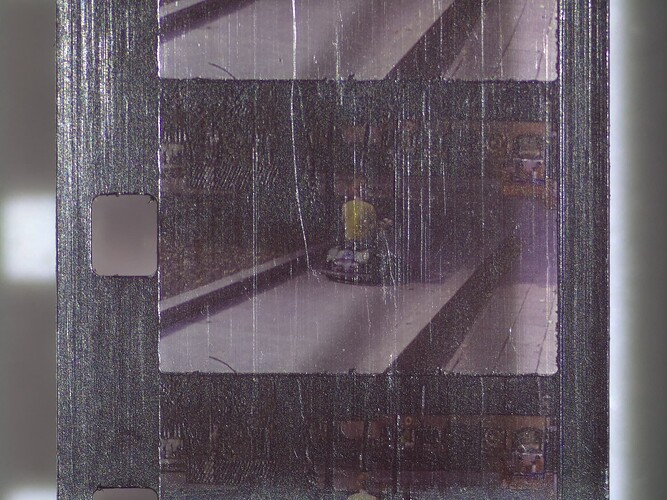

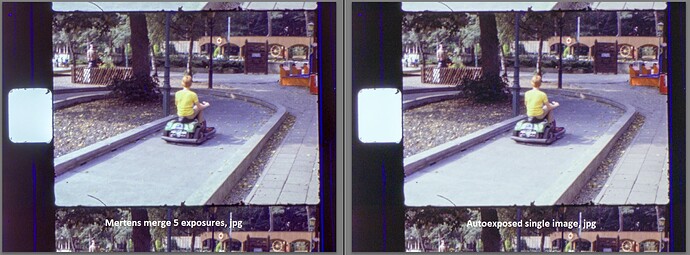

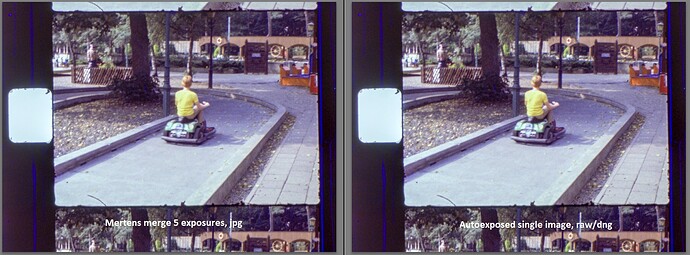

The single-exposure scan is now on the left, the exposure fusion reference on the right. While the intensities of both images are now aligned, you may notice quantization errors in the left image, most notably on the jacket of the man speaking to the guide. Also, the texture of the sand in the shadow is barely visible in the single-scan result. Granted, the quality on the left can easily be achieved with real-time scanning (i.e. 3 min for a 15 m roll), whereas the quality on the right requires much more machine time (a little less than 3 hours on my system). I choose the right side, mainly because a single scan per frame is not future-proof and the results are visually more pleasing without manual interaction.
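The time figures above translate into a per-frame budget. This sketch assumes Super 8's 72 frames per foot and an 18 fps projection speed for "real-time"; both are assumptions about the footage, and the 3-hour total is taken from the post as an upper bound.

```python
SUPER8_FRAMES_PER_FOOT = 72  # Super 8 frame pitch
METERS_PER_FOOT = 0.3048


def frames_in_roll(length_m: float) -> int:
    """Approximate number of Super 8 frames on a roll of the given length."""
    return round(length_m / METERS_PER_FOOT * SUPER8_FRAMES_PER_FOOT)


def seconds_per_frame(total_seconds: float, n_frames: int) -> float:
    """Average processing time available per frame."""
    return total_seconds / n_frames


n = frames_in_roll(15.0)
print(n)  # 3543
print(n / 18 / 60)  # real-time at 18 fps: roughly 3.3 minutes
print(seconds_per_frame(3 * 3600, n))  # 3-hour run: roughly 3.05 s per frame
```

So the exposure fusion pipeline spends roughly 55 times longer per frame than real-time playback at 18 fps.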

I’m also planning on using a fixed shutter speed and (analog) gain for capturing, so that the brightness of all images in the sequence is the same. Also, I would like to get as close to raw as possible (or even use raw/DNG for capturing) and do any editing needed afterwards in DaVinci Resolve.
