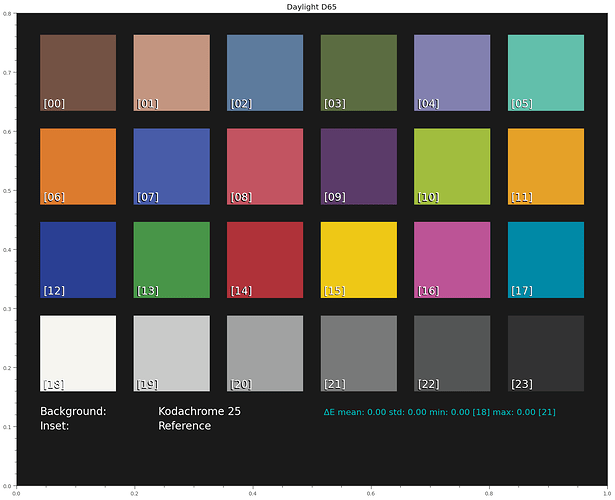

I want to report on some investigations into Kodachrome 25 film stock. My primary goal is to create a virtual color checker “made” out of Kodachrome film stock.

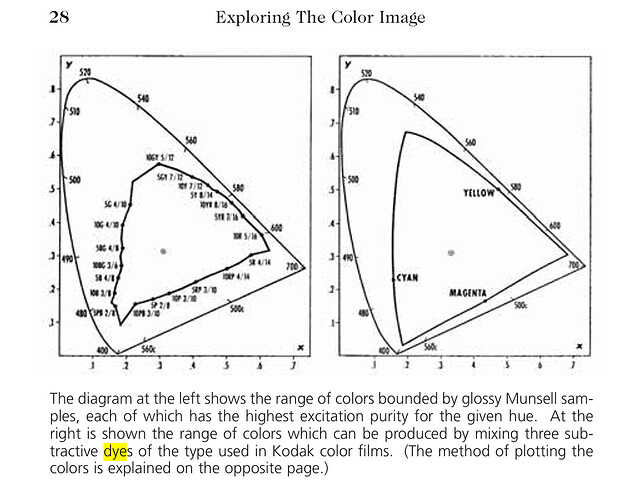

While doing this research, I stumbled across a web page, “How Good Was Kodachrome?”, which is in part an interesting read. For starters, the page discusses whether maximal color separation is a valid goal, or whether one should rather opt for overlapping filter channels (I support the latter view).

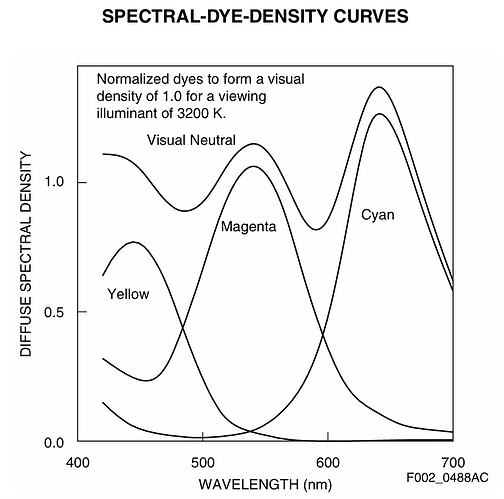

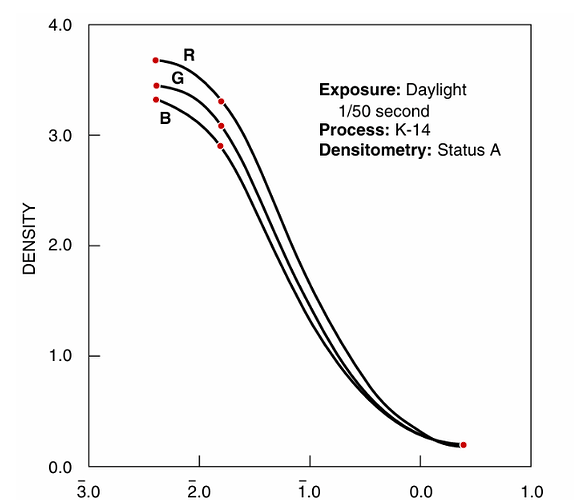

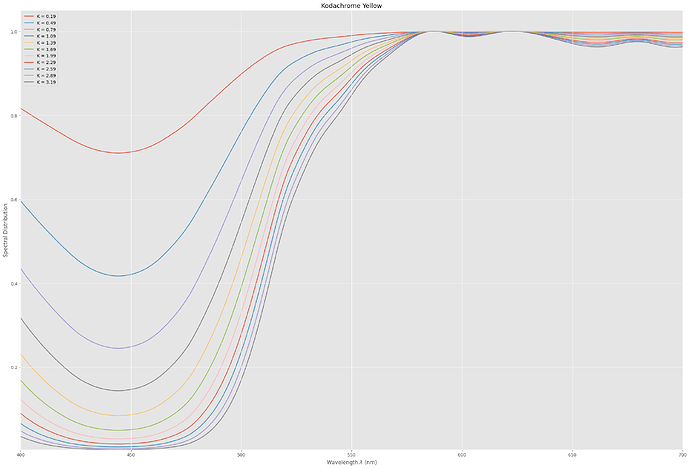

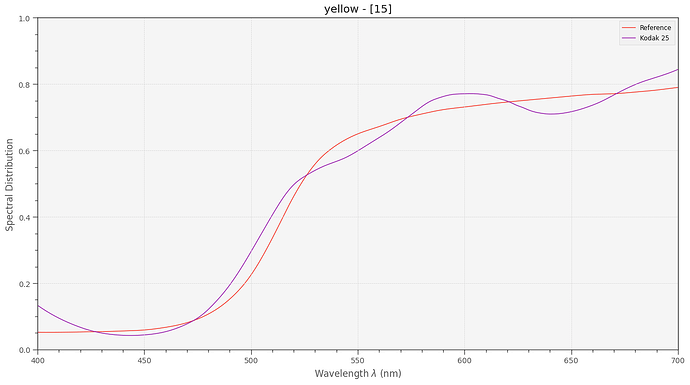

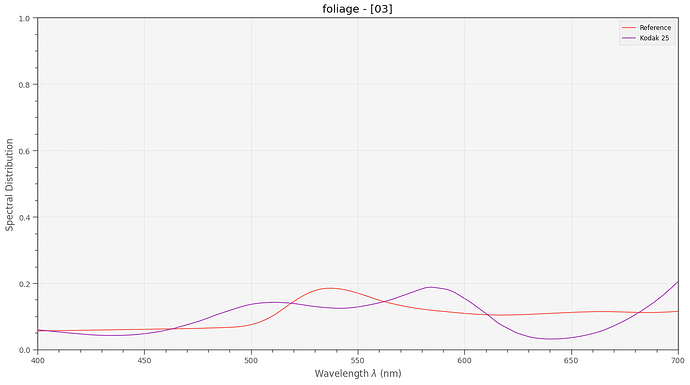

Anyway, I did the following experiment. From the Kodachrome 25 data sheet, I took the spectral density curves of the yellow, magenta and cyan dye layers:

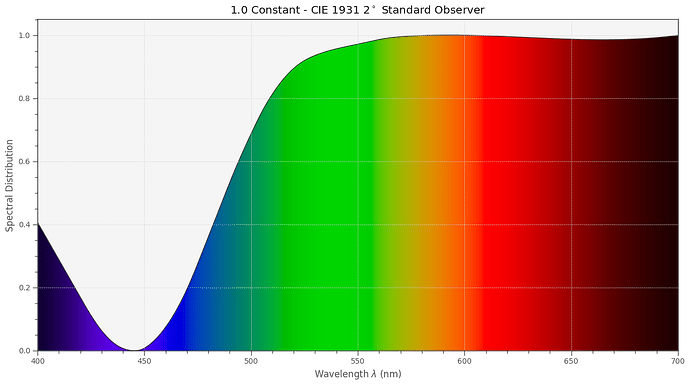

In order to arrive at a filter characteristic for each of these dye layers, I basically calculated 1.0 - d(λ) (see below for the actual code segment). So the filter action of the yellow dye should yield a filter spectrum like this:

with equivalent handling of the magenta and cyan layers. In Python/colour-science code, this is handled by the following code segment:

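```python
# `one` is a spectrum equal to 1.0 at every wavelength.
# Despite their names, the *Absorption variables hold transmittance factors
# (1.0 = fully transparent), computed as 1.0 - density * scale.
MagentaAbsorption = one - KodachromeM*scaleM
CyanAbsorption = one - KodachromeC*scaleC
YellowAbsorption = one - KodachromeY*scaleY

# The three dye layers act as stacked filters, so their factors multiply.
totalAbsorption = MagentaAbsorption*CyanAbsorption*YellowAbsorption
```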

Here, KodachromeY is, for example, the spectral density curve of the yellow dye, scaleY is the density (amount) of the yellow dye, and totalAbsorption is the combined filter characteristic of the complete film.
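To make the layer model concrete without the data-sheet files, here is a minimal, self-contained NumPy sketch. The Gaussian dye curves (`dye_density`, `KODACHROME_Y` and friends, with the peak positions and widths chosen below) are hypothetical placeholders, not the Kodachrome 25 curves; only the 1.0 - density * scale rule and the multiplication of the three layers mirror the code segment above.

```python
import numpy as np

# Wavelength grid in nm (5 nm steps over the visible range).
WAVELENGTHS = np.arange(400.0, 701.0, 5.0)


def dye_density(peak_nm: float, width_nm: float) -> np.ndarray:
    """Hypothetical Gaussian density curve with a peak value of 1.0.

    Placeholder only; real curves come from the film data sheet.
    """
    return np.exp(-0.5 * ((WAVELENGTHS - peak_nm) / width_nm) ** 2)


# Hypothetical dye curves: yellow absorbs blue, magenta absorbs green,
# cyan absorbs red.
KODACHROME_Y = dye_density(450.0, 30.0)
KODACHROME_M = dye_density(545.0, 35.0)
KODACHROME_C = dye_density(650.0, 40.0)


def film_transmittance(scale_y: float, scale_m: float, scale_c: float) -> np.ndarray:
    """Combined transmittance of the three stacked dye layers.

    Same linear rule as in the post: each layer passes 1.0 - density * scale,
    and the layer factors multiply.
    """
    t_y = 1.0 - KODACHROME_Y * scale_y
    t_m = 1.0 - KODACHROME_M * scale_m
    t_c = 1.0 - KODACHROME_C * scale_c
    return t_y * t_m * t_c


if __name__ == "__main__":
    clear = film_transmittance(0.0, 0.0, 0.0)
    yellow_only = film_transmittance(1.0, 0.0, 0.0)
    print(clear.min(), clear.max())  # 1.0 1.0 (no dye: fully transparent)
    print(WAVELENGTHS[np.argmin(yellow_only)])  # 450.0 (strongest blue absorption)
```

Swapping the placeholder curves for the digitized data-sheet curves (resampled onto the same grid) would reproduce the calculation in the post.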

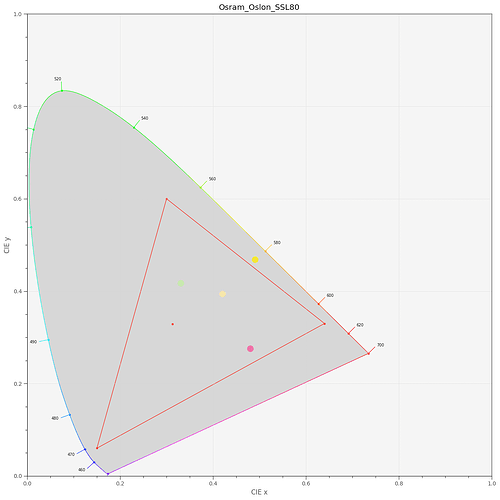

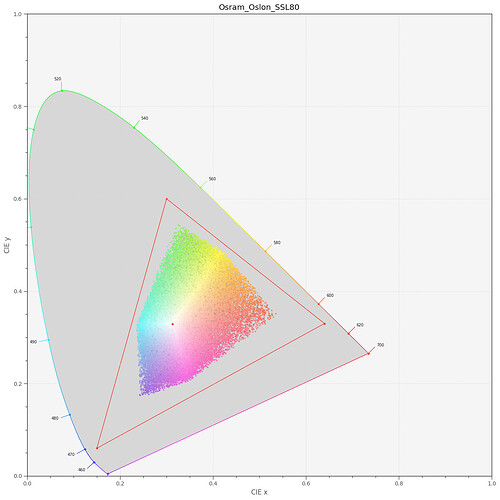

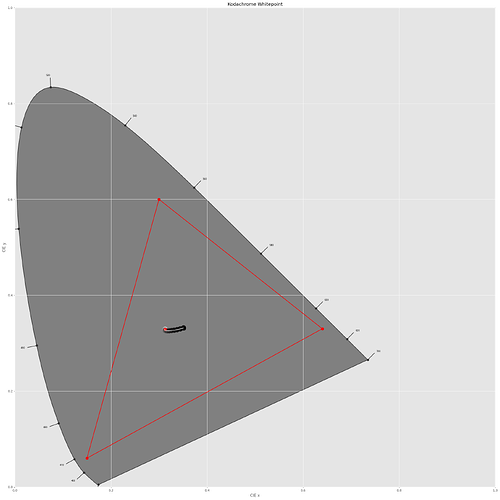

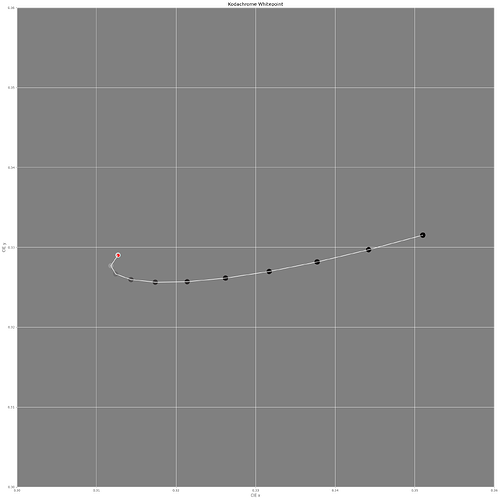

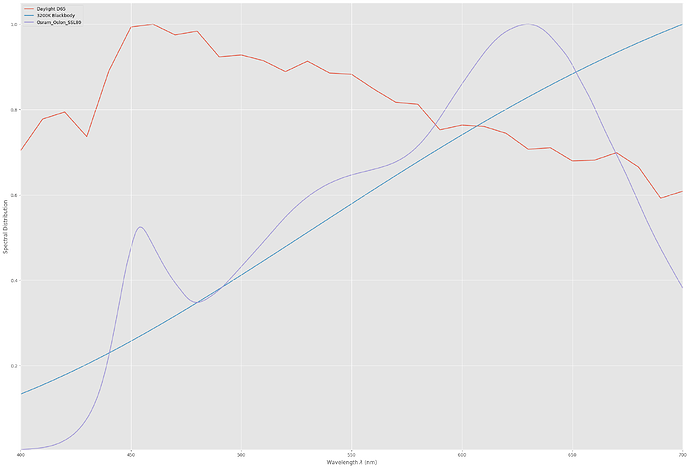

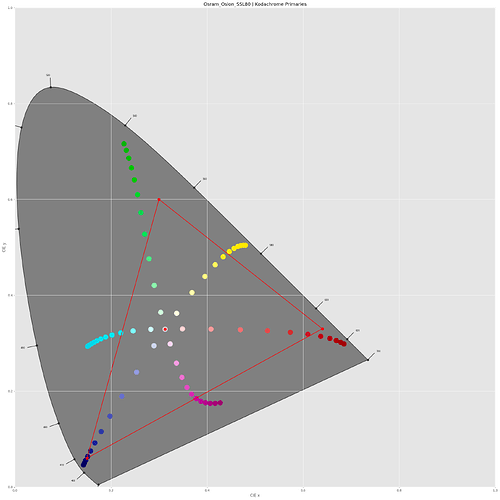

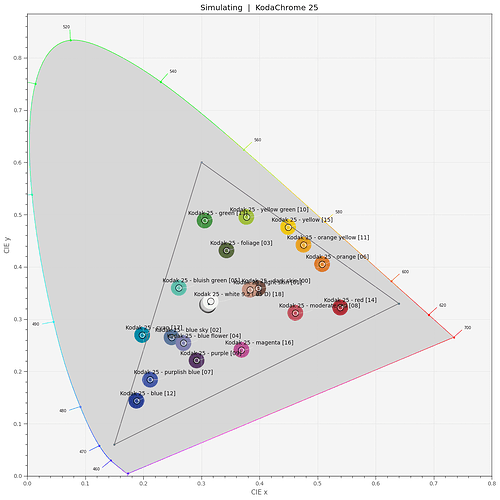

With this in place, I can check where the dye layers end up in a CIE diagram. For this, I need to select a light source; I opted for my trusted Osram SSL80 white-light LED:

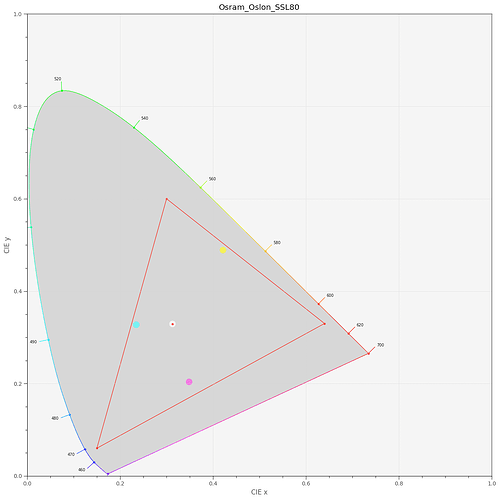

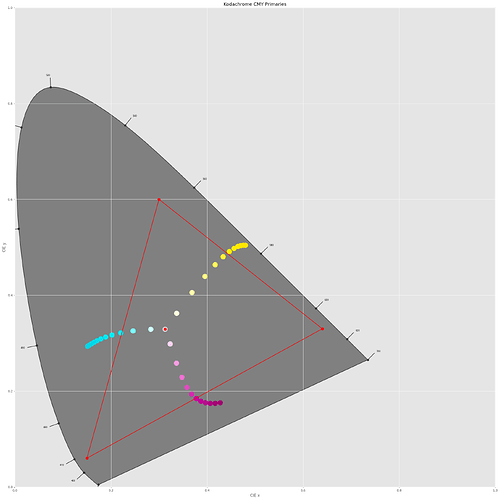

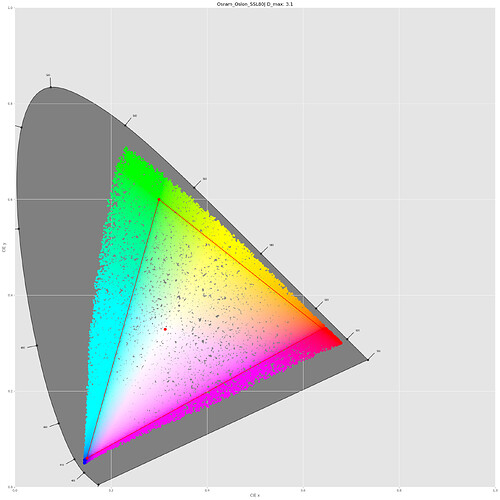

In the above diagram, the sRGB color gamut including its primaries is indicated by the red triangle. The yellow dye (yellow dot) is slightly outside the sRGB gamut; the other two (magenta and greenish dots) are within it. Of importance is the center dot: this is the position of the white point, and it is clearly off from the sRGB one (the small red center dot). So I guess I have to throw in a chromatic adaptation transform (CAT) here. I use the linear Bradford method (the same one recommended in the Adobe DNG specification) and finally arrive at the following diagram:

Well, the white points do match now, and all Kodachrome 25 primaries have moved into the sRGB gamut!

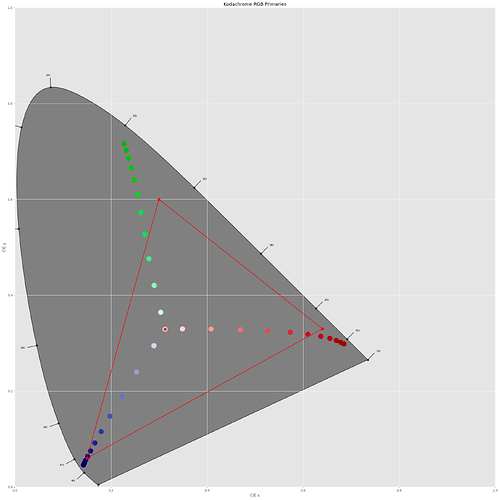

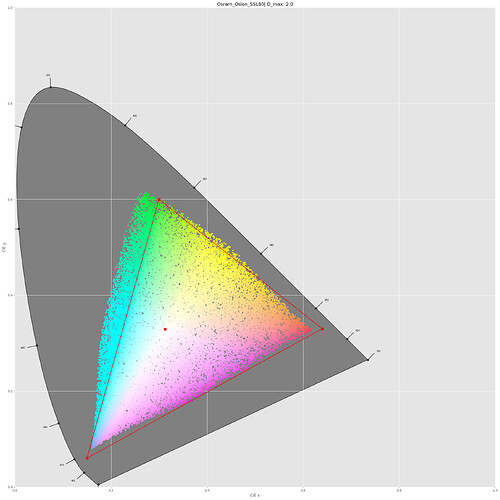

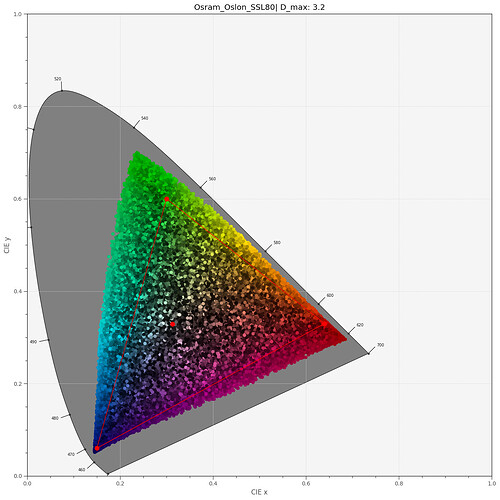
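The linear Bradford adaptation used above is a von Kries-style scaling carried out in the Bradford cone-response space. Below is a minimal NumPy sketch (not the colour-science implementation). The white points in the usage lines are the standard CIE 1931 XYZ values of illuminants A and D65, used here only as stand-ins for the SSL80 and sRGB white points.

```python
import numpy as np

# Bradford cone-response matrix (XYZ -> sharpened LMS).
M_BRADFORD = np.array([
    [0.8951, 0.2664, -0.1614],
    [-0.7502, 1.7135, 0.0367],
    [0.0389, -0.0685, 1.0296],
])


def bradford_cat(xyz_src_white: np.ndarray, xyz_dst_white: np.ndarray) -> np.ndarray:
    """Return the 3x3 linear Bradford matrix mapping the source white to the destination white."""
    lms_src = M_BRADFORD @ xyz_src_white
    lms_dst = M_BRADFORD @ xyz_dst_white
    # Scale each cone channel by the ratio of destination to source white.
    scale = np.diag(lms_dst / lms_src)
    return np.linalg.inv(M_BRADFORD) @ scale @ M_BRADFORD


def adapt(xyz: np.ndarray, cat: np.ndarray) -> np.ndarray:
    """Apply a CAT matrix to one XYZ triple or to an (N, 3) array of them."""
    return xyz @ cat.T


if __name__ == "__main__":
    white_a = np.array([1.09850, 1.0, 0.35585])    # CIE illuminant A (Y = 1)
    white_d65 = np.array([0.95047, 1.0, 1.08883])  # CIE D65 (Y = 1)
    cat = bradford_cat(white_a, white_d65)
    # By construction, the source white lands on the destination white.
    print(np.round(adapt(white_a, cat), 5))
```

The same matrix is then applied to every dye chromaticity (as XYZ), which is why all points shift together with the white point.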

To get an idea of the total color gamut representable by the above dye spectra, I ran a simulation of 50,000 random combinations of the three dye spectra, basically picking random values for the scaleY, scaleM and scaleC amplitudes within the range [0.0, 1.0]. This is the result:

So it seems that all colors which are representable by a combination of Kodachrome 25 dye spectra easily fit into the sRGB color gamut. Frankly, I did not expect that.
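The random sampling described above vectorizes naturally with NumPy broadcasting. This sketch draws N scale triples uniformly from [0.0, 1.0) and builds all N combined transmittance spectra at once with the same linear 1.0 - density * scale rule. The dye curves are again hypothetical Gaussian placeholders, and the seed and wavelength grid are arbitrary choices; each row can afterwards be multiplied by the light source and converted to xy exactly like a single spectrum.

```python
import numpy as np

WAVELENGTHS = np.arange(400.0, 701.0, 5.0)  # nm


def gaussian(peak_nm: float, width_nm: float) -> np.ndarray:
    """Hypothetical placeholder dye density curve (peak value 1.0)."""
    return np.exp(-0.5 * ((WAVELENGTHS - peak_nm) / width_nm) ** 2)


# Rows: yellow, magenta, cyan (placeholders, not data-sheet curves).
DYES = np.stack([gaussian(450.0, 30.0), gaussian(545.0, 35.0), gaussian(650.0, 40.0)])


def sample_transmittances(n_samples: int, seed: int = 0):
    """Draw random (scaleY, scaleM, scaleC) triples and return (scales, spectra).

    scales has shape (n_samples, 3); spectra has shape (n_samples, n_wavelengths).
    """
    rng = np.random.default_rng(seed)
    scales = rng.uniform(0.0, 1.0, size=(n_samples, 3))
    # (N, 3, 1) * (1, 3, W) -> (N, 3, W): per-layer transmittance for every sample.
    per_layer = 1.0 - scales[:, :, None] * DYES[None, :, :]
    # Stacked layers multiply along the layer axis.
    return scales, per_layer.prod(axis=1)


if __name__ == "__main__":
    scales, spectra = sample_transmittances(50000)
    print(spectra.shape)  # (50000, 61)
```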

I do not know whether the approach presented above is valid, but I do not see any obvious mistake. I take a virtual light source, pipe its spectrum through yellow, magenta and cyan filter layers representing the dye layers, and compute the resulting overall spectrum. This spectrum is converted into an XYZ color, which in turn is mapped into the xy diagrams above.
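The pipeline just described (light source, dye filters, XYZ, xy) fits in a few lines. Two assumptions, stated loudly: the light source is an ideal Planckian radiator (Planck's law) standing in for the measured SSL80 spectrum, and the CIE 1931 2-degree color-matching functions are replaced by the piecewise-Gaussian fit of Wyman, Sloan and Shirley (2013) so that no data files are needed. For real work, the tabulated CIE data that colour-science ships is the better choice.

```python
import numpy as np

WAVELENGTHS = np.arange(380.0, 781.0, 5.0)  # nm, constant 5 nm step
C2 = 1.4388e7  # second radiation constant in nm*K


def planck_relative(wl_nm: np.ndarray, temperature_k: float) -> np.ndarray:
    """Relative spectral power of an ideal blackbody (Planck's law, unnormalized)."""
    return wl_nm ** -5.0 / np.expm1(C2 / (wl_nm * temperature_k))


def _lobe(wl, mu, sigma_lo, sigma_hi):
    """Piecewise Gaussian: one width below the peak, another above it."""
    sigma = np.where(wl < mu, sigma_lo, sigma_hi)
    return np.exp(-0.5 * ((wl - mu) / sigma) ** 2)


def cmfs_approx(wl: np.ndarray) -> np.ndarray:
    """Assumed analytic approximation of the CIE 1931 CMFs (Wyman et al. 2013)."""
    x = (1.056 * _lobe(wl, 599.8, 37.9, 31.0)
         + 0.362 * _lobe(wl, 442.0, 16.0, 26.7)
         - 0.065 * _lobe(wl, 501.1, 20.4, 26.2))
    y = 0.821 * _lobe(wl, 568.8, 46.9, 40.5) + 0.286 * _lobe(wl, 530.9, 16.3, 31.1)
    z = 1.217 * _lobe(wl, 437.0, 11.8, 36.0) + 0.681 * _lobe(wl, 459.0, 26.0, 13.8)
    return np.stack([x, y, z], axis=-1)  # shape (W, 3)


def spectrum_to_xy(spectrum: np.ndarray) -> np.ndarray:
    """Integrate a spectrum (W,) or a batch (N, W) against the CMFs; return xy."""
    # Plain sums suffice: the constant wavelength step cancels in x and y.
    xyz = spectrum @ cmfs_approx(WAVELENGTHS)
    return xyz[..., :2] / xyz.sum(axis=-1, keepdims=True)


if __name__ == "__main__":
    light = planck_relative(WAVELENGTHS, 3200.0)
    # A film transmittance sampled on the same grid would multiply in here;
    # 1.0 everywhere means "no dye", i.e. the white point of the source.
    transmittance = np.ones_like(WAVELENGTHS)
    print(spectrum_to_xy(light * transmittance))
```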

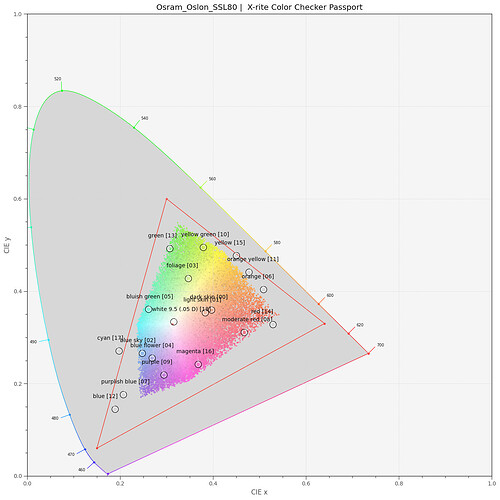

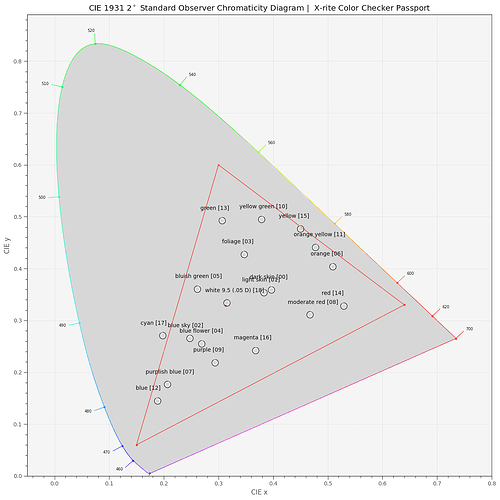

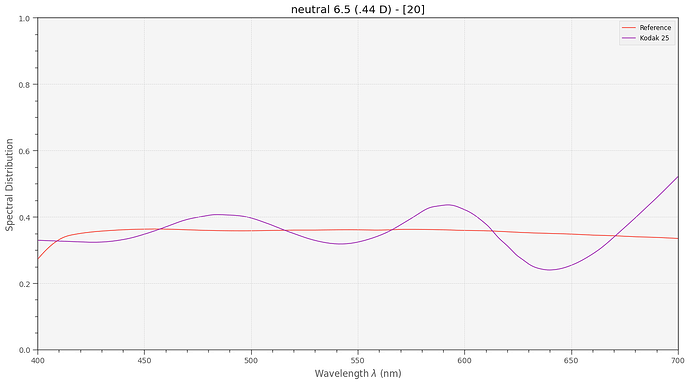

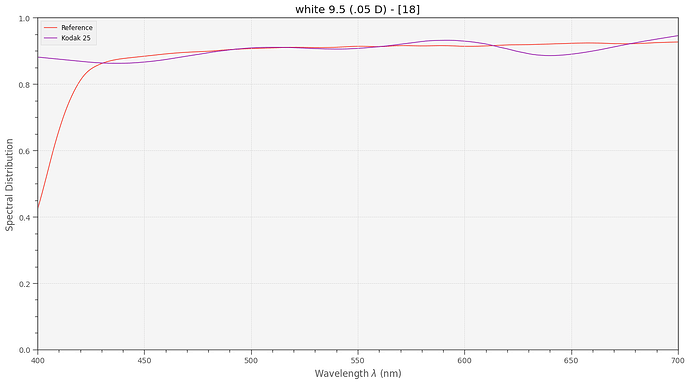
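Deciding whether a color-checker patch can be reproduced by the dyes boils down to testing whether its xy chromaticity falls inside the region covered by the simulated dye combinations. A simple, somewhat generous way is to take the convex hull of the sampled xy points and run a point-in-convex-polygon test; note that the true reachable region need not be convex. The sketch uses Andrew's monotone-chain hull, and the sample points in the usage lines are made up for illustration.

```python
import numpy as np


def _cross(o, a, b):
    """z-component of (a - o) x (b - o); positive for a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(map(tuple, np.asarray(points, dtype=float)))
    if len(pts) <= 2:
        return pts
    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # Drop the last point of each chain; it repeats the first point of the other.
    return lower[:-1] + upper[:-1]


def inside_convex(hull, point, eps=1e-12):
    """True if point lies inside or on the edge of a counter-clockwise convex polygon."""
    n = len(hull)
    return all(_cross(hull[i], hull[(i + 1) % n], point) >= -eps for i in range(n))


if __name__ == "__main__":
    # Made-up xy samples standing in for the simulated dye combinations.
    rng = np.random.default_rng(0)
    samples = rng.uniform([0.30, 0.30], [0.45, 0.45], size=(1000, 2))
    hull = convex_hull(samples)
    print(inside_convex(hull, (0.38, 0.38)))  # True: well inside the sampled square
    print(inside_convex(hull, (0.20, 0.60)))  # False: outside the sampled region
```

The same `inside_convex` test works against the sRGB triangle by passing `convex_hull([(0.64, 0.33), (0.30, 0.60), (0.15, 0.06)])` as the polygon.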

If this results is true, my actual project, namely creating a virtual Kodachrome 25 color checker, is not possible. Overlaying a standard color checker board,

I can count 7 patches of the color checker for which a representation with Kodachrome 25 dyes seems impossible. These are mainly blue/cyan and yellow/orange/red colors. Note that the correlated color temperature (cct) of the SSL80 is

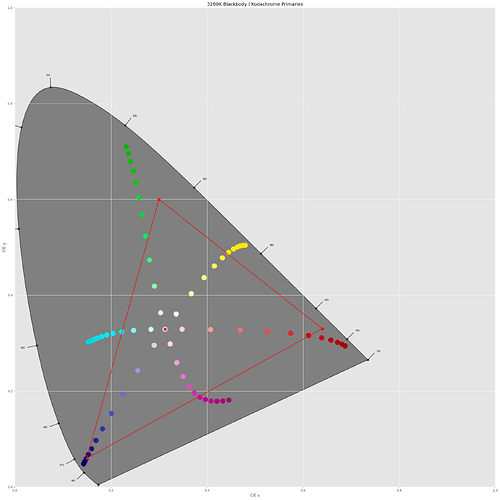

3212 K, which matches closely the illuminant of 3200 K cited in the Kodachrome 25 data sheet. The CRI of my (simulated) SSL80 is computed to be 97.286. A simluation with a real 3200 K blackbody radiator does not change the results.
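For quick sanity checks of a CCT value, McCamy's cubic approximation estimates the correlated color temperature directly from an xy chromaticity. This is only a sketch: it is not necessarily the method colour-science used for the 3212 K figure, and it is intended for chromaticities close to the Planckian locus.

```python
def cct_mccamy(x: float, y: float) -> float:
    """Approximate CCT in kelvin from CIE 1931 xy (McCamy's 1992 cubic)."""
    # Inverse slope of the line from the epicenter (0.3320, 0.1858) to (x, y).
    n = (x - 0.3320) / (0.1858 - y)
    return 449.0 * n**3 + 3525.0 * n**2 + 6823.3 * n + 5520.33


if __name__ == "__main__":
    print(round(cct_mccamy(0.3127, 0.3290)))    # D65 white point -> 6505
    print(round(cct_mccamy(0.44757, 0.40745)))  # illuminant A -> 2857
```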

EDIT: I think I interpreted the original Kodachrome 25 data incorrectly. First, the SDs given are not normalized, which I overlooked. Also, I need to take into account that these are densities, which can exceed 1.0 and are therefore not confined to the interval [0, 1]. Lastly, the mention of an “illuminant of 3200 K” in the data needs further investigation: normally, dye SDs do not depend on the illumination source. Please refer to later posts in this thread for further aspects.
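To illustrate the density point: optical density D is conventionally defined through transmittance T as D = -log10(T), so T = 10^(-D). For small d, 10^(-d) ≈ 1 - 2.303 d, so the linear 1.0 - d rule underestimates the absorption even there, and it goes negative once d exceeds 1.0, while 10^(-D) stays positive for any density. With this definition, the densities of stacked layers add and their transmittances multiply. The sketch below only compares the two rules; it is not a corrected version of the analysis in this post.

```python
import numpy as np


def transmittance_from_density(density):
    """Transmittance from optical density, using D = -log10(T)."""
    return 10.0 ** (-np.asarray(density, dtype=float))


def linear_rule(density):
    """The 1.0 - d approximation, for comparison only."""
    return 1.0 - np.asarray(density, dtype=float)


if __name__ == "__main__":
    d = np.array([0.0, 0.1, 0.5, 1.0, 2.0])
    print(transmittance_from_density(d))  # approx. 1.0, 0.794, 0.316, 0.1, 0.01
    print(linear_rule(d))                 # 1.0, 0.9, 0.5, 0.0, -1.0
    # Stacked layers: densities add, so transmittances multiply.
    d_y, d_m, d_c = 0.3, 0.8, 1.2
    combined = transmittance_from_density(d_y + d_m + d_c)
    product = (transmittance_from_density(d_y)
               * transmittance_from_density(d_m)
               * transmittance_from_density(d_c))
    print(np.isclose(combined, product))  # True
```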