… slowly approaching a “solution” to the issues discussed in this thread, in a way. In the following, I want to present and discuss a few images. I hope my musings might be helpful to others…

Does it matter?

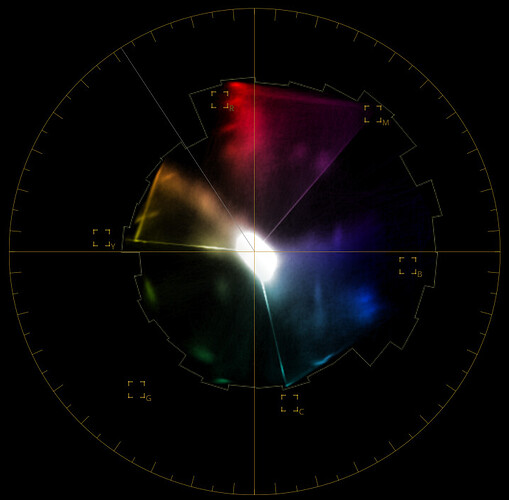

The two issues discussed here and elsewhere are out-of-gamut colors and stripes of noise in very dark areas. I think that, in general, these issues are not important. To illustrate my point, have a look at this image:

In the top-left, the raw .dng file was processed in a standard-conforming way into a Rec.709/sRGB image. It’s a daylight capture of a carefully arranged desktop scene, and in my opinion, it looks just fine. There’s only one obvious burned-out highlight, on the beak of the bird; otherwise, the colors of the color checker look fine (and they are, I measured them).

In reality, this image has the same issues we discussed at length: noisy stripes and out-of-gamut colors. They are marked, channel by channel, in the three other versions of the image. Everything below a certain threshold (<1/2046) is marked yellow; everything close to the maximum intensity (>1-1/2024) is marked red.
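The marking described above can be sketched in a few lines. This is a minimal sketch, not the code used to produce these images: it assumes the processed image is available as a list of rows of (r, g, b) tuples with linear values normalized to [0.0, 1.0], and the helper name `mark_channel`, the gray rendering of unmarked pixels and the color constants are hypothetical. The thresholds are the ones quoted above.

```python
# Hypothetical helper that marks one channel the way described above.
# Assumption: pixels are (r, g, b) tuples with linear values in [0.0, 1.0].
LOW = 1 / 2046        # lower threshold quoted in the post
HIGH = 1 - 1 / 2024   # upper threshold quoted in the post

YELLOW = (1.0, 1.0, 0.0)
RED = (1.0, 0.0, 0.0)


def mark_channel(image, channel):
    """Return a copy of `image` in which pixels whose value in `channel`
    is below LOW are painted yellow and pixels above HIGH are painted red.
    All other pixels show that channel's value as a gray level."""
    marked = []
    for row in image:
        out_row = []
        for pixel in row:
            value = pixel[channel]
            if value < LOW:
                out_row.append(YELLOW)
            elif value > HIGH:
                out_row.append(RED)
            else:
                out_row.append((value, value, value))
        marked.append(out_row)
    return marked
```

For the three channel panels, one would call `mark_channel(image, 0)`, `mark_channel(image, 1)` and `mark_channel(image, 2)` for red, green and blue respectively.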

The first thing to notice is that color checker patch no. 17 (cyan) is below the lower threshold and completely marked yellow. In fact, it has to be, because the color of this patch is outside the Rec.709/sRGB color gamut. Searching for more yellow areas, we easily discover our stripes of noise in all channels. Remember, this image was taken in pure daylight, so there are no issues with unstable illumination.
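Why an out-of-gamut color necessarily ends up below the lower threshold can be illustrated with the standard CIE XYZ to linear sRGB matrix (D65 white point, IEC 61966-2-1). A color outside the gamut produces at least one negative linear component, and a standard conversion clips that component to 0.0. This is a minimal sketch; the XYZ value used below is an illustrative, cyan-like color and not a measurement of patch no. 17.

```python
# XYZ (D65) -> linear sRGB/Rec.709 primaries, as given in IEC 61966-2-1.
XYZ_TO_SRGB = (
    (3.2406, -1.5372, -0.4986),
    (-0.9689, 1.8758, 0.0415),
    (0.0557, -0.2040, 1.0570),
)


def xyz_to_linear_srgb(xyz):
    """Convert an XYZ triple to linear sRGB (no gamma curve, no clipping)."""
    return tuple(sum(m * c for m, c in zip(row, xyz)) for row in XYZ_TO_SRGB)


def in_gamut(rgb, eps=1e-3):
    """True if every linear component lies in [0, 1], with a small tolerance
    for the rounding of the matrix coefficients."""
    return all(-eps <= c <= 1 + eps for c in rgb)


def clip(rgb):
    """What a standard conversion does with out-of-gamut components."""
    return tuple(min(max(c, 0.0), 1.0) for c in rgb)


# Illustrative saturated, cyan-like color (made-up XYZ values):
cyan_like = xyz_to_linear_srgb((0.15, 0.25, 0.40))
print(cyan_like)        # the red component is negative: out of gamut
print(clip(cyan_like))  # red is clipped to 0.0, i.e. below any lower threshold
```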

Looking at the red areas, we discover that besides the beak of the bird, other areas are burned out as well, different ones in different channels. Again, these areas indicate out-of-gamut colors. But: did you notice any of this when looking at the original picture?

That’s my point in this section: in most situations, out-of-gamut colors will go unnoticed, as will the stripes of noise.

Exposure

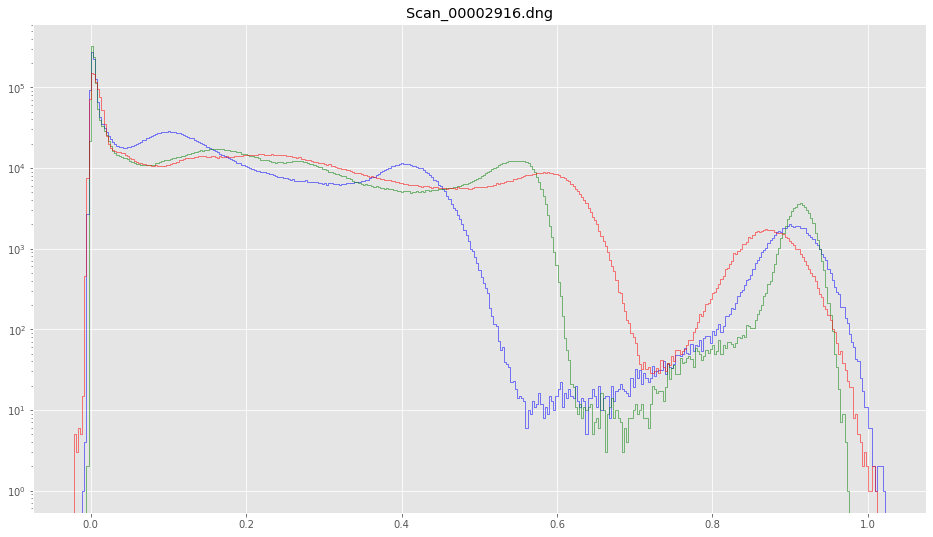

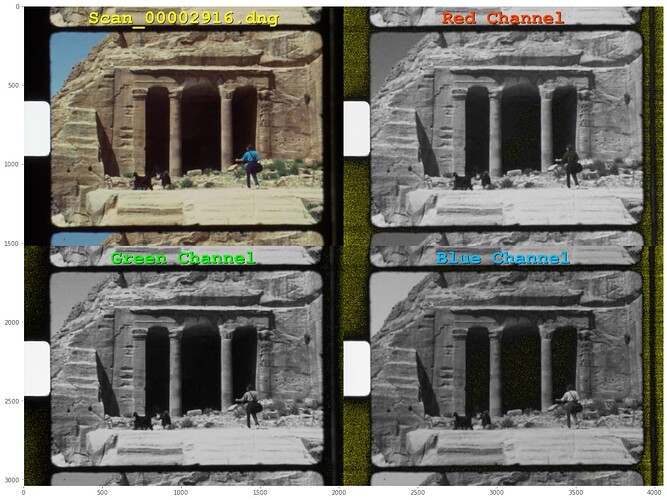

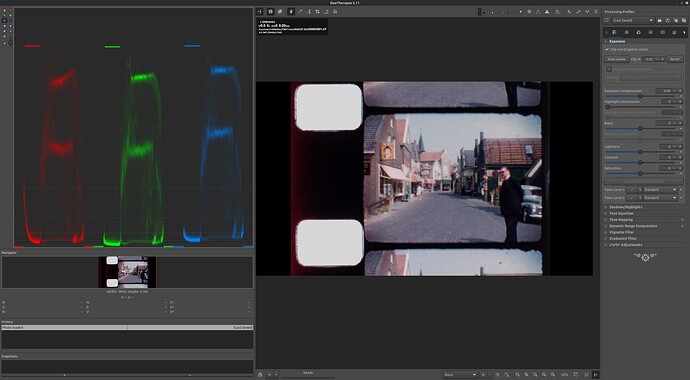

Let’s look at a real film scan, Scan_00002916.dng. The histogram of this scan shows that there should be no out-of-gamut colors: everything stays within the interval of valid values, namely [0.0, 1.0]. Looking at the green trace, we see that the pixel values of the actual frame range from slightly above 0.0 to approximately 0.6. The values above that actually correspond to the sprocket hole, with the maximum at around 0.95. Ok, and here’s the corresponding image:

The stripes of noise are there, but mostly confined to the area outside the actual frame. This is almost good, except for the fact that with this exposure setting we throw away almost half of the (linear) dynamic range of our sensor.
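The cost of this exposure setting can be put into numbers. A minimal sketch, assuming a purely linear sensor response normalized to [0.0, 1.0] with no black-level offset; the helper name `unused_headroom` is hypothetical, and 0.6 is the approximate frame maximum read from the green trace above.

```python
import math


def unused_headroom(frame_max):
    """Given the maximum normalized (linear) value reached inside the frame,
    return the unused fraction of the sensor range and the same gap in stops."""
    fraction_unused = 1.0 - frame_max
    stops_unused = math.log2(1.0 / frame_max)
    return fraction_unused, stops_unused


fraction, stops = unused_headroom(0.6)  # green trace of Scan_00002916.dng
print(f"{fraction:.0%} of the linear range unused, i.e. {stops:.2f} stops")
```

With a frame maximum of 0.6, about 40% of the linear range stays unused, which corresponds to roughly 0.74 stops. Under the linear assumption, raising the exposure by that amount would give every tone, including the noisy shadows, about 1/0.6 ≈ 1.67 times as many raw code values.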

I usually use a slightly higher exposure value, one that does not try to digitize the sprocket hole values. Here’s the corresponding histogram of such an exposure:

If you compare this with the previous histogram, you will notice that the pixels of the frame image reach much further towards the limit of 1.0; in our case, the maximum in the red channel comes close to 0.9. Here’s the corresponding image:

The same situation as above, except that the sprocket hole is now marked as overexposed.
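For a linear sensor, raising the exposure simply scales all raw values by the same factor, so the required change and its side effect on the sprocket hole can be estimated directly. A minimal sketch under that assumption (no black-level offset, no clipping before scaling); the helper names are hypothetical. Note that the two values used here come from different channels of the two histograms (green around 0.6, red close to 0.9), so the numbers are only illustrative.

```python
import math


def exposure_factor(current_max, target_max):
    """Linear factor (and the same change in stops) needed to move the
    brightest frame value from current_max to target_max, assuming a
    purely linear sensor response."""
    factor = target_max / current_max
    return factor, math.log2(factor)


def clips_after(value, factor):
    """True if a normalized raw value would exceed full scale after scaling."""
    return value * factor > 1.0


factor, stops = exposure_factor(0.6, 0.9)
print(f"raise exposure by x{factor:.2f} ({stops:.2f} stops)")
print("sprocket hole clips:", clips_after(0.95, factor))
```

Under these assumptions, going from 0.6 to 0.9 needs a factor of 1.5 (about 0.58 stops), and the sprocket hole at 0.95 would land at about 1.43, far beyond full scale, which is consistent with it being marked overexposed.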

This is actually the exposure setting I mostly work with. It carries a slight risk of burned-out areas in the actual film frame, namely when out-of-gamut colors are encountered again, but as I mentioned above, no one will notice.

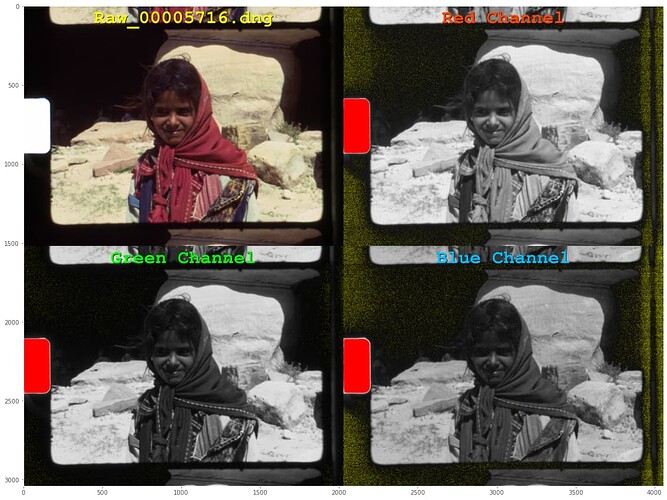

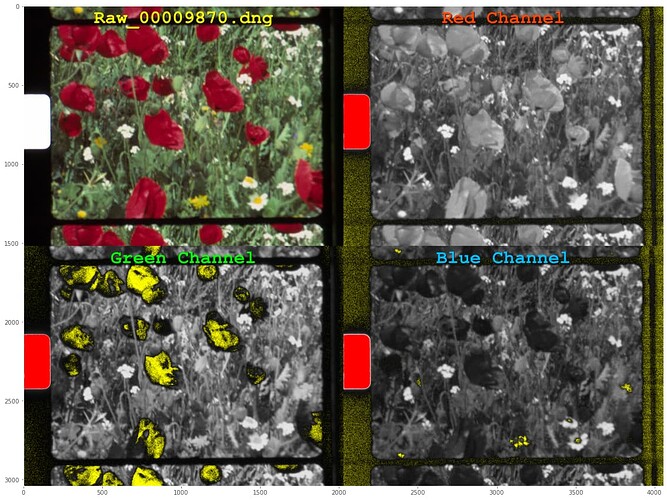

Here’s an example:

The poppy flowers lead to out-of-gamut colors (marked yellow) in the green channel, and a few intensely yellow daisies are again marked yellow, this time in the blue channel.

Here’s an example where this effect happens in the red channel:

I know that this is happening, but I do not care. For me, the improved dynamic range is more important than the slightly reduced fidelity in these areas.

Forum data

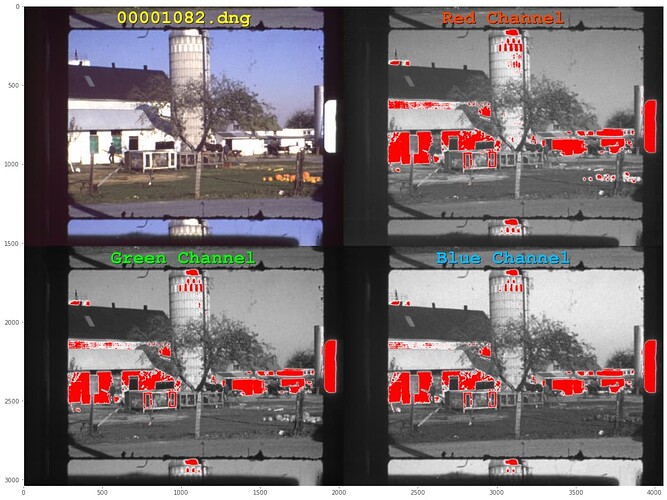

While we’re at it, I thought it would be interesting to have a look at some of the data posted here on the forum. Here’s 00001082.dng (@verlakasalt):

No noise stripes, as the frame border is rather bright, but heavily burned-out areas in the brighter parts of the capture.

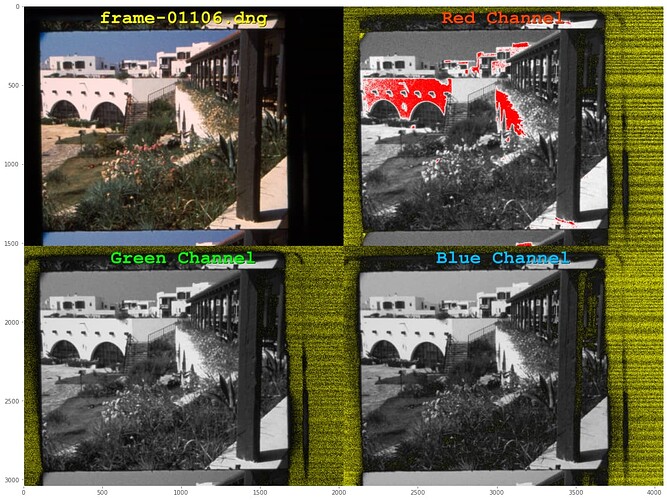

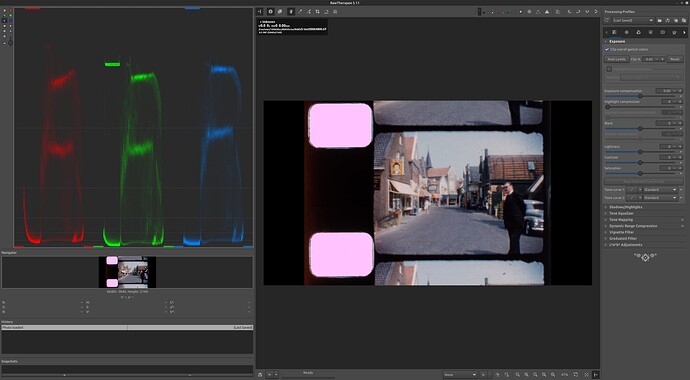

Now, frame-01106.dng (@Manuel_Angel):

There’s no sprocket hole, but a few areas of the house wall are clipped. Noisy stripes are present, but again, neither issue is noticeable in the real image (top-left).

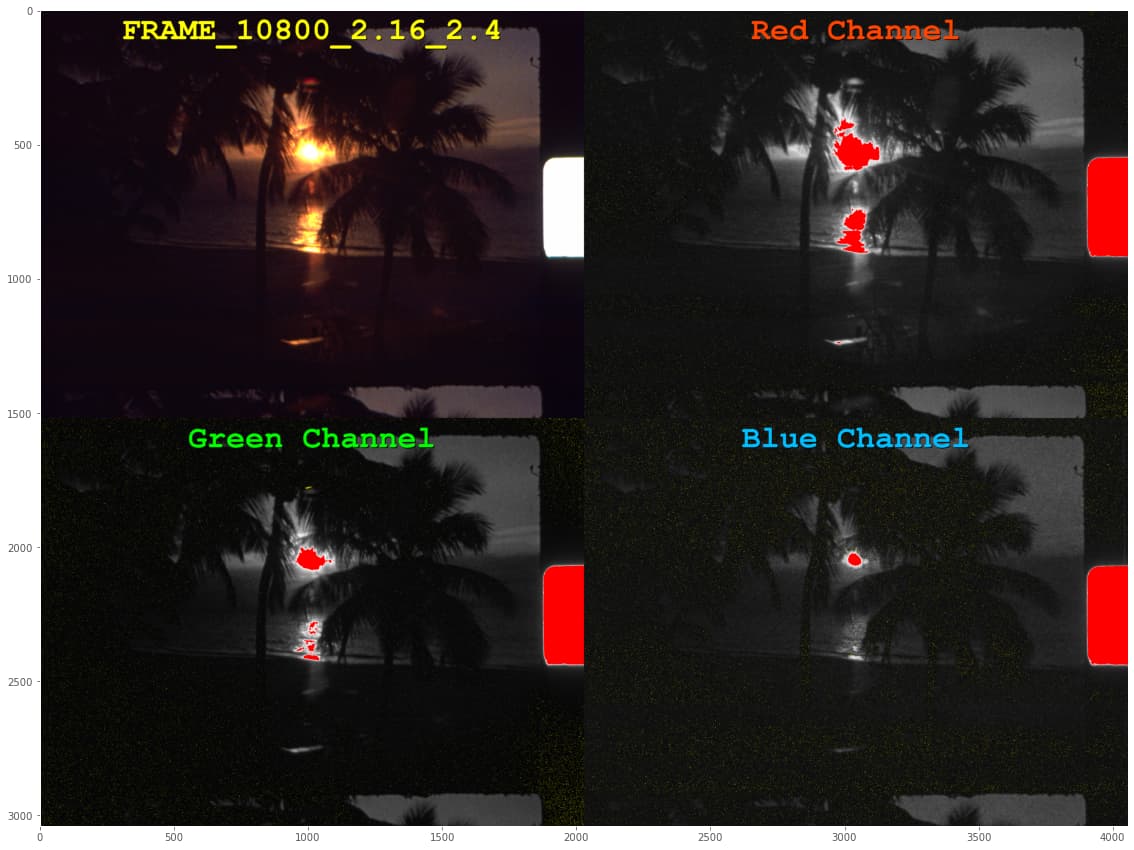

Continuing, let’s look at FRAME_10800_2.16_2.46_R3920.0_G4128.0_B3952.0.dng (@verlakasalt):

This is the type of image that challenges the dynamic range of our sensor quite a bit. There’s highlight clipping in all color channels, but again, it is not noticeable in the real image. Stripes of noise are present as well, but very faint.

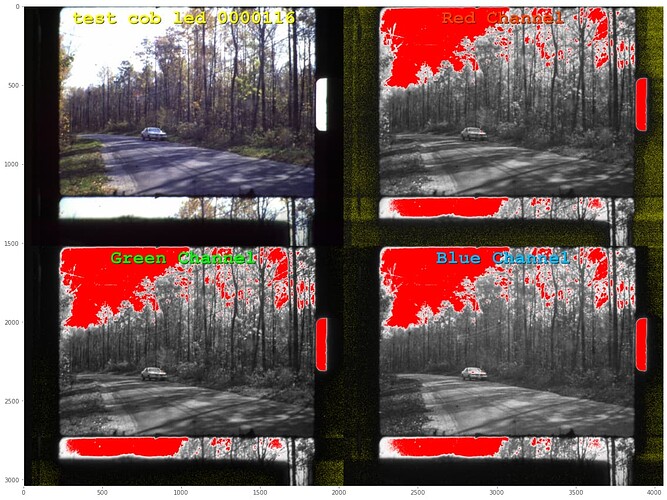

Next, test cob led 00001160.dng (@verlakasalt):

Clearly, an overexposed film scan. Lots of noise, especially in the red channel. The red channel operated at a gain of 3.12, more than twice the gain of the blue channel.
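Assuming these are white-balance gains applied as plain multipliers to the raw channel values, with green as the reference at gain 1.0 (as is usual for the red and blue colour gains in Raspberry Pi camera software), a gain g means that a neutral subject delivers only 1/g of the green channel's raw signal in that channel, and the noise of that channel is amplified by the same factor. A minimal sketch of the conversion into stops; the function name is hypothetical.

```python
import math


def stops_below_green(gain):
    """Stops by which a channel with white-balance gain `gain` sits below
    the green channel (gain 1.0) for a neutral subject, assuming the gain
    is a plain multiplier on the raw values."""
    return math.log2(gain)


red_gain = 3.12  # red gain of test cob led 00001160.dng
print(f"red signal: {1 / red_gain:.2f} of green, "
      f"{stops_below_green(red_gain):.2f} stops below")
```

For the red gain of 3.12, this gives about 0.32 of the green signal, or roughly 1.64 stops less, which fits the strong noise in the red channel.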

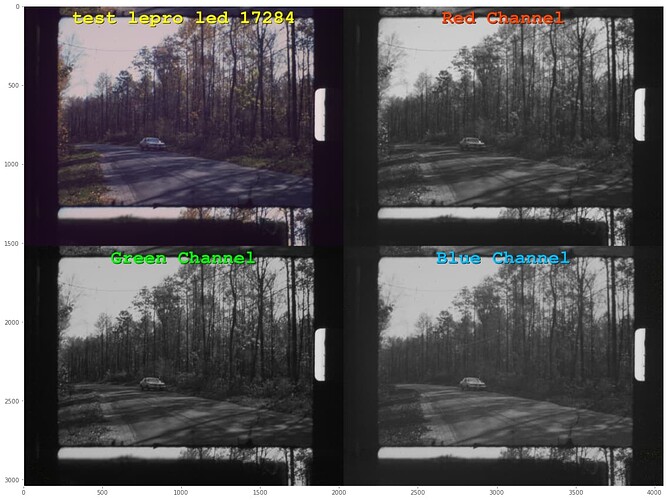

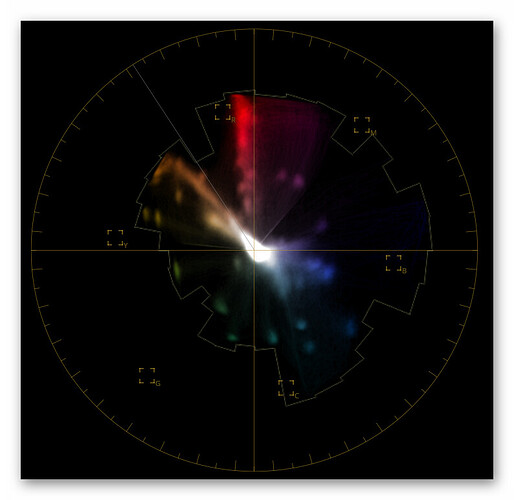

A similar frame was captured in test lepro led 1728464695.2264783.dng (@verlakasalt) with another light source:

Here, the red gain was 2.02 and the blue gain 2.8. No noise stripes at all, probably because, again, the really dark areas of the film are not that dark, for whatever reason. Note that the white balance was quite off in this capture: there is a visible magenta cast all over the frame.
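A magenta cast means that, on average, green is too weak relative to red and blue. A quick sanity check is the gray-world assumption: averaged over a typical frame, the three white-balanced channels should come out roughly equal. This is a swapped-in heuristic, not something used in this thread; the function names, the pixel layout (rows of (r, g, b) tuples) and the 5% tolerance are assumptions.

```python
def channel_means(image):
    """Mean of each channel over a list of rows of (r, g, b) tuples."""
    pixels = [p for row in image for p in row]
    n = len(pixels)
    return tuple(sum(p[c] for p in pixels) / n for c in range(3))


def looks_magenta(image, tolerance=0.05):
    """Gray-world check: green noticeably below both red and blue."""
    r, g, b = channel_means(image)
    return g < r * (1 - tolerance) and g < b * (1 - tolerance)


def gray_world_green_factor(image):
    """Factor that would bring the green mean to the average of red and blue."""
    r, g, b = channel_means(image)
    return ((r + b) / 2) / g
```

If `looks_magenta` returns True, `gray_world_green_factor` gives a rough idea of how much green is missing. Gray world fails on frames dominated by a single color, so this is only a sanity check, not a replacement for a proper white balance.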

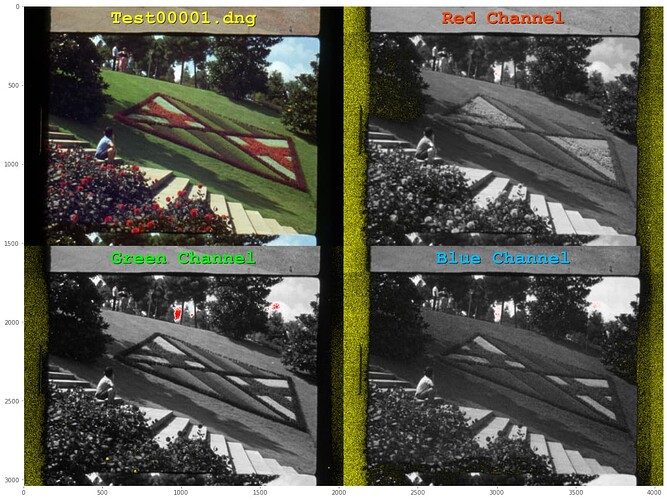

The following image, Test00001.dng (@Manuel_Angel), shows our usual setup:

That is, noisy stripes outside the area of interest (the film frame) and a barely noticeable burn-out in the green channel (in the sky).

What seems to be the same image comes from Test00002.dng:

The real image (top-left) looks almost identical to the Test00001.dng image, but note that many more areas come close to the maximum image brightness (all areas marked in red).

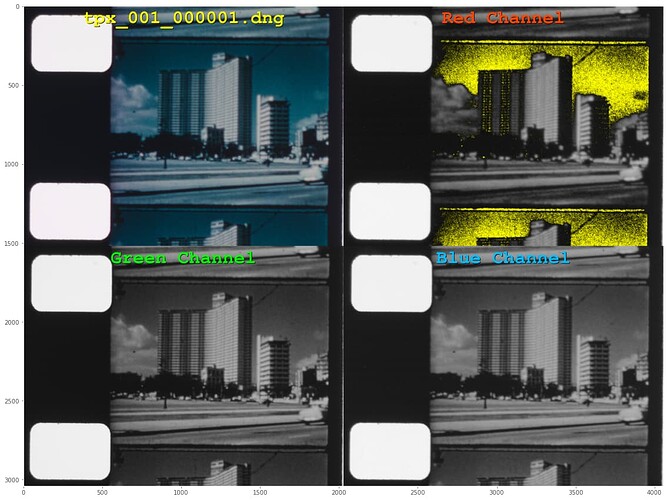

Continuing, here’s tpx_001_000001.dng (@PM490):

There is quite a lot of noise in the red channel, but notably no noisy stripes. This is probably caused by a dynamic range in the red channel that exceeds the 12 bits of dynamic range the HQ sensor is capable of. Note that the sprocket hole is exposed correctly, so the real image data occupies only a fraction of the available dynamic range, as discussed above.
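Whether a piece of film can exceed the sensor's range can be estimated from its density range: a density D transmits 10^-D of the light, so every log10(2) ≈ 0.301 density units cost one stop. A minimal sketch; the function names and the example densities are hypothetical, and treating 12 bits as 12 stops is an idealized upper bound, since the noise floor makes the usable range of the sensor smaller.

```python
import math

SENSOR_BITS = 12  # raw bit depth of the HQ sensor, as stated in the post


def density_range_to_stops(d_min, d_max):
    """Convert a film density range into stops.
    Density D means transmittance 10**-D, so each log10(2) density units
    halve the transmitted light, i.e. cost one stop."""
    return (d_max - d_min) / math.log10(2)


def exceeds_sensor(d_min, d_max, bits=SENSOR_BITS):
    """Idealized check: a linear signal of `bits` bits spans at most
    `bits` stops; the real usable range is smaller because of noise."""
    return density_range_to_stops(d_min, d_max) > bits
```

For instance, a hypothetical density range from 0.2 to 3.9 corresponds to about 12.3 stops, slightly more than 12 bits can hold even in the ideal case.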

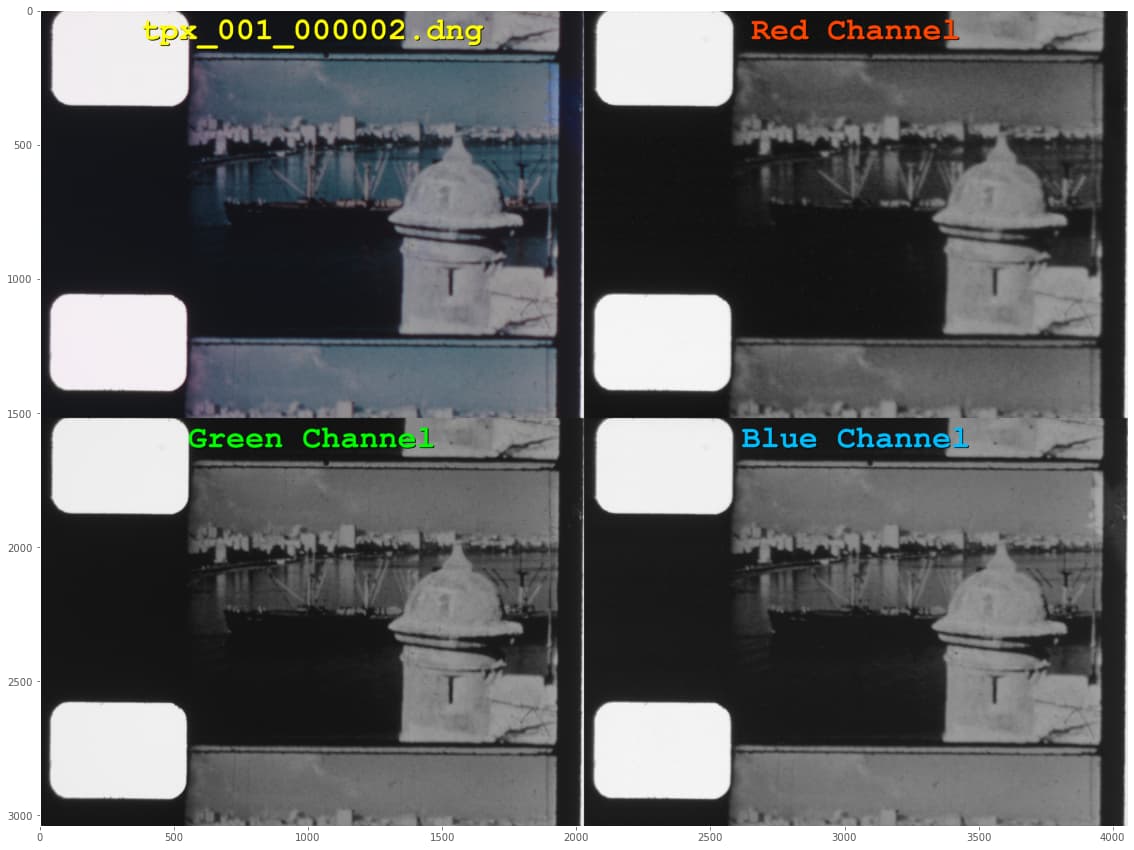

In comparison, with other image content and presumably the same settings, tpx_001_000002.dng (@PM490) shows no noise at all:

Nor any other issues. Still, since the available dynamic range is not fully used, grading this image might produce banding if extreme adjustments were needed.
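The banding risk comes from the number of distinct raw code values the image content actually uses. When the frame occupies only the lower part of the linear range, fewer codes are available, and stretching the tones in grading spreads those codes apart instead of creating new ones. A minimal sketch, assuming idealized 12-bit linear codes; the helper names are hypothetical, and real sensor noise, which dithers the codes and partly hides banding, is ignored.

```python
def levels_available(fraction_used, bits=12):
    """Number of distinct raw code values available to the image content
    when it occupies only the lowest `fraction_used` of the linear range."""
    max_code = 2**bits - 1
    return int(fraction_used * max_code) + 1


def stretch(codes, fraction_used, bits=12):
    """Linearly stretch raw codes so that the used range fills the full range.
    The number of distinct values stays the same; only their spacing grows."""
    max_code = 2**bits - 1
    scale = 1.0 / fraction_used
    return [min(round(c * scale), max_code) for c in codes]
```

For example, content confined to the lowest quarter of the range has 1024 codes available; after stretching it to full range, the same 1024 values are spaced four codes apart.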

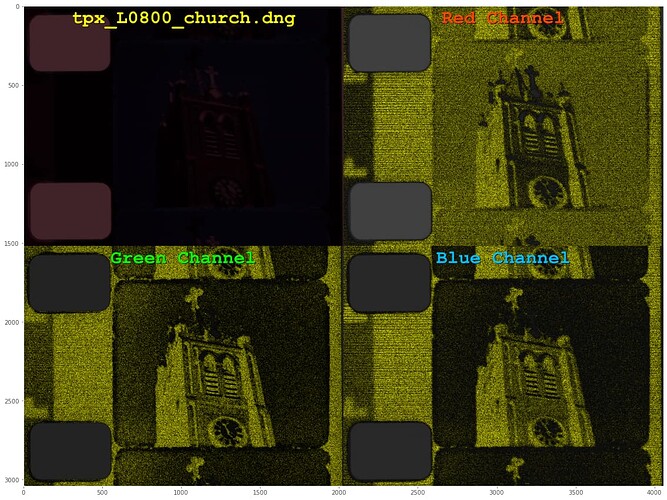

In closing, here are some test images analyzed the same way as all the examples above. First, tpx_L0800_church.dng (@PM490):

This image was severely underexposed on purpose. Noisy stripes are visible in all channels. Interestingly, the red channel seems to be a sort of negative of the blue channel.
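Whether the red stripes really mirror the blue ones can be checked numerically: average each channel along the stripe direction to get a stripe profile, then compute the correlation between the two profiles. A value near -1 would confirm the "negative" impression; a value near +1 would indicate common stripes. A minimal sketch; it assumes the stripes run horizontally (along image rows; transpose the data otherwise) and that each channel is available as a 2-D list of linear values. The function names are hypothetical.

```python
import math


def row_profile(channel):
    """Mean of each row of a 2-D list (one channel), minus the overall mean.
    Horizontal stripes show up as structure in this profile."""
    means = [sum(row) / len(row) for row in channel]
    overall = sum(means) / len(means)
    return [m - overall for m in means]


def correlation(a, b):
    """Pearson correlation coefficient of two equally long sequences."""
    n = len(a)
    mean_a, mean_b = sum(a) / n, sum(b) / n
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    return cov / math.sqrt(var_a * var_b)
```

Usage would be `correlation(row_profile(red), row_profile(blue))`, ideally restricted to the dark border region where the stripes are visible, so that image content does not dominate the profiles.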

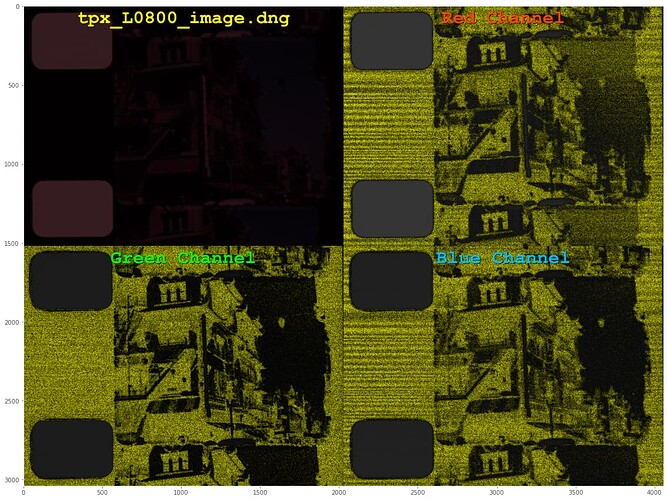

The same effect can be noticed with tpx_L0800_image.dng (@PM490):

But also note that the characteristics of the noisy stripes have changed compared with the previous image. I have no idea what might have caused this.
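One way to make such a change more concrete is to compare the typical spacing of the stripes in both images. A minimal sketch, assuming a stripe profile has already been obtained by averaging each image row of one channel (i.e. horizontal stripes); it picks the lag with the strongest autocorrelation, which is only one of several possible measures (a Fourier spectrum of the profile would be another). The function names are hypothetical.

```python
def autocorrelation(profile, lag):
    """Normalized autocorrelation of a row profile at a given lag (in rows)."""
    n = len(profile)
    mean = sum(profile) / n
    centered = [x - mean for x in profile]
    energy = sum(x * x for x in centered)
    if energy == 0:
        return 0.0  # a perfectly flat profile has no stripes at all
    return sum(centered[i] * centered[i + lag] for i in range(n - lag)) / energy


def dominant_spacing(profile, max_lag=None):
    """Lag with the strongest autocorrelation, ignoring lag 0.
    A rough estimate of the typical distance between stripes, in rows."""
    if max_lag is None:
        max_lag = len(profile) // 2
    return max(range(1, max_lag + 1), key=lambda lag: autocorrelation(profile, lag))
```

Running `dominant_spacing` on the profiles of tpx_L0800_church.dng and tpx_L0800_image.dng would show whether the stripes changed their spacing or only their amplitude.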

Ok, that’s it for now. Hope you found this little journey somewhat interesting.